I've migrated six production apps from Lambda to Workers over the past 18 months. Each time the trigger was the same: frustration with cold starts killing user experience, with paying for idle time, and with configuring regions by hand.

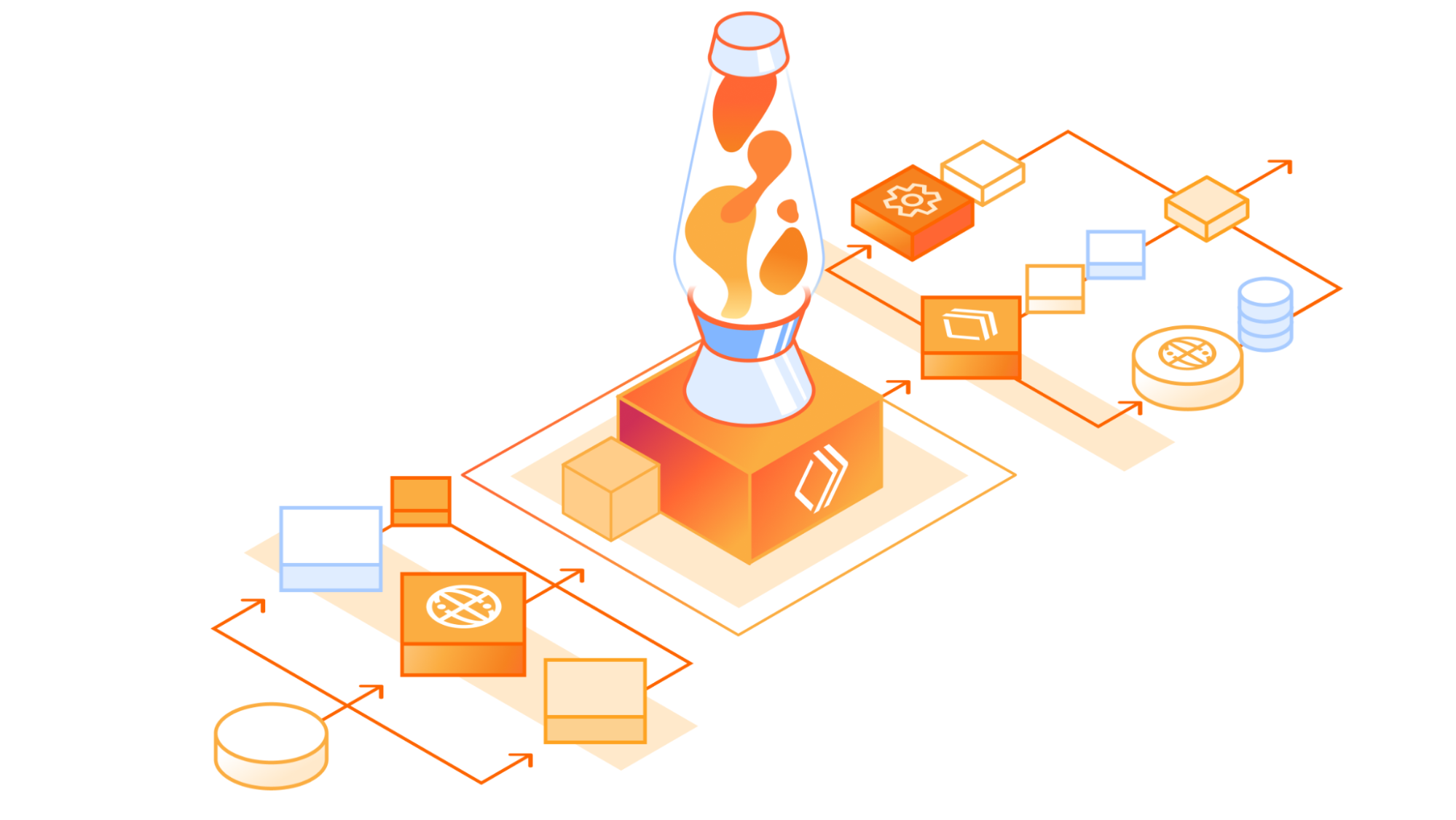

Switching from containers to V8 isolates all but eliminates cold starts. Lambda has to boot a new execution environment whenever no warm one is free to take a request, which often adds hundreds of milliseconds; Workers run your code in V8 isolates that typically start in under 5ms.

What Actually Breaks During Migration

Node.js Compatibility Issues Hit Everyone:

- Filesystem APIs don't exist (`fs.readFile`, `path.resolve`)

- Some crypto operations use Node.js-specific implementations

- Native modules compiled for Node.js won't work

- `process.env` works differently: by default, environment variables and secrets arrive as bindings on the `env` argument passed to your handler
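The crypto and environment-variable issues often show up together in webhook handlers that call Node's `crypto.createHmac` with a secret from `process.env`. A minimal sketch of a Workers-style equivalent, assuming a hypothetical `WEBHOOK_SECRET` secret and a hypothetical `x-signature` header carrying a hex HMAC-SHA256 of the raw body. It uses the standard Web Crypto API (`crypto.subtle`), which Workers provide without a compatibility flag, and reads the secret from the `env` argument.

```typescript
// Hypothetical binding: set with `wrangler secret put WEBHOOK_SECRET`.
interface Env {
  WEBHOOK_SECRET: string;
}

// Web Crypto replacement for Node's
// crypto.createHmac("sha256", secret).update(message).digest("hex")
async function hmacSha256Hex(secret: string, message: string): Promise<string> {
  const enc = new TextEncoder();
  const key = await crypto.subtle.importKey(
    "raw",
    enc.encode(secret),
    { name: "HMAC", hash: "SHA-256" },
    false,
    ["sign"],
  );
  const sig = await crypto.subtle.sign("HMAC", key, enc.encode(message));
  return Array.from(new Uint8Array(sig), (b) => b.toString(16).padStart(2, "0")).join("");
}

// Compare without returning early on the first mismatched character.
function constantTimeEqual(a: string, b: string): boolean {
  if (a.length !== b.length) return false;
  let diff = 0;
  for (let i = 0; i < a.length; i++) diff |= a.charCodeAt(i) ^ b.charCodeAt(i);
  return diff === 0;
}

// The secret comes from the `env` argument, not from process.env.
async function handleWebhook(request: Request, env: Env): Promise<Response> {
  const body = await request.text();
  const expected = await hmacSha256Hex(env.WEBHOOK_SECRET, body);
  const received = request.headers.get("x-signature") ?? ""; // hypothetical header name
  if (!constantTimeEqual(received, expected)) {
    return new Response("invalid signature", { status: 401 });
  }
  return new Response("ok");
}

export default { fetch: handleWebhook };
```

If you don't need the hex string itself, importing the key with the `"verify"` usage and calling `crypto.subtle.verify("HMAC", key, signatureBytes, data)` lets the runtime do the comparison; the hex version is shown because it maps one-to-one onto the Node code it replaces.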

I spent three days rewriting our image processing service because it relied heavily on filesystem operations. The fix was moving file operations to Cloudflare R2 and doing image transforms through Workers Image Resizing.
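The heart of that rewrite is swapping a path on local disk for a key in a bucket. A minimal sketch, assuming an R2 binding named `IMAGES` and an `originals/` key prefix (both hypothetical). `BucketLike` is a hand-written slice of the R2 binding covering only `get()`, which resolves to `null` for a missing key; a real project would use the `R2Bucket` type from `@cloudflare/workers-types`.

```typescript
// Hand-written slice of the R2 binding API used here; swap for R2Bucket in a real project.
interface ObjectLike {
  arrayBuffer(): Promise<ArrayBuffer>;
}
interface BucketLike {
  get(key: string): Promise<ObjectLike | null>;
}
interface Env {
  IMAGES: BucketLike; // hypothetical binding name
}

// Before (Lambda): await fs.readFile(path.resolve(__dirname, "originals", name))
// After (Workers): the same bytes, fetched from R2 by key.
async function loadOriginal(env: Env, name: string): Promise<Response> {
  const object = await env.IMAGES.get(`originals/${name}`);
  if (object === null) {
    return new Response("not found", { status: 404 });
  }
  return new Response(await object.arrayBuffer(), {
    headers: { "content-type": "application/octet-stream" },
  });
}
```

With the real binding you can also pass `object.body` (a `ReadableStream`) straight to `new Response()` so the file is never fully buffered; the sketch sticks to `arrayBuffer()` to keep the interface small.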

Lambda-Specific Code That Needs Rewrites:

- `context.getRemainingTimeInMillis()` doesn't exist

- Lambda event structures are different from Workers Request objects

- API Gateway integration assumes Lambda's response format

- CloudWatch logging calls won't work
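One way to get a Lambda codebase serving traffic before every handler is rewritten is a thin adapter that builds a Lambda-style event from the Workers `Request` and turns the handler's result back into a `Response`. A minimal sketch, assuming handlers written for API Gateway's REST proxy integration (payload format 1.0). The interfaces cover only a subset of those event and result fields, no Lambda `context` object is passed (so nothing like `getRemainingTimeInMillis()` exists), binary bodies are not handled, and `legacyHandler` is a hypothetical stand-in for existing code.

```typescript
// Subset of the API Gateway REST proxy (payload format 1.0) event and result shapes.
interface ProxyEvent {
  httpMethod: string;
  path: string;
  headers: Record<string, string>;
  queryStringParameters: Record<string, string> | null;
  body: string | null;
  isBase64Encoded: boolean;
}
interface ProxyResult {
  statusCode: number;
  headers?: Record<string, string>;
  body?: string;
}
type LambdaStyleHandler = (event: ProxyEvent) => Promise<ProxyResult>;

// Statuses that must not carry a body; the Response constructor throws if given one.
const NULL_BODY_STATUSES = [204, 205, 304];

function toFetchHandler(handler: LambdaStyleHandler) {
  return async (request: Request): Promise<Response> => {
    const url = new URL(request.url);
    const headers: Record<string, string> = {};
    request.headers.forEach((value, key) => {
      headers[key] = value; // header names arrive lowercased from the Headers object
    });
    const query: Record<string, string> = {};
    url.searchParams.forEach((value, key) => {
      query[key] = value; // for repeated keys, the last value wins
    });
    const hasBody = request.method !== "GET" && request.method !== "HEAD";
    const event: ProxyEvent = {
      httpMethod: request.method,
      path: url.pathname,
      headers,
      queryStringParameters: Object.keys(query).length > 0 ? query : null,
      body: hasBody ? await request.text() : null,
      isBase64Encoded: false, // text bodies only in this sketch
    };
    const result = await handler(event);
    const body = NULL_BODY_STATUSES.includes(result.statusCode) ? null : result.body ?? null;
    return new Response(body, { status: result.statusCode, headers: result.headers });
  };
}

// Hypothetical existing Lambda handler, reused unchanged.
const legacyHandler: LambdaStyleHandler = async (event) => ({
  statusCode: 200,
  headers: { "content-type": "application/json" },
  body: JSON.stringify({ path: event.path, name: event.queryStringParameters?.name ?? null }),
});

const worker = { fetch: toFetchHandler(legacyHandler) };
export default worker;
```

The adapter is a stopgap: it keeps API Gateway's response format working, but each handler still benefits from being rewritten against `Request`/`Response` directly once traffic has moved.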

The 2025 Migration Advantage

The Containers Beta Changes Everything:

Since June 2025, you can run Docker containers on Workers for code that can't fit the V8 isolate model. This eliminates the biggest migration blocker: you can lift-and-shift Lambda functions that do heavy filesystem operations or use native libraries.

Workflows is Production Ready:

Workflows, Cloudflare's closest equivalent to AWS Step Functions, is no longer in beta. Its durable execution engine handles multi-step processes with automatic retries and state persistence.
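The core idea behind durable execution is that each named step's result is persisted, so when a failed run is retried, completed steps are replayed from storage instead of executed again. A toy, in-memory sketch of that idea, loosely modeled on the `step.do(name, callback)` call that Workflows exposes. `ToyStepRunner` is hypothetical and is not the Workflows API, which persists state durably, supports sleeps, and adds configurable retry delays and backoff.

```typescript
// Step results keyed by step name; a real engine stores this durably, not in memory.
type StepState = Map<string, unknown>;

class ToyStepRunner {
  private readonly state: StepState;
  private readonly maxAttempts: number;

  constructor(state: StepState, maxAttempts = 3) {
    this.state = state;
    this.maxAttempts = maxAttempts;
  }

  async do<T>(name: string, fn: () => Promise<T>): Promise<T> {
    // Replay: a step that already succeeded returns its stored result without running.
    if (this.state.has(name)) return this.state.get(name) as T;
    let lastError: unknown;
    for (let attempt = 1; attempt <= this.maxAttempts; attempt++) {
      try {
        const result = await fn();
        this.state.set(name, result); // persist before moving to the next step
        return result;
      } catch (err) {
        lastError = err; // a real engine would also wait (backoff) before retrying
      }
    }
    throw lastError;
  }
}
```

If a workflow charges a card and then sends an email, and the email step exhausts its retries, re-running the workflow with the same stored state skips the charge and retries only the email, which is exactly what keeps multi-step processes from double-charging.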

Production Monitoring Actually Works:

Workers Observability now includes real-time logs, distributed tracing, and error alerting, and it no longer sucks the way it did in early 2024.
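Most CloudWatch-specific logging code can collapse into `console.log`, which Workers Logs can capture. A minimal sketch of a structured logger that writes one JSON object per line, assuming you want per-request fields such as a request ID to be filterable in your log viewer; `makeLogger` and the field names are hypothetical. The output sink is injectable so the logger can be tested without intercepting the console.

```typescript
type Level = "debug" | "info" | "warn" | "error";
type Fields = Record<string, unknown>;

// Returns a logger that emits one JSON object per line, merging per-request
// context into every entry. level/message are written last so context can't overwrite them.
function makeLogger(context: Fields, sink: (line: string) => void = (line) => console.log(line)) {
  return (level: Level, message: string, fields: Fields = {}): void => {
    sink(JSON.stringify({ ...context, ...fields, level, message }));
  };
}

// Example wiring in a module Worker; cf-ray is a request header Cloudflare adds.
export default {
  async fetch(request: Request): Promise<Response> {
    const log = makeLogger({ requestId: request.headers.get("cf-ray") });
    log("info", "request received", { path: new URL(request.url).pathname });
    return new Response("ok");
  },
};
```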

Database Connections Stop Being Hell

Hyperdrive Fixed Connection Pooling:

Connection pooling to PostgreSQL actually works now. I've connected to Neon, Supabase, and PlanetScale without the connection limit nightmares that plague Lambda.

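Each concurrent Lambda execution environment holds its own database connection, and handlers that connect inside the handler open a new one on every invocation. With 1000 concurrent requests, that's on the order of 1000 connections, far beyond PostgreSQL's default `max_connections` of 100, so you hit connection limits immediately. Hyperdrive pools connections properly and maintains them across requests.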
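Pooling fixes this by capping the number of open connections and making extra callers wait for a free one, so 1000 concurrent requests share a handful of connections instead of opening 1000. A toy sketch of that mechanism; `ToyPool` and its fake connections are hypothetical and only model the idea. Hyperdrive runs its pool on Cloudflare's network, and from a Worker you point your Postgres driver at the `connectionString` exposed by the Hyperdrive binding.

```typescript
// Fake connection object; a real pool would hold a TCP/TLS session to the database.
interface FakeConnection {
  id: number;
}

// Never opens more than `maxSize` connections; extra callers queue (FIFO)
// until a connection is released.
class ToyPool {
  private readonly maxSize: number;
  private idle: FakeConnection[] = [];
  private waiters: Array<(conn: FakeConnection) => void> = [];
  private opened = 0;

  constructor(maxSize: number) {
    this.maxSize = maxSize;
  }

  get openedCount(): number {
    return this.opened;
  }

  private acquire(): Promise<FakeConnection> {
    const free = this.idle.pop();
    if (free !== undefined) return Promise.resolve(free);
    if (this.opened < this.maxSize) {
      this.opened += 1; // "open" a new connection while under the cap
      return Promise.resolve({ id: this.opened });
    }
    return new Promise((resolve) => this.waiters.push(resolve));
  }

  private release(conn: FakeConnection): void {
    const next = this.waiters.shift();
    if (next !== undefined) next(conn); // hand off directly to the oldest waiter
    else this.idle.push(conn);
  }

  async withConnection<T>(fn: (conn: FakeConnection) => Promise<T>): Promise<T> {
    const conn = await this.acquire();
    try {
      return await fn(conn);
    } finally {
      this.release(conn);
    }
  }
}
```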

D1 for Edge-Native Storage:

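D1, Cloudflare's SQLite-based database, now supports global read replication (in beta as of 2025): reads can be served from replicas near your users instead of round-tripping to a single region like us-east-1, while writes still go to the primary. For session storage and configuration data, it's faster than DynamoDB and costs less.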
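Reading a session from D1 goes through the binding's prepared-statement API: `prepare()` with `?` placeholders, `bind()` for the values, and `first()`, which resolves to the first row or `null`. A minimal sketch, assuming a hypothetical `DB` binding and a hypothetical `sessions` table with `id`, `user_id`, and `expires_at` (epoch milliseconds) columns. `DatabaseLike` is a hand-written slice of the real `D1Database` type so the example stands alone.

```typescript
// Hand-written slice of the D1 binding API; use D1Database from
// @cloudflare/workers-types in a real project.
interface StatementLike {
  bind(...values: unknown[]): StatementLike;
  first<T>(): Promise<T | null>;
}
interface DatabaseLike {
  prepare(query: string): StatementLike;
}
interface Env {
  DB: DatabaseLike; // hypothetical binding name
}

interface SessionRow {
  user_id: string;
  expires_at: number; // epoch milliseconds (hypothetical schema)
}

async function getSession(env: Env, sessionId: string, nowMs: number): Promise<SessionRow | null> {
  // Placeholders plus bind() keep user input out of the SQL string.
  const row = await env.DB
    .prepare("SELECT user_id, expires_at FROM sessions WHERE id = ?")
    .bind(sessionId)
    .first<SessionRow>();
  if (row === null || row.expires_at <= nowMs) return null; // missing or expired
  return row;
}
```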

Real Production Migration Timeline

Week 1: Assessment and Setup

- Audit Lambda functions for Node.js compatibility issues

- Set up Wrangler CLI and local development

- Migrate environment variables to Workers secrets

Week 2-3: Code Migration

- Rewrite filesystem operations to use R2 or external APIs

- Replace CloudWatch logging with Workers logging

- Convert Lambda event handlers to Workers Request/Response pattern

- Set up staging environment

Week 4: Production Deployment

- Blue-green deployment with gradual traffic shifting

- Monitor performance with Workers Analytics

- Configure custom domains and SSL
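For the gradual traffic shift, one approach is a Worker in front of both backends that sends a fixed percentage of clients to the new code, hashing a stable client key so each client keeps landing on the same side as the percentage ramps up. A minimal sketch using 32-bit FNV-1a for bucketing; the hash choice, the `cf-connecting-ip` key, and the function names are assumptions, not a prescribed setup.

```typescript
// 32-bit FNV-1a over UTF-16 code units: fast and deterministic, not cryptographic.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by FNV prime, keep unsigned 32-bit
  }
  return hash >>> 0;
}

// A client is in the rollout if its bucket (0-99) is below the current percentage.
// Raising the percentage only adds clients; nobody already moved flips back.
function inRollout(clientKey: string, percent: number): boolean {
  return fnv1a32(clientKey) % 100 < percent;
}

// Example: key on the client IP that Cloudflare passes in cf-connecting-ip.
function chooseBackend(request: Request, percent: number): "workers" | "lambda" {
  const key = request.headers.get("cf-connecting-ip") ?? "unknown";
  return inRollout(key, percent) ? "workers" : "lambda";
}
```

IP addresses are a coarse key (clients behind one NAT move together, and mobile clients change addresses), so a session or user ID is steadier if you have one. For shifting traffic between versions of a Worker itself, Wrangler's built-in gradual deployments handle this without custom code.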

The Lambda Bill Reality Check

Lambda bills for the wall-clock duration of each invocation, even while your function sits idle waiting for database queries. Workers bill for CPU time only. If your function spends 80% of its time waiting on I/O, you're billed only for the remaining 20%.
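The billing difference is easier to see as a formula. A rough monthly cost model, assuming x86 Lambda at $0.0000166667 per GB-second plus $0.20 per million requests, and the Workers Standard plan at $5/month including 10 million requests and 30 million CPU-milliseconds, then $0.30 per additional million requests and $0.02 per additional million CPU-milliseconds. Those prices are assumptions based on published list prices at the time of writing, so check the current pricing pages; the model also ignores free tiers, Lambda's 1ms rounding, and API Gateway charges.

```typescript
interface LambdaPricing {
  perGbSecond: number;
  perMillionRequests: number;
}
interface WorkersPricing {
  monthlyBase: number;
  includedRequests: number;
  includedCpuMs: number;
  perMillionRequests: number;
  perMillionCpuMs: number;
}

// Assumed list prices; verify before relying on them.
const LAMBDA_X86: LambdaPricing = { perGbSecond: 0.0000166667, perMillionRequests: 0.2 };
const WORKERS_STANDARD: WorkersPricing = {
  monthlyBase: 5,
  includedRequests: 10_000_000,
  includedCpuMs: 30_000_000,
  perMillionRequests: 0.3,
  perMillionCpuMs: 0.02,
};

// Lambda: wall-clock duration multiplied by configured memory, plus a per-request fee.
function lambdaMonthlyCost(requests: number, avgWallMs: number, memoryMb: number, p: LambdaPricing): number {
  const gbSeconds = requests * (avgWallMs / 1000) * (memoryMb / 1024);
  return gbSeconds * p.perGbSecond + (requests / 1e6) * p.perMillionRequests;
}

// Workers Standard: requests plus CPU time; time spent awaiting I/O is not counted.
function workersMonthlyCost(requests: number, avgCpuMs: number, p: WorkersPricing): number {
  const extraRequests = Math.max(0, requests - p.includedRequests);
  const extraCpuMs = Math.max(0, requests * avgCpuMs - p.includedCpuMs);
  return p.monthlyBase + (extraRequests / 1e6) * p.perMillionRequests + (extraCpuMs / 1e6) * p.perMillionCpuMs;
}
```

The key asymmetry is in the inputs: an I/O-heavy endpoint feeds its full wall-clock time into `lambdaMonthlyCost` but only its CPU time into `workersMonthlyCost`.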

I've seen monthly AWS bills drop from $2,400 to $890 just from switching I/O-heavy API endpoints. The free tier is generous too: 100k requests daily covers most side projects.

Global deployment happens automatically. Lambda makes you choose regions and manage deployments manually. Workers deploy to 330+ locations without configuration.

Migration Gotchas That Bite Everyone

Memory Limits Will Surprise You:

Workers isolates get 128MB of memory on both the free and paid plans. Lambda goes up to 10GB. If you're processing large datasets in memory, you'll hit limits fast.
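The usual way around the 128MB limit is to stream data through the Worker instead of loading it into memory. A minimal sketch that counts newline-delimited records in a `ReadableStream` (such as an R2 object's `body` or a `fetch()` response body) one chunk at a time, so memory use depends on chunk size rather than object size. `countLines` is a hypothetical helper; a real job would parse each record rather than just count.

```typescript
// Counts lines by scanning bytes chunk by chunk. The newline byte (0x0a) never
// appears inside a multi-byte UTF-8 sequence, so no decoding is needed.
async function countLines(body: ReadableStream<Uint8Array>): Promise<number> {
  const reader = body.getReader();
  let lines = 0;
  let lastByte = -1; // -1 means no data seen yet
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    for (let i = 0; i < value.length; i++) {
      if (value[i] === 0x0a) lines++;
    }
    if (value.length > 0) lastByte = value[value.length - 1];
  }
  // A final line without a trailing newline still counts.
  if (lastByte !== -1 && lastByte !== 0x0a) lines++;
  return lines;
}
```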

Execution Time Constraints:

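Free-plan Workers get 10ms of CPU time per request. Paid plans default to 30 seconds of CPU time, configurable up to 5 minutes, and Cron Triggers and Queue consumers can run for up to 15 minutes. Long-running data processing jobs need to be redesigned or moved to Workers Containers.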

Local Development Differences:

Miniflare local development is good but not perfect. I've hit cases where code works locally but fails on the edge. Budget extra testing time.

When Workers Isn't The Right Choice

Heavy Computation:

Workers excel at I/O-bound workloads (APIs, webhooks, edge logic). For CPU-intensive tasks like video processing or machine learning inference, Lambda or ECS might be better fits.

Filesystem Dependencies:

If your Lambda function relies heavily on reading/writing local files and you can't refactor to use object storage, the migration complexity might not be worth it.

Team Familiarity:

If your team is deeply invested in AWS services and workflows, the learning curve and integration changes might outweigh the performance and cost benefits.

That said, Workers Containers, in beta since June 2025, addresses the heavy-computation and filesystem limitations. You can run traditional containerized applications while keeping the Workers development experience.