You've got three options for deploying Claude Sonnet 4 in production. I've been burned by all of them, so here's what actually happens when you try to make this work at scale. Spoiler: the marketing materials lie.

AWS Bedrock: "Enterprise-Grade" Until It Isn't

AWS Bedrock is what most enterprises pick because it feels safe. AWS handles the infrastructure, you get your compliance checkboxes ticked, and your CISO stops asking uncomfortable questions. But here's what they don't mention in the sales pitch:

Rate Limits Are a Fucking Nightmare: Every morning at 9am PT, your East Coast users start hitting ThrottlingException errors because everyone else is also using Claude. The error message is useless: "Rate limit exceeded for Claude Sonnet 4" with no indication of when it'll reset. Your help desk gets flooded, executives start asking questions, and you're frantically opening AWS support tickets that take 4 hours to get a response.
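If you're stuck with the throttling, the least-bad mitigation is to retry with exponential backoff and jitter instead of hammering the endpoint. Here's a minimal sketch, not a drop-in fix. It assumes you're calling Bedrock through boto3, where throttling surfaces as a `botocore.exceptions.ClientError` whose `response["Error"]["Code"]` is `"ThrottlingException"`. The names `call_with_backoff` and `is_throttled` and the default delays are mine, picked for illustration.

```python
import random
import time


def is_throttled(exc: Exception) -> bool:
    """True if exc looks like a botocore ClientError carrying ThrottlingException."""
    response = getattr(exc, "response", None) or {}
    return response.get("Error", {}).get("Code") == "ThrottlingException"


def call_with_backoff(call, max_attempts=5, base_delay=0.5, max_delay=20.0,
                      sleep=time.sleep, rng=random.random):
    """Run call(); on throttling, wait with full-jitter exponential backoff and retry.

    The delays are illustrative defaults, not AWS-recommended values.
    """
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Re-raise anything that is not throttling, or the final failed attempt.
            if not is_throttled(exc) or attempt == max_attempts - 1:
                raise
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng() * cap)  # full jitter: uniform in [0, cap)
```

You'd wrap the actual request in a lambda, something like `call_with_backoff(lambda: client.converse(...))`, where `client` is your own `bedrock-runtime` client. boto3 also ships its own retry modes via `botocore.config.Config(retries={...})`, so check whether that covers your case before rolling your own.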

IAM Integration is a Joke: Sure, Bedrock supports IAM, but good luck debugging why Jennifer from Marketing can't access Claude while Bob from IT can. The permission model is documented like shit, and error messages just say "Access Denied" without telling you which of the 47 different policies is blocking the request.

Reserved Capacity Sounds Great (Until You're Stuck): AWS promises 30% savings with reserved capacity. What they don't tell you is you're locked in for a year, and if your usage drops (layoffs, anyone?), you're still paying for tokens you'll never use. We wasted stupid money on reserved capacity we never used after a project got axed.
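Before you sign, run the break-even math. This is a back-of-the-envelope sketch under a simplifying assumption: the reserved commitment costs a flat (1 - discount) of what the same committed volume would cost on-demand, and you pay it whether you use it or not. The 30% comes from the pitch above. The $10,000/month figure is made up for illustration, and real Bedrock pricing is more complicated than this.

```python
def break_even_utilization(discount: float) -> float:
    """Fraction of the committed volume you must actually use to break even."""
    return 1.0 - discount


def annual_savings(on_demand_monthly: float, discount: float,
                   utilization: float) -> float:
    """Positive means reserved was cheaper; negative means you overpaid.

    on_demand_monthly: on-demand price of the full committed volume (hypothetical).
    utilization: share of the committed volume you really consume (0.0 to 1.0).
    """
    reserved_cost = on_demand_monthly * (1.0 - discount) * 12   # paid no matter what
    on_demand_cost = on_demand_monthly * utilization * 12       # pay only for use
    return on_demand_cost - reserved_cost


if __name__ == "__main__":
    print(break_even_utilization(0.30))
    print(annual_savings(10_000, 0.30, 1.0))   # project survives, full usage
    print(annual_savings(10_000, 0.30, 0.4))   # project gets axed, usage drops to 40%
```

With a 30% discount, you need to use roughly 70% of what you committed to just to break even. Anything below that and on-demand would have been cheaper.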

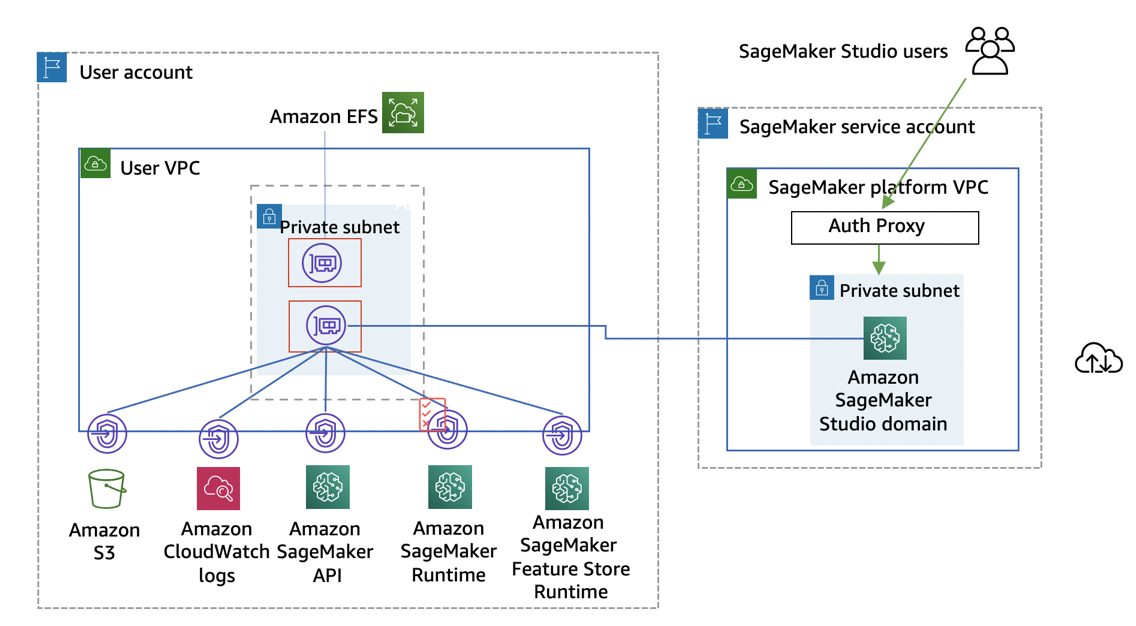

Look, when it's not being a pain in the ass, it actually works pretty well. VPC isolation keeps your data in your network, and you can scale up without calling anyone. Just budget 2-3x your projected costs because AWS billing is creative with token counting.

Google Vertex AI: For When You Hate Yourself

Google Cloud Vertex AI is what you pick if you're already all-in on Google's ecosystem and enjoy debugging authentication issues that make no sense. The integration with BigQuery is actually pretty slick, and you get the full 1M context window without AWS's weird token counting tricks.

But holy shit, the setup is complicated. You need a PhD in GCP IAM to get anything working, and Google's documentation reads like it was written by robots for robots. Expect to spend 3-4 weeks just getting Claude to respond to "hello world" because of some obscure permission buried in their service account maze.

Their pricing calculator is bullshit - it'll say $500/month and your first bill is $2,800 because of some data processing fees nobody mentioned.

Direct Anthropic API: Fast Until It Breaks

The direct Anthropic API is what you use when you want the latest features and don't mind building everything yourself. You get Claude Sonnet 4's new capabilities months before AWS gets around to supporting them, and the rate limits are actually reasonable during business hours.

Here's the catch: when Anthropic has an outage (and they do), your entire product goes down and there's nobody to call. Support tickets get answered in 12-24 hours if you're lucky, and in 3-5 days if you're not paying for enterprise support. I've had prod down for 6 hours waiting for them to acknowledge a regional API issue.

You're also on your own for security, monitoring, and compliance. Want SOC 2 compliance? Build your own audit trail. Need to integrate with your SSO? Hope you like writing OAuth flows. The trade-off is worth it if you need cutting-edge features, but budget extra engineering time for all the enterprise shit you have to build yourself.
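To give you a feel for what "build your own audit trail" means at its most basic, here's a minimal sketch: wrap every model call and append one JSON line recording who asked, when, and whether it worked. Everything here is an assumption for illustration, including the `audited_call` name, the record fields, and the idea of hashing the prompt rather than storing it. A real audit setup needs a lot more (tamper-resistant storage, retention policy, access controls), so treat this as a starting point, not a compliance solution.

```python
import hashlib
import json
import time
import uuid


def audited_call(call, user_id, prompt, log_file, clock=time.time):
    """Invoke call(prompt) and append one JSON line describing the request.

    call: any function that takes the prompt and returns a response
          (for example, a thin wrapper around your API client).
    log_file: any writable text file-like object.
    """
    record = {
        "request_id": str(uuid.uuid4()),
        "user_id": user_id,
        "timestamp": clock(),
        # Store a digest, not the prompt, so the log itself holds no sensitive text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    }
    try:
        result = call(prompt)
        record["status"] = "ok"
        return result
    except Exception as exc:
        record["status"] = "error"
        record["error_type"] = type(exc).__name__
        raise
    finally:
        # Runs on success and failure, so every attempt leaves a trace.
        log_file.write(json.dumps(record) + "\n")
        log_file.flush()
```

The `finally` block is the point: failed calls get logged too, which is usually what the auditor asks about first.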

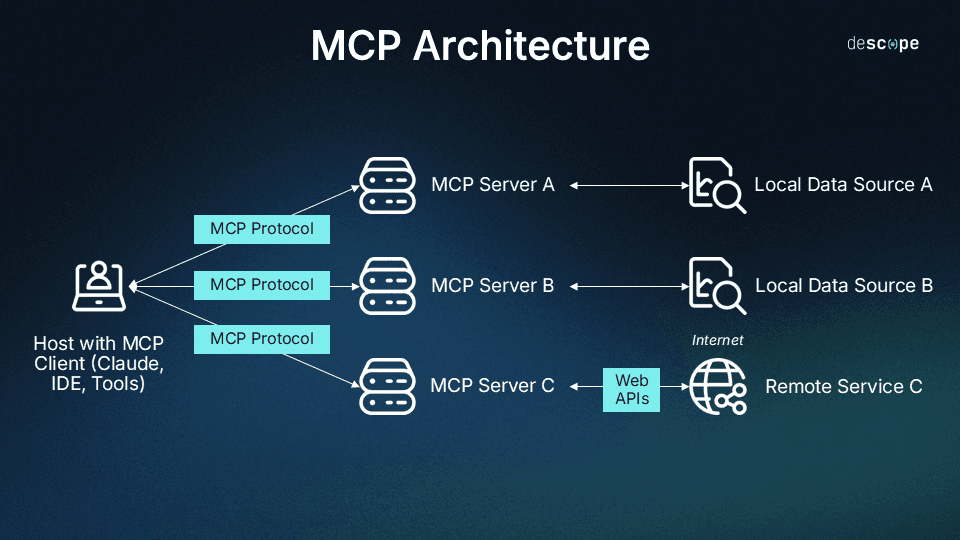

Multi-Cloud (Or: How to Triple Your Complexity)

Some masochistic enterprises try to use all three platforms for different use cases. The theory sounds good:

- Dev/Test: Direct API for latest features
- Production: Bedrock for "enterprise reliability"
- Analytics: Vertex AI for BigQuery integration

In practice, you're now debugging three different authentication systems, tracking costs across three billing systems, and training your team on three different APIs. Every outage becomes a game of "which provider is broken today?"

I've seen teams spend 6 months building abstraction layers to hide the differences between providers, only to discover that each platform has unique quirks that break the abstraction. Pick one provider and stick with it. Multi-cloud sounds smart until you're debugging three different auth systems at 2am.

Real Talk: Start with AWS Bedrock if you need enterprise compliance, or direct API if you don't. You can always migrate later, but trying to be multi-cloud from day one is a recipe for burnout.