Claude Sonnet 4 launched on May 22, 2025, and it's the first AI model that doesn't make me want to throw my laptop out the window. After spending months debugging Claude 3.5's weird hallucinations and paying through the nose for Opus, Sonnet 4 actually delivers what Anthropic promised.

Here's the reality: it costs $3 per million input tokens and $15 per million output tokens, a fifth of what Opus charges, while handling most of the same complex coding tasks. The big difference is the dual-mode setup: standard responses for when you just need to fix a stupid syntax error, and extended thinking when you're staring at a bug that's been haunting your codebase for weeks.
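
If you want to sanity-check a bill before it lands, the arithmetic is simple enough to script. This is a back-of-the-envelope sketch using only the two prices quoted above; the function name and the example token counts are mine, and it ignores anything like caching or batch discounts.

```python
# Prices in USD per million tokens, taken from the figures quoted above.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return a rough cost in USD for a single request."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MTOK
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MTOK
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: a 150K-token code dump and a 4K-token answer.
    print(f"${estimate_cost(150_000, 4_000):.2f}")
```

Input is what usually dominates when you paste in a lot of code, but output costs five times as much per token, so long answers add up quicker than you'd expect.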

Actually Useful Context Window (With Caveats)

The 200K context window is legit - you can dump entire codebases without worrying about truncation. The 1M token beta works but performance gets weird past 500K tokens and costs spiral fast.
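
Before dumping a repo into a prompt, it's worth a quick check on whether it will even fit. Here's a rough sketch: the 4-characters-per-token ratio is a common rule of thumb and an assumption on my part, not the real tokenizer, and the helper names and the output reserve are made up for illustration.

```python
CONTEXT_LIMIT = 200_000   # standard context window quoted above
CHARS_PER_TOKEN = 4       # ASSUMPTION: rough heuristic, not the actual tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(files: dict[str, str], reserve_for_output: int = 8_000) -> bool:
    """Check whether all file bodies plus room for a reply fit in the window."""
    total = sum(estimate_tokens(body) for body in files.values())
    return total + reserve_for_output <= CONTEXT_LIMIT


if __name__ == "__main__":
    # Hypothetical file contents keyed by path.
    repo = {"src/App.tsx": "export default function App() {}\n" * 200}
    print(fits_in_context(repo))
```

Treat the result as a smoke test. Code tends to tokenize less efficiently than prose, so leave yourself some margin.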

I've been testing it on a React app with 50+ components and it actually maintains context across files - no more "sorry, I forgot what we were doing" bullshit. But watch your API usage because extended thinking gets expensive fast - I've seen $50+ bills from single debugging sessions.

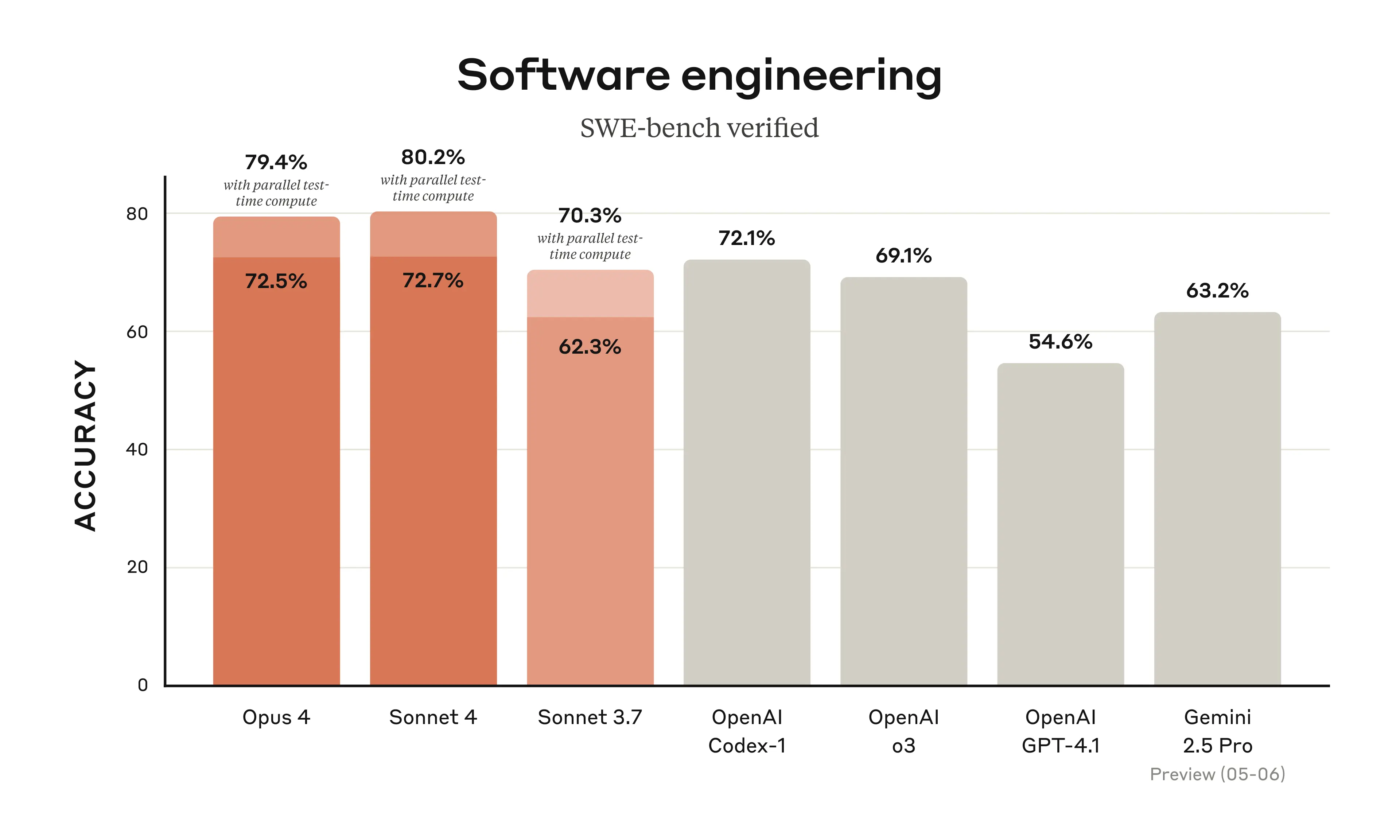

SWE-bench Reality Check

Sonnet 4 scores 72.7% on SWE-bench Verified, which sounds impressive until you realize SWE-bench tests are cherry-picked GitHub issues. In practice, it's way better than 3.5 for debugging React hydration errors and finding edge cases in async code, but it still hallucinates function names sometimes.

The vision support is actually solid - it can read error screenshots and suggest fixes. Parallel tool execution means it doesn't take 30 seconds to run multiple API calls anymore, which was driving me insane with previous models.
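
On the client side, parallel tool execution only pays off if you actually run the requested tools concurrently. Below is a minimal sketch of that idea, assuming the model hands back several `tool_use` blocks in one response and expects a `tool_result` for each. The `fetch_status` tool and the `TOOLS` registry are hypothetical, and nothing here talks to the real API.

```python
import asyncio


async def fetch_status(service: str) -> str:
    """Hypothetical tool: pretend to hit a slow status endpoint."""
    await asyncio.sleep(0.1)
    return f"{service}: ok"


# Hypothetical registry mapping tool names to async handlers.
TOOLS = {"fetch_status": fetch_status}


async def run_tool_calls(tool_use_blocks: list[dict]) -> list[dict]:
    """Run every requested tool concurrently and build tool_result blocks."""

    async def run_one(block: dict) -> dict:
        result = await TOOLS[block["name"]](**block["input"])
        return {"type": "tool_result", "tool_use_id": block["id"], "content": result}

    # gather() runs the calls concurrently and keeps the original order.
    return await asyncio.gather(*(run_one(block) for block in tool_use_blocks))


if __name__ == "__main__":
    blocks = [
        {"type": "tool_use", "id": "t1", "name": "fetch_status", "input": {"service": "api"}},
        {"type": "tool_use", "id": "t2", "name": "fetch_status", "input": {"service": "db"}},
    ]
    print(asyncio.run(run_tool_calls(blocks)))
```

With two calls at 0.1 seconds each, the whole batch takes roughly as long as the slowest call instead of the sum.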

Training Data Actually Matters

March 2025 training cutoff means it knows about React 19, Next.js App Router patterns, and TypeScript 5.x quirks that older models completely miss. It can help with Vite 6.0 migration, Tailwind v4 changes, and other recent framework updates that would leave GPT-4 scratching its digital head.

Extended thinking is where Sonnet 4 actually shines - it thinks through problems instead of barfing out garbage. I used it to debug some recursive component re-render nightmare that had me stumped for like 2 days. Worth every extra token when you're dealing with complex React patterns.
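
Since thinking tokens cost real money, I'd rather opt in per request with a hard budget than leave it on. Here's a sketch of how I'd build the request, assuming the Messages API's `thinking` parameter with a `budget_tokens` field and a 1,024-token minimum, as I understand the docs. The `build_request` helper and its defaults are my own invention, and the model ID is the one I believe was published for Sonnet 4.

```python
MODEL = "claude-sonnet-4-20250514"  # ASSUMPTION: model ID as I understand it
MIN_THINKING_BUDGET = 1024          # ASSUMPTION: documented minimum budget


def build_request(
    prompt: str,
    think: bool = False,
    thinking_budget: int = 4_000,
    max_tokens: int = 8_000,
) -> dict:
    """Build keyword arguments for a Messages API call; thinking is off by default."""
    request = {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if think:
        if thinking_budget < MIN_THINKING_BUDGET:
            raise ValueError("thinking budget is below the minimum")
        if thinking_budget >= max_tokens:
            raise ValueError("thinking budget must be smaller than max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return request


if __name__ == "__main__":
    # Cheap by default; thinking only when the bug deserves it.
    print(build_request("Fix this syntax error"))
    print(build_request("Why does this component re-render forever?", think=True))
```

With the official Python SDK installed, the dict should be usable as `anthropic.Anthropic().messages.create(**request)`. Thinking tokens bill at the output rate as far as I can tell, so the budget is effectively a spending cap per call.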

The Platform Mess (Choose Your Poison)

You can run Sonnet 4 through Anthropic's direct API, AWS Bedrock, or Google Cloud Vertex AI. AWS has been solid for production but rate limits are annoying. The direct API works fine but you'll hit demand spikes during peak hours.
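
Whichever platform you pick, wrap your calls in a retry with exponential backoff so a rate limit doesn't kill a long session. This is a generic sketch, not any platform's official client behavior: `RateLimited` is a hypothetical stand-in for whatever rate-limit error your SDK raises, and the delays are arbitrary starting points.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical stand-in for your SDK's rate-limit (HTTP 429) error."""


def with_backoff(call, max_attempts: int = 5, base_delay: float = 1.0, sleep=time.sleep):
    """Call `call()` and retry on RateLimited with exponential backoff plus jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts, let the caller deal with it
            # 1s, 2s, 4s, ... plus random jitter so parallel clients spread out.
            delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            sleep(delay)


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky():
        # Fails twice with a rate limit, then succeeds.
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise RateLimited()
        return "ok"

    print(with_backoff(flaky, base_delay=0.01))
```

Passing `sleep` in as a parameter keeps the helper easy to test without actually waiting.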

Claude Code is their command-line coding tool, with a VS Code extension on top, and it's honestly pretty good once you get past the initial setup headaches. Just don't enable extended thinking by default or you'll get surprise bills like I did in week one - burned through like 200 bucks before I figured out what happened.

Sonnet 4 destroys GPT-4 for coding tasks

Rate limiting during peak hours (US business hours are brutal).