Computer Use breaks in the same five ways every damn time. Usually when you're demoing it to your boss at 2am, because of course it does.

Alright, enough bitching. Here's how to figure out what the hell broke this time when Claude stops working:

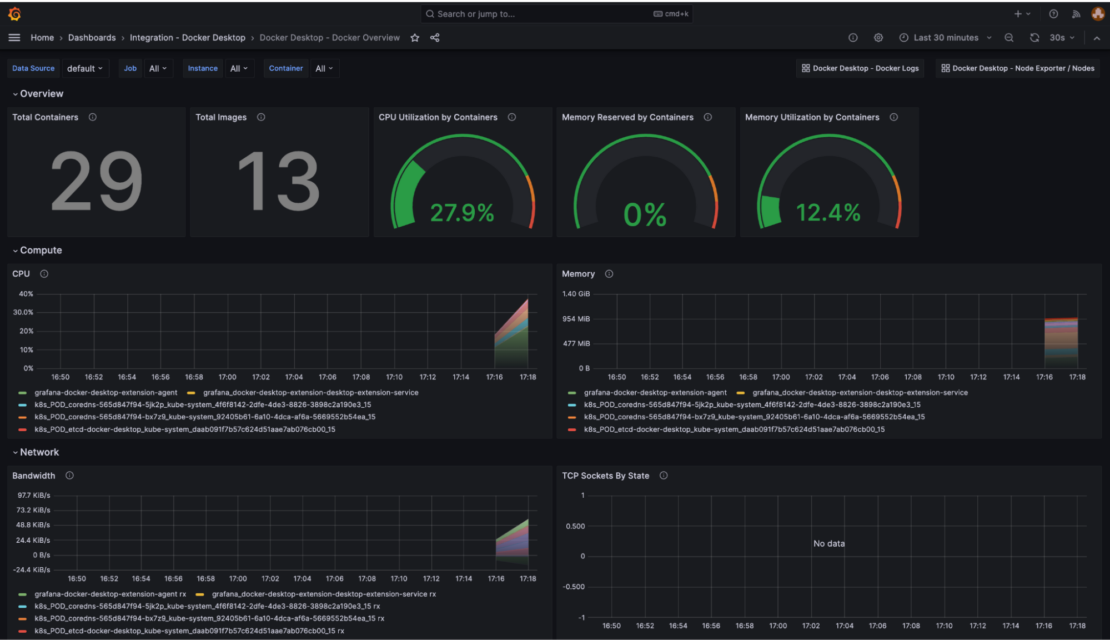

Container Health (Check This First)

Before blaming Claude, check if your Docker setup is fundamentally broken. Most "AI failures" are actually infrastructure problems.

Quick Container Diagnostic:

## Check if container is actually running

docker ps -a | grep computer-use

## Check resource usage (out of memory kills Claude)

docker stats computer-use

## Check container logs for crashes

docker logs computer-use --tail 50

## Test VNC connection directly

curl -I localhost:8080

Common Red Flags:

- Container shows "Exited (137)" = Out of memory (the classic)

- Container shows "Exited (125)" = Docker run error (usually port conflicts)

- VNC returns 404 = Web server not running in container

- High CPU usage = Stuck in screenshot loop (check your bill immediately)

- "Error: address already in use" = Port 8080 is taken (happens with Jupyter/Django)
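If you'd rather not eyeball `docker ps` output at 2am, the red flags above can be roughly automated. This is a minimal sketch, not an official tool: the hint wording and the helper names `explain_exit_code` and `inspect_container` are made up for illustration, and it assumes your container is named `computer-use` like everywhere else in this guide. It reads the `ExitCode` and `OOMKilled` fields that `docker inspect` reports under `.State`.

```python
import json
import subprocess

# Exit codes taken from the red-flag list above; the hint wording is illustrative.
EXIT_CODE_HINTS = {
    0: "exited cleanly; check whether the entrypoint finished early",
    125: "docker run itself failed (often a port conflict or a bad flag)",
    137: "killed with SIGKILL, most often by the out-of-memory killer",
}


def explain_exit_code(code: int, oom_killed: bool = False) -> str:
    """Translate a container exit code into a human-readable hint."""
    if oom_killed:
        return "out of memory: Docker reports OOMKilled=true"
    return EXIT_CODE_HINTS.get(code, f"unrecognized exit code {code}; read the container logs")


def inspect_container(name: str = "computer-use") -> str:
    """Ask Docker for the container state and explain it (hypothetical helper)."""
    result = subprocess.run(
        ["docker", "inspect", "--format", "{{json .State}}", name],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return f"docker inspect failed: {result.stderr.strip()}"
    state = json.loads(result.stdout)
    if state.get("Running"):
        return "container is running"
    return explain_exit_code(state.get("ExitCode", -1), state.get("OOMKilled", False))
```

Call `print(inspect_container())` on the Docker host. `OOMKilled` is the more reliable signal, since exit code 137 only tells you the process got a SIGKILL from somewhere.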

Network Bullshit

Computer Use needs to reach multiple endpoints. Network problems cause mysterious failures.

Test All Required Connections:

## From inside the container, test API connectivity

docker exec -it computer-use curl -I https://api.anthropic.com/

## Test DNS resolution (corporate firewalls break this)

docker exec -it computer-use nslookup api.anthropic.com

## Check if proxy/firewall blocks Anthropic

curl -v https://docs.anthropic.com/en/api/getting-started

Corporate networks will screw you over in these predictable ways:

- Proxy blocks api.anthropic.com (obviously)

- SSL inspection breaks everything (classic IT)

- DNS redirects Anthropic to security scanners (paranoid bastards)

- Firewall blocks HTTPS on weird ports (because why make life easy?)
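To tell these failure modes apart, it helps to test DNS, TCP, and TLS one stage at a time. The sketch below does that with the standard library only. The names `probe`, `interpret`, and `HINTS` are hypothetical, and the hint text is my guess at the most likely cause for each stage, not a definitive diagnosis. Note that it connects directly, so on a network that requires a proxy the TCP stage failing is itself the answer.

```python
import socket
import ssl

# Likely cause per failing stage; wording is illustrative, not authoritative.
HINTS = {
    "ok": "reachable; if the certificate issuer is your employer, SSL inspection is active",
    "dns": "DNS lookup failed or is being redirected; check the container's resolver",
    "tcp": "connection blocked; check proxy settings and firewall rules",
    "tls": "TLS handshake failed; SSL inspection probably needs the corporate CA installed",
}


def probe(host: str = "api.anthropic.com", port: int = 443, timeout: float = 5.0) -> dict:
    """Try DNS, TCP, and TLS in order and record the first stage that fails."""
    result = {"host": host, "stage": "ok", "detail": ""}
    try:
        socket.getaddrinfo(host, port)
    except socket.gaierror as exc:
        result.update(stage="dns", detail=str(exc))
        return result
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except OSError as exc:
        result.update(stage="tcp", detail=str(exc))
        return result
    try:
        context = ssl.create_default_context()
        with context.wrap_socket(sock, server_hostname=host) as tls:
            # Record who issued the certificate so SSL inspection is easy to spot
            issuer = dict(rdn[0] for rdn in tls.getpeercert().get("issuer", ()))
            result["detail"] = issuer.get("organizationName", "")
    except (ssl.SSLError, OSError) as exc:
        result.update(stage="tls", detail=str(exc))
    finally:
        sock.close()
    return result


def interpret(result: dict) -> str:
    """Turn a probe result into a one-line explanation."""
    suffix = f" ({result['detail']})" if result["detail"] else ""
    return f"{result['host']}: {HINTS[result['stage']]}{suffix}"
```

Run `print(interpret(probe()))` both on the host and inside the container. If the two disagree, the problem is Docker networking rather than the corporate firewall.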

How to unfuck corporate networks:

## docker-compose.yml
services:
  computer-use:
    environment:
      - HTTP_PROXY=http://your-proxy:8080
      - HTTPS_PROXY=http://your-proxy:8080
      - NO_PROXY=localhost,127.0.0.1

Screenshot Analysis Problems

Claude's screenshot analysis fails in specific, debuggable ways. Here's how to identify vision problems.

Manual Screenshot Testing:

## Take a screenshot like Claude does

docker exec computer-use scrot /tmp/debug.png

## Copy it out for analysis

docker cp computer-use:/tmp/debug.png ./debug_screenshot.png

Visual Debugging Checklist:

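- Resolution problems: Image should exactly match the display size you pass to the tool as display_width_px and display_height_px (1280x800 throughout this guide)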

- Color issues: Check if image is grayscale (color mapping broken)

- Partial captures: Incomplete screenshots indicate display driver issues

- Font rendering: Blurry text means DPI scaling problems

- UI element visibility: Check if buttons/forms are actually visible
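The first two items on that checklist can be checked without opening the image. Here's a rough sketch that reads the PNG header directly using only the standard library. The function names `png_info` and `check_screenshot` are hypothetical, and the 1280x800 default is just this guide's assumed resolution. Blurry fonts and partial captures still need a human looking at the file.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
GRAYSCALE_TYPES = {0, 4}  # PNG color types: 0 = grayscale, 4 = grayscale + alpha


def png_info(data: bytes) -> dict:
    """Read width, height, bit depth, and color type from a PNG's IHDR chunk."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height, bit_depth, color_type = struct.unpack(">IIBB", data[16:26])
    return {"width": width, "height": height, "bit_depth": bit_depth, "color_type": color_type}


def check_screenshot(data: bytes, expected=(1280, 800)) -> list:
    """Return a list of problems; an empty list means the header looks sane."""
    info = png_info(data)
    problems = []
    if (info["width"], info["height"]) != expected:
        problems.append(
            f"resolution is {info['width']}x{info['height']}, "
            f"expected {expected[0]}x{expected[1]}"
        )
    if info["color_type"] in GRAYSCALE_TYPES:
        problems.append("image is grayscale")
    return problems
```

Usage: `check_screenshot(open("debug_screenshot.png", "rb").read())` on the file you copied out of the container.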

Resolution Fix:

## Force container to exact resolution

docker exec computer-use xrandr --output VNC-0 --mode 1280x800

For more X11 forwarding troubleshooting, check the display settings and VNC configuration.

API Response Analysis

When Claude sends weird responses, the API interaction is breaking down.

API Debugging Script:

import base64
import json

import anthropic

client = anthropic.Anthropic(api_key="your-key")

## Test with same screenshot Claude uses - this broke in Sept 2025 update
with open("debug_screenshot.png", "rb") as f:
    image_data = base64.b64encode(f.read()).decode()

## Check file size - Computer Use has 100MB limit
## (base64 inflates data by 4/3, so multiply by 3/4 to recover the raw size)
file_size_mb = len(image_data) * 3 / 4 / 1024 / 1024
if file_size_mb > 100:
    print(f"WARNING: Screenshot {file_size_mb:.1f}MB exceeds 100MB limit")

## Computer use is a beta tool: call the beta endpoint and pass the beta flag
## that matches the tool version, or you get invalid_request_error
response = client.beta.messages.create(
    model="claude-sonnet-4-20250514",  # must be a model that supports computer_20250124
    max_tokens=1000,
    betas=["computer-use-2025-01-24"],
    tools=[{
        "type": "computer_20250124",  # Updated tool version
        "name": "computer",
        "display_width_px": 1280,
        "display_height_px": 800,
    }],
    messages=[{
        "role": "user",
        "content": [
            {"type": "text", "text": "Take a screenshot"},
            {"type": "image", "source": {
                "type": "base64",
                "media_type": "image/png",
                "data": image_data,
            }},
        ],
    }],
)

print(json.dumps(response.model_dump(), indent=2))

API Error Patterns:

- rate_limit_error: You're hitting API limits (add delays)

- request_too_large: Screenshot file too big (compress images)

- invalid_request_error: Missing beta header or malformed request

- overloaded_error: Anthropic's servers are struggling (retry with backoff)
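Since "add delays" and "retry with backoff" are the fix for two of those errors, here's a minimal sketch of exponential backoff with jitter. It's deliberately generic: `retry_with_backoff` is a hypothetical helper, not part of the Anthropic SDK, and the delay numbers are arbitrary starting points. In real use you'd pass the SDK's exception classes (for example `anthropic.RateLimitError`) as `retryable` instead of catching everything.

```python
import random
import time


def retry_with_backoff(call, retryable=(Exception,), max_attempts=5,
                       base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call `call()` until it succeeds, sleeping longer after each failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the real error
            # Double the delay each attempt, capped at max_delay
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Add up to 50% jitter so parallel workers don't retry in lockstep
            sleep(delay + random.uniform(0, delay / 2))
```

Wrap the API call in a lambda, e.g. `retry_with_backoff(lambda: client.beta.messages.create(...))`. The `sleep` parameter exists only so the helper can be tested without actually waiting.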

Action Execution Failures

Claude sees the screen correctly but clicks fail or do nothing.

Mouse Click Debugging:

## Test X11 mouse simulation directly

docker exec computer-use xdotool mousemove 640 400 click 1

## Check if click registered in logs

docker logs computer-use | grep -i click

## Test keyboard input

docker exec computer-use xdotool type "test input"

Click Failure Causes:

- Wrong window focus: Click goes to background application

- Coordinate offset: DPI scaling or window decoration issues

- Security restrictions: X11 permissions block input simulation

- Timing issues: UI changed between screenshot and click
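The coordinate offset case is plain arithmetic: if the screenshot Claude sees is a different size from the real display, every click lands scaled off target. A rough sketch of the mapping follows. `scale_point` and `in_bounds` are hypothetical helpers, and this only covers uniform scaling, not offsets from window decorations.

```python
def scale_point(x, y, screenshot_size, display_size):
    """Map a point from screenshot pixel space to real display pixel space."""
    shot_w, shot_h = screenshot_size
    disp_w, disp_h = display_size
    return round(x * disp_w / shot_w), round(y * disp_h / shot_h)


def in_bounds(x, y, display_size):
    """True if the point is actually on the display."""
    width, height = display_size
    return 0 <= x < width and 0 <= y < height
```

For example, a click at (640, 400) in a 1280x800 screenshot maps to (1280, 800) on a 2560x1600 display. If `in_bounds` fails for coordinates Claude returns, the declared display size and the real one disagree.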

Focus Debugging:

## Check which window has focus

docker exec computer-use xdotool getwindowfocus getwindowname

## Force focus to main application

docker exec computer-use xdotool search --name "Firefox" windowactivate

Cost & Performance Monitoring

Track what's actually happening vs. what you think is happening. Monitor container performance to catch issues early.

Real-time Monitoring Script:

## Monitoring script I hacked together at 3am after waking up to a $500 API bill because Claude spent 8 hours taking screenshots of a goddamn modal dialog

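import subprocess
import time
from datetime import datetime

CONTAINER = "computer-use"

def count_recent_screenshots(since="30s"):
    # ASSUMPTION: your agent loop logs one line containing "screenshot" per capture;
    # adjust the match to whatever your setup actually logs
    result = subprocess.run(["docker", "logs", "--since", since, CONTAINER],
                            capture_output=True, text=True)
    lines = (result.stdout + result.stderr).splitlines()
    return sum("screenshot" in line.lower() for line in lines)

def monitor_computer_use(poll_seconds=30):
    start_time = datetime.now()
    screenshot_count = 0
    while True:
        # Check if container is still alive
        result = subprocess.run(["docker", "ps", "-q", "-f", f"name={CONTAINER}"],
                                capture_output=True, text=True)
        if not result.stdout.strip():
            print("CONTAINER IS DEAD")
            break
        # Count screenshots since the last poll so the cost estimate actually moves
        screenshot_count += count_recent_screenshots(f"{poll_seconds}s")
        # Rough cost estimate (screenshots are ~2 cents each)
        estimated_cost = screenshot_count * 0.02
        print(f"Runtime: {datetime.now() - start_time}")
        print(f"Screenshots: {screenshot_count}")
        print(f"Rough cost: ${estimated_cost:.2f}")
        if estimated_cost > 20:
            print("COSTS GETTING HIGH - CHECK WHAT'S HAPPENING")
        time.sleep(poll_seconds)

if __name__ == "__main__":
    monitor_computer_use()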

Performance Red Flags:

- More than 1 screenshot per 3 seconds = Loop or lag

- Cost increasing faster than task completion = Inefficiency

- Container CPU > 80% sustained = Resource starvation

- Memory usage growing = Memory leak in automation code
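The first red flag is easy to check mechanically with a sliding window. This sketch treats "more than 1 screenshot per 3 seconds" as "more than 10 in any 30 seconds". `ScreenshotRateMonitor` is a hypothetical class, and you'd have to call `record()` from wherever your agent loop takes screenshots, which this guide doesn't show.

```python
from collections import deque


class ScreenshotRateMonitor:
    """Flag bursts of screenshots using a sliding time window."""

    def __init__(self, max_per_window=10, window_seconds=30.0):
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        self.timestamps = deque()

    def record(self, now):
        """Record one screenshot at time `now` (seconds); return True if the rate is too high."""
        self.timestamps.append(now)
        # Drop timestamps that have aged out of the window
        while self.timestamps and now - self.timestamps[0] >= self.window_seconds:
            self.timestamps.popleft()
        return len(self.timestamps) > self.max_per_window
```

In a live loop, pass `time.monotonic()` as `now` and pause the agent when `record()` returns True. Taking the timestamp as an argument keeps the class easy to test.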

Advanced Debugging Techniques

When basic diagnostics don't reveal the problem.

Full Request/Response Logging:

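## Enable debug logging (the Anthropic SDK talks HTTP through httpx, whose loggers emit request details at DEBUG level)
import logging
logging.basicConfig(level=logging.DEBUG)

## Log raw HTTP traffic from http.client-based code (urllib, requests); this does not affect the httpx-based Anthropic SDK
import http.client as http_client
http_client.HTTPConnection.debuglevel = 1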

X11 Event Monitoring:

## Watch all X11 events in real-time

docker exec computer-use xinput test-xi2 --root

## Monitor window events

docker exec computer-use xev | grep -E '(Button|Key|Enter|Leave)'

Screenshot Comparison Tool:

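import numpy as np
from PIL import Image, ImageChops

def compare_screenshots(img1_path, img2_path):
    """Find what changed between screenshots"""
    # Normalize to RGB so ImageChops.difference gets matching modes
    img1 = Image.open(img1_path).convert("RGB")
    img2 = Image.open(img2_path).convert("RGB")
    if img1.size != img2.size:
        raise ValueError(f"Size mismatch: {img1.size} vs {img2.size}")
    diff = ImageChops.difference(img1, img2)
    # Convert to numpy for analysis
    diff_array = np.array(diff)
    # A pixel counts as changed if any channel differs by more than the threshold
    changed_pixels = int(np.sum(diff_array.max(axis=2) > 10))
    total_pixels = diff_array.shape[0] * diff_array.shape[1]
    print(f"Changed pixels: {changed_pixels}")
    print(f"Change percentage: {(changed_pixels / total_pixels) * 100:.2f}%")
    # Save difference image for visual inspection
    diff.save("screenshot_diff.png")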
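A cheaper cousin of the comparison tool is useful for catching the "8 hours staring at a modal dialog" failure: hash each screenshot and count how many times in a row nothing changed. This is a sketch under a big assumption, namely that a stuck screen produces byte-identical captures. A blinking cursor or a clock in the corner defeats it, and then you need a pixel-diff threshold instead. `StuckDetector` is a hypothetical name.

```python
import hashlib


class StuckDetector:
    """Detect a run of byte-identical screenshots."""

    def __init__(self, max_repeats=5):
        self.max_repeats = max_repeats
        self.last_digest = None
        self.repeats = 0

    def update(self, screenshot_bytes: bytes) -> bool:
        """Feed one screenshot; return True once it has repeated max_repeats times in a row."""
        digest = hashlib.sha256(screenshot_bytes).hexdigest()
        if digest == self.last_digest:
            self.repeats += 1
        else:
            self.last_digest = digest
            self.repeats = 1
        return self.repeats >= self.max_repeats
```

Feed it the raw PNG bytes of every capture and kill the run when `update()` returns True. That costs a few cents instead of a few hundred dollars.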

This diagnostic approach catches 90% of Computer Use problems. When you find yourself debugging for hours, step back and work through this checklist systematically. Most issues are infrastructure problems disguised as AI failures.