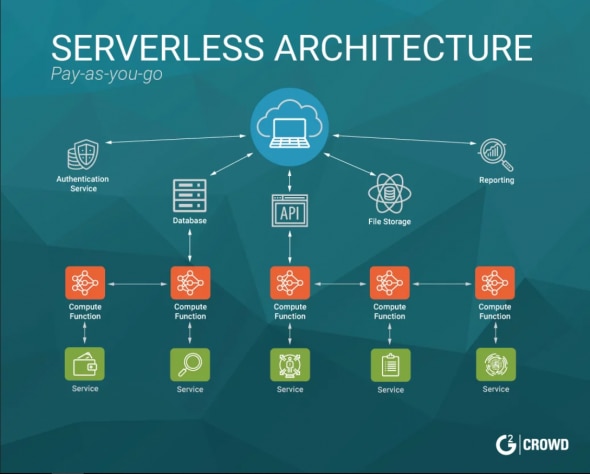

After two years running production workloads on both platforms, here's the marketing bullshit versus the reality of Google Cloud Run and AWS Fargate.

Cloud Run's "Simple" Deployment Broke Our Production

Google's one-command deployment sounds amazing until you need anything beyond a Hello World app.

Our first production deployment took 3 weeks instead of the promised "minutes" because:

The VPC Connector Hell

Cloud Run's networking is broken by design.

The moment you need to connect to a Cloud SQL instance in a VPC, you're fucked. VPC connectors randomly time out with zero error messages.

Found this out at 2am when our API started returning 503s.

The troubleshooting docs are useless.

Real fix? Pray and redeploy. Sometimes it works, sometimes it doesn't. Developers on Stack Overflow are still hitting the same timeout hell; Google's "direct VPC egress" was supposed to fix this shit, but it didn't.
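If you're stuck in the "pray and redeploy" loop, one generic application-side mitigation (not a fix for the connector itself) is to retry the connection with capped exponential backoff and jitter instead of failing the request on the first timeout. A minimal Python sketch, assuming your database driver's connect call raises `TimeoutError` or `ConnectionError` when the connector flakes; `connect` is whatever callable you pass in:

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")

def retry_with_backoff(
    connect: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `connect()` until it succeeds or `attempts` runs out.

    Between failures, sleep a random time in [0, cap], where cap doubles
    each attempt up to `max_delay` ("full jitter" backoff).
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return connect()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original timeout
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Keep the total retry budget well under your request timeout, or the retries just turn a fast 503 into a slow one.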

Memory Limits That Make No Sense

Cloud Run claims to support up to 32GB memory, but good luck using more than 4GB without random container startup timeouts.

Our Node.js app with 6GB allocation failed 30% of the time during cold starts. No logs, no explanation.

This production disaster sounds exactly like what we went through.

The solution? Scale down memory and accept shittier performance.

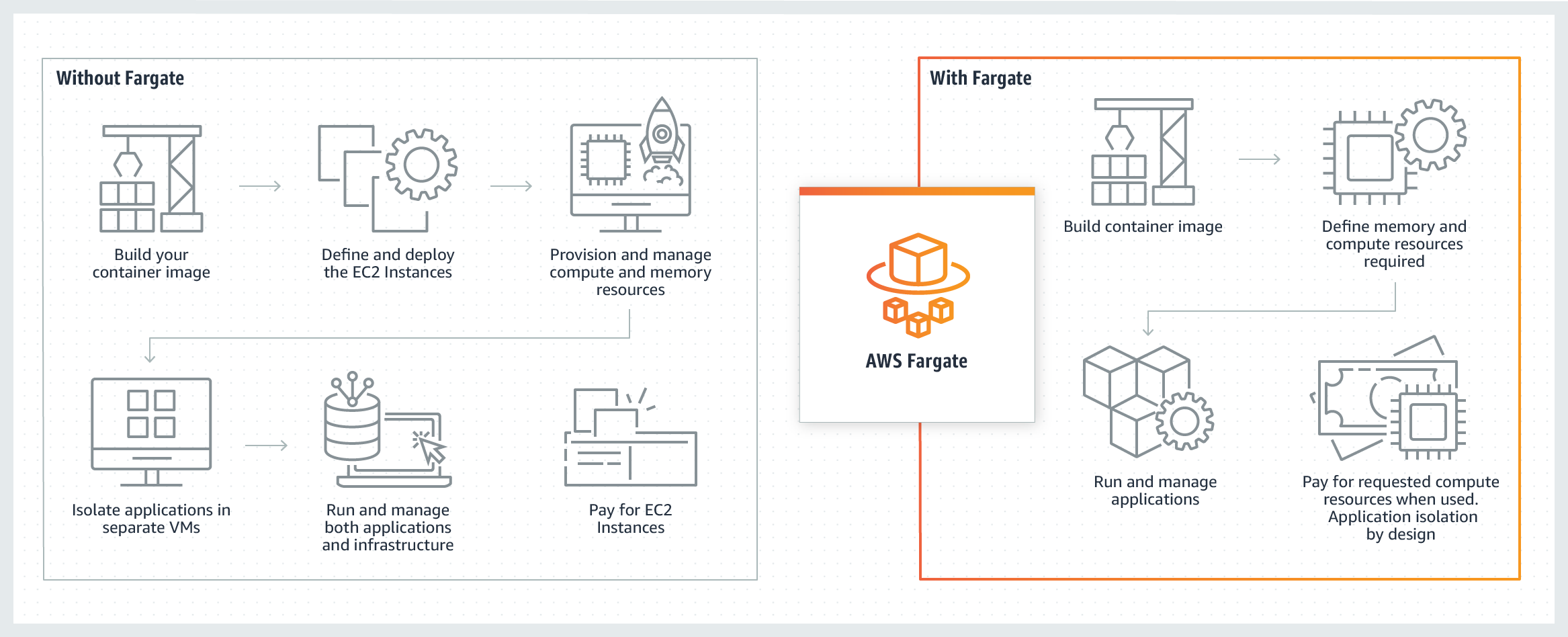

Fargate's Hidden Cost Traps

AWS markets Fargate as "pay only for what you use" but conveniently ignores the hidden costs that will bankrupt you:

Data Egress Costs Nobody Mentions

Our Fargate bill jumped from roughly $780 to $3,180 in one month because data egress costs aren't included in their calculator.

Moving 2TB of data between availability zones? That's $380-420 you didn't see coming.
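Transfer is billed per GB, and the rate depends on the path the bytes take (cross-AZ, through a NAT gateway, out to the internet) and on the region. Rather than trusting any number in a blog post, a quick sanity check is to back out the effective per-GB rate from your bill and compare it against the current AWS pricing page for each path. A small Python sketch; treating 2TB as 2,000 GB is an assumption:

```python
def transfer_cost(gb: float, rate_per_gb: float) -> float:
    """Cost in USD of moving `gb` gigabytes at a flat per-GB rate."""
    return gb * rate_per_gb

def implied_rate(bill_usd: float, gb: float) -> float:
    """Effective per-GB rate implied by a transfer line item on the bill."""
    if gb <= 0:
        raise ValueError("gb must be positive")
    return bill_usd / gb

# The figures quoted above: 2TB (assumed here to mean 2,000 GB) for $380-420.
low = implied_rate(380, 2_000)
high = implied_rate(420, 2_000)
print(f"implied rate: ${low:.2f}-${high:.2f} per GB")
# implied rate: $0.19-$0.21 per GB
```

Then run `transfer_cost` with each published per-GB rate to see which path's total lands closest to what you were actually charged.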

AWS billing surprises are common: misconfigured autoscaling can generate massive bills in hours.

ECS Task Definitions Are JSON Hell

Fargate requires [ECS task definitions](https://docs.aws.amazon.com/AmazonECS/latest/developerguide/task_definition_parameters.html) that make Kubernetes look simple.

Want to update an environment variable? Register a whole new task definition revision and redeploy the service. Zero hot reloading, zero developer experience.

Our task definition JSON is 200 lines for a simple web service.

Compare that to Cloud Run's single `gcloud run deploy` command.
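For a sense of what Fargate wants, here's a stripped-down task definition as a Python dict, plus a helper that changes one environment variable. Everything in it (family name, image URI, account ID, role ARN, the `LOG_LEVEL` variable) is a hypothetical placeholder, and a real production definition grows logging, secrets, and health check blocks on top of this. The point it illustrates: a registered task definition revision can't be edited, so even a one-variable change produces a whole new document.

```python
import copy
import json

# Hypothetical placeholders throughout: family, image, account ID, role ARN, env vars.
BASE_TASK_DEF = {
    "family": "web-api",
    "networkMode": "awsvpc",                 # Fargate only supports awsvpc
    "requiresCompatibilities": ["FARGATE"],
    "cpu": "256",                            # 0.25 vCPU, task-level, as a string
    "memory": "512",                         # MiB, task-level, as a string
    "executionRoleArn": "arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "123456789012.dkr.ecr.us-east-1.amazonaws.com/web-api:latest",
            "essential": True,
            "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
            "environment": [{"name": "LOG_LEVEL", "value": "info"}],
        }
    ],
}

def with_env_var(task_def: dict, container: str, name: str, value: str) -> dict:
    """Return a copy of `task_def` with one env var set on one container.

    The original dict is left untouched; the copy is what you'd register
    as the next revision.
    """
    new_def = copy.deepcopy(task_def)
    for c in new_def["containerDefinitions"]:
        if c["name"] == container:
            env = [e for e in c.get("environment", []) if e["name"] != name]
            env.append({"name": name, "value": value})
            c["environment"] = env
            return new_def
    raise KeyError(f"no container named {container!r}")

updated = with_env_var(BASE_TASK_DEF, "web", "LOG_LEVEL", "debug")
print(json.dumps(updated, indent=2))
```

You'd write that JSON to a file, register it with `aws ecs register-task-definition --cli-input-json file://taskdef.json`, and point the service at the new revision with `aws ecs update-service --cluster <cluster> --service <service> --task-definition web-api`.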

Cost Reality Check: Real Production Numbers

The pricing comparisons everyone cites are bullshit because they ignore:

- Load balancer costs: $18/month minimum on AWS, free on Cloud Run

- NAT Gateway fees: $45/month if you need outbound internet access

- Container registry storage: $0.10/GB/month adds up fast

- Data transfer charges: the real killer for high-traffic apps
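To stop those line items from hiding, fold them into the estimate up front. A rough Python sketch using the monthly figures from the list above as defaults; they're region-dependent and will drift, and the per-GB transfer rate is deliberately a required argument because it depends on your traffic path:

```python
from dataclasses import dataclass

@dataclass
class FargateExtras:
    """Monthly costs (USD) that sit outside the Fargate compute line."""
    transfer_per_gb: float          # depends on path and region: look it up, no default on purpose
    load_balancer: float = 18.0     # ALB floor from the list above
    nat_gateway: float = 45.0       # only if tasks need outbound internet access
    registry_per_gb: float = 0.10   # image storage, per GB-month

    def monthly(self, image_gb: float, transfer_gb: float, needs_nat: bool = True) -> float:
        total = self.load_balancer + image_gb * self.registry_per_gb
        total += transfer_gb * self.transfer_per_gb
        if needs_nat:
            total += self.nat_gateway
        return total

def all_in_monthly(compute_usd: float, extras: FargateExtras, image_gb: float,
                   transfer_gb: float, needs_nat: bool = True) -> float:
    """Fargate compute plus the extras that pricing comparisons tend to leave out."""
    return compute_usd + extras.monthly(image_gb, transfer_gb, needs_nat)

# Hypothetical inputs: $400 of compute, 50 GB of images, 500 GB of transfer.
# The 0.02 rate is a placeholder, not a price quote.
extras = FargateExtras(transfer_per_gb=0.02)
print(round(all_in_monthly(400, extras, image_gb=50, transfer_gb=500), 2))
```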

Our actual production costs for identical workloads:

- Cloud Run: $340/month for 100k requests/day (varies between $280-420 depending on traffic)

- Fargate: $580/month for the same workload (including hidden costs they don't mention)
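Normalizing those bills to cost per million requests makes them easier to hold up against your own traffic. A tiny Python sketch, assuming a 30-day month:

```python
def cost_per_million_requests(monthly_usd: float, requests_per_day: float, days: int = 30) -> float:
    """Monthly bill divided by monthly request volume, scaled to one million requests."""
    monthly_requests = requests_per_day * days
    return monthly_usd / monthly_requests * 1_000_000

for platform, bill in [("Cloud Run", 340), ("Fargate", 580)]:
    print(f"{platform}: ${cost_per_million_requests(bill, 100_000):.2f} per million requests")
# Cloud Run: $113.33 per million requests
# Fargate: $193.33 per million requests
```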

But here's the kicker that nearly got me fired: [Fargate autoscaling without limits](https://docs.aws.amazon.com/AmazonECS/latest/developerguide/service-auto-scaling.html) during a traffic spike cost us over $2,000 for one week.

Cloud Run handled a similar spike for around $90 extra.
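The boring fix for runaway scaling is a hard ceiling. ECS service auto scaling goes through Application Auto Scaling, where `MaxCapacity` on the scalable target caps how many tasks the scaling policies can add. The sketch below builds that request and bounds worst-case compute spend at the cap. Cluster and service names are hypothetical, and the per-vCPU-hour and per-GB-hour prices are placeholders you should replace with current Fargate pricing for your region:

```python
def scalable_target_request(cluster: str, service: str, min_tasks: int, max_tasks: int) -> dict:
    """Parameters for Application Auto Scaling's RegisterScalableTarget on an ECS service.

    Scaling policies won't push the service's DesiredCount above MaxCapacity.
    """
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("need 0 <= min_tasks <= max_tasks")
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }

def worst_case_compute_usd(max_tasks: int, vcpu_per_task: float, gb_per_task: float,
                           vcpu_hour_usd: float, gb_hour_usd: float, hours: float) -> float:
    """Upper bound on Fargate compute spend if the service sits at MaxCapacity for `hours`."""
    per_task_hour = vcpu_per_task * vcpu_hour_usd + gb_per_task * gb_hour_usd
    return max_tasks * per_task_hour * hours

# Placeholder prices, not a quote: replace with your region's Fargate rates.
VCPU_HOUR_USD = 0.04
GB_HOUR_USD = 0.005

request = scalable_target_request("prod-cluster", "web-api", min_tasks=2, max_tasks=20)
week_ceiling = worst_case_compute_usd(20, 0.5, 1.0, VCPU_HOUR_USD, GB_HOUR_USD, hours=24 * 7)
print(request)
print(f"max compute for a week pinned at the cap: ${week_ceiling:.2f}")
```

With boto3 installed, the dict passes straight through: `boto3.client("application-autoscaling").register_scalable_target(**request)`.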

The Reliability Reality

Cloud Run's Mysterious Failures

Silent job failures are common.

Our batch jobs would fail without logs, even with `maxRetries: 0`.

Google's troubleshooting guide basically says "have you tried turning it off and on again?"
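There's no cure for platform-side failures in your own code, but you can at least make sure your code's failures leave a trace. A Python sketch of a job wrapper that writes one JSON object per line to stdout (Cloud Run forwards stdout to Cloud Logging, which picks up the `severity` and `message` fields), tags each line with the `CLOUD_RUN_TASK_INDEX` and `CLOUD_RUN_TASK_ATTEMPT` environment variables that Cloud Run jobs set, and returns a non-zero exit code on an exception. It won't help if the container is killed outright, for example by an out-of-memory kill, since nothing in the process gets to run:

```python
import json
import os
import traceback
from typing import Callable

def log(severity: str, message: str, **fields) -> None:
    """Write one structured log line to stdout."""
    entry = {
        "severity": severity,
        "message": message,
        # Set by Cloud Run jobs for each task; the defaults cover local runs.
        "taskIndex": os.environ.get("CLOUD_RUN_TASK_INDEX", "0"),
        "taskAttempt": os.environ.get("CLOUD_RUN_TASK_ATTEMPT", "0"),
        **fields,
    }
    print(json.dumps(entry), flush=True)  # flush so the line isn't lost in a buffer on exit

def run_task(work: Callable[[], None]) -> int:
    """Run `work()`; log start/finish, and turn any exception into an ERROR line plus exit code 1."""
    log("INFO", "task started")
    try:
        work()
    except Exception as exc:
        log("ERROR", f"task failed: {exc!r}", traceback=traceback.format_exc())
        return 1
    log("INFO", "task finished")
    return 0
```

Wire it up at the bottom of your job script with `sys.exit(run_task(main))`, where `main` is your job body.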

Fargate's 502 Error Nightmare

This real-world debugging session took our team 3 days to solve.

ALB health checks failing, containers stuck in PENDING state, no clear error messages. The fix? Change one parameter in the target group configuration that wasn't in any documentation.
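The exact parameter isn't named here, so this doesn't try to reproduce that fix. What you can do is a back-of-envelope check on startup timing: a target only turns healthy after the app is listening and then passes `HealthyThresholdCount` consecutive checks spaced `HealthCheckIntervalSeconds` apart, and ECS only ignores load balancer health checks during the service's `healthCheckGracePeriodSeconds`. If startup plus that window outlasts the grace period, a failing check can get the task replaced mid-boot. A rough Python sketch of the arithmetic; it's a conservative approximation, not a model of exactly how ELB schedules checks:

```python
def seconds_until_healthy(startup_s: float, interval_s: float, healthy_threshold: int) -> float:
    """Rough worst case: app ready after `startup_s`, then `healthy_threshold` passing checks."""
    return startup_s + interval_s * healthy_threshold

def grace_period_is_enough(grace_s: float, startup_s: float, interval_s: float,
                           healthy_threshold: int) -> bool:
    """True if the grace period covers startup plus the passes needed to turn healthy."""
    return grace_s >= seconds_until_healthy(startup_s, interval_s, healthy_threshold)

# Example values only: a 45 s cold start (the top of the Fargate range quoted
# later in this post), checks every 30 s, 5 passes to turn healthy.
needed = seconds_until_healthy(startup_s=45, interval_s=30, healthy_threshold=5)
print(needed)                                  # 195
print(grace_period_is_enough(60, 45, 30, 5))   # False
```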

Performance Claims vs Reality

The benchmark numbers everyone quotes are lab conditions, not production reality:

Cold starts in production:

- Cloud Run: 2-8 seconds for our Node.js app (not the sub-100ms they claim in marketing)

- Fargate: 15-45 seconds, sometimes over a minute with distant container registries

Scaling speed:

- Cloud Run: scales fast, but then your database dies because 500 containers connect at once

- Fargate: Takes 8-12 minutes to scale up, longer to scale down
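The database half of that is arithmetic: every instance brings its own connection pool, so peak connections are roughly instances × pool size. 500 instances with a pool of 5 each is 2,500 connections, which can easily blow past the database's `max_connections`. A Python sketch for sizing an instance cap from the database's connection limit; the limit, the reserved headroom, and the pool size below are hypothetical values you'd take from your own setup:

```python
def max_instances_for_db(db_max_connections: int, reserved: int, pool_per_instance: int) -> int:
    """Largest instance count whose combined pools fit under the database's connection limit.

    `reserved` keeps headroom for admin sessions, migrations, and other services.
    """
    if pool_per_instance <= 0:
        raise ValueError("pool_per_instance must be positive")
    available = db_max_connections - reserved
    if available < pool_per_instance:
        raise ValueError("not enough connections for even one instance")
    return available // pool_per_instance

print(500 * 5)                            # 2500 connections at 500 instances
print(max_instances_for_db(200, 20, 5))   # 36
```

Feed the result to the `--max-instances` flag on `gcloud run deploy`; `--concurrency` controls how many requests share each instance, and therefore each pool.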

What Actually Works

After burning through $12k in surprise bills and debugging containers at 3am, here's what we learned:

Choose Cloud Run if:

- You can live with VPC networking limitations

- Your app handles traffic spikes gracefully with concurrency controls

- You prefer fighting Google's mysterious failures over AWS's complex configuration

Choose Fargate if:

- You have AWS expertise to navigate ECS complexities

- You can stomach hidden costs for enterprise features

- You need unlimited execution time for batch jobs

Both platforms will screw you over in different ways. The choice isn't which one is better; it's which flavor of pain you can tolerate while your containers explode from 1 to 500 at 3am and you're frantically trying to figure out why everything's on fire. I've been through both circles of hell and lived to warn you about it. The breakdown below shows where each platform will stab you in the back.