What is Weights & Biases?

Sick of tracking experiments with spreadsheets? Weights & Biases (W&B) is an ML experiment tracking platform that tracks your shit without falling over. If you've ever lost track of which hyperparameters produced your best model, or spent hours trying to reproduce results from three weeks ago, W&B solves that problem.

Started by engineers who got tired of managing experiments with spreadsheets and folder names like "model_final_v2_ACTUALLY_FINAL", W&B tracks your training runs automatically. The web UI doesn't look like it was built in 2005, which is already better than most ML tools. Big companies use it because it scales from solo PhD projects to enterprise teams without completely falling apart.

What W&B Actually Does

Experiment Tracking: Logs metrics, hyperparameters, and system info as your models train. Finally you can compare hundreds of runs side-by-side instead of squinting at terminal output or trying to remember what you changed between runs.

Model Registry: Stores your trained models so you can actually find the model you deployed to production. No more "model_best.pkl" vs "model_best_final.pkl" debates.

Dataset Versioning: Tracks dataset changes. Useful when your data team updates the training set and suddenly your model performance tanks.

Hyperparameter Sweeps: Automatically tries different parameter combinations. Beats manually editing config files and forgetting what you tested.

Integration Reality Check

W&B works with the usual suspects: PyTorch, TensorFlow, Hugging Face, scikit-learn. Adding wandb.init() to your training script is easy. Getting it to work when PyTorch 2.1.0 breaks their auto-logging, your custom data loader throws NoneType errors, and your Docker container can't reach their servers because of networking bullshit? That's where you'll spend your weekend and most of Monday morning.

The integrations mostly work as advertised, until you need distributed training with a custom dataset and suddenly you're debugging TypeError: 'NoneType' object is not subscriptable at 2AM. Their docs cover the happy path well enough, but anything remotely complex means digging through 500 GitHub issues to find the one comment that actually fixes your problem.

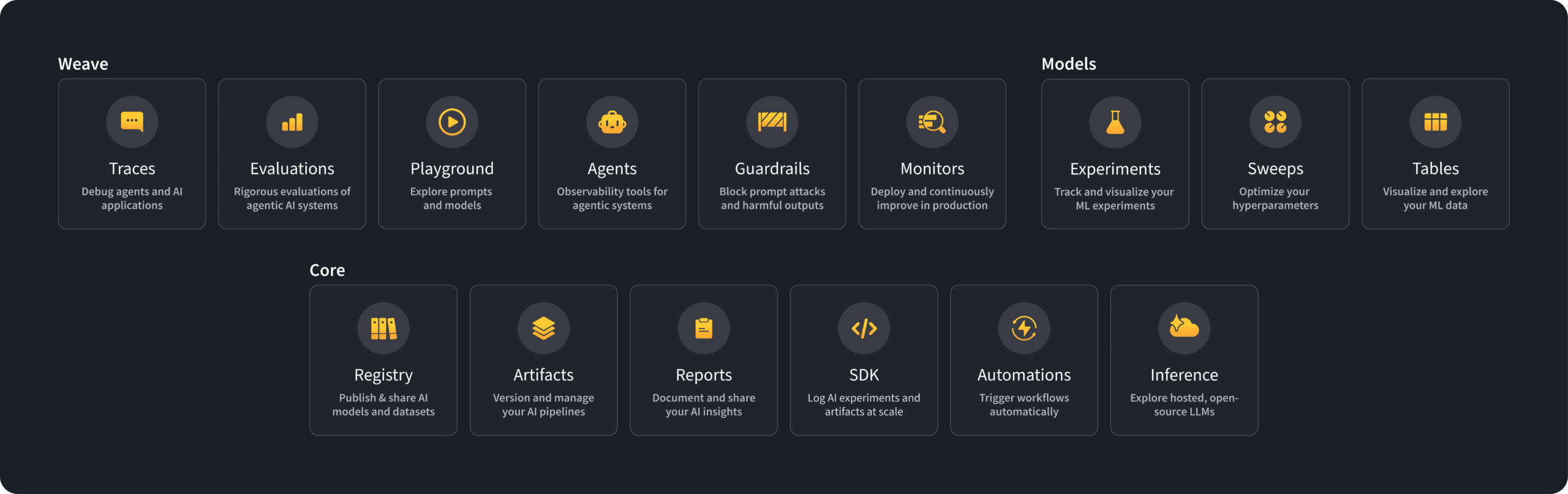

Two Main Products

W&B Models: The original experiment tracking everyone uses. Solid for traditional ML workflows.

W&B Weave: Their newer LLM and AI application platform. Still beta but doesn't completely suck for prompt engineering and LLM evaluation work.