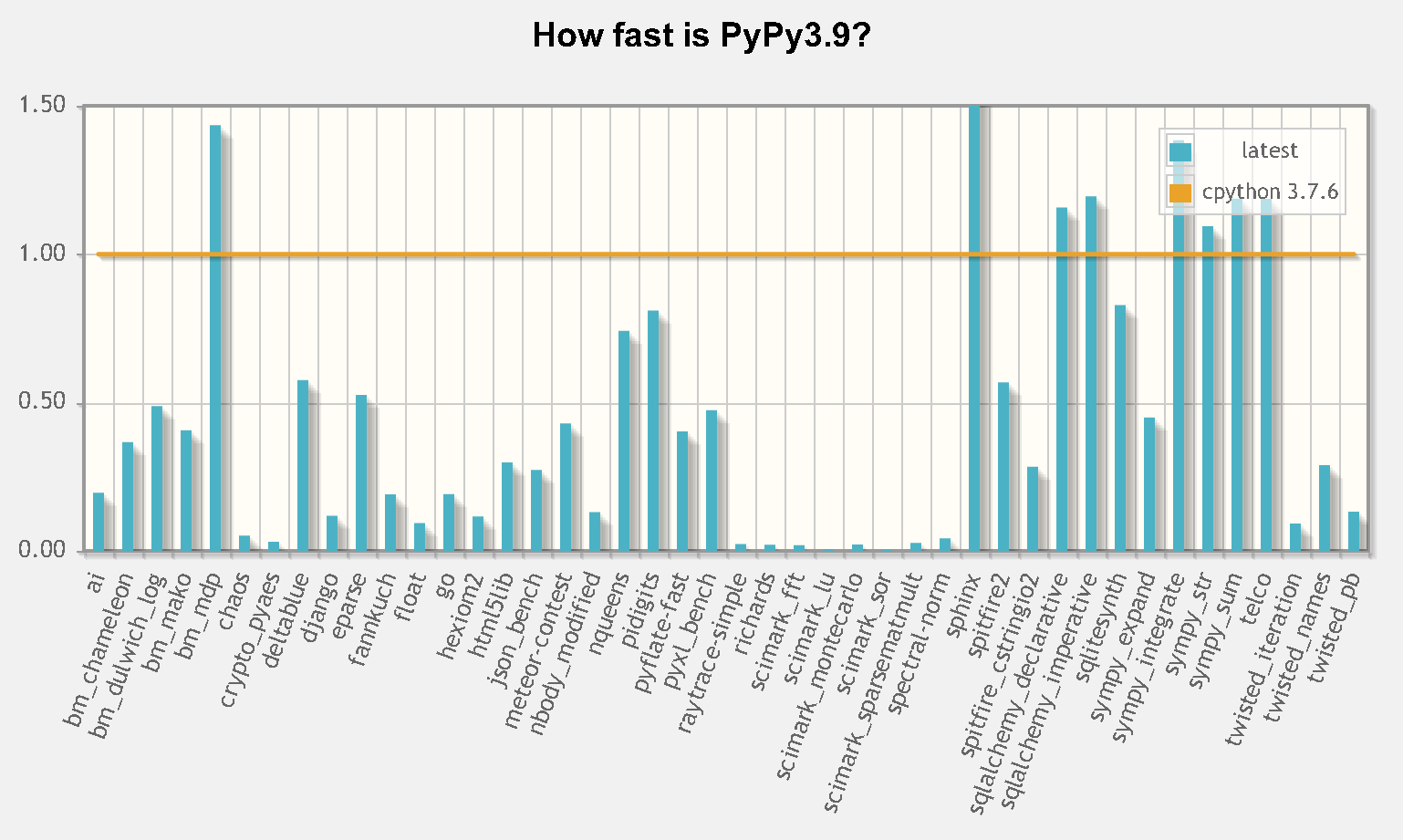

PyPy is a Python interpreter that compiles your code while it runs. Like CPython, it starts out interpreting bytecode, but it also watches for hot loops and compiles them to machine code. The result is usually about 3x faster execution on pure Python code.

PyPy 7.3.20 came out in July 2025 and finally supports Python 3.11. It also still supports Python 2.7 because some legacy code just won't die. Python isn't inherently slow - CPython is just designed for simplicity over speed.

How PyPy Actually Works

The JIT compiler runs alongside your Python code. It profiles execution to find hot spots - code that runs repeatedly. Those hot paths get compiled to native machine code, which runs much faster than interpreted bytecode.

You'll see about 3x speedup on CPU-bound pure Python code. Loops, math, and algorithmic work benefit the most. Web applications speed up too if they're doing actual computation rather than just database calls.
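To check that claim on your own hardware, here's a minimal sketch of the kind of workload that tends to benefit: a tight, pure Python loop with no C extensions involved. The `count_primes` and `time_call` names are made up for illustration, and the trial-division algorithm is deliberately naive so the interpreter has real work to do. Run the same file under both interpreters and compare the numbers yourself.

```python
import platform
import time


def count_primes(limit):
    """Count primes below `limit` using naive trial division."""
    count = 0
    for n in range(2, limit):
        is_prime = True
        d = 2
        while d * d <= n:
            if n % d == 0:
                is_prime = False
                break
            d += 1
        if is_prime:
            count += 1
    return count


def time_call(func, *args):
    """Return (result, elapsed_seconds) for a single call of func(*args)."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    result, elapsed = time_call(count_primes, 100_000)
    # python_implementation() reports "CPython" or "PyPy"
    print(f"{platform.python_implementation()}: {result} primes in {elapsed:.2f}s")
```

Timings vary by machine, so no expected output is shown. If your real workload spends most of its time waiting on a database, a benchmark like this won't tell you much.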

The Memory Trade-off

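PyPy eats roughly 40% more RAM than CPython. The JIT needs space to store compiled machine code, and the garbage collector works differently: PyPy uses a tracing, generational collector that frees memory in batches, while CPython's reference counting frees most objects the moment nothing points to them. Your monitoring alerts will freak out because memory usage is spiky instead of the smooth slopes you get with CPython.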

I'm running PyPy on our API servers. Memory usage looks like a broken heart monitor - these weird spikes that look like memory leaks but aren't. Our ops team panicked until we explained the GC patterns. Took three meetings and a lot of coffee.
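One way to tell a sawtooth from a real leak is to look at the floor of the graph instead of the peaks. The sketch below assumes you already have periodic memory samples (RSS in MB, say) from your monitoring system. The `looks_like_leak` helper, the window size, and the 5% tolerance are all assumptions I picked for illustration, not anything PyPy ships.

```python
def window_minima(samples, window):
    """Split samples into consecutive full windows and return each window's minimum."""
    return [
        min(samples[i:i + window])
        for i in range(0, len(samples) - window + 1, window)
    ]


def looks_like_leak(samples, window=5, tolerance=1.05):
    """Return True if the memory floor rises in every successive window.

    GC spikes raise the peaks but leave the floor roughly flat;
    a leak pushes the floor up over time.
    """
    floors = window_minima(samples, window)
    if len(floors) < 3:
        return False  # not enough data to judge either way
    return all(
        later > earlier * tolerance
        for earlier, later in zip(floors, floors[1:])
    )


# Spiky but stable: memory climbs, the GC runs, and usage drops back to ~100.
sawtooth = [100, 180, 260, 340, 110] * 4
# Steady growth: the floor itself keeps climbing.
leak = [100 + 20 * i for i in range(20)]

print(looks_like_leak(sawtooth))  # False
print(looks_like_leak(leak))      # True
```

Alerting on the floor rather than on raw usage is a design choice, not a rule. It trades slower detection for far fewer false alarms.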

C Extension Compatibility Hell

This is where PyPy becomes a pain in the ass. Packages with C extensions are where things break. numpy, pandas, lxml, psycopg2 - anything built on CPython's C API has to go through PyPy's cpyext emulation layer, so it may fail to install, crash at runtime, or just run slower than it does on CPython.

pip install numpy might work, might not. You'll spend hours figuring out why your deployment suddenly breaks on a package that's worked fine for years. It took us two weeks to replace all the C extensions in our stack. PyPy's preferred way to call C code is CFFI, but that means finding or writing CFFI-based versions of everything you need.
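Before committing to a migration, it's cheap to run an import smoke test under the PyPy interpreter you plan to deploy. This is a rough sketch: `check_imports` is a hypothetical helper, and the dependency list is a placeholder for your own. It only catches failures at import time. A package that imports cleanly can still crash or crawl later.

```python
import importlib
import platform


def check_imports(module_names):
    """Try to import each module; map name -> None on success or an error string."""
    results = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            results[name] = None
        except Exception as exc:
            # ImportError is the usual failure, but broken native builds
            # can raise other exception types at import time too.
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    # Placeholder dependency list; swap in the packages your stack uses.
    deps = ["json", "numpy", "lxml", "psycopg2"]
    print(f"Interpreter: {platform.python_implementation()} {platform.python_version()}")
    for name, error in check_imports(deps).items():
        status = "OK" if error is None else f"FAILED - {error}"
        print(f"{name:12} {status}")
```

Run it in a fresh virtualenv created with PyPy after installing your requirements, so install failures and import failures show up separately.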

When PyPy Actually Helps

PyPy works well for:

- Long-running web services where the JIT has time to optimize hot paths

- CPU-intensive pure Python code that runs for more than a few seconds

- Algorithmic work, simulations, and computational tasks

- Backend services that do actual computation rather than just database queries

Skip PyPy for:

- Scripts that run and exit quickly (JIT warmup overhead kills any gains)

- Data science workloads heavy on numpy/pandas (you lose the performance benefits)

- Anything that depends heavily on C extensions you can't replace

The JIT compiler analyzes your code at runtime and optimizes the hot paths. Performance improves as the application runs, until the hot paths have been compiled and things level off. Perfect for long-running services, useless for short scripts.
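Warmup is easy to see for yourself by timing the same function over several rounds. Here's a minimal sketch, with `checksum` and `time_rounds` as made-up names and an arbitrary workload. On PyPy I'd expect the first round or so to be the slow one while the JIT does its work. On CPython the rounds should come out roughly flat.

```python
import time


def checksum(n):
    """Arbitrary CPU-bound loop: sum of squares modulo a prime."""
    total = 0
    for i in range(n):
        total = (total + i * i) % 1_000_003
    return total


def time_rounds(func, arg, rounds=10):
    """Call func(arg) `rounds` times and return the elapsed seconds of each call."""
    timings = []
    for _ in range(rounds):
        start = time.perf_counter()
        func(arg)
        timings.append(time.perf_counter() - start)
    return timings


if __name__ == "__main__":
    for i, seconds in enumerate(time_rounds(checksum, 50_000), start=1):
        print(f"round {i:2}: {seconds * 1000:.2f} ms")
```

If your whole script finishes in about the time the first round takes, the JIT never gets a chance to pay for itself.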

How does PyPy actually stack up against other Python implementations? The benchmarks look great, but reality is messier.