PyPI is the official package repo where Python packages live and sometimes die. With 665k+ projects as of September 2025, it's basically a massive digital hoarder's paradise where someone's weekend project sits next to enterprise-grade libraries.

What Happens When You Run pip install

When you type `pip install requests`, here's what actually happens:

- pip hits PyPI's servers (usually works)

- Downloads the package metadata (usually works)

- Figures out dependencies (this is where things get spicy)

- Downloads everything (pray your internet doesn't die)

- Installs it (hope you're not on Windows with a C extension)
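You can poke at the first two steps yourself. PyPI publishes per-project metadata as JSON at `https://pypi.org/pypi/<project>/json`. The sketch below fetches that document and pulls out the latest version and its declared dependencies (`info.requires_dist`). Treat it as an illustration, not a description of pip's internals: pip itself talks to PyPI's Simple repository API and reads metadata from the distribution files. Dropping anything marked `extra ==` is a simplification that keeps only the core dependencies.

```python
import json
import urllib.request
from typing import Any

# PyPI's per-project JSON metadata endpoint.
PYPI_JSON_URL = "https://pypi.org/pypi/{project}/json"


def fetch_metadata(project: str, timeout: float = 10.0) -> dict[str, Any]:
    """Download the JSON metadata document PyPI publishes for a project."""
    url = PYPI_JSON_URL.format(project=project)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize(meta: dict[str, Any]) -> dict[str, Any]:
    """Extract the project name, latest version, and core dependencies."""
    info = meta["info"]
    # requires_dist is None when a release declares no dependencies at all.
    requires = info.get("requires_dist") or []
    # Simplification: skip optional extras such as 'PySocks>=1.5.6; extra == "socks"'.
    core = [req for req in requires if "extra ==" not in req]
    return {"name": info["name"], "version": info["version"], "dependencies": core}
```

`summarize(fetch_metadata("requests"))` needs network access. The dependency list it returns is what the resolver then has to chase recursively, which is where things get spicy.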

The whole thing works great until it doesn't. Then you get to play detective with error messages like `error: Microsoft Visual C++ 14.0 is required` or my personal favorite: `Failed building wheel for [some-random-dependency-you-never-heard-of]`.

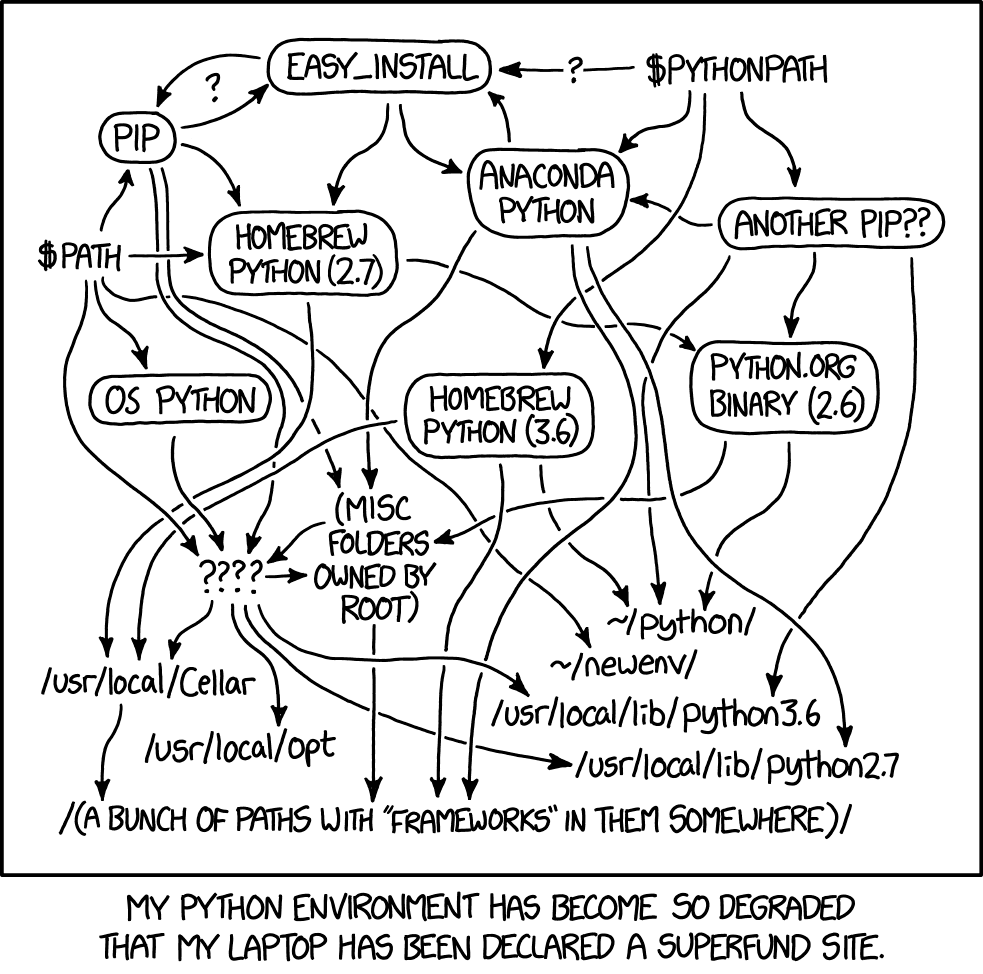

The Dependency Hell Reality

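PyPI hosts 7.4 million releases because Python developers have commitment issues. Every version bump, down to the smallest patch, creates a new release, which is great until you discover that package-a 1.2.3 requires package-b >=2.0 but package-c (which you also need) only works with package-b <2.0. No version of package-b satisfies both, so there is nothing valid to install. The Python packaging docs call this "dependency resolution", which is fancy corporate speak for "good luck figuring it out." Tools like pip-tools and Poetry exist specifically to deal with this dependency hell: they resolve once and pin the result (a compiled requirements.txt or a poetry.lock file) so every install gets the same versions, though no tool can satisfy constraints that genuinely contradict each other.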
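The package-a / package-c standoff boils down to intersecting version ranges. This toy check filters the available package-b versions through every constraint at once, and an empty result is the conflict. The packages and version numbers are hypothetical, and versions are treated as plain dotted integers with none of the pre-release or other PEP 440 edge cases a real resolver handles.

```python
from typing import Callable

Version = tuple[int, ...]
Constraint = Callable[[Version], bool]


def parse(version: str) -> Version:
    """Turn '2.0.1' into (2, 0, 1); plain numeric versions only."""
    return tuple(int(part) for part in version.split("."))


def satisfying(candidates: list[str], constraints: dict[str, Constraint]) -> list[str]:
    """Return the candidate versions that every requirer accepts."""
    return [v for v in candidates if all(ok(parse(v)) for ok in constraints.values())]


# Hypothetical versions of package-b available on the index.
available_b = ["1.8.0", "1.9.2", "2.0.0", "2.1.0"]

# What each package in the environment demands of package-b.
demands: dict[str, Constraint] = {
    "package-a 1.2.3": lambda v: v >= (2, 0),  # package-b >=2.0
    "package-c": lambda v: v < (2, 0),         # package-b <2.0
}
```

`satisfying(available_b, demands)` returns `[]`. A real resolver backtracks across many packages at once instead of checking one, but when the constraints contradict each other it ends up at the same empty set and reports a conflict.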

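This is when you learn about virtual environments the hard way. Pro tip: learn `python -m venv myenv`, which gives each project its own isolated set of installed packages so one project's pins can't break another's, or suffer forever.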
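The same thing is available from Python through the standard library's `venv` module. This sketch creates an environment, finds its interpreter, and asks that interpreter whether it's isolated (inside a venv, `sys.prefix` differs from `sys.base_prefix`). The helper names are made up. The demo skips pip installation to stay fast and offline.

```python
import os
import subprocess
import tempfile
import venv
from pathlib import Path


def make_env(env_dir: Path, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the path to its interpreter."""
    # `python -m venv` uses symlinks on non-Windows platforms; mirror that here.
    venv.create(env_dir, with_pip=with_pip, symlinks=(os.name != "nt"))
    # Windows puts executables in Scripts\, everything else uses bin/.
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def is_isolated(python: Path) -> bool:
    """Ask the environment's interpreter whether it is running inside a venv."""
    out = subprocess.run(
        [str(python), "-c", "import sys; print(sys.prefix != sys.base_prefix)"],
        capture_output=True, text=True, check=True,
    )
    return out.stdout.strip() == "True"


def demo() -> bool:
    """Build a throwaway environment (without pip) and confirm it is isolated."""
    with tempfile.TemporaryDirectory() as tmp:
        python = make_env(Path(tmp) / "myenv", with_pip=False)
        return python.exists() and is_isolated(python)
```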

When Installation Goes Wrong

Common scenarios that will ruin your day:

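Windows C Extension Hell: Installing anything with C extensions on Windows is like playing Russian roulette whenever pip can't find a prebuilt wheel for your Python version and falls back to compiling from source. NumPy on a Python release so new that wheels haven't shipped yet? Good luck. Pandas from source? Better pray you have Visual Studio's C++ build tools installed. Scientific packages? Just use conda, which ships prebuilt binaries, and save yourself 3 hours of Stack Overflow diving. The Python wiki has the gory details about Windows compilers.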
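Here's roughly why the compiler errors show up. pip looks for a wheel built for your platform and only falls back to the source distribution (sdist) when none fits, and building an sdist that contains C code needs a working compiler. The sketch below models that choice for a hypothetical package `fastmath`. It only looks at platform tags and ignores the Python/ABI tags and the tag priority order pip really uses (pip builds its tag list on top of the `packaging` library), so treat it as a simplification.

```python
from typing import Optional


def platform_tags(wheel_filename: str) -> set[str]:
    """Return the platform tag(s) of a wheel filename.

    Wheel names end in -{python tag}-{abi tag}-{platform tag}.whl; a compressed
    tag set like 'macosx_10_9_x86_64.macosx_11_0_arm64' means "any of these".
    """
    stem = wheel_filename[: -len(".whl")]
    return set(stem.split("-")[-1].split("."))


def pick_file(filenames: list[str], supported: set[str]) -> tuple[Optional[str], bool]:
    """Prefer a wheel for this platform, otherwise fall back to the sdist.

    Returns (filename, needs_build). needs_build=True is the path that ends in
    a compiler error when a C extension meets a machine without build tools.
    """
    for name in filenames:
        if name.endswith(".whl") and platform_tags(name) & supported:
            return name, False
    for name in filenames:
        if name.endswith(".tar.gz"):
            return name, True
    return None, False
```

If you'd rather fail fast than watch a compile go wrong, `pip install --only-binary=:all: <package>` tells pip to refuse sdists entirely, so you get a "no matching distribution" error up front instead.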

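M1 Compatibility Pain: `ERROR: No matching distribution found for tensorflow==2.10.0`. Welcome to the future, where your shiny new hardware breaks half the Python ecosystem and you get cryptic "no matching distribution" errors instead of helpful messages like "this package doesn't support ARM64 yet." The error happens because pip only considers files whose tags match your interpreter and machine; if a release has no arm64 (or universal2) wheel and no source distribution to fall back on, there is simply nothing to match. Universal2 wheels, which bundle x86_64 and arm64 code in one file, are supposed to fix this, but adoption is slow.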
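The "no matching distribution" error on Apple Silicon usually comes down to platform tags. A compiled wheel runs natively on an M1 only if its macOS platform tag says `arm64` or `universal2`. Pure-Python wheels tagged `any` work everywhere, and this check ignores them. The helper names and sample filenames are made up for illustration.

```python
import platform
import sysconfig

# Endings of macOS platform tags that include native Apple Silicon code.
ARM64_MAC_TAG_ENDINGS = ("arm64", "universal2")


def wheel_platforms(wheel_filename: str) -> list[str]:
    """Platform tags at the end of a wheel filename (dot-separated if compressed)."""
    return wheel_filename[: -len(".whl")].split("-")[-1].split(".")


def runs_on_apple_silicon(wheel_filename: str) -> bool:
    """True if the wheel ships native arm64 code for macOS (arm64 or universal2)."""
    return any(
        tag.startswith("macosx_") and tag.endswith(ARM64_MAC_TAG_ENDINGS)
        for tag in wheel_platforms(wheel_filename)
    )


def describe_interpreter() -> str:
    """Report the platform string and CPU architecture of the running Python."""
    return f"{sysconfig.get_platform()} / {platform.machine()}"
```

If `platform.machine()` reports `x86_64` on an M1, you're probably running an Intel build of Python under Rosetta, and pip will go looking for x86_64 wheels instead.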

Linux Dependency Chains: Even on Linux, you'll occasionally hit that one package with no prebuilt (manylinux) wheel for your setup, so pip compiles it from source against 47 system libraries and their headers, none of which are documented properly.
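Before fighting a from-source build on Linux, it can help to check which shared libraries your system can actually find. This sketch uses the standard library's `ctypes.util.find_library`. The library list is a hypothetical example. It only checks for runtime libraries, not the `-dev`/`-devel` header packages a compile also needs.

```python
from ctypes.util import find_library

# Hypothetical system requirements for an imaginary package; real lists come
# from the package's docs, if you're lucky.
NEEDED = ["ssl", "ffi", "xml2", "jpeg"]


def missing_system_libs(names: list[str]) -> list[str]:
    """Return the short library names (e.g. 'ssl' for libssl) the loader can't locate.

    Only shared libraries are checked; missing development headers will still
    break a build even when this list comes back empty.
    """
    return [name for name in names if find_library(name) is None]
```

Running `missing_system_libs(NEEDED)` before `pip install` gives you a list to feed to your distro's package manager instead of a wall of compiler output.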

Package Quality: It's Complicated

PyPI does basic checks on what gets uploaded (metadata validation and security scanning), but it doesn't review code quality, so you still need to vet packages yourself. "Popular" doesn't always mean "good": I've seen packages with millions of downloads that are essentially abandoned. Tools like Safety and pip-audit help by checking your installed dependencies against databases of known vulnerabilities.
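pip-audit and Safety do the real work against real vulnerability databases, but the core idea is "compare what's installed against known-bad versions." This sketch lists installed distributions with the standard library's `importlib.metadata` and checks them against a hand-written advisory table. The table, the package name `examplelib`, the exact-version matching (real advisories use version ranges), and the simple lowercasing of names are all simplifications.

```python
from importlib.metadata import distributions


def installed_versions() -> dict[str, str]:
    """Map lowercased distribution name -> version for everything this Python can see."""
    versions: dict[str, str] = {}
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:  # skip broken installs that lack a Name field
            versions[name.lower()] = dist.version
    return versions


def flag_vulnerable(installed: dict[str, str], advisories: dict[str, set[str]]) -> list[str]:
    """Return 'name==version' for installed packages whose exact version has an advisory."""
    return sorted(
        f"{name}=={version}"
        for name, version in installed.items()
        if version in advisories.get(name, set())
    )
```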

The reality check: Look at the GitHub repo, check when it was last updated, read the issues. If the maintainer hasn't responded to bug reports in 6 months, find an alternative. I learned this the hard way when a "popular" package with millions of downloads broke our production deployment because it had an unpatched security vulnerability that sat there for months. Resources like Libraries.io help track package health.
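Part of that reality check can be automated. The sketch below reads the `releases` section of PyPI's JSON metadata (served at `https://pypi.org/pypi/<project>/json`) and flags projects with no upload in roughly six months. It assumes each file entry carries an `upload_time` string in UTC. The last upload date is only a proxy: the maintainer-responsiveness test above still means reading the issue tracker.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Optional


def last_upload(meta: dict[str, Any]) -> Optional[datetime]:
    """Most recent file upload across all releases in a PyPI JSON document."""
    times = [
        # Assumption: upload_time looks like '2024-03-01T08:30:00' and is UTC.
        datetime.fromisoformat(f["upload_time"]).replace(tzinfo=timezone.utc)
        for files in meta.get("releases", {}).values()
        for f in files
    ]
    return max(times, default=None)


def looks_stale(meta: dict[str, Any], now: datetime, max_age_days: int = 182) -> bool:
    """Flag a project with no upload in about six months, or no uploads at all."""
    latest = last_upload(meta)
    return latest is None or now - latest > timedelta(days=max_age_days)
```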

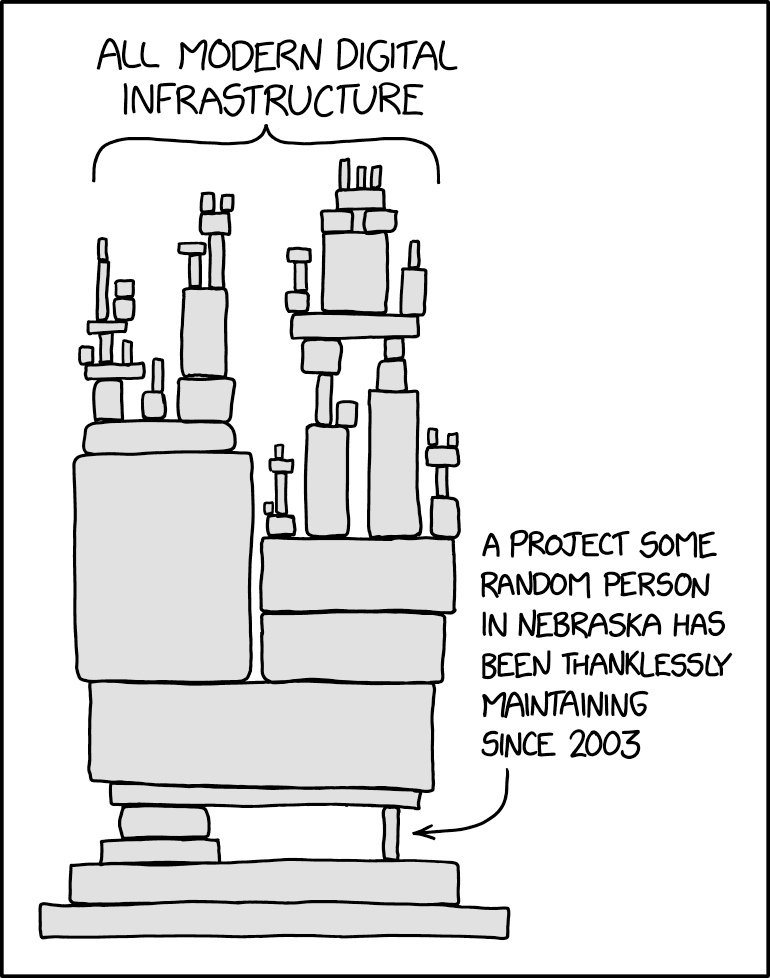

Why PyPI Usually Works

Despite all the chaos, PyPI actually works pretty well. It hosts 29.9 TB of package files without falling over, which is impressive. TensorFlow alone takes up 404 GB of that, and somehow they manage to serve millions of downloads daily without everything catching fire. The Warehouse project powers the modern PyPI infrastructure.

They use Fastly as a CDN, so your packages download fast from anywhere. They've got redundant storage with Backblaze B2 and AWS S3 fallback, because nobody wants to explain to angry developers why pip is broken worldwide. The infrastructure details are surprisingly transparent.
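"Redundant storage with a fallback" is a general pattern, not something unique to PyPI. Here's a minimal sketch of the read path with made-up backends standing in for a primary and a secondary store. It is not Warehouse's code, and a real system also layers in retries, timeouts, and CDN caching.

```python
from typing import Callable, Sequence

# A backend takes a file path and returns its bytes, raising OSError on failure.
Fetcher = Callable[[str], bytes]


def fetch_with_fallback(path: str, backends: Sequence[tuple[str, Fetcher]]) -> tuple[str, bytes]:
    """Try each storage backend in order; return (backend name, data) from the first that works."""
    errors: list[str] = []
    for name, fetch in backends:
        try:
            return name, fetch(path)
        except OSError as exc:  # ConnectionError and TimeoutError are OSError subclasses
            errors.append(f"{name}: {exc}")
    raise OSError("all backends failed: " + "; ".join(errors))
```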