Got woken up last weekend because containers couldn't talk to each other. API was throwing connection refused errors, users were pissed, and we were hemorrhaging money. I SSH'd into the host to debug and realized - of course - no tcpdump installed. So there I was, deciding between spending 20 minutes installing debugging tools while everything burned, or just running netshoot.

Nicola Kabar built this because he got tired of the same bullshit I was dealing with - debugging containers without proper tools. It's a 200MB container image packed with actual networking utilities, so you can attach it to any broken container's network namespace and figure out what's wrong.

Real Production Problems

Last month our API started throwing connection errors to PostgreSQL. It took like 2 hours just to work out that the actual error was ECONNREFUSED 127.0.0.1:5432, because the logs were garbage. The database looked fine - at least kubectl get pods said it was Running, which tells you the container started and absolutely nothing about whether anything can reach it. I spent way too long chasing bullshit: first I thought it was the connection pool, then I blamed the AWS load balancer, then I restarted half our infrastructure.

Without netshoot, you're fucked: you kubectl exec into containers that have zero useful tools. Ours are stripped down to nothing because security.

With netshoot I could finally debug:

kubectl debug api-pod-xyz -it --image=nicolaka/netshoot

Turns out DNS was fucked somehow. Took me 2 hours and 47 minutes to figure out because DNS was the last thing I checked, like an idiot. It's always DNS but you never check DNS first.
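Here's roughly the order I should have checked things in, as a minimal sketch to paste into the netshoot shell. The function name `triage` and the service name in the example are made up for illustration, and I'm assuming the `dig` and `nc` builds in the image accept these flags (they do in the versions I've used).

```shell
# triage HOST PORT: check DNS first, then TCP.
# Run this inside the netshoot debug container, not on your laptop.
triage() {
  host="$1"; port="$2"

  echo "== resolver config =="
  cat /etc/resolv.conf 2>/dev/null || echo "(no /etc/resolv.conf)"

  echo "== DNS lookup for $host =="
  # +search makes dig honor the search domains in resolv.conf,
  # which it ignores by default.
  addrs="$(dig +search +short "$host")"
  if [ -z "$addrs" ]; then
    echo "DNS: no answer for $host"
    return 1
  fi
  echo "$addrs"

  echo "== TCP connect to $host:$port =="
  # -z: connect without sending data, -v: report the result, -w 3: 3 second timeout
  nc -zv -w 3 "$host" "$port"
}

# Example with a hypothetical service name:
#   triage postgres.default.svc.cluster.local 5432
```

If the DNS step comes back empty, stop there. No amount of poking at connection pools or load balancers fixes a name that doesn't resolve.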

What's Actually In This Thing

Packet Analysis: tcpdump for seeing what's hitting the wire, termshark for a terminal-based Wireshark, and tshark for command-line packet inspection. I've caught MTU issues breaking jumbo frames and load balancers silently dropping connections with these tools.
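For the tcpdump part, this is about the smallest capture that's still useful. It's a sketch: `sniff_port` is just a name I picked, and depending on how your debug container was started, tcpdump may need extra capabilities (it wants CAP_NET_RAW) before it'll capture anything.

```shell
# sniff_port PORT [COUNT]: print the first COUNT packets (default 50)
# going to or from TCP port PORT, then exit.
sniff_port() {
  port="$1"; count="${2:-50}"
  # -i any: every interface in this network namespace
  # -nn:    no reverse DNS or port-name lookups (you don't want to depend on DNS here)
  # -c:     stop after COUNT packets
  tcpdump -i any -nn -c "$count" "tcp port ${port}"
}

# Example: watch PostgreSQL traffic
#   sniff_port 5432
```

What to look for: a SYN answered immediately by a RST means nothing is listening at that address, which is what connection refused looks like on the wire. A SYN that gets retransmitted with no reply at all means something in between is dropping it.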

Connectivity Testing: curl, telnet, nc (netcat), and nmap for testing if services are reachable. ping and traceroute for layer 3 stuff. These saved me when Istio was randomly black-holing 5% of requests - turns out the service mesh config was screwed.

DNS Debugging: dig, nslookup, host, and drill for when DNS breaks. Which is constantly. Kubernetes DNS fails intermittently in ways that make you question your life choices.

Performance Testing: iperf3 for bandwidth, fortio for HTTP load testing. Used these to prove our "network issues" were actually the application being slow, not the network.
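The "is it the network or the app" argument comes down to running both tools back to back. A rough sketch, assuming you already have `iperf3 -s` running somewhere on the far side; the function name, the IP, and the URL in the example are placeholders, and the load numbers are arbitrary.

```shell
# net_vs_app SERVER_IP URL: measure raw network throughput, then app latency.
net_vs_app() {
  server="$1"; url="$2"

  # Raw TCP throughput for 10 seconds.
  # Needs `iperf3 -s` already listening on SERVER_IP.
  iperf3 -c "$server" -t 10

  # 50 requests per second for 30 seconds against the app itself.
  # fortio prints latency percentiles when it finishes.
  fortio load -qps 50 -t 30s "$url"
}

# Example with made-up targets:
#   net_vs_app 10.0.0.7 http://api.internal:8080/health
```

If iperf3 shows healthy throughput between the two pods and fortio still shows ugly tail latency, the network is off the hook.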

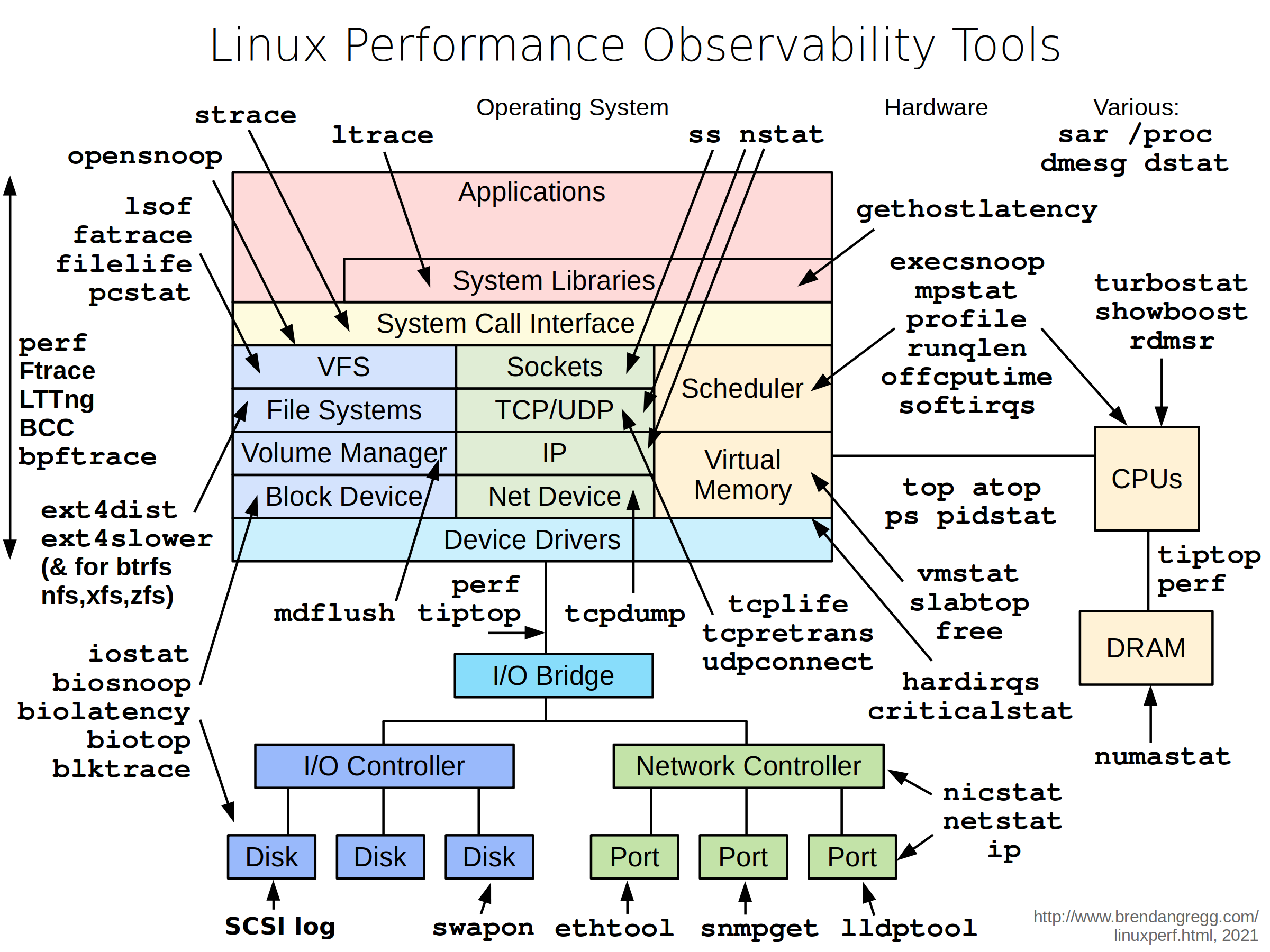

Brendan Gregg's tool diagram - if you've seen this before, you know why netshoot exists

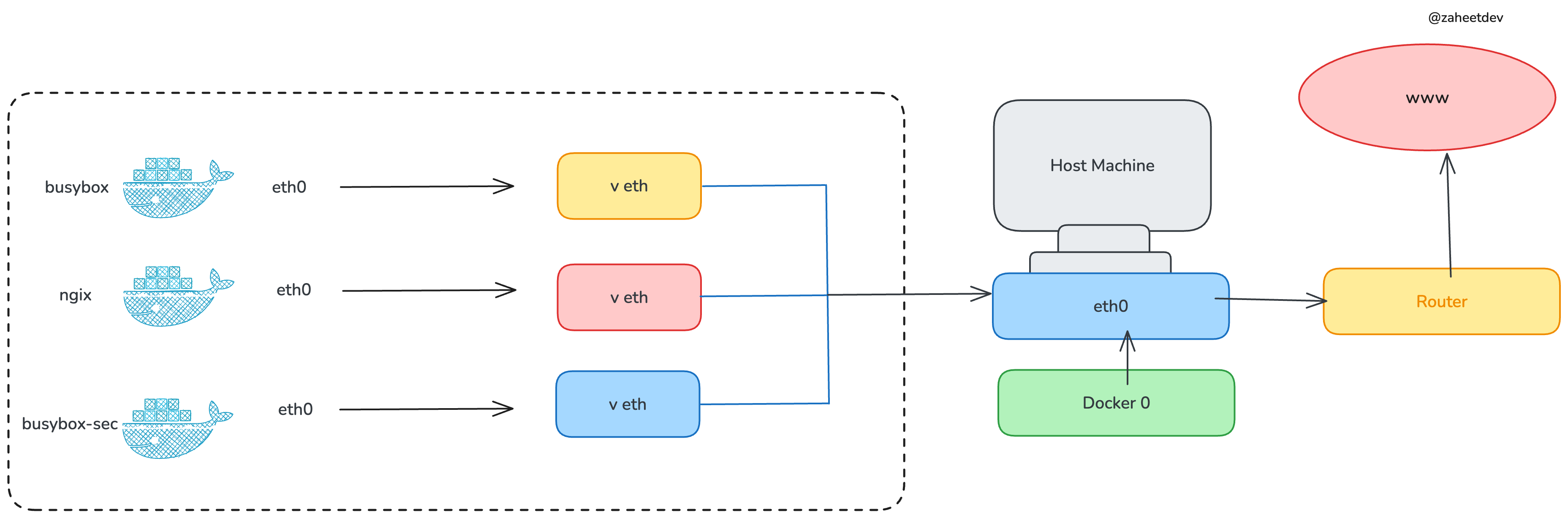

Container network isolation - each container gets its own network namespace

Here's why netshoot actually works: it shares the network namespace with whatever container you're debugging. If your app container can't reach the database, netshoot attached to that same container will have identical connectivity problems. No "works on my machine" bullshit - you're debugging the exact same network stack that's failing.
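On plain Docker, the namespace sharing is a single flag. A small wrapper as a sketch; `attach_netshoot` and the container name `api` in the examples are mine, not anything official.

```shell
# attach_netshoot CONTAINER [CMD...]
# Start netshoot inside CONTAINER's network namespace.
# With no CMD you get netshoot's default interactive shell.
attach_netshoot() {
  target="$1"; shift
  # --network container:NAME reuses NAME's network stack:
  # same interfaces, same routes, same localhost.
  # --rm cleans the debug container up when you exit.
  docker run -it --rm --network "container:${target}" nicolaka/netshoot "$@"
}

# Examples, assuming a running container named "api":
#   attach_netshoot api            # interactive shell
#   attach_netshoot api ss -tln    # list the ports "api" is listening on
```

Because localhost is shared too, `ss -tln` run this way shows the target container's listening sockets, not netshoot's own.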

Network namespace isolation - containers see their own isolated network stack

Netshoot runs on AMD64 and ARM64, works with ephemeral containers in Kubernetes 1.25+ (the release where they went stable), and has 9,800+ GitHub stars from engineers who've been there during weekend outages trying to debug why containers can't talk to each other. You'll find it mentioned constantly in Stack Overflow threads and GitHub issues whenever container networking breaks. It's become the go-to debugging image, and it turns up in a lot of Kubernetes and cloud provider troubleshooting guides.