If you've landed here, you've probably experienced the soul-crushing frustration firsthand. MySQL Workbench 8.0.40 STILL hasn't fixed the fundamental performance issues that have been pissing us off for years. The memory leaks persist, large dataset operations still crash randomly, and connection timeouts happen precisely when you're debugging production at 3AM.

Why Workbench Performance Sucks (And It's Not Just You)

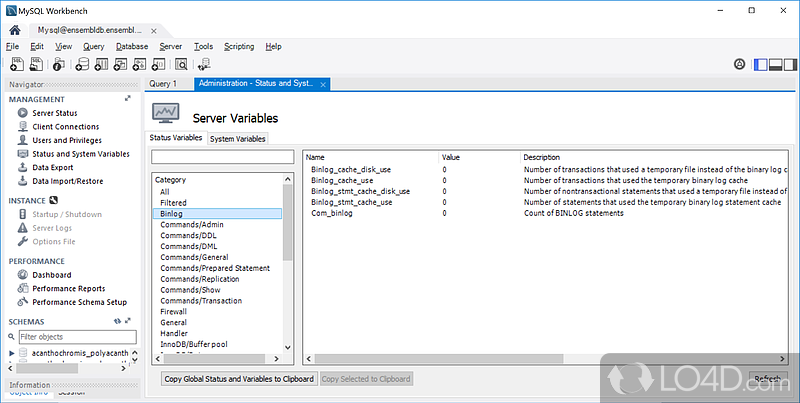

The core problem is that MySQL Workbench tries to be everything to everyone: visual modeler, SQL editor, administration console, migration tool, and performance monitor all in one desktop application. This kitchen-sink approach results in a bloated architecture that consumes massive amounts of system resources.

The Architecture Problem: Workbench uses Python for many operations, including the data import/export wizards. When you see those cryptic error messages during imports, you're looking at Python stack traces. This interpreted language layer adds significant overhead compared to native tools such as mysqldump or the server's own LOAD DATA INFILE statement.

Memory Management Disaster: Workbench loads entire result sets into memory before displaying them. Try selecting 500K rows and watch your RAM usage spike to 4GB while the application becomes unresponsive. The GUI toolkit doesn't handle large datasets gracefully, and memory isn't properly released after operations complete.

Connection Pool Hell: Unlike proper database tools that maintain efficient connection pools, Workbench's connection management is naive. Each query tab potentially opens new connections, connection timeouts aren't handled gracefully, and SSH tunneling adds another layer of potential failure points.

The Performance Problems That Actually Matter

Memory Leaks That Kill Productivity

Every MySQL Workbench user discovers this eventually: the application steadily consumes more memory over time until it becomes unusably slow. On Windows systems, Task Manager shows Workbench climbing from 200MB at startup to over 2GB after a few hours of normal use.

The leak manifests most obviously when working with result sets. Execute a query that returns 100K rows, browse through the results, then close the tab. That memory isn't freed - it accumulates until you restart the entire application. Users dealing with production databases learn to restart Workbench daily, sometimes hourly.

Real-world nightmare: During a critical production incident in March, our team spent 45 minutes troubleshooting what looked like database performance issues. Users couldn't check out and the CEO was breathing down our necks. First thing we did was fire up Workbench to check slow queries. The piece of shit took 30 seconds to execute a simple SELECT * FROM orders LIMIT 10 - something that should return instantly.

We were convinced the database server was fucked. Started checking CPU, memory, I/O stats on the server - everything looked fine. Then my colleague pointed out that Workbench was using 2.8GB of RAM on my laptop. Restarting the application suddenly made those same queries return in 50ms. We'd wasted nearly an hour debugging a "database performance problem" that was actually just Workbench being a memory-hogging piece of shit.

Export/Import Operations From Hell

The Table Data Import/Export Wizard is where Workbench truly fails. Importing a CSV with 20 million rows? Plan on waiting a month if it doesn't crash first. Stack Overflow is littered with complaints about import operations taking literal days for datasets that should process in minutes.

The wizard processes rows one at a time, committing after each insert. For a 1 million row CSV, that's 1 million individual database transactions instead of bulk operations. Professional database administrators avoid the import wizard entirely, using LOAD DATA INFILE commands that complete the same operation in under a minute.
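To make the per-row-commit cost concrete, here's a minimal sketch of the alternative: group rows into batches and commit once per batch. It uses Python's built-in sqlite3 module purely as a stand-in so it runs anywhere. The `import_csv_batched` helper, the `customers` table, and the batch size are all made up for illustration, and none of this is a claim about how the wizard is actually implemented.

```python
import csv
import io
import sqlite3
from itertools import islice


def import_csv_batched(conn, csv_file, table, columns, batch_size=10_000):
    """Insert CSV rows in batches, committing once per batch.

    Returns (rows_inserted, commits). `table` and `columns` are treated as
    trusted identifiers here; never build them from user input.
    """
    # sqlite3 uses "?" placeholders; most MySQL drivers use "%s" instead.
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"

    reader = csv.reader(csv_file)
    next(reader, None)  # skip the header row

    rows_inserted = 0
    commits = 0
    while True:
        batch = list(islice(reader, batch_size))
        if not batch:
            break
        conn.executemany(sql, batch)  # one call for the whole batch
        conn.commit()                 # one transaction per batch, not per row
        rows_inserted += len(batch)
        commits += 1
    return rows_inserted, commits


# Tiny in-memory demo with invented data: 25 rows in batches of 10.
demo_csv = io.StringIO("id,name\n" + "".join(f"{i},user{i}\n" for i in range(25)))
demo_conn = sqlite3.connect(":memory:")
demo_conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
demo_result = import_csv_batched(
    demo_conn, demo_csv, "customers", ["id", "name"], batch_size=10
)
print(demo_result)
```

With 25 rows and a batch size of 10, that's 3 commits instead of 25. Scale the same ratio up to a million rows and the difference stops being academic.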

I once tried importing a 500K row customer data file using the wizard. Started it on Friday afternoon, came back Monday morning to find it had crashed somewhere around 200-something thousand rows with a generic "MySQL server has gone away" error. No partial data imported, no useful error details, just three days of wasted time. Rewrote it as a LOAD DATA INFILE command and the whole thing finished in 12 seconds.
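For reference, a statement along these lines is what replaces the wizard. The sketch below just builds the SQL text for a plain comma-separated file with a header row. The `build_load_data_sql` helper, the file path, and the table name are hypothetical, and the field and line options are assumptions you'd adjust to your file. Note that the LOCAL variant only works when local_infile is enabled on both the client and the server.

```python
def build_load_data_sql(csv_path, table, skip_header=True):
    """Return a LOAD DATA LOCAL INFILE statement for a comma-separated file.

    `table` is treated as a trusted identifier; the path is escaped for use
    inside a single-quoted SQL string literal.
    """
    escaped_path = csv_path.replace("\\", "\\\\").replace("'", "\\'")
    lines = [
        f"LOAD DATA LOCAL INFILE '{escaped_path}'",
        f"INTO TABLE `{table}`",
        "FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"'",
        "LINES TERMINATED BY '\\n'",
    ]
    if skip_header:
        lines.append("IGNORE 1 LINES")  # do not load the header as data
    return "\n".join(lines)


# Hypothetical path and table name, for illustration only.
load_sql = build_load_data_sql("/tmp/customers.csv", "customers")
print(load_sql)
```

Paste the printed statement into the mysql command-line client (or run it through whatever driver you use) and the server parses the file in bulk instead of chewing through one INSERT at a time.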

The export side is equally broken: Attempting to export more than 100K rows often results in application crashes with no useful error messages. The export process doesn't stream data efficiently, instead trying to build the entire output file in memory before writing it to disk. Last month I needed to export user analytics data for our marketing team - 750K rows, nothing crazy. Workbench died three times before I gave up and used mysqldump.
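If you end up scripting exports yourself, the streaming approach looks roughly like this: fetch a chunk, write it, repeat, so memory use stays flat no matter how many rows come back. This is a sketch against the standard Python DB-API, with sqlite3 standing in so it runs without a server. The `export_query_to_csv` helper and the `analytics` table are invented for the example. With a real MySQL driver you'd probably also want an unbuffered (server-side) cursor such as PyMySQL's SSCursor, otherwise the driver itself may buffer the whole result set.

```python
import csv
import io
import sqlite3


def export_query_to_csv(conn, query, out_file, chunk_size=5_000):
    """Stream a query result to CSV, holding at most chunk_size rows in memory.

    Returns the number of data rows written (the header is not counted).
    """
    cursor = conn.cursor()
    cursor.execute(query)

    writer = csv.writer(out_file)
    writer.writerow([col[0] for col in cursor.description])  # header row

    total = 0
    while True:
        rows = cursor.fetchmany(chunk_size)
        if not rows:
            break
        writer.writerows(rows)  # write this chunk before fetching the next
        total += len(rows)
    return total


# In-memory demo with invented data: 12 rows exported in chunks of 5.
export_conn = sqlite3.connect(":memory:")
export_conn.execute("CREATE TABLE analytics (user_id INTEGER, clicks INTEGER)")
export_conn.executemany(
    "INSERT INTO analytics VALUES (?, ?)", [(i, i * 3) for i in range(12)]
)
export_out = io.StringIO()
export_total = export_query_to_csv(
    export_conn,
    "SELECT user_id, clicks FROM analytics ORDER BY user_id",
    export_out,
    chunk_size=5,
)
```

In real use you'd pass an open file (opened with `newline=""`, as the csv module recommends) instead of the StringIO buffer.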

Connection Timeout Roulette

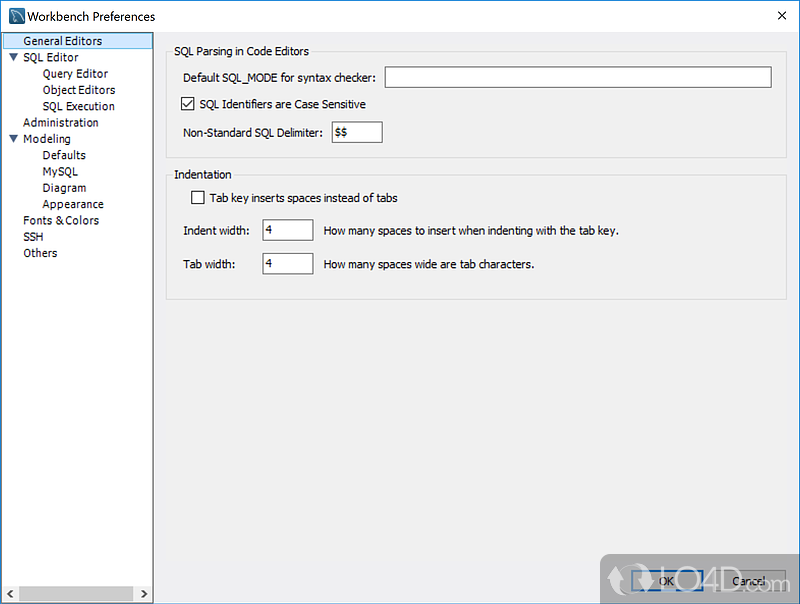

Connection management in Workbench is unreliable, especially for remote database connections. The default timeout settings are too aggressive for real-world network conditions, SSH tunnel configuration is buried in confusing dialogs, and SSL connection errors provide cryptic messages that require diving into log files to diagnose.

Production debugging nightmare: Picture this scenario - production database is experiencing issues, users are complaining, and you need to run diagnostic queries immediately. You open Workbench, attempt to connect to the production server, and get "Connection timeout" errors. While you're troubleshooting Workbench's connection problems, the actual database issue is getting worse.

This happened to me during Black Friday last year - site was crawling, transactions backing up, and the VP of Engineering was asking for updates every 2 minutes. I needed to identify the blocking queries fast. Workbench gave me "Can't connect to MySQL server on 'prod-db' (110)" errors for 10 straight minutes while I fumbled with timeout settings. Finally said fuck it and SSH'd into the database server directly. Found the problem immediately with SHOW PROCESSLIST - some asshole developer had left a SELECT COUNT(*) running on our 50 million row orders table without a WHERE clause.
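When you're staring at a few hundred rows of SHOW FULL PROCESSLIST output under pressure, a small filter helps. The sketch below assumes you've already fetched the rows as dictionaries keyed by the standard column names (Id, User, Command, Time, Info). The `find_long_running` helper, the 60-second threshold, and the sample rows are all made up for illustration.

```python
def find_long_running(process_rows, min_seconds=60):
    """Return active statements running for at least min_seconds, slowest first.

    Sleeping connections and threads without a statement (Info is empty)
    are ignored.
    """
    suspects = [
        row
        for row in process_rows
        if row["Command"] != "Sleep"
        and row["Info"]
        and row["Time"] >= min_seconds
    ]
    return sorted(suspects, key=lambda row: row["Time"], reverse=True)


# Invented sample rows shaped like SHOW FULL PROCESSLIST output.
sample_rows = [
    {"Id": 12, "User": "app", "Command": "Sleep", "Time": 900,
     "State": "", "Info": None},
    {"Id": 41, "User": "report", "Command": "Query", "Time": 1840,
     "State": "executing", "Info": "SELECT COUNT(*) FROM orders"},
    {"Id": 57, "User": "app", "Command": "Query", "Time": 75,
     "State": "updating", "Info": "UPDATE orders SET status = 'paid' WHERE id = 991"},
    {"Id": 63, "User": "root", "Command": "Query", "Time": 0,
     "State": "starting", "Info": "SHOW FULL PROCESSLIST"},
]

suspects = find_long_running(sample_rows, min_seconds=60)
suspect_ids = [row["Id"] for row in suspects]

# KILL QUERY stops the statement but keeps the connection alive.
# Review these by hand before running any of them.
kill_statements = [f"KILL QUERY {row['Id']};" for row in suspects]
print(suspect_ids, kill_statements)
```

The point isn't automation; it's cutting the noise so the one query that's been running for half an hour jumps out at you.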

The SSH tunneling feature is particularly problematic. Configuration options are scattered across multiple dialog tabs, error messages don't indicate whether the problem is SSH authentication or database connectivity, and successful connections sometimes drop randomly during long-running operations. The "Test Connection" button lies - it'll show green, then immediately fail when you try to actually query data.
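One way to figure out which half is failing is to take Workbench out of the picture: open the tunnel yourself with plain ssh, then point the mysql command-line client at the local end. If the ssh command fails, it's an SSH problem; if the tunnel comes up and mysql can't log in, it's a database problem. The sketch below only builds the two command lines. The helper names, the `deploy` and `app_ro` users, the bastion host, and local port 3307 are all hypothetical placeholders.

```python
import shlex


def ssh_tunnel_command(ssh_user, ssh_host, local_port=3307, remote_port=3306):
    """Build an ssh command that forwards local_port to MySQL on the remote host."""
    return [
        "ssh",
        "-N",                              # no remote shell, just the tunnel
        "-o", "ExitOnForwardFailure=yes",  # fail loudly if the forward cannot bind
        "-L", f"{local_port}:127.0.0.1:{remote_port}",
        f"{ssh_user}@{ssh_host}",
    ]


def mysql_client_command(db_user, local_port=3307, connect_timeout=10):
    """Build a mysql CLI command that connects through the local tunnel end."""
    return [
        "mysql",
        "--host=127.0.0.1",
        f"--port={local_port}",
        "--protocol=TCP",                  # force TCP instead of the local socket
        f"--user={db_user}",
        "--password",                      # prompt instead of putting it in argv
        f"--connect-timeout={connect_timeout}",
    ]


# Hypothetical names, for illustration only.
tunnel_cmd = ssh_tunnel_command("deploy", "bastion.example.com")
client_cmd = mysql_client_command("app_ro")
print(shlex.join(tunnel_cmd))
print(shlex.join(client_cmd))
```

Run the first command in one terminal and the second in another. Whichever one errors out tells you where to look, which is more than Workbench's dialog will.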

Most experienced developers maintain multiple database tools specifically because Workbench's connection reliability can't be trusted when it matters most. DBeaver, TablePlus, HeidiSQL, Sequel Ace, phpMyAdmin, MySQL Shell, or even command-line MySQL clients become the fallback options when Workbench fails. For monitoring, Percona Toolkit provides superior diagnostic capabilities.