Last month our MongoDB cluster shit itself at 2 AM because someone decided to run an aggregation pipeline on millions of documents without a single index. The query took forever - maybe 50 seconds? - and brought down our entire API. This is why you need the profiler enabled before disasters happen, not after.

Turn On The Profiler Before Everything Breaks

The profiler is off by default because MongoDB thinks you don't need it. You do. It's a per-database setting, so switch to the database you care about and turn it on right now:

db.setProfilingLevel(1, { slowms: 100 })

Three levels of pain:

- Level 0: Off (stupid default setting)

- Level 1: Only log slow shit (what you want)

- Level 2: Log everything (will destroy your disk space in minutes)

Level 2 is basically a DoS attack on your own database. I learned this the hard way when it filled up like 200GB super fast and crashed the cluster.

Check if it's actually running:

db.getProfilingStatus()
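That command hands back a small document with `was` (the current level), `slowms`, and `sampleRate`. Here's a rough sketch of a helper that turns it into something readable. `describeProfilingStatus` is a name I made up, not a MongoDB API, and the sample document is just what I'd expect to see after the setup above.

```javascript
// Hypothetical helper (not part of MongoDB): summarize the document
// returned by db.getProfilingStatus().
function describeProfilingStatus(status) {
  const levels = {
    0: "off",
    1: "slow operations only",
    2: "all operations",
  };
  const level = levels[status.was] ?? `unknown level ${status.was}`;
  // slowms only matters when the profiler is filtering on it (level 1).
  if (status.was === 1) {
    return `profiler: ${level} (slowms=${status.slowms})`;
  }
  return `profiler: ${level}`;
}

// Sample status, shaped like the result after setProfilingLevel(1, { slowms: 100 }).
const sampleStatus = { was: 1, slowms: 100, sampleRate: 1, ok: 1 };
console.log(describeProfilingStatus(sampleStatus));
```

In mongosh you'd call it as `describeProfilingStatus(db.getProfilingStatus())`.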

Reading the Tea Leaves (Profile Data)

The profiler dumps everything into db.system.profile. Here's how to find the queries that are ruining your life:

db.system.profile.find().sort({ millis: -1 }).limit(5).pretty()

What to look for:

- millis: How long your query took to suck

- planSummary: Did it use an index or scan the whole fucking collection

- docsExamined: How many docs it looked at

- nreturned: How many it actually needed

- ts: When this clusterfuck happened

If docsExamined is way higher than nreturned, you're probably doing collection scans, or leaning on an index that barely narrows anything down. That's bad. Really bad.
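To put a number on "way higher", here's a minimal sketch that ranks profiler entries by documents examined per document returned. The profiler stores the returned count as `nreturned`. `findWastefulOps`, the `minRatio` cutoff of 100, and the sample entries are all my own inventions for illustration, so tune them to taste.

```javascript
// Hypothetical helper: rank profiler entries by how many documents they
// examined per document returned. Field names follow system.profile.
function findWastefulOps(profileDocs, minRatio = 100) {
  return profileDocs
    .map((doc) => {
      const examined = doc.docsExamined ?? 0;
      const returned = doc.nreturned ?? 0;
      return {
        ns: doc.ns,
        millis: doc.millis,
        planSummary: doc.planSummary,
        // Avoid dividing by zero when nothing was returned.
        ratio: examined / Math.max(returned, 1),
      };
    })
    .filter((op) => op.ratio >= minRatio)
    .sort((a, b) => b.ratio - a.ratio);
}

// Made-up sample entries, for illustration only.
const sampleProfile = [
  { ns: "shop.orders", millis: 4200, planSummary: "COLLSCAN", docsExamined: 900000, nreturned: 3 },
  { ns: "shop.users", millis: 12, planSummary: "IXSCAN { email: 1 }", docsExamined: 1, nreturned: 1 },
];
console.log(findWastefulOps(sampleProfile));
```

In mongosh, feed it real data with `findWastefulOps(db.system.profile.find().toArray())`.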

explain() - The Query Autopsy Tool

Before you run a scary query, use `explain()` to see what MongoDB plans to do:

db.orders.find({ status: "shipped", customer_id: ObjectId("...") }).explain("executionStats")

Critical shit to check:

- totalDocsExamined: If this is huge, you're fucked

- nReturned: What you actually needed

- executionTimeMillis: How long it took to fail

- COLLSCAN vs IXSCAN: Collection scan = bad, index scan = good

I once found a query that scanned millions of documents to return 3 results. The efficiency was fucking terrible - like examining hundreds of thousands of docs for each result. That's not efficiency, that's incompetence.
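If you'd rather not eyeball the explain output every time, here's a rough sketch that pulls out the headline numbers. It assumes the usual `executionStats` shape (`totalDocsExamined`, `nReturned`, `executionTimeMillis`) and a `queryPlanner.winningPlan` tree. `summarizeExplain` and the sample document are hypothetical, and sharded or aggregation explains have a different shape that this doesn't handle.

```javascript
// Hypothetical helper: pull the headline numbers out of the document
// returned by .explain("executionStats") on a find().
function summarizeExplain(explainDoc) {
  const stats = explainDoc.executionStats;
  // Some server versions nest the plan tree under winningPlan.queryPlan.
  const winning = explainDoc.queryPlanner.winningPlan;
  const root = winning.queryPlan ?? winning;

  // Walk the plan tree and collect every stage name.
  const stages = [];
  const visit = (node) => {
    if (!node) return;
    stages.push(node.stage);
    visit(node.inputStage);
    (node.inputStages ?? []).forEach(visit);
  };
  visit(root);

  return {
    stages,
    collectionScan: stages.includes("COLLSCAN"),
    // Documents examined per document returned; 1 is the ideal.
    docsPerResult: stats.totalDocsExamined / Math.max(stats.nReturned, 1),
    executionTimeMillis: stats.executionTimeMillis,
  };
}

// Made-up explain output, trimmed to the fields used above.
const sampleExplain = {
  queryPlanner: { winningPlan: { stage: "COLLSCAN", direction: "forward" } },
  executionStats: { nReturned: 3, totalDocsExamined: 1200000, executionTimeMillis: 5100 },
};
console.log(summarizeExplain(sampleExplain));
```

A `docsPerResult` anywhere near the sample's 400000 means the query is reading the whole collection to hand you a handful of documents.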

MongoDB Version Hell - The 7.0 Disaster

MongoDB 7.0 has a nasty performance problem: it swapped the fixed limit of 128 concurrent storage engine transactions for a dynamic algorithm, and that algorithm can throttle you from 128 down to about 8. This destroyed performance for everyone who upgraded without reading the fine print. The MongoDB release notes barely mention this regression.

The emergency fix for 7.0:

db.adminCommand({
  setParameter: 1,
  storageEngineConcurrentWriteTransactions: 128,
  storageEngineConcurrentReadTransactions: 128
})

MongoDB 8.0 fixes this shit, but upgrading has its own problems. Test in staging first or you'll be the one getting calls at 3 AM. Check the MongoDB 8.0 compatibility guide before upgrading.
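If you run mixed versions across environments, you probably don't want to blindly apply the 7.0 override everywhere. Here's a minimal sketch of a guard that only builds the command for 7.0.x servers. `ticketOverrideFor` is a hypothetical helper, and limiting it to 7.0.x is my assumption based on the versions mentioned here, not an official list of affected releases.

```javascript
// Hypothetical guard: only build the override for 7.0.x servers
// (assumption: that's the only release line that needs it).
function ticketOverrideFor(version, tickets = 128) {
  const [major, minor] = version.split(".").map(Number);
  if (major !== 7 || minor !== 0) return null;
  return {
    setParameter: 1,
    storageEngineConcurrentWriteTransactions: tickets,
    storageEngineConcurrentReadTransactions: tickets,
  };
}

console.log(ticketOverrideFor("7.0.5"));
console.log(ticketOverrideFor("8.0.0")); // null
```

In mongosh that would look something like `const cmd = ticketOverrideFor(db.version()); if (cmd) db.adminCommand(cmd);`.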

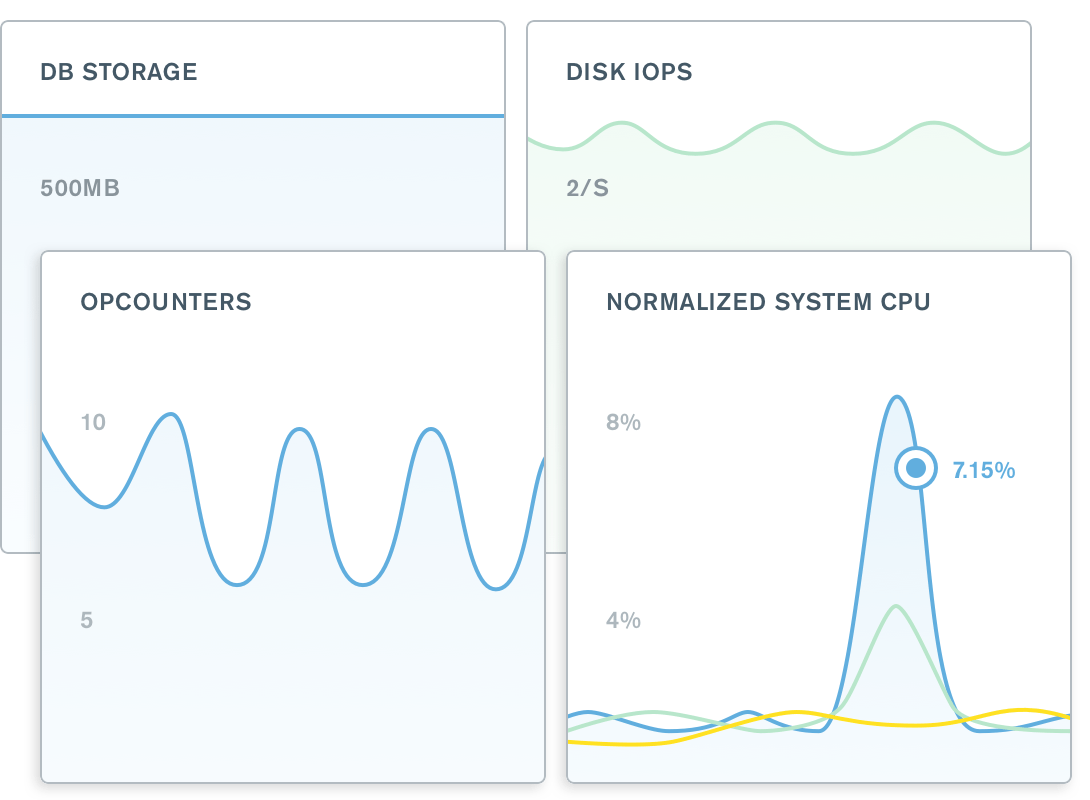

Atlas Performance Advisor (Actually Useful)

Atlas has a Performance Advisor that automatically finds your shitty queries and suggests fixes. It's actually pretty good, unlike most MongoDB tooling.

It tells you:

- Which queries are slow as hell

- What indexes would fix them

- How much performance you'd gain

- Which indexes you created but never use

The best part? It runs automatically. No need to remember to check it while you're fighting fires.
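Not on Atlas? You can get a rough version of the "indexes you never use" report yourself from the `$indexStats` aggregation stage, which reports an `accesses.ops` counter per index. Here's a minimal sketch. `unusedIndexes` and the sample data are hypothetical, and it's no substitute for the Advisor's actual analysis.

```javascript
// Hypothetical helper: list indexes with zero recorded accesses, given the
// output of db.<collection>.aggregate([{ $indexStats: {} }]).toArray().
function unusedIndexes(indexStats) {
  return indexStats
    // The _id index is mandatory and can't be dropped, so skip it.
    .filter((ix) => ix.name !== "_id_")
    // accesses.ops may arrive as a 64-bit Long; Number() should cope with both.
    .filter((ix) => Number(ix.accesses.ops) === 0)
    .map((ix) => ix.name);
}

// Made-up $indexStats output, trimmed to the fields used above.
const sampleStats = [
  { name: "_id_", accesses: { ops: 0 } },
  { name: "status_1", accesses: { ops: 5821 } },
  { name: "search_text", accesses: { ops: 0 } },
];
console.log(unusedIndexes(sampleStats)); // [ 'search_text' ]
```

One caveat: those counters are per node and reset when mongod restarts, so check every replica set member and give it a full traffic cycle before you drop anything.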

Production War Stories

The Atlas Bill From Hell: A developer created a text index on a huge collection in production. Took like 18 hours to build and the compute bill was brutal - I think around 15 grand. The index was for a search feature that never shipped.

The Collection Scan From Hell: db.users.find({}) on millions and millions of documents. No limit, no index, just raw stupidity. It ran for hours before I killed it. The developer said "it worked fine in dev" (which had like 10 users).

The Aggregation Pipeline of Doom: Someone wrote this insane multi-stage aggregation that did a $lookup on every document. MongoDB tried to load way too much into memory and crashed the primary. Took forever to fail over and recover.

The Connection Pool Massacre: Application was creating new connections for every request instead of using a pool. Hit the connection limit and new users couldn't log in. The fix was changing one line of code.
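The pattern behind that one-line fix is "create the client once, reuse it everywhere", since the driver's client object is what owns the pool. Here's a driver-agnostic sketch with a stand-in factory so it runs without a database. `createSharedClient` is a hypothetical helper, and with the Node.js driver the factory would be something like `() => new MongoClient(uri)`, where `uri` is your own connection string.

```javascript
// Hypothetical helper: create the client once and hand the same instance
// to every request, so the driver's built-in pool actually gets reused.
function createSharedClient(factory) {
  let client = null;
  return function getClient() {
    if (client === null) {
      client = factory(); // runs only on the first call
    }
    return client;
  };
}

// Stand-in factory so the sketch runs without a database.
let created = 0;
const getClient = createSharedClient(() => ({ id: ++created }));

getClient();
getClient();
console.log(created); // 1
```

Every request handler then calls `getClient()` instead of building a fresh client, and the connection count stays bounded by the pool size instead of by your traffic.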

Tools That Actually Work

MongoDB Compass: Free, official, doesn't crash every 5 minutes. Visual explain plans make it easy to see why your queries suck. The Compass documentation covers performance features.

Studio 3T: Costs money but worth every penny. Better profiling, query optimization, and doesn't have Compass's random crashes. Their performance troubleshooting guide is excellent.

Skip NoSQLBooster: Used to be good, now it's buggy and slow. Compass does everything you need for free.

The profiler will save your ass, but only if you actually use it. Turn it on now, before you need it. Trust me on this one.

Once you've identified your slow queries, the next step is fixing them with proper indexes. Most performance disasters come from missing indexes, wrong index field order, or having too many unused indexes that slow down writes. The profiler shows you which queries are broken - indexes are how you fix them.