I got sick of my ChatGPT bills hitting $50/month, so I tried running models locally. LM Studio makes this actually possible without learning 47 command-line tools.

What is this thing, actually?

Here's what nobody tells you: LM Studio is probably the easiest way to run AI models on your own computer. Download the app, click a model, and it works. Mostly.

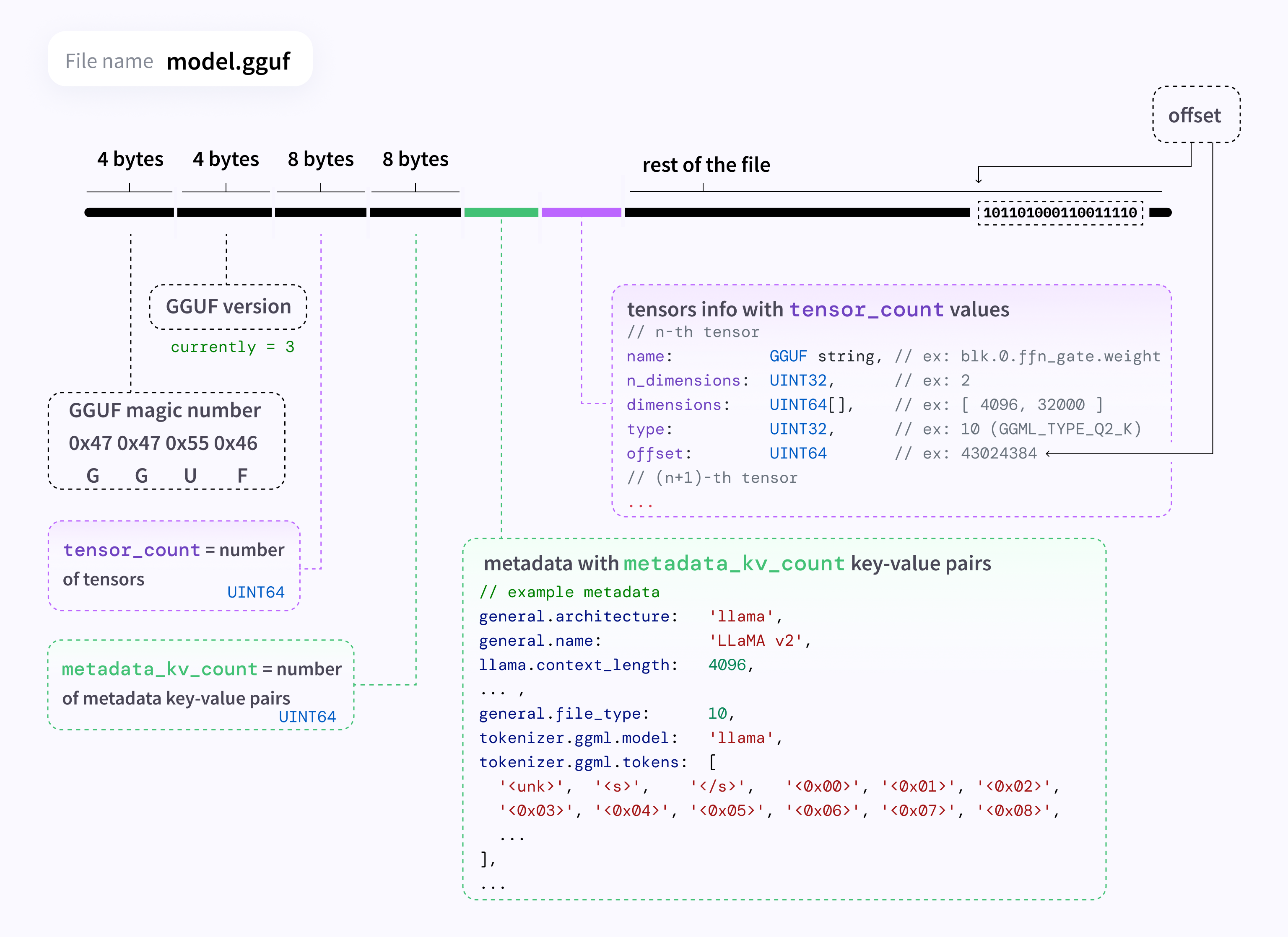

The interface looks clean, kind of like ChatGPT but slower and without the constant internet requirement. You download models in GGUF format, which are basically quantized (compressed) AI brains small enough to fit on your hard drive.
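The "fits on your hard drive" part is mostly arithmetic: size is roughly parameter count times bits per weight. Here's a back-of-the-envelope sketch. It assumes a flat bits-per-weight figure, but real quantization schemes often mix bit widths and files include metadata, so actual downloads run somewhat larger. `gguf_size_gb` is a hypothetical helper of mine, not anything in LM Studio:

```python
def gguf_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in decimal gigabytes.

    Assumes every weight uses the same bit width and ignores file metadata,
    so treat the result as a ballpark figure, not an exact file size.
    """
    total_bits = params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9  # bits -> bytes -> GB


# A hypothetical 7B-parameter model at full 16-bit precision vs. ~4-bit quantized
print(f"16-bit: {gguf_size_gb(7, 16):.1f} GB")  # 16-bit: 14.0 GB
print(f" 4-bit: {gguf_size_gb(7, 4):.1f} GB")   #  4-bit: 3.5 GB
```

That 4x shrink is why a 7B model can go from "needs a workstation" to "runs on a laptop."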

Setup takes 20 minutes, not 2 minutes like they imply. But once it's running, you can chat with models offline. No internet = no data leaving your machine. That's the whole point.

The privacy thing isn't bullshit

Everything runs on your computer. Your weird questions about code, personal stuff, or whatever: none of it gets sent to OpenAI's servers. For companies handling sensitive data, this is huge. Fewer compliance nightmares, no "did our API calls just train their next model" paranoia.

ChatGPT, by contrast, stores your conversations on OpenAI's servers. For sensitive stuff, that difference matters.

The offline thing is real. Once models are downloaded, you can literally disconnect from Wi-Fi and keep using them. Handy when the internet craps out or you're on a plane.

Your laptop will probably hate you

They say 16GB of RAM is the minimum, but in practice that means it'll swap to death and run like molasses. 32GB is where it becomes usable.
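Why 16GB struggles: the model has to sit in memory alongside everything else you have open. Here's a rough heuristic sketch. The 6GB reserved for the OS and apps and the 20% overhead factor are my own assumptions, not LM Studio figures. `fits_in_ram` is a hypothetical helper:

```python
def fits_in_ram(model_file_gb: float, ram_gb: float,
                reserved_gb: float = 6.0, overhead: float = 1.2) -> bool:
    """Heuristic check of whether a model can load without heavy swapping.

    reserved_gb: assumed memory already used by the OS, browser, editor, etc.
    overhead:    assumed multiplier for context cache and runtime buffers
                 on top of the raw model file size.
    """
    needed_gb = model_file_gb * overhead + reserved_gb
    return needed_gb <= ram_gb


# A hypothetical 9 GB model file on two machines
for ram in (16, 32):
    print(ram, "GB RAM:", fits_in_ram(9.0, ram))
# 16 GB RAM: False
# 32 GB RAM: True
```

Tweak `reserved_gb` to match how much Chrome you keep open.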

If you have an NVIDIA GPU with enough VRAM to hold the model, it runs much faster. Apple Silicon Macs work well too: M2/M3 MacBooks handle this shit way better than I expected.

Your laptop will heat up and fans will spin. This isn't like browsing Twitter - you're running actual AI inference locally. Plan for extra electricity usage too. GPU inference can triple your system's power draw.
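To put the electricity part in numbers next to that $50/month bill: the extra cost is just extra watts times hours times your rate. Here's a quick sketch. The 150 W, 3 hours/day, and $0.20/kWh inputs are made-up placeholders, so plug in your own:

```python
def monthly_power_cost(extra_watts: float, hours_per_day: float,
                       price_per_kwh: float, days: int = 30) -> float:
    """Extra electricity cost (in your currency) for running local inference."""
    kwh = extra_watts * hours_per_day * days / 1000  # watt-hours -> kWh
    return kwh * price_per_kwh


# Hypothetical inputs: 150 W of extra draw, 3 hours/day, $0.20 per kWh
cost = monthly_power_cost(150, 3, 0.20)
print(f"${cost:.2f}/month")  # $2.70/month
```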

Drop-in replacement for ChatGPT

The OpenAI-compatible API is clutch. Start LM Studio's local server, point existing ChatGPT tools at http://localhost:1234/v1 instead of OpenAI's endpoint, and they work with local models. I've tested this with VS Code extensions, Continue.dev, and AutoGen scripts.
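Under the hood, "OpenAI-compatible" means the same JSON request and response shapes, just sent to your own machine. Here's a minimal stdlib-only sketch. It assumes the local server is running on its default port 1234 and exposes `/v1/chat/completions`. `build_chat_request` and `chat` are hypothetical helpers, and `your-model-id` is a placeholder for whatever model identifier LM Studio shows:

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port
# and serves OpenAI-style endpoints under /v1.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request to the local server and return the reply text."""
    req = build_chat_request(model, prompt)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # Same response shape as OpenAI: choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With a model loaded, calling `chat("your-model-id", "Explain GGUF in one sentence.")` should hand back the reply as a plain string. Splitting request-building from sending makes it easy to inspect the payload before anything touches the network.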

There's also Model Context Protocol (MCP) support, added in 2025, which lets models connect to external tools. I'm still figuring out what that actually enables in practice.