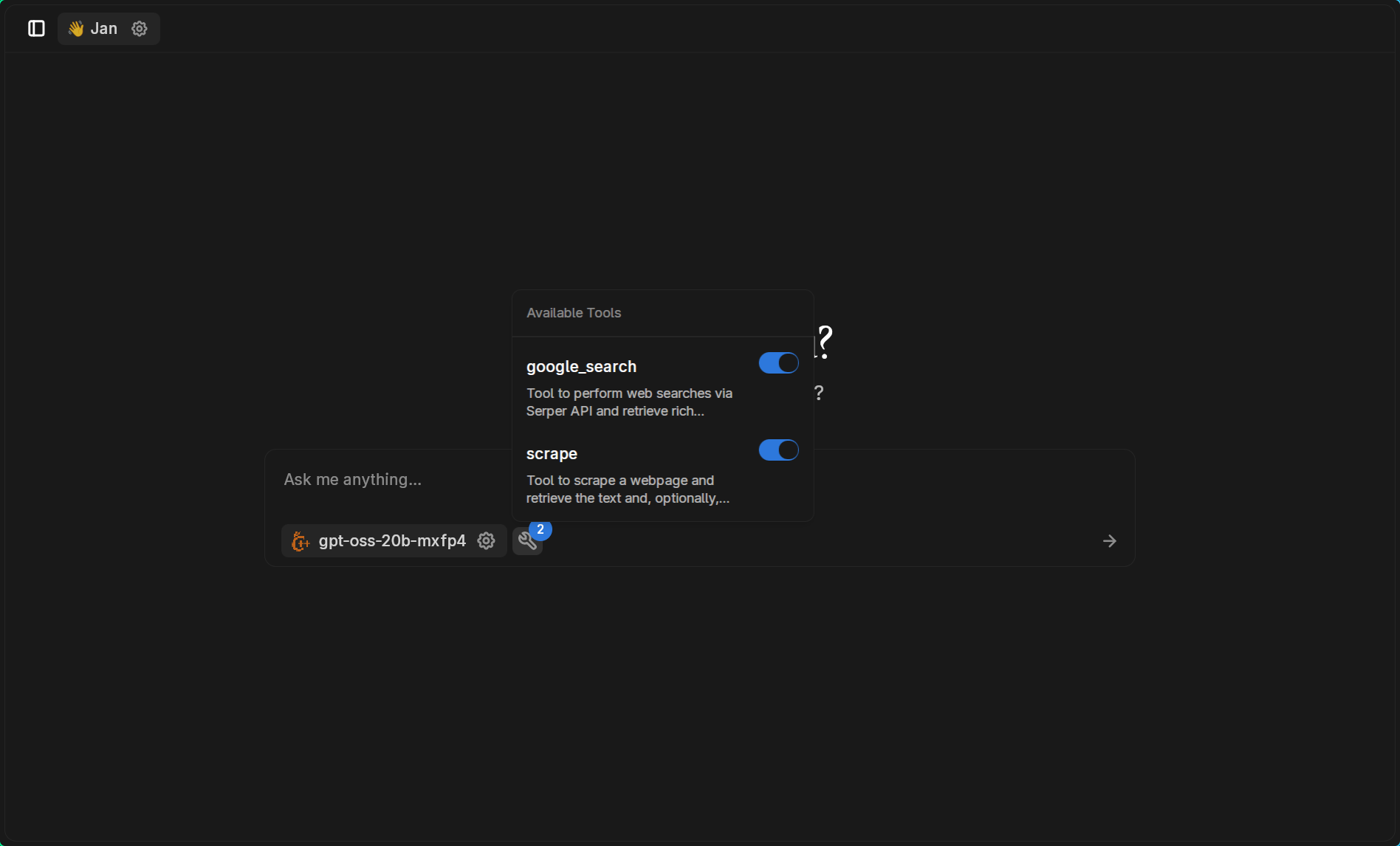

Jan's support for the Model Context Protocol (MCP) went stable in v0.6.9, so no more experimental flags. Finally, you can set up workflows where your local AI does actual work instead of just hallucinating about doing work. Too bad the docs are scattered across 12 different pages and every tutorial assumes you have a PhD in JSON config fuckery.

Here's what I learned setting up MCP workflows after burning 2 weeks on broken configs, mysterious crashes, and JSON syntax errors that made me question my career choices.

Why MCP Actually Matters Now

Before MCP, your local AI was basically an expensive text completion engine. With MCP, it becomes a legitimate automation tool that can:

- Execute code in Jupyter notebooks and see the results

- Search the web for current information

- Read and write files on your system

- Manage Linear tickets and other project management tools

- Connect to databases and APIs
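What makes this possible is that MCP is plain JSON-RPC 2.0 underneath. The client (Jan, here) asks each tool server what it offers via `tools/list`, and when the model decides to use a tool, the client sends a `tools/call` request. Here's a minimal Python sketch of building one such request. The tool name `read_file` and its `path` argument are hypothetical, because every server advertises its own tool names and argument schemas.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request that asks an MCP server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,  # lets the client match the server's response to this request
        "method": "tools/call",  # MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


if __name__ == "__main__":
    # Hypothetical tool and arguments; real names come from the server's tools/list reply.
    print(make_tool_call(1, "read_file", {"path": "notes/todo.md"}))
```

The server answers with a JSON-RPC response carrying the same `id`, and that result is what gets fed back to the model.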

The difference is night and day. I went from "this is a neat demo" to "holy shit this actually saves me time" once I got MCP working properly.

The Reality of MCP Setup (Not the Marketing Version)

What the docs don't tell you: MCP setup is 90% JSON config hell and 10% magic. You're editing ~/jan/settings/@janhq/core/settings.json by hand like it's 2005. There's no GUI, no validation, and when you fuck up the JSON syntax, Jan just silently fails to load your tools.
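Since Jan won't tell you when the JSON is broken, check it yourself before restarting. Below is a minimal standard-library Python sketch. It parses the file and reports the line and column where the parser choked. The default path is the one from the paragraph above. It's an assumption that your install keeps the file there, so pass a different path as the first argument if it doesn't.

```python
import json
import sys
from pathlib import Path
from typing import Optional

# Path taken from the paragraph above; an assumption, since it may differ by Jan version or OS.
CONFIG_PATH = Path.home() / "jan" / "settings" / "@janhq" / "core" / "settings.json"


def check_json(text: str) -> Optional[str]:
    """Return None if text parses as JSON, otherwise a pointer to the first error."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        # lineno/colno mark where the parser gave up, which is at or just after the typo
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None


def check_file(path: Path) -> str:
    """Describe whether the file at path exists and contains valid JSON."""
    if not path.exists():
        return f"{path}: file not found"
    problem = check_json(path.read_text(encoding="utf-8"))
    if problem:
        return f"{path}: invalid JSON at {problem}"
    return f"{path}: JSON parses cleanly"


if __name__ == "__main__":
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else CONFIG_PATH
    print(check_file(target))
```

Save it under any name and run it before every restart. A trailing comma then shows up as a line/column pointer instead of a silently missing tool.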

What actually works in production:

- File system tools - Jan can read/write local files reliably

- SQLite integration - Query databases in plain English while the model writes the SQL

- Search providers - Web search that returns real, current results instead of made-up ones
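If hand-editing keeps biting you, let Python write the JSON for the tools that do work reliably. This sketch makes several assumptions, so check each one. It assumes Jan accepts the `mcpServers` layout (server name mapped to `command`, `args`, `env`) that many MCP clients use. It also uses the reference file system and SQLite servers from the modelcontextprotocol project, launched through `npx` and `uvx`. Verify that those packages are still published under these names, and treat the folder and database paths as placeholders.

```python
import json
from pathlib import Path


def build_mcp_config(allowed_dir: str, db_path: str) -> dict:
    """Return an mcpServers-style config for a file system tool and a SQLite tool.

    Assumption: Jan accepts the common {"mcpServers": {name: {command, args, env}}} layout.
    """
    return {
        "mcpServers": {
            "filesystem": {
                "command": "npx",
                # Reference file system server; it only gets access to the directory passed here
                "args": ["-y", "@modelcontextprotocol/server-filesystem", allowed_dir],
                "env": {},
            },
            "sqlite": {
                "command": "uvx",
                # Reference SQLite server, pointed at a single database file
                "args": ["mcp-server-sqlite", "--db-path", db_path],
                "env": {},
            },
        }
    }


if __name__ == "__main__":
    # Hypothetical paths; point these at your own project folder and database.
    cfg = build_mcp_config(str(Path.home() / "projects"), str(Path.home() / "data" / "app.db"))
    # json.dumps always emits valid JSON: no trailing commas, no unquoted keys
    print(json.dumps(cfg, indent=2))
```

Merge the printed `mcpServers` block into whatever file your Jan version reads. Because `json.dumps` generates it, syntax errors can't sneak in.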

What breaks constantly:

- Browser automation - works once, then never again

- Complex API chains - one timeout breaks everything

- Tool dependencies - if one MCP server dies, they all die
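When one dead server takes the rest down with it, rule out the boring cause first: a server whose launcher (`npx`, `uvx`, a specific Python) isn't on the PATH. Below is a small standard-library sketch that lists configured servers whose `command` can't be resolved. It assumes the same `mcpServers` layout many MCP clients use. It also checks the PATH of the shell you run it from, which may not match what a GUI app like Jan inherits.

```python
import json
import shutil


def missing_commands(config_text: str) -> list[str]:
    """Return names of MCP servers whose launch command isn't found on PATH.

    Assumes the {"mcpServers": {name: {"command": ..., "args": [...]}}} layout.
    """
    servers = json.loads(config_text).get("mcpServers", {})
    missing = []
    for name, spec in servers.items():
        command = spec.get("command", "")
        # shutil.which returns None if the executable can't be found
        if not command or shutil.which(command) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Hypothetical config; in practice read the text from your Jan config file instead.
    sample = '{"mcpServers": {"filesystem": {"command": "npx", "args": []}}}'
    print(missing_commands(sample) or "all server commands found")
```

Anything it lists is a server that most likely never started, so fix those before digging into timeouts.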

Hardware Requirements Reality Check

MCP isn't free - each tool connection uses additional RAM and CPU. I've seen setups where 5 MCP tools eat 2GB extra memory on top of your model. Your hardware requirements basically double:

Minimum for MCP workflows:

- 16GB RAM (was 8GB for basic Jan)

- SSD storage (MCP tools create lots of temp files)

- Stable internet for tools that need web access

Don't even try MCP on:

- 8GB laptops (you'll run out of memory)

- Slow HDDs (MCP tools time out on slow I/O)

- Unstable networks (half the tools need internet)

The official requirements don't mention this because they assume you're only running one model without tools.
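To see why 8GB machines fall over, here's the arithmetic as a tiny Python sketch. The 0.4GB-per-tool figure is just the 2GB-for-5-tools observation above, averaged out. The 3GB allowance for the OS and Jan itself and the 4GB model file are assumptions for illustration, so plug in your own numbers.

```python
def ram_budget_gb(model_gb: float, n_tools: int,
                  per_tool_gb: float = 0.4, system_gb: float = 3.0) -> float:
    """Rough RAM estimate for running a model plus MCP tools.

    per_tool_gb: 2 GB / 5 tools from the observation above, averaged (an assumption).
    system_gb: assumed allowance for the OS and Jan itself.
    """
    return model_gb + n_tools * per_tool_gb + system_gb


def fits_in(total_ram_gb: float, model_gb: float, n_tools: int) -> bool:
    """True if the estimated budget fits in the machine's RAM."""
    return ram_budget_gb(model_gb, n_tools) <= total_ram_gb


if __name__ == "__main__":
    # Hypothetical 4 GB model file: 7 GB without tools, 9 GB with five tools
    for ram in (8, 16):
        print(f"{ram} GB RAM: no tools -> {fits_in(ram, 4.0, 0)}, 5 tools -> {fits_in(ram, 4.0, 5)}")
```

With those assumed numbers, an 8GB machine runs the bare model but falls over once five tools are loaded, while 16GB has room to spare. That lines up with the minimums above.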