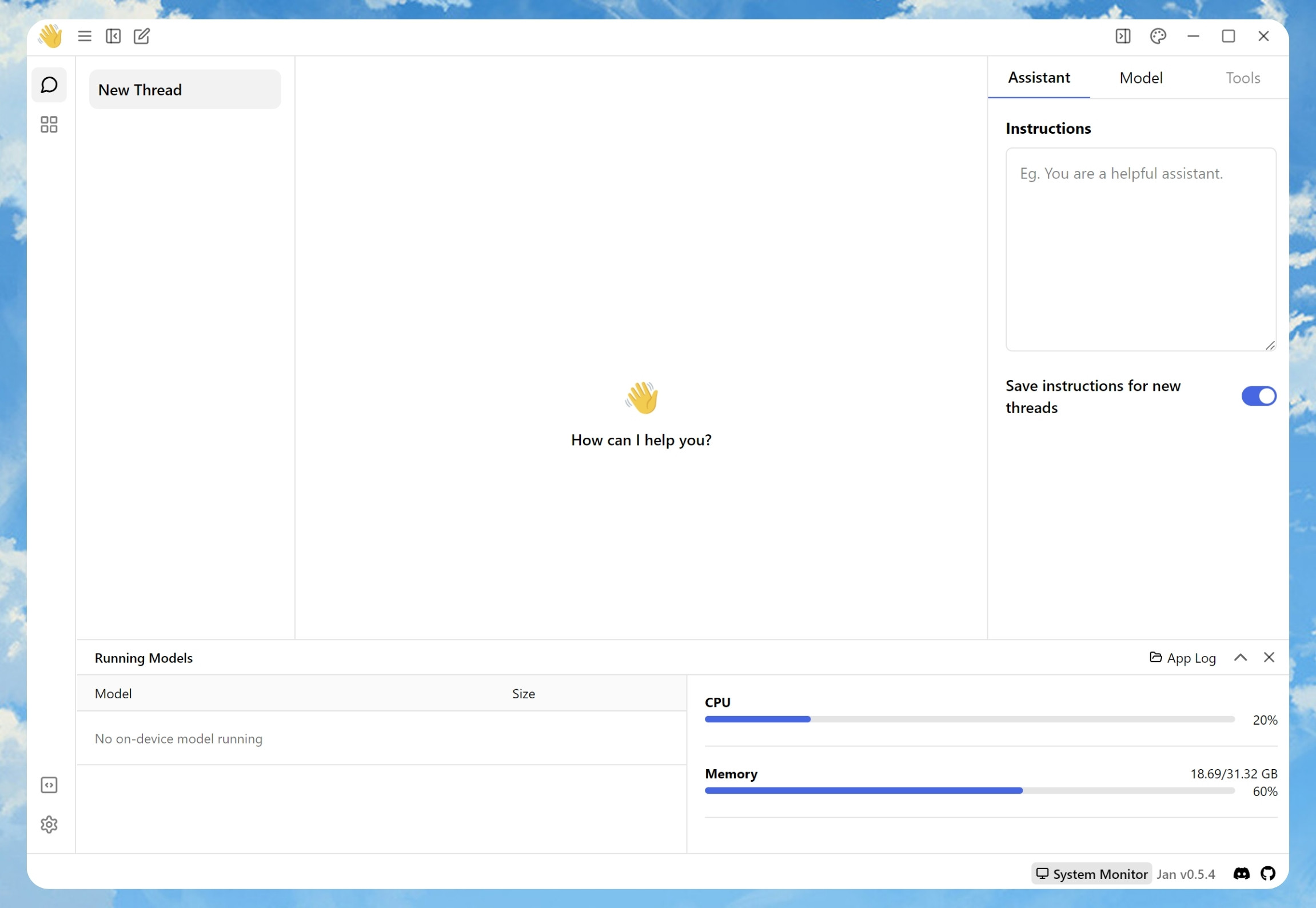

Jan is desktop software that runs AI models locally on your computer. Think ChatGPT, but the processing happens on your hardware instead of sending everything to OpenAI's servers. It's built by Menlo Research, a team that got tired of privacy-destroying AI services, and it's actually open source and works completely offline once you've downloaded the models.

I've been testing Jan for a few months on my M1 Mac, and here's the reality: it works pretty well for basic chat tasks, but don't expect GPT-4-level performance from models that fit on consumer hardware. Setup is surprisingly smooth on Mac, but judging by the GitHub issue tracker, Windows users keep getting fucked by driver problems.

What Actually Works

Jan runs on llama.cpp under the hood, which is solid tech that's been battle-tested by the community. It supports GGUF model files from Hugging Face - thousands of them. You download models once and they stay on your machine.
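If you grab GGUF files straight from Hugging Face, a quick sanity check is to read the fixed-size header at the start of the file. This sketch assumes the little-endian GGUF v2/v3 layout (4-byte `GGUF` magic, a uint32 version, then uint64 tensor and metadata counts). `parse_gguf_header` is a hypothetical helper for illustration, not something Jan or llama.cpp exposes.

```python
import struct

# Header layout assumed here (little-endian GGUF v2/v3):
#   4 bytes  magic b"GGUF"
#   uint32   format version
#   uint64   tensor count
#   uint64   metadata key/value count
HEADER_SIZE = 4 + 4 + 8 + 8


def parse_gguf_header(data: bytes) -> dict:
    """Parse the first HEADER_SIZE bytes of a GGUF file (hypothetical helper)."""
    if len(data) < HEADER_SIZE:
        raise ValueError(f"need at least {HEADER_SIZE} bytes of header")
    if data[:4] != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={data[:4]!r})")
    # "<IQQ" = little-endian uint32 followed by two uint64s, starting after the magic.
    version, tensor_count, kv_count = struct.unpack_from("<IQQ", data, 4)
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }
```

To use it on a real download: `with open(path, "rb") as f: print(parse_gguf_header(f.read(HEADER_SIZE)))`. Old GGUF v1 files used 32-bit counts, so this sketch would misread them.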

The current version is 0.6.9, which dropped on August 28th, 2025. It finally includes some features that aren't just marketing bullshit:

- Local model execution (obviously)

- OpenAI-compatible API server at localhost:1337

- Model Context Protocol (MCP) for connecting to external tools

- Image support for multimodal models (NEW in v0.6.9)

- Cloud provider fallback when you need the big guns
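Because the local server speaks the OpenAI chat-completions format, any HTTP client can talk to it. Here's a minimal standard-library sketch, assuming the server is switched on in Jan's settings, listens on the default `localhost:1337` port, and exposes the usual `/v1/chat/completions` path. The model ID is a placeholder, and the `api_key` argument only matters if your Jan setup asks for one.

```python
import json
import urllib.request

# Assumption: Jan's local API server is running on the default port with an
# OpenAI-style /v1 prefix.
BASE_URL = "http://localhost:1337/v1"


def build_chat_request(model, prompt, base_url=BASE_URL, api_key=None):
    """Build (but don't send) an OpenAI-style chat completion request."""
    body = {
        "model": model,  # placeholder: use the model ID Jan shows for your download
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed if you've configured an API key for Jan's server.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(model, prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(model, prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With a model loaded, something like `chat("your-model-id", "Explain GGUF in one sentence.")` should return the reply text. Keeping request building separate from sending makes the payload easy to inspect without a running server.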

Their own model, Jan-v1, actually hits 91.1% on the SimpleQA benchmark, which shocked me for a 4B-parameter model. But SimpleQA only tests short factual questions, so don't expect GPT-4-level reasoning. Your mileage varies wildly depending on your hardware and whatever other shit you've got running in the background.

Hardware Reality Check

Don't bother unless you have:

- At least 8GB RAM (16GB for anything useful)

- Decent CPU or dedicated GPU

- 10GB+ free storage per model
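The RAM numbers make more sense with some back-of-envelope arithmetic: the weights alone take roughly parameters × bits per weight ÷ 8 bytes, so a 7B model quantized to 4 bits needs about 3.5 GB, while the same model at 16 bits needs about 14 GB. The sketch below encodes that rule of thumb. The flat 2 GB `overhead_gb` for context cache, the OS, and the app itself is an arbitrary assumption, and real GGUF quants usually carry a bit more than their nominal bit width.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights in GB (1 GB = 10**9 bytes)."""
    # params * bits / 8 bytes; the 10**9 factors for "billion" and "GB" cancel.
    return params_billion * bits_per_weight / 8


def fits_in_ram(params_billion: float, bits_per_weight: float,
                ram_gb: float, overhead_gb: float = 2.0) -> bool:
    """Very rough check; overhead_gb is an assumed allowance, not a measured value."""
    return weight_memory_gb(params_billion, bits_per_weight) + overhead_gb <= ram_gb


for params, bits in [(4, 4), (7, 4), (7, 8), (13, 4)]:
    need = weight_memory_gb(params, bits)
    print(f"{params}B @ {bits}-bit: ~{need:.1f} GB weights, "
          f"fits 8 GB: {fits_in_ram(params, bits, 8)}, "
          f"fits 16 GB: {fits_in_ram(params, bits, 16)}")
```

Under these assumptions a 7B model at 8 bits already misses an 8 GB machine (7 GB + 2 GB) but fits comfortably in 16 GB, which lines up with the advice above.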

Runs great on:

- Apple Silicon Macs (M1/M2/M3)

- NVIDIA RTX cards with enough VRAM

- Recent AMD GPUs (though support is shakier)

Pain in the ass on:

- Integrated graphics

- Old hardware

- Linux with AMD cards (driver hell)

I get about 25-30 tokens/second on my M1 with the 7B Llama models, which is fast enough for real conversation. Your ancient laptop probably won't cut it.
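For a sense of what that speed means in practice: a 500-token answer at 25 tokens/second takes 20 seconds. To check your own machine through the local API, a rough approach is to time one request and divide its `completion_tokens` count by the elapsed time. This sketch assumes the server fills in the standard OpenAI-style `usage` field; `send` is a stand-in for whatever zero-argument function you use to make the request and parse its JSON.

```python
import time
from typing import Callable


def tokens_per_second(completion_tokens: int, elapsed_seconds: float) -> float:
    """Generation throughput from a token count and a duration."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def seconds_for_reply(num_tokens: int, tok_per_s: float) -> float:
    """How long a reply of num_tokens takes at a given generation speed."""
    return num_tokens / tok_per_s


def measure(send: Callable[[], dict]) -> float:
    """Time one request; `send` must return a parsed OpenAI-style response dict."""
    start = time.perf_counter()
    response = send()
    elapsed = time.perf_counter() - start
    # Assumes the response includes usage.completion_tokens.
    return tokens_per_second(response["usage"]["completion_tokens"], elapsed)
```

Wall-clock timing also counts prompt processing and HTTP overhead, so this slightly understates pure generation speed, especially with long prompts.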

The MCP Integration Thing

Jan supports the Model Context Protocol, which lets the AI actually interact with tools instead of just chatting. I've tried a few:

- Jupyter integration works but is finicky

- Browser automation through various services

- Search tools that actually work

Half the MCP tools are half-baked demos, but the concept is solid and some actually save time.
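Under the hood, MCP messages are JSON-RPC 2.0: the client (Jan, here) asks a server which tools it offers with `tools/list`, then invokes one with `tools/call`, passing the tool name and an `arguments` object. The sketch below only builds those messages; a real session also has to do the `initialize` handshake first, and the `web_search` tool and its `query` argument are hypothetical.

```python
import itertools
import json
from typing import Optional

# JSON-RPC request IDs must be unique within a session.
_next_id = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as a single line of JSON."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    # json.dumps without indent escapes embedded newlines, so the message stays
    # on one line, which suits MCP's newline-delimited stdio transport.
    return json.dumps(msg)


def list_tools() -> str:
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: dict) -> str:
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


# Hypothetical tool and argument names, for illustration only.
print(list_tools())
print(call_tool("web_search", {"query": "Jan 0.6.9 release notes"}))
```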