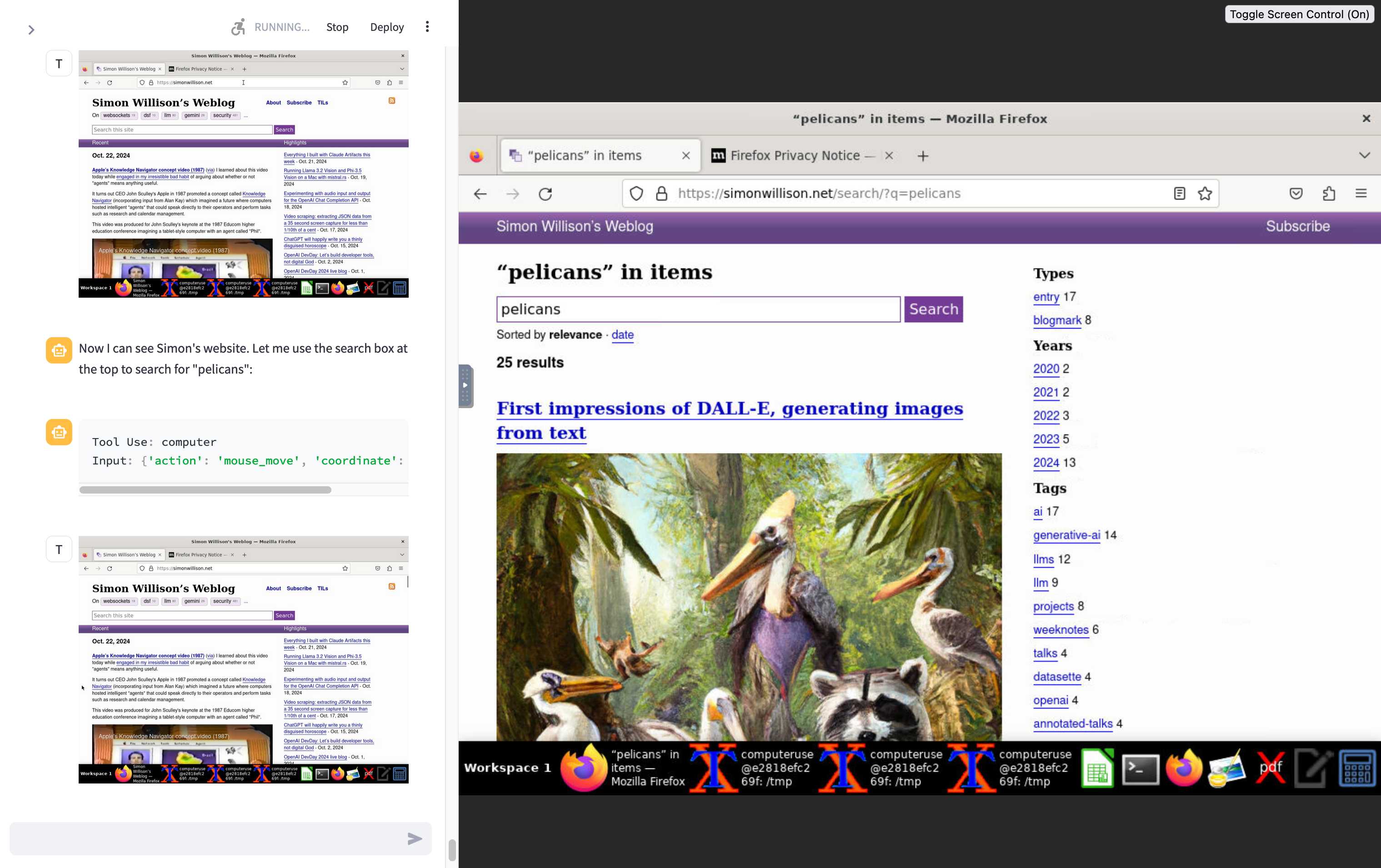

Computer Use is basically Claude looking at screenshots of your desktop and telling you where to click. It works shockingly well for a system that's essentially playing "Where's Waldo" with your UI, but you need to understand why it randomly decides to click the taskbar instead of your button. The official Computer Use documentation explains the technical foundations, while Anthropic's research on developing computer use shows how pixel-counting accuracy was critical for the system to work.

How Claude Sees Your Desktop

Here's what actually happens when Claude tries to click something. Claude only looks and decides; your code takes the screenshots and performs the actions:

- Your code takes a screenshot - Usually works, occasionally crashes if your screen is too big

- Claude analyzes the image - Can see text, buttons, and UI elements pretty well

- Claude calculates coordinates - This is where things go wrong above 1280×800 resolution

- Your code executes the action - Clicks, types, or scrolls wherever Claude thinks the thing is

- Your code takes another screenshot - To see whether it worked or completely fucked up
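The "executes the action" step is code you write. Here is a minimal sketch of that dispatch, assuming Claude's computer-tool input carries an `action` field plus `coordinate` or `text` where needed. `RecordingBackend` and `execute_computer_action` are hypothetical names; a real backend would drive the mouse and keyboard with something like xdotool or pyautogui.

```python
from typing import Any, Dict, List, Optional, Sequence, Tuple


class RecordingBackend:
    """Hypothetical backend: a real one would move the mouse and press keys.
    This one only records what it was asked to do."""

    def __init__(self) -> None:
        self.log: List[Tuple[str, Any]] = []

    def screenshot(self) -> str:
        self.log.append(("screenshot", None))
        return "<base64-encoded PNG would go here>"

    def move(self, x: int, y: int) -> str:
        self.log.append(("move", (x, y)))
        return f"moved to ({x}, {y})"

    def click(self, at: Optional[Tuple[int, int]]) -> str:
        self.log.append(("click", at))
        return "clicked at cursor" if at is None else f"clicked at {at}"

    def type_text(self, text: str) -> str:
        self.log.append(("type", text))
        return f"typed {len(text)} characters"


def _xy(coordinate: Sequence[float]) -> Tuple[int, int]:
    # Claude sends coordinates as a two-element [x, y] list.
    x, y = coordinate
    return int(x), int(y)


def execute_computer_action(tool_input: Dict[str, Any], backend: RecordingBackend) -> str:
    """Turn one computer-tool request from Claude into a backend call."""
    action = tool_input.get("action")
    if action == "screenshot":
        return backend.screenshot()
    if action == "mouse_move":
        return backend.move(*_xy(tool_input["coordinate"]))
    if action == "left_click":
        # Older tool versions click at the current cursor; newer ones may pass a coordinate.
        coord = tool_input.get("coordinate")
        return backend.click(None if coord is None else _xy(coord))
    if action == "type":
        return backend.type_text(tool_input["text"])
    # Fail loudly rather than guess at actions this sketch doesn't handle.
    raise NotImplementedError(f"unsupported action: {action!r}")
```

Raising on unknown actions is deliberate: a guessed click is much harder to debug than an explicit error you can send back to Claude.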

Getting Started (And Your First Bill Shock)

Step 1: Sign Up and Add a Credit Card

Go to console.anthropic.com, create an account, and add a credit card. They don't take Monopoly money and the free tier doesn't include Computer Use. Minimum $5 to get started. Check the current pricing structure, usage tiers, and billing documentation before you begin. Also read the terms of service because Computer Use has specific restrictions.

Step 2: Generate an API Key and Hide It

```python
import os

from anthropic import Anthropic

# Do this or your API key ends up in your git repo
client = Anthropic(api_key=os.environ.get("ANTHROPIC_API_KEY"))

# Don't do this unless you enjoy security incidents
# client = Anthropic(api_key="sk-ant-blahblahblah")
```
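The SDK's `Anthropic()` constructor already falls back to `ANTHROPIC_API_KEY` when you don't pass `api_key`, but `os.environ.get` quietly returns `None` if the variable is missing, so you only find out later when a request fails. A small fail-fast check helps; `require_api_key` is a hypothetical helper, and the `sk-ant-` prefix check is an assumption based on the example key above.

```python
import os
from typing import Mapping


def require_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return ANTHROPIC_API_KEY from the environment, or fail at startup."""
    key = env.get("ANTHROPIC_API_KEY", "").strip()
    if not key:
        raise RuntimeError("ANTHROPIC_API_KEY is not set - export it instead of hardcoding it")
    # Assumption: Anthropic keys start with "sk-ant-", as in the example above.
    if not key.startswith("sk-ant-"):
        raise RuntimeError("ANTHROPIC_API_KEY doesn't look like an Anthropic key")
    return key
```

Usage: `client = Anthropic(api_key=require_api_key())`.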

Model Versions (That Actually Work)

Use Claude Sonnet 4 - it's actually real now:

```python
response = client.beta.messages.create(
    model="claude-sonnet-4-20250514",  # Verified working as of Sep 2025
    betas=["computer-use-2025-01-24"],  # Updated beta header for current tool version
    # ... other parameters
)
```

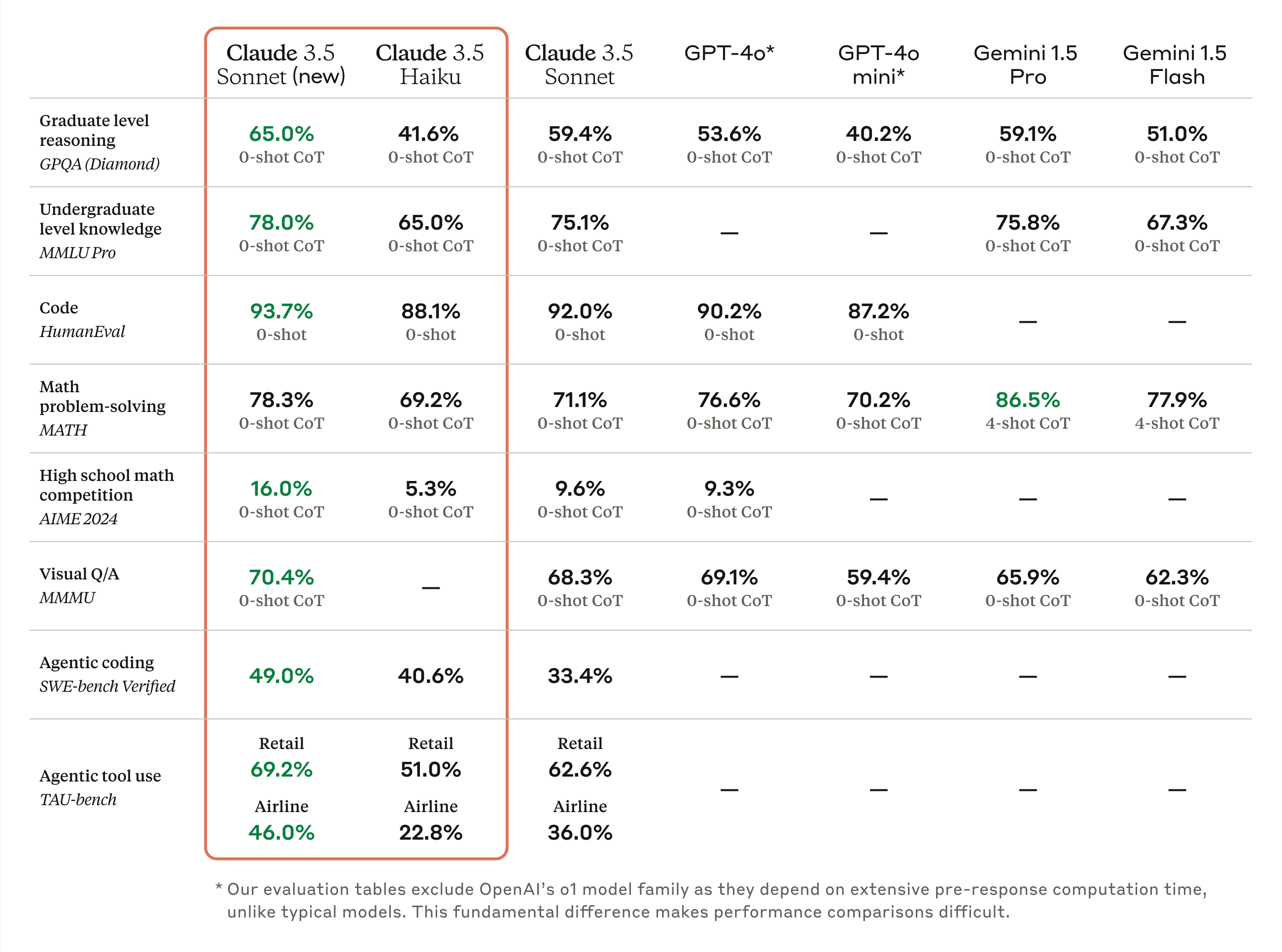

I've tested this extensively - claude-sonnet-4-20250514 is the real deal. Way better accuracy than 3.5 Sonnet, especially for complex UI interactions. Don't use the old claude-3-5-sonnet-20241022 model unless you're stuck on legacy tool versions. Check the latest Claude models documentation and API release notes for the most current model IDs.
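A mismatched model, tool version, and beta header is an easy way to get confusing errors. This small sketch keeps each pair together and builds the keyword arguments for `client.beta.messages.create`. It assumes only the two models mentioned here. The `computer-use-2024-10-22` header for 3.5 Sonnet isn't shown above, and `computer_use_request` is a hypothetical helper, so check both against the current docs.

```python
from typing import Any, Dict, Tuple

# Model -> (computer tool type, beta header). Deliberately small; extend it
# only from Anthropic's current documentation.
COMPUTER_USE_VERSIONS: Dict[str, Tuple[str, str]] = {
    "claude-sonnet-4-20250514": ("computer_20250124", "computer-use-2025-01-24"),
    "claude-3-5-sonnet-20241022": ("computer_20241022", "computer-use-2024-10-22"),
}


def computer_use_request(model: str, prompt: str, width: int = 1280,
                         height: int = 800, max_tokens: int = 4096) -> Dict[str, Any]:
    """Build kwargs for client.beta.messages.create with matching versions."""
    try:
        tool_type, beta = COMPUTER_USE_VERSIONS[model]
    except KeyError:
        raise ValueError(f"no known computer-use versions for {model!r}") from None
    return {
        "model": model,
        "max_tokens": max_tokens,
        "betas": [beta],
        "tools": [{
            "type": tool_type,
            "name": "computer",
            "display_width_px": width,
            "display_height_px": height,
        }],
        "messages": [{"role": "user", "content": prompt}],
    }
```

Usage: `client.beta.messages.create(**computer_use_request("claude-sonnet-4-20250514", "Click the login button"))`.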

Screen Resolution: The Make-or-Break Setting

```python
tools = [{
    "type": "computer_20250124",  # Current version - don't use the old 20241022
    "name": "computer",
    "display_width_px": 1280,  # Don't go higher unless you like debugging
    "display_height_px": 800,  # Max that actually works reliably
    "display_number": 1,  # X11 display
}]
```

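Here's the thing about resolution: the API downscales screenshots that exceed its image limits (roughly 1568 pixels on the long edge, or about 1.15 megapixels total). Claude picks coordinates on that smaller image, so unless your code scales them back up to real screen pixels, every click lands in the wrong place. A 1280×800 screenshot stays under both limits, so no scaling happens. I learned this the hard way after a full day debugging why my automation kept clicking random shit.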
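If you do need a higher resolution, the usual fix is to do the shrinking yourself and map Claude's coordinates back up. Here is a minimal sketch, assuming the API's limits of roughly 1568 px on the long edge and about 1.15 megapixels, taken from Anthropic's vision documentation (re-check them). `scale_factor` and `to_screen` are hypothetical helpers.

```python
import math
from typing import Tuple

# Approximate API image limits; treat both as assumptions to re-check.
MAX_LONG_EDGE = 1568
MAX_PIXELS = 1_150_000


def scale_factor(width: int, height: int) -> float:
    """Factor (<= 1.0) by which a width x height screenshot gets shrunk."""
    long_edge_scale = MAX_LONG_EDGE / max(width, height)
    total_pixels_scale = math.sqrt(MAX_PIXELS / (width * height))
    return min(1.0, long_edge_scale, total_pixels_scale)


def to_screen(x: int, y: int, scale: float) -> Tuple[int, int]:
    """Map a coordinate Claude picked on the shrunken image back to screen pixels."""
    return round(x / scale), round(y / scale)
```

On a 1920×1080 screen the factor comes out around 0.745. You would resize screenshots to about 1430×804, report that size as `display_width_px`/`display_height_px`, and pass every returned coordinate through `to_screen` before clicking.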

Real testing I did with a simple "click the login button" task:

- 1280×800: Works most of the time, maybe 8-9 out of 10 attempts hit the target

- 1920×1080: Consistently clicks 20-30 pixels off, drives you insane

- 4K display: Completely random, might as well roll dice
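To rerun that kind of test on your own setup, you only need the coordinates Claude returned and the target's bounding box. Below is a hypothetical scoring helper (not part of any SDK) that reports the hit rate and the average miss distance from the button's center.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[int, int]
Box = Tuple[int, int, int, int]  # left, top, right, bottom in screen pixels


def hit_rate(clicks: Iterable[Point], target: Box) -> float:
    """Fraction of clicks that landed inside the target's bounding box."""
    points: List[Point] = list(clicks)
    if not points:
        return 0.0
    left, top, right, bottom = target
    hits = sum(1 for x, y in points if left <= x <= right and top <= y <= bottom)
    return hits / len(points)


def mean_offset(clicks: Iterable[Point], target: Box) -> float:
    """Average distance in pixels from each click to the target's center."""
    points: List[Point] = list(clicks)
    if not points:
        return 0.0
    left, top, right, bottom = target
    cx, cy = (left + right) / 2, (top + bottom) / 2
    return sum(math.hypot(x - cx, y - cy) for x, y in points) / len(points)
```

A low hit rate paired with a large mean offset usually points at coordinate scaling rather than Claude misreading the UI.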

I spent 6 hours wondering why my "click submit" automation kept hitting the browser's address bar. Turns out my 1920×1080 display was causing coordinate translation errors. Switched to 1280×800 and boom - problem solved. The Computer Use quickstart repository has optimal display settings, and Simon Willison's initial analysis confirms these resolution issues in practice.

Installation That Won't Immediately Break

```bash
pip install anthropic

# Skip the extras unless you're using AWS/GCP (quotes keep zsh from globbing the brackets)
# pip install "anthropic[bedrock]"  # if you're on AWS
# pip install "anthropic[vertex]"   # if you're on Google Cloud
```

Basic setup:

```python
import asyncio

import anthropic

# Simple client - good for testing
client = anthropic.Anthropic()

# Async client - use this for production
async_client = anthropic.AsyncAnthropic()

# Production settings (learned the hard way)
client = anthropic.Anthropic(
    timeout=120.0,  # Computer Use is SLOW - 60s will time out
    max_retries=3,  # API randomly fails, retries save your sanity
)
```

Why a 120-second timeout? Because Computer Use takes forever. Each screenshot analysis takes 3-5 seconds minimum, and complex UI interactions can take 30+ seconds. Trust me, 60 seconds looks fine in testing but fails constantly in production. For reference, the SDK's own defaults are a 10-minute timeout and 2 retries, so 120 seconds is a deliberate cap: hung requests fail much sooner, but slow screenshot analysis still has room. The Python SDK documentation covers timeout configurations, and performance optimization guides explain why Computer Use needs longer timeouts than regular API calls.
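The SDK timeout applies to each request, but an agent loop makes many of them. You can pick an overall budget from the per-step numbers above; for example, 20 steps at up to 30 seconds each gives 600 seconds. The wrapper below is a hypothetical sketch of that idea. `step` stands for one screenshot/act round trip that returns `True` when the task is done.

```python
import time
from typing import Callable


def run_with_budget(
    step: Callable[[], bool],
    max_steps: int,
    budget_s: float,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Run step() until it reports done, the step cap is hit, or time runs out.

    The SDK timeout bounds each API call; this bounds the whole task.
    The budget is checked before each step, so one slow step can overrun it.
    """
    start = clock()
    for _ in range(max_steps):
        if clock() - start > budget_s:
            return "timed_out"
        if step():
            return "done"
    return "step_limit"
```

Injecting `clock` keeps the function testable without actually waiting.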

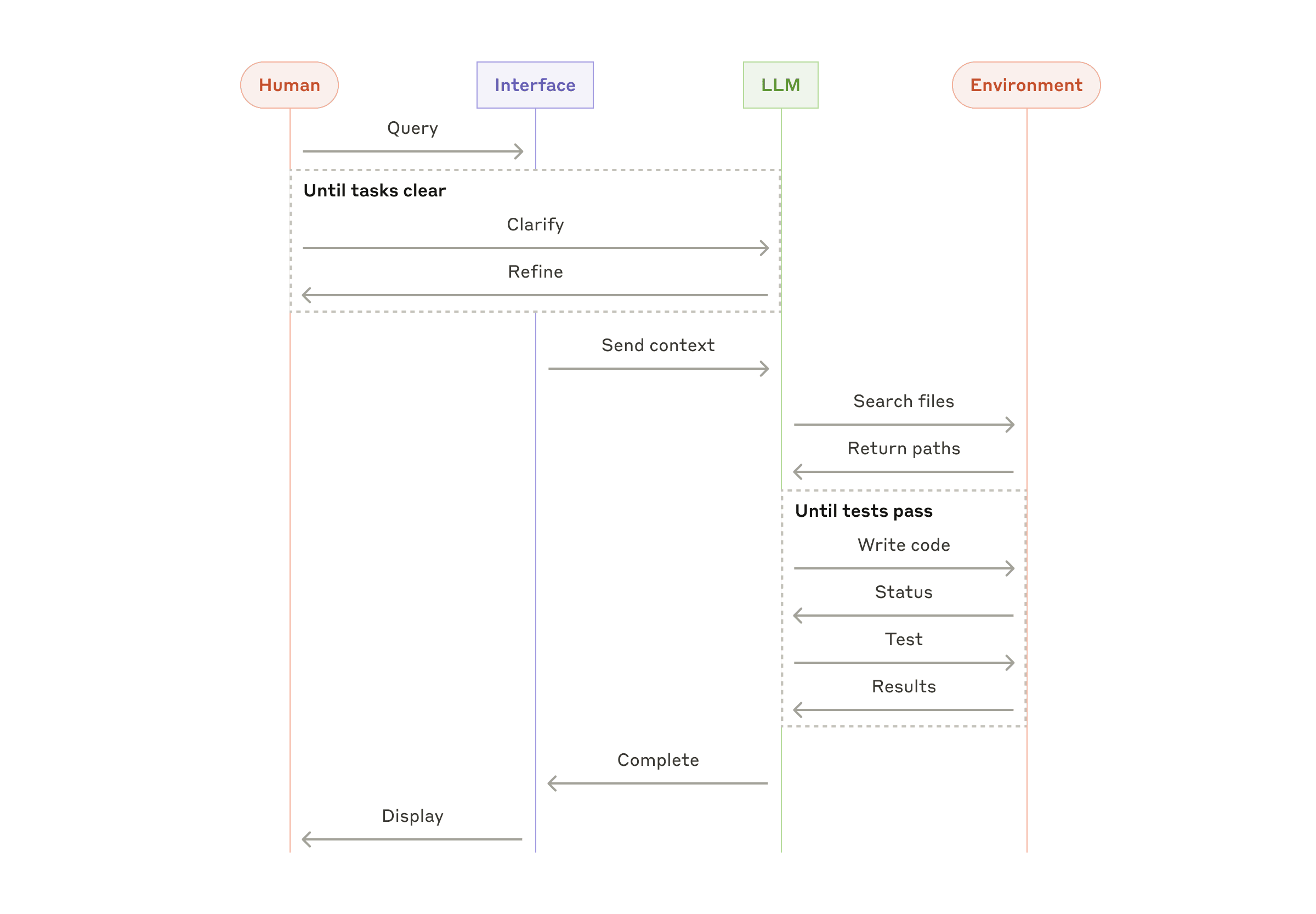

Combining Tools (When It Actually Works)

The real power comes from mixing Computer Use with other tools. Claude can click stuff, edit files, and run commands:

```python
tools = [
    {
        "type": "computer_20250124",  # Click things - latest version
        "name": "computer",
        "display_width_px": 1280,
        "display_height_px": 800,
    },
    {
        # Edit config files. Claude 4 models use this version and tool name;
        # text_editor_20250124 / str_replace_editor is the Claude 3.7 Sonnet variant.
        "type": "text_editor_20250429",
        "name": "str_replace_based_edit_tool",
    },
    {
        "type": "bash_20250124",  # Run shell commands - latest version
        "name": "bash",
    },
]
```
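Declaring the bash and editor tools doesn't run anything. Your code receives each `tool_use` request and has to carry it out. Here is a simplified sketch of two handlers, assuming the bash tool's input has a `command` field and the editor's `str_replace` command passes `path`, `old_str`, and `new_str`. Both function names are hypothetical. Run model-chosen shell commands only inside a sandboxed VM or container.

```python
import subprocess
from pathlib import Path
from typing import Any, Dict


def run_bash(tool_input: Dict[str, Any], timeout_s: float = 30.0) -> str:
    """Stateless stand-in for the bash tool: one subprocess per command.

    Anthropic's reference implementation keeps a persistent shell session;
    this sketch does not, so `cd` or exported variables won't carry over.
    """
    result = subprocess.run(
        tool_input["command"], shell=True, capture_output=True, text=True, timeout=timeout_s
    )
    return result.stdout + result.stderr


def str_replace(tool_input: Dict[str, Any]) -> str:
    """Handle the editor's str_replace command: old_str must match exactly once."""
    path = Path(tool_input["path"])
    text = path.read_text()
    old, new = tool_input["old_str"], tool_input.get("new_str", "")
    count = text.count(old)
    if count != 1:
        # Report back so Claude can retry with a more specific old_str.
        return f"Error: old_str found {count} times in {path}, expected exactly 1"
    path.write_text(text.replace(old, new, 1))
    return f"Edited {path}"
```

Returning an error string instead of raising lets Claude read the problem and adjust, which is the self-correcting behavior described below.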

```python
response = client.beta.messages.create(
    model="claude-sonnet-4-20250514",  # Use Sonnet 4, it's way better
    max_tokens=4096,  # Don't be stingy with tokens
    tools=tools,
    messages=[{
        "role": "user",
        "content": "Export this spreadsheet to CSV and upload it to that SFTP server",
    }],
    betas=["computer-use-2025-01-24"],  # Updated beta header
)
```
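A single `create` call only returns Claude's first move. When `stop_reason` is `"tool_use"`, you run the requested tools, send the results back as `tool_result` blocks, and call again. Here is a minimal sketch of that loop. `run_agent_loop` is a hypothetical helper. `create` is expected to behave like `client.beta.messages.create`, and `execute_tool` is whatever routes a tool name and input to your own handlers.

```python
from typing import Any, Callable, Dict, List


def run_agent_loop(
    create: Callable[..., Any],
    request: Dict[str, Any],
    execute_tool: Callable[[str, Dict[str, Any]], Any],
    max_turns: int = 20,
) -> Any:
    """Call the model until it stops asking for tools or max_turns is reached.

    execute_tool(name, input) returns the tool_result content: text, or a list
    of content blocks such as a screenshot image.
    """
    messages: List[Dict[str, Any]] = list(request["messages"])
    response = None
    for _ in range(max_turns):
        response = create(**{**request, "messages": list(messages)})
        if response.stop_reason != "tool_use":
            return response  # finished (or hit max_tokens); caller inspects it
        # Echo Claude's turn back, then answer every tool_use block in it.
        messages.append({"role": "assistant", "content": response.content})
        results = []
        for block in response.content:
            if block.type != "tool_use":
                continue
            try:
                output, is_error = execute_tool(block.name, block.input), False
            except Exception as exc:  # report failures to Claude instead of crashing
                output, is_error = f"Error: {exc}", True
            results.append({
                "type": "tool_result",
                "tool_use_id": block.id,
                "content": output,
                "is_error": is_error,
            })
        messages.append({"role": "user", "content": results})
    return response  # still wants tools after max_turns; treat as a failure upstream
```

Usage: `final = run_agent_loop(client.beta.messages.create, request_kwargs, my_execute_tool)`. Here `request_kwargs` holds the `model`, `max_tokens`, `tools`, `messages`, and `betas` from the call above, and `my_execute_tool` is your own dispatcher.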

This is where Computer Use gets scary good. Claude can navigate complex workflows, edit config files when something breaks, and even debug its own automation. I built a system that processes invoices by clicking through a web interface, downloading PDFs, extracting data with shell commands, and updating database records.

It worked for three months straight without intervention. When it finally broke (the vendor changed their login page), Claude figured out the new flow and adapted within two iterations. This adaptability is what sets Computer Use apart from traditional Selenium or Playwright automation, whose hard-coded selectors and scripted flows break whenever a site changes.

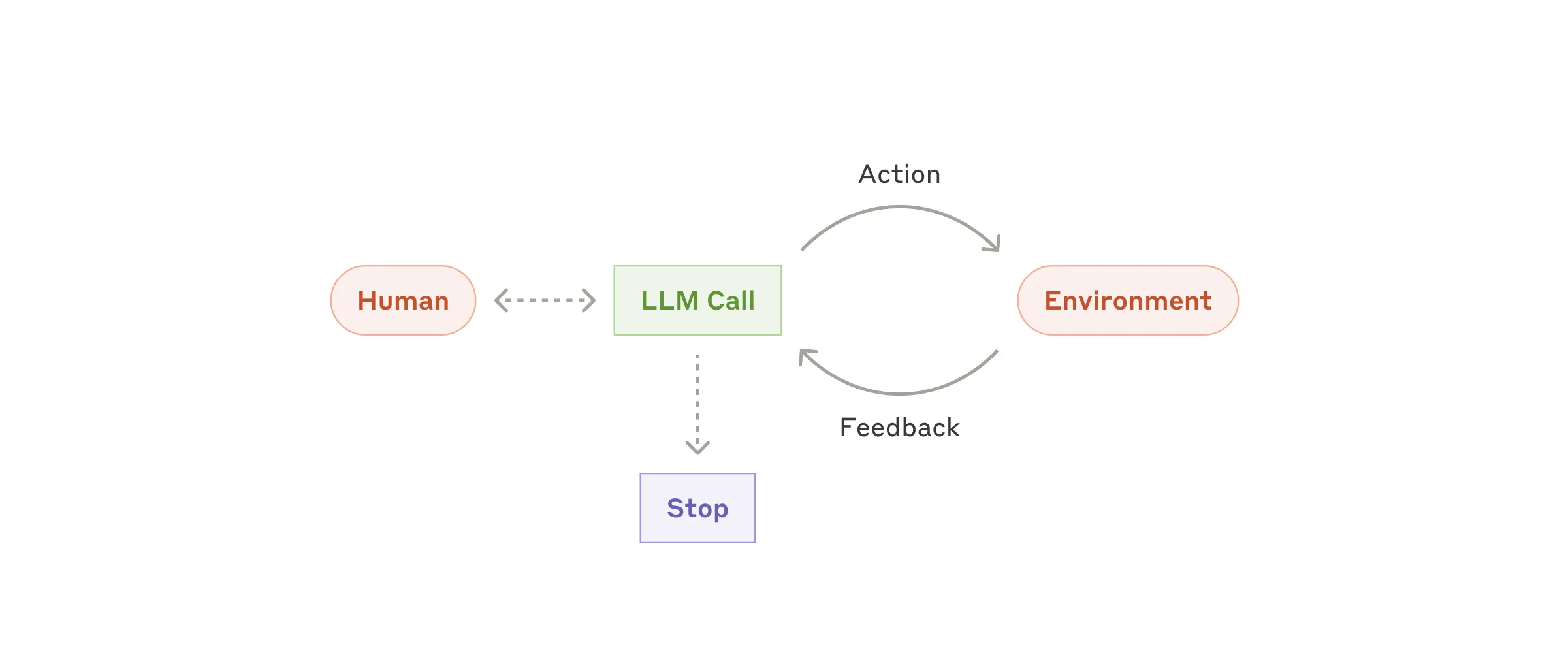

Anthropic's research on building effective agents explains the self-correcting patterns that make this possible. Check out their multi-agent research system for advanced patterns, and the Computer Use reference implementation for practical examples. The tool use documentation covers combining multiple tools effectively.