Look, I've deployed this stack 6 times and here's what actually matters vs what the docs tell you.

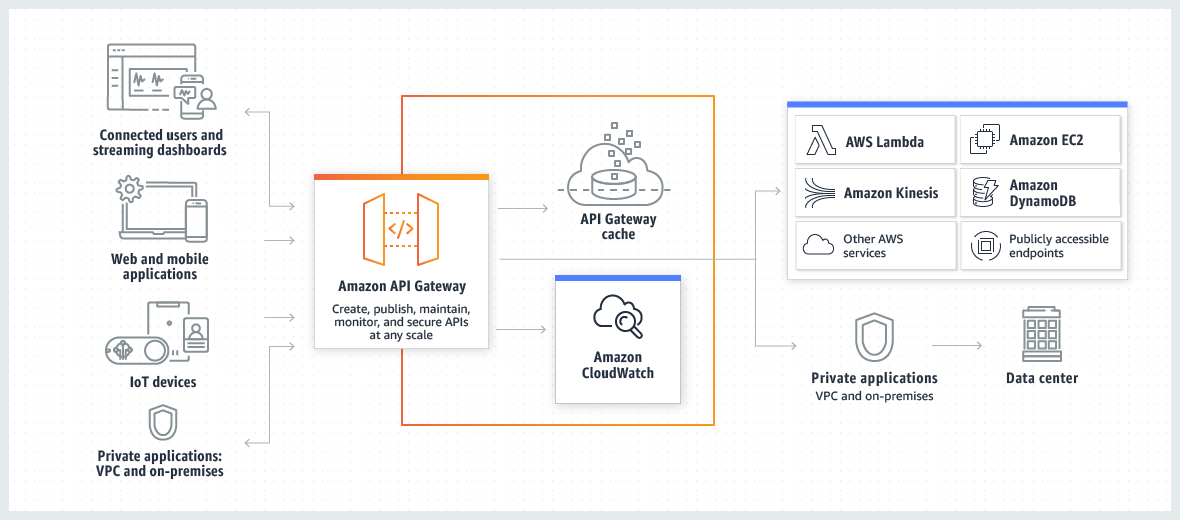

API Gateway: Skip the Fancy Shit

Everyone thinks they need Kong or Envoy. Unless you're Netflix handling millions of requests, just use your cloud provider's API gateway. AWS API Gateway works fine, costs almost nothing for normal traffic, and handles rate limiting without you having to get a networking PhD.

I spent 2 weeks setting up Kong before realizing AWS API Gateway did everything I needed in 20 minutes of clicking buttons. Kong's documentation is excellent if you enjoy reading doctoral dissertations about networking.

FastAPI: The Easy Part That's Actually Easy

FastAPI's async support is genuinely good for this. Unlike most Python frameworks that pretend to be async, this one actually works. Connection pooling happens automatically if you don't screw with the defaults.

The dependency injection sounds like fancy bullshit but it actually prevents connection hell later.

Here's the shit that breaks in production:

Timeout Hell: OpenAI sometimes takes 45+ seconds to respond. Your default timeout is probably 30s. Guess what happens? TimeoutError at 2am when you're dead asleep and your phone starts buzzing with alerts.

Set timeouts to 60s minimum:

client = AsyncOpenAI(timeout=60.0)

Memory Leaks: The OpenAI Python client doesn't close connections properly if you create new instances everywhere. Use dependency injection or watch your memory usage climb to 2GB within an hour.

Rate Limit Lies: OpenAI's error messages are garbage. "Rate limit exceeded" often means "your API key is wrong" or "you hit a quota limit, not a rate limit." Log the actual error details.

Security: Don't Store Keys in Code (Obviously)

Everyone knows not to put API keys in code. What they don't tell you:

- Environment variables in Docker can be seen by anyone with container access

- AWS Secrets Manager costs $0.40/secret/month but prevents security audits from failing

- Rotating keys breaks everything unless you plan for it

I learned this when our security team found hardcoded keys in a Docker image that was deployed to 50+ instances. Got the Slack message at 4am: SECURITY-2024-089: API keys exposed in production containers. Spent the next 6 hours rotating keys and rebuilding everything.

Caching: Redis or Your Bill Will Hurt

![]()

OpenAI charges per token. A simple chat can cost $0.06. Multiply by 1000 users and you're spending $60/day on repeated questions.

Redis caching with a 1-hour TTL reduced our costs by 70%. The setup takes 10 minutes:

## Don't overthink the cache key

cache_key = hashlib.md5(f\"{prompt}{model}\".encode()).hexdigest()

Cache Miss Reality: Our cache hit rate was 23% in production vs 80% in testing. Users ask the same question 50 different ways.

Database: PostgreSQL Unless You Have a Reason

MongoDB is trendy but PostgreSQL's JSONB columns handle conversation data just fine. You get ACID transactions and don't have to learn a new query language.

I migrated from MongoDB to PostgreSQL after our data team spent 2 weeks trying to debug one query that would've taken 5 minutes in SQL. Turns out $lookup with nested arrays is a fucking nightmare. SQL just works, everyone knows it, use it.

Connection Pool Size: Start with 20 connections max. More connections = more memory usage. 20 handles 200+ concurrent users easily.

Monitoring: Start Simple or Burn Out

![]()

Don't go crazy with monitoring on day one. Prometheus, Grafana, and all that enterprise stuff can wait. Start with:

- Sentry for errors (actually useful error tracking)

- Cloud provider metrics (they're free)

- Basic health checks that ping OpenAI

Add complexity when you actually need it, not because some blog post says you should.

Alert Fatigue: We started with 47 different alerts. After getting woken up 6 times in one night because "disk usage > 70%", we turned most off. Now we have 3 alerts that actually matter:

- API response time > 5 seconds

- Error rate > 5%

- OpenAI costs > $100/day

Deployment: Docker + Your Cloud Provider

![]()

Kubernetes is overkill unless you have a team of DevOps engineers. Use your cloud provider's container service:

- AWS App Runner: Works, scales automatically, costs reasonable

- Google Cloud Run: Cheap for low traffic, scales to zero

- Azure Container Instances: If you're already in Microsoft land

I spent way too long trying to learn Kubernetes before I realized Cloud Run does everything we actually needed for 1/10th the complexity.

Docker Gotcha: Multi-stage builds save money and time. A 2GB Python image becomes 200MB. Your deployments go from 5 minutes to 30 seconds.

Docker Networking Hell: The official FastAPI deployment guide skips the part where your containers can't talk to each other by default. Spent 3 hours debugging "connection refused" errors before realizing Docker Compose networking isn't the same as Docker networking. Add networks: to your compose file or enjoy the localhost debugging party.

What Actually Breaks in Production

![]()

- OpenAI API goes down (happens monthly) - implement fallback responses

- Rate limits hit during traffic spikes - queue requests instead of failing

- Memory usage grows over time - restart containers weekly

- Database connections leak - use connection pooling properly

- Costs spiral out of control - monitor token usage, not just requests

The stuff that keeps you up at night isn't the architecture diagrams. It's the 500 edge cases that only happen when real users touch your code.