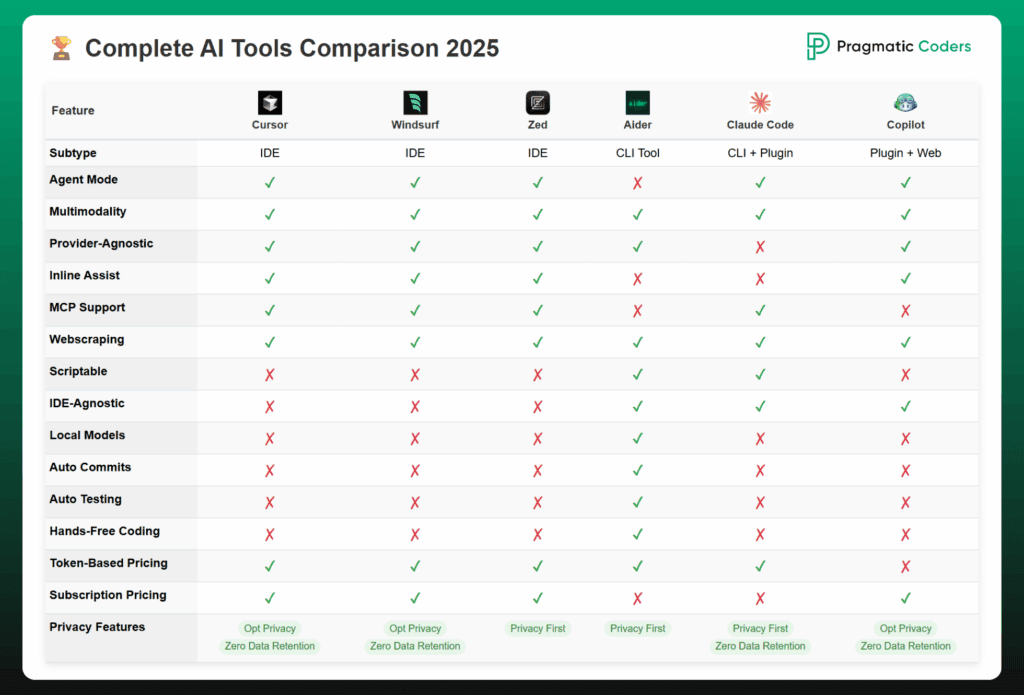

That comparison table above? It's the result of making the same mistake 6 times in the last year: downloading the hyped AI assistant, spending a week learning its quirks, then changing how I code to accommodate its limitations. This approach is completely backwards and will absolutely fuck your productivity.

The right tool should disappear into your existing workflow, not force you to rebuild it from scratch.

Here's what actually happened when I tested these tools on real projects:

Last month I was refactoring a React 18.2.0 codebase with 200+ components. GitHub Copilot kept suggesting class components and deprecated lifecycle methods. Cursor understood the entire project structure and suggested functional components with proper hooks. The difference wasn't subtle - Cursor saved me 3 days of work.

Two weeks ago, I was setting up AWS infrastructure for a client. Amazon Q suggested the exact ECS Fargate configuration I needed, including the VPC setup and security groups. GitHub Copilot suggested Docker commands that didn't work with AWS. Amazon Q knew I was working on AWS because it actually understands context.

The tools that work disappear into your workflow. The ones that don't make you want to throw your laptop.

The Workflows That Actually Matter (and Which Tools Don't Suck)

1. Rapid Prototyping & Experimentation

What this actually looks like: Building 5 different approaches to the same problem in 2 hours, deleting most of them, and iterating fast without thinking about "wasted" AI requests.

Why GitHub Copilot fails here: Hit the request limit during my last hackathon at 11 PM when I was on fire with ideas. Suddenly every suggestion took 30 seconds. Completely killed my flow state.

What actually works:

- Codeium - Unlimited free tier means I can prototype without watching a usage meter

- Windsurf - Built a working React component with just sketches and descriptions

Real experience: During a client proof-of-concept, I built 3 different data visualization approaches with Codeium in one afternoon. With Copilot, I would have hit limits after the first approach and spent time worrying about billing instead of solving the problem.

2. Large Codebase Maintenance (The Real Test)

What this actually looks like: Renaming a core function used in 47 files, refactoring database queries without breaking 15 different API endpoints, and keeping architectural patterns consistent across a team of 8 developers.

Why GitHub Copilot is fucking useless here: Suggested renaming getUserById to getUser in one file, then kept suggesting the old name in other files. Spent 2 hours fixing inconsistencies that Copilot created.
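Whatever tool you use, it's worth checking a rename yourself before trusting it. Here's a minimal sketch of that check: it walks a source tree and reports every whole-word use of the old name. The function name, the extension list, and the `node_modules` skip are my assumptions for a typical JavaScript/TypeScript repo, not anything a specific tool provides.

```python
import re
from pathlib import Path


def find_stale_references(root, old_name, extensions=(".js", ".jsx", ".ts", ".tsx")):
    """Return (path, line_number, line) for each whole-word use of old_name."""
    # \b keeps a search for getUserById from matching getUserByIdAndName.
    pattern = re.compile(rf"\b{re.escape(old_name)}\b")
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in extensions:
            continue
        # Assumption: third-party code lives in node_modules and is not ours to rename.
        if "node_modules" in path.parts:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for number, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                hits.append((str(path), number, line.strip()))
    return hits
```

Calling `find_stale_references("src", "getUserById")` after the rename should return an empty list. Anything it does return is a straggler the assistant missed. It's plain text matching, so it will also flag comments and strings, which is usually what you want during a rename.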

What works (barely):

- Cursor - Actually indexed our 2.3GB monorepo and suggested refactors that maintained consistency

- Sourcegraph Cody - Found every usage of a deprecated API across 12 microservices

War story: Migrating from Express 4 to 5 on a 3-year-old Node.js app. Cursor suggested the exact middleware changes needed and caught 3 breaking changes that would have caused production issues. GitHub Copilot suggested Express 3 patterns. Not kidding.

3. Team Development & Code Reviews (Chaos Management)

What this actually looks like: 6 developers all getting different AI suggestions for the same problem, code reviews that take 3x longer because everyone's AI generated different patterns, and junior developers following AI suggestions that contradict team standards.

Why GitHub Copilot creates chaos: Each dev gets personalized suggestions based on their usage. Sarah gets React hooks patterns, Jake gets class components, and the new hire gets deprecated APIs. Code reviews become "which AI pattern should we use?" discussions.

What reduces the chaos:

- JetBrains AI - Same IDE, same analysis engine, same suggestions across the team

- Tabnine Teams - Learns from your actual codebase, suggests your patterns consistently

Team disaster story: Our team used different AI tools for 2 months. Code reviews turned into philosophical debates about functional vs OOP patterns. Spent 4 hours in one review arguing about AI-generated error handling approaches. Now everyone uses the same tool.

4. Cloud-Native & Infrastructure Development (Where Context is King)

What this actually looks like: Configuring ECS tasks that need to talk to RDS, setting up VPC peering that doesn't break security groups, and writing Terraform that doesn't destroy production when you run terraform apply.

Why GitHub Copilot is dangerous here: Suggested aws-sdk-v2 imports when v3 has been standard for 2 years. Recommended security group rules that would open our database to the internet. I caught it, but a junior developer might not have.
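You shouldn't rely on a human catching this every time. Below is a rough sketch of an automated check for the "database open to the internet" mistake. The rule shape (`from_port`, `to_port`, `cidr_blocks`) is a simplified assumption loosely modeled on a Terraform ingress block, not a real AWS API response, and the port list is illustrative rather than complete.

```python
# Assumption: a short, illustrative list of default database ports.
DATABASE_PORTS = {
    3306: "MySQL",
    5432: "PostgreSQL",
    1433: "SQL Server",
    27017: "MongoDB",
    6379: "Redis",
}
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def find_public_database_rules(rules):
    """Flag ingress rules that expose a known database port to any address."""
    findings = []
    for rule in rules:
        # Skip rules that are restricted to specific networks.
        if not OPEN_CIDRS.intersection(rule.get("cidr_blocks", [])):
            continue
        for port, engine in DATABASE_PORTS.items():
            if rule["from_port"] <= port <= rule["to_port"]:
                findings.append(f"{engine} port {port} is open to the internet")
    return findings


rules = [
    {"from_port": 443, "to_port": 443, "cidr_blocks": ["0.0.0.0/0"]},
    {"from_port": 5432, "to_port": 5432, "cidr_blocks": ["0.0.0.0/0"]},
    {"from_port": 3306, "to_port": 3306, "cidr_blocks": ["10.0.0.0/16"]},
]
print(find_public_database_rules(rules))
```

With these sample rules, only the PostgreSQL rule is flagged: HTTPS is public on purpose, and the MySQL rule is limited to a private range. Something like this in CI means an AI-suggested rule gets reviewed by a script before a junior developer ships it.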

What actually understands AWS:

- Amazon Q - Suggested ECS service definitions that actually worked with our VPC setup

- Continue.dev with Claude - Claude 3.5 understands AWS better than Copilot

Production save: Amazon Q caught that my Terraform was using deprecated aws_instance arguments that would have failed in production. Suggested the correct instance_type and ami configuration. Saved me from a 3 AM deployment failure.

5. Security-Conscious Development (Paranoia Mode)

What this actually looks like: Working on banking software where your code literally cannot touch the internet, healthcare apps where HIPAA violations mean $50K fines, and government contracts where security audits take longer than development.

Why GitHub Copilot will get you fired: Your code context gets shipped to GitHub's (Microsoft's) cloud servers to generate suggestions. Had a security audit fail because Copilot was sending code snippets to the cloud. Compliance officer lost his shit. Had to remove it from 50 developer machines.

What works when you're paranoid:

- Continue.dev - Runs Llama 3.1 on our own servers, code never leaves the building

- Tabnine Enterprise - On-premises deployment with full audit logs

Compliance reality: Continue.dev setup took our DevOps team 3 weeks and $10K in server costs, but we passed the security audit. Sometimes paranoia is worth it.

The Hidden Costs That Will Fuck Your Productivity

Context Switching Tax: Copilot makes me explain my codebase to it every single day. "This is a React component that connects to our GraphQL API..." Cursor already knows this because it indexed my entire project on day one.

Suggestion Fatigue is Real: Rejecting 80% of suggestions is worse than getting no suggestions. My brain starts ignoring all AI suggestions when most are garbage. Takes weeks to trust AI again after a bad tool experience.

Team Inconsistency Nightmare: A junior developer followed Copilot's class component suggestions for 2 weeks before a code review caught it. Spent a day refactoring to hooks. Meanwhile, a senior developer was using Cursor and suggested completely different patterns.

Integration Hell: Copilot's JetBrains plugin feels like it was built by someone who's never used IntelliJ. Suggestions appear in the wrong places, keybindings conflict, and the IDE crashes randomly. JetBrains' own AI feels like part of the IDE.

How to Actually Pick the Right Tool (Not the Hyped One)

Week 1: Use your current shitty AI tool and track every time it pisses you off. Write it down. I had 23 incidents with Copilot in one week.

Week 2: Test alternatives on the SAME project, not hello-world tutorials. If you're doing React, test React. If you're doing DevOps, test infrastructure code.

Week 3: Measure reality, not feelings. Time how long tasks take. Count suggestion acceptance rates. I went from 30% acceptance with Copilot to 75% with Cursor.

Week 4: Check if you're still thinking about the tool. Good AI disappears into your workflow. Bad AI makes you constantly aware you're fighting a tool.
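If "measure reality" sounds like work, it isn't much. Here's a minimal sketch of the bookkeeping, assuming you tally suggestions shown, suggestions accepted, and incidents by hand or from whatever logs your editor gives you. The `ToolLog` class and the raw counts are hypothetical; the counts are chosen only to reproduce the 30% and 75% figures above.

```python
from dataclasses import dataclass


@dataclass
class ToolLog:
    name: str
    shown: int          # suggestions the tool displayed
    accepted: int       # suggestions you actually kept
    incidents: int = 0  # times the tool pissed you off

    @property
    def acceptance_rate(self) -> float:
        # Guard against dividing by zero for a tool you never used.
        return self.accepted / self.shown if self.shown else 0.0


def rank_by_acceptance(logs):
    """Return the logs sorted from highest to lowest acceptance rate."""
    return sorted(logs, key=lambda log: log.acceptance_rate, reverse=True)


# Hypothetical tallies for illustration only.
logs = [
    ToolLog("Copilot", shown=200, accepted=60, incidents=23),
    ToolLog("Cursor", shown=200, accepted=150),
]
for log in rank_by_acceptance(logs):
    print(f"{log.name}: {log.acceptance_rate:.0%} accepted, {log.incidents} incidents")
```

Acceptance rate alone can mislead, since accepting a suggestion and then rewriting half of it still counts as an accept. That's why the task timings from Week 3 matter alongside it.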

The nuclear test: Can you get productive work done when the AI is having a bad day? If your entire workflow depends on AI suggestions, you're fucked when the service goes down.

Final reality check: AI tools evolve rapidly and unpredictably. Copilot was less frustrating 6 months ago. Cursor might disappoint in 6 months. The secret isn't finding the perfect tool. It's finding one that fits your current workflow and staying ready to switch when it inevitably stops working.

Don't get emotionally attached to your AI assistant. It's a tool, not a relationship.