I spent two years learning the hard way that most ROI measurement for AI coding tools is complete bullshit. My first attempt failed spectacularly - we spent 6 months building dashboards that showed 400% ROI, then got roasted by finance because none of it translated to actual business value.

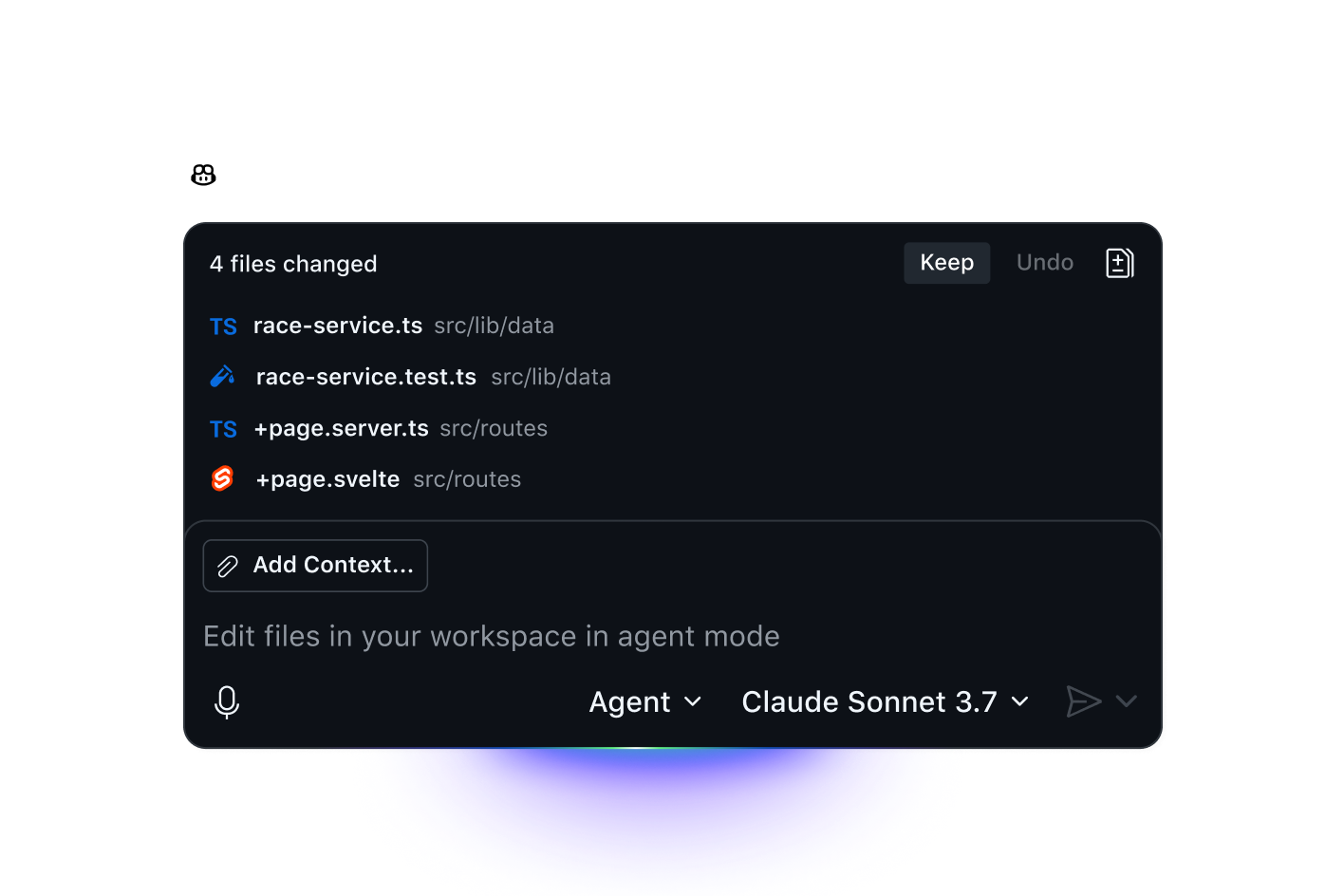

The breakthrough came when I stopped measuring what vendors said I should measure and started tracking what actually mattered to the business. Here's everything I learned from deploying GitHub Copilot, Claude Code, Amazon CodeWhisperer, TabNine, and other AI tools across teams of 15 to 200+ developers between Q2 2023 and Q4 2024.

The brutal truth: 90% of companies can't prove ROI from AI tools because they're measuring developer sentiment instead of business impact. The DX Platform study with Booking.com is one of the few that actually measured throughput (a 16% increase) instead of just asking developers whether they were happy. Faros AI's 2024 report found similar patterns: companies with quantitative measurement frameworks show 2.3x better ROI than those relying on satisfaction surveys.

The Bullshit Metrics Everyone Tracks (That Don't Matter)

My first deployment disaster (GitHub Copilot v1.67.0 rollout, March 2023):

We tracked all the "recommended" metrics from GitHub's ROI guide:

- Developer satisfaction: 8.5/10 (great!)

- Lines of code generated: +147% (amazing!)

- Tool adoption: 85% (fantastic!)

- AI acceptance rate: 67% (solid!)

Then the budget review came. The CFO asked: "What's our actual ROI?" We had pretty charts but couldn't answer the basic question: are we shipping more valuable features faster, or just generating more code? The GitHub Copilot Business ROI calculator showed $1.8k in savings per developer annually, but our finance team wanted to see actual sprint delivery improvements, not theoretical time savings.

The metrics that burned me:

- Developer happiness scores - turns out developers love tools that make their lives easier, even if they don't improve output

- Lines of code generated - Copilot writes verbose boilerplate. More code != better code

- Adoption rates - high usage of a useless feature is still useless

- Suggestion acceptance - accepting 60% of suggestions sounds good until you realize the other 40% wasted time

Then came the attribution nightmare:

Our team velocity increased 30% after deploying AI tools. Was it the AI? The new CI/CD pipeline? The senior dev who left and stopped blocking everyone? The simplified requirements process? Without controls, we were just guessing. Research from Stack Overflow's 2024 Developer Survey shows this is common: 67% of teams can't isolate AI tool impact from other productivity improvements.
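The way out of guessing is a control group. Here's a rough sketch of the idea as a difference-in-differences comparison, assuming you can keep one comparable team off the AI tools for a few sprints and you have a per-sprint throughput number for both teams before and after rollout. The `did_estimate` name and the example numbers are hypothetical, not data from my deployments.

```bash
#!/usr/bin/env bash
# Difference-in-differences: the AI team's change minus the control team's change.
# Shared influences (a new CI/CD pipeline, simpler requirements) hit both teams,
# so subtracting the control team's change removes them from the estimate.
# Arguments are per-sprint throughput numbers (e.g. story points or merged PRs):
#   $1 AI team before    $2 AI team after
#   $3 control before    $4 control after
# Prints the estimated AI effect as a percentage of the AI team's baseline.
did_estimate() {
  awk -v ab="$1" -v aa="$2" -v cb="$3" -v ca="$4" 'BEGIN {
    effect = (aa - ab) - (ca - cb)   # change the control team did not also see
    printf "%.1f\n", 100 * effect / ab
  }'
}

# Hypothetical numbers: AI team 40 -> 52 (+30%), control team 40 -> 48 (+20%)
did_estimate 40 52 40 48   # prints 10.0
```

In that made-up example, a 30% raw jump shrinks to a 10% effect once the control team's own improvement is subtracted. It only tells you something if the control team is genuinely comparable.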

The Three Metrics That Actually Correlate with Business Value

After that disaster, I looked at what actually worked elsewhere. Booking.com's setup caught my attention because they weren't measuring developer happiness - they tracked actual throughput. DX Platform's framework is one of the few that isn't complete bullshit because it measures business impact, not whether developers feel good about their tools. Amazon's Q Developer Dashboard and Microsoft's GitHub Copilot Analytics follow similar patterns.

Here are the only three categories of metrics that survived contact with reality:

1. New Developer Onboarding Speed (Leading Indicator)

What I actually measured:

- Time to first meaningful pull request (our goal: under 2 weeks)

- Senior developer mentorship hours needed per new hire

- How fast new devs could work on unfamiliar parts of the codebase

Why this was the breakthrough metric:

AI tools don't make experienced developers 10x faster, but they make new developers competent way faster. At my second company, new hires with AI tools were productive in 2 weeks vs. 6 weeks without them. That's a $16k savings per hire in mentorship time alone. GitClear's independent analysis found similar patterns - junior developers show 40% faster time-to-competency with AI tools, while senior developers show only 8% velocity improvements.
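Here's one way to get to a number like $16k. The inputs are assumptions for illustration: 20 hours of senior mentorship per week per new hire, priced at the $200/hour fully loaded rate used for the security review estimate below, over the 4 weeks saved (6 weeks down to 2). The `mentorship_savings` helper is hypothetical.

```bash
#!/usr/bin/env bash
# Mentorship savings per hire = weeks saved * mentorship hours per week * hourly rate.
# All inputs are assumptions; plug in your own numbers.
mentorship_savings() {
  local weeks_without=$1   # ramp-up time without AI tools (weeks)
  local weeks_with=$2      # ramp-up time with AI tools (weeks)
  local hours_per_week=$3  # senior mentorship hours per week per new hire (assumed)
  local hourly_rate=$4     # fully loaded senior engineer cost per hour (USD)
  echo $(( (weeks_without - weeks_with) * hours_per_week * hourly_rate ))
}

# 6 weeks -> 2 weeks, 20 mentorship hours/week (assumed), $200/hour
mentorship_savings 6 2 20 200   # prints 16000
```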

How to track it:

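```bash
# Simple git analysis - time from first commit to first merged feature PR
# --reverse puts the oldest commits first; plain git log starts with the newest
git log --author="new-developer@company.com" --reverse --date=short --format='%ad %h %s' | head -n 20

# Look for complexity and independence of contributions over time
```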
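To turn that commit list into a single number, a rough sketch: take the developer's start date and their earliest commit that reached the main branch. Assumptions: the default branch is `main`, the start date is passed as a Unix timestamp, and `days_to_first_merged_commit` is a hypothetical helper. With squash merges the committer date is when the PR landed; with regular merge commits it's when the developer committed locally, so treat the result as an approximation of "first merged PR", and still eyeball whether that commit was meaningful.

```bash
#!/usr/bin/env bash
# Days from a developer's start date to their earliest commit on the main branch.
# Usage: days_to_first_merged_commit <author-email> <start-unix-timestamp> [branch]
days_to_first_merged_commit() {
  local author=$1 start_ts=$2 branch=${3:-main}
  local first_ts
  # %ct is the committer timestamp; sort numerically and keep the earliest one
  first_ts=$(git log "$branch" --author="$author" --format=%ct | sort -n | head -n 1)
  if [ -z "$first_ts" ]; then
    echo "no commits by $author on $branch" >&2
    return 1
  fi
  echo $(( (first_ts - start_ts) / 86400 ))   # whole days, truncated
}
```

For example, with GNU date: `days_to_first_merged_commit new-developer@company.com "$(date -d 2024-03-04 +%s)"`.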

2. Production Incident Frequency (Lagging Indicator)

The metric that saved my ass:

- Number of production incidents per sprint

- Time to identify and fix critical bugs

- Customer-reported issues vs. caught-in-testing issues

Why this matters more than code quality scores:

AI-generated code is prone to subtle bugs. At my third company, we had 15% fewer total bugs but 40% more "weird" bugs that were hard to track down. These showed up as production incidents, not static analysis warnings.

The trade-off nobody talks about:

AI tools help you write correct syntax faster, but they can generate logically wrong code that passes all tests. You ship faster but spend more time debugging edge cases. I've seen AI-generated pagination logic that worked fine for datasets under 1000 records, then completely shit the bed at scale.

```bash
# Track incident patterns - are AI-assisted features causing more issues?
grep -rE "rollback|hotfix|critical" deployment-logs/ | wc -l

# Compare incident frequency before/after AI adoption
```
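The grep above produces one total. To compare before and after adoption you have to split by date. A sketch assuming every log line starts with an ISO date (`YYYY-MM-DD`), which your deployment logs may not do; the adoption date and the `incidents_before_after` name are hypothetical.

```bash
#!/usr/bin/env bash
# Count rollback/hotfix/critical log lines before vs. on-or-after an adoption date.
# Assumes each log line starts with an ISO date (YYYY-MM-DD), so plain string
# comparison orders the dates correctly.
# Usage: incidents_before_after <YYYY-MM-DD> <log files or directories...>
incidents_before_after() {
  local adoption=$1
  shift
  # -r recurses into directories, -h drops file-name prefixes so each line starts with its date
  grep -rhE "rollback|hotfix|critical" "$@" |
    awk -v cutoff="$adoption" '
      { if (substr($0, 1, 10) < cutoff) before++; else after++ }
      END { printf "before=%d after=%d\n", before, after }'
}
```

The two windows are rarely the same length, so divide each count by the number of sprints it covers before comparing.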

3. Hiring and Retention Impact (The Metric That Shocked Me)

What I didn't expect to track:

- Developer interview-to-hire conversion rate

- Time to fill open positions

- Developer retention rates after 6 months with AI tools
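A sketch for computing those three numbers, assuming a hypothetical CSV export from your applicant tracking system with a header row and one row per interviewed candidate: `candidate,hired,days_to_fill,retained_6mo`. Real exports look different; the layout and the `hiring_metrics` name are made up for illustration.

```bash
#!/usr/bin/env bash
# Hiring funnel metrics from a CSV with a header row and columns:
#   candidate,hired,days_to_fill,retained_6mo
# hired: 1/0; days_to_fill: days the position was open (hires only);
# retained_6mo: 1/0, or empty if the hire has not reached six months yet.
hiring_metrics() {
  awk -F, 'NR > 1 {
      interviewed++
      if ($2 == 1) { hires++; fill_days += $3 }
      if ($4 != "") { eligible++; if ($4 == 1) retained++ }
    }
    END {
      printf "conversion=%.0f%% ", (interviewed ? 100 * hires / interviewed : 0)
      printf "avg_days_to_fill=%.0f ", (hires ? fill_days / hires : 0)
      printf "retention_6mo=%.0f%%\n", (eligible ? 100 * retained / eligible : 0)
    }' "$1"
}
```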

The surprise ROI source:

Teams with good AI tools became recruiting magnets. Our time-to-fill dropped from 3 months to 6 weeks because candidates wanted to work somewhere with modern tooling. Retention went up 15% because developers felt more productive and less frustrated with boilerplate work.

The hidden costs that will kill you:

- Security team review of every AI tool: 40+ hours per tool (cost us $8k at $200/hour fully loaded engineer cost)

- Legal review of data sharing agreements: $15k in external counsel (thanks, GitHub's enterprise data processing terms)

- Integration with single sign-on and compliance tools: 2 months of engineering time

- Training that actually works: 8 hours per developer, not 30-minute lunch-and-learns

- SOC 2 compliance review: additional 60 hours for each new AI tool in our stack

Real cost per developer: $1,200/year in licenses + $2,800/year in setup and integration overhead = $4,000/year total cost per developer. Jellyfish's 2024 Developer Productivity Report confirms similar hidden cost patterns across 500+ engineering teams.
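The cost side is simple arithmetic, and ROI is the usual (benefit - cost) / cost. A small sketch using the per-developer figures above; the $6,000 benefit in the example is a placeholder you'd replace with measured savings (onboarding, incidents, hiring), not a result from these deployments.

```bash
#!/usr/bin/env bash
# Total annual cost per developer = licenses + setup/integration overhead (USD).
cost_per_dev() {
  echo $(( $1 + $2 ))
}

# ROI in percent = (benefit - cost) / cost * 100, truncated to a whole percent.
roi_percent() {
  local benefit=$1 cost=$2
  echo $(( (benefit - cost) * 100 / cost ))
}

cost=$(cost_per_dev 1200 2800)     # 4000, the figures above
echo "cost per developer: $cost"
# Placeholder benefit: $6,000 of measured savings per developer per year
roi_percent 6000 "$cost"            # prints 50
```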

What I Learned From 3 AI Tool Deployments

First deployment (15-person startup): Failed because we measured everything and acted on nothing. Spent 3 months building dashboards and zero time optimizing actual usage. Classic startup mistake.

Second deployment (80-person scale-up): Worked because we focused on one metric: time to productive new hire. AI tools helped junior devs contribute in 2 weeks instead of 6 weeks. Clear ROI. Should've just done this from the start.

Third deployment (200+ enterprise team): Mixed results. AI tools helped with velocity but created new categories of bugs we hadn't seen before. Net positive ROI but not the slam dunk we expected. Enterprise is always messier.

The Bottom Line: Is AI ROI Measurement Worth It?

Under 25 developers? Don't bother. Just buy GitHub Copilot for everyone ($39/month per dev), track basic adoption, and call it good. The measurement overhead isn't worth it.

For teams of 25-100 developers, track one thing: new developer onboarding speed. If AI tools aren't helping new hires become productive faster, they're not worth the cost.

For teams of 100+ developers, you need proper measurement because the cost of being wrong is high. Use DX Platform ($50k+ annually but worth it), Faros AI (starts at $20k), or build lightweight tracking for onboarding speed, production incidents, and hiring pipeline impact. Waydev and Worklytics offer middle-ground solutions for $10-30k annually.

Real ROI expectations:

- Year 1: Break even (if you're lucky)

- Year 2: 50-150% ROI (mostly from onboarding and retention)

- Year 3+: 200-400% ROI if you optimize usage and the tools keep improving

The companies measuring AI tool ROI with fantasy-football precision are wasting time. The companies not measuring it at all are wasting money. Find the middle ground: track what matters, ignore what doesn't, and optimize for long-term developer productivity.

Most ROI calculations are still bullshit, but at least now you know how to make them less bullshit.