Remember when GitHub Copilot launched at $10/month and everyone thought "finally, an AI tool that won't bankrupt us"? Yeah, that was adorable. Fast-forward to 2025, and engineering managers are staring at $66k+ annual bills wondering what the fuck just happened.

Here's the brutal math: GitHub Copilot Enterprise at $39/user sounds reasonable until you multiply by 100 developers and realize you just committed to $46,800 in subscriptions alone. But that's just the fucking down payment. Real TCO analyses show the actual damage: $66k+ annually once you account for all the implementation disasters, training time, security reviews, and operational overhead nobody warned you about.
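Here's that math as a quick sketch, so you can swap in your own seat count and tier. The only inputs are the numbers above. The $19,200 it leaves over is simply the gap between the license bill and the $66k TCO figure, not a breakdown of where that overhead actually goes.

```python
def annual_subscription_cost(seats: int, price_per_seat_month: float) -> float:
    """Yearly license spend for a flat per-seat plan."""
    return seats * price_per_seat_month * 12


def remaining_overhead(total_tco: float, subscription_cost: float) -> float:
    """Whatever part of total cost of ownership the license fee doesn't cover."""
    return total_tco - subscription_cost


licenses = annual_subscription_cost(seats=100, price_per_seat_month=39)
overhead = remaining_overhead(total_tco=66_000, subscription_cost=licenses)
print(f"Licenses:        ${licenses:,.0f}")  # Licenses:        $46,800
print(f"Everything else: ${overhead:,.0f}")  # Everything else: $19,200
```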

Why advertised pricing is complete bullshit

Remember when tools had predictable per-seat licensing? Those days are dead. AI tools love usage-based pricing that burns through budgets faster than you can say "GPT-4 API call."

Token costs will fuck your budget. At OpenAI's list prices, GPT-4o runs about $2.50 per million input tokens and $10 per million output tokens (GPT-4o Mini is much cheaper at $0.15 input/$0.60 output per million, but the quality drops hard). Sounds cheap until your power users start generating thousands of lines of AI code daily. I watched one senior dev blow through $280 in API costs during a three-day refactoring sprint because nobody thought to set limits on the team's shared account.
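A spend cap on that shared account would have stopped the sprint early. Below is a minimal sketch of a per-developer budget guard, assuming the GPT-4o list prices hardcoded at the top (check them against OpenAI's current pricing; they change). `SpendCap` and `request_cost` are hypothetical helpers, not part of any OpenAI SDK, and the token counts would come from whatever usage data your API responses report.

```python
from dataclasses import dataclass

# ASSUMPTION: GPT-4o list prices in USD per million tokens at time of writing.
INPUT_PRICE_PER_M = 2.50
OUTPUT_PRICE_PER_M = 10.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one API call at the assumed per-million-token prices."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


@dataclass
class SpendCap:
    """Hypothetical per-developer budget guard for a shared API account."""
    limit_usd: float
    spent_usd: float = 0.0

    def charge(self, input_tokens: int, output_tokens: int) -> bool:
        """Record the call and return True if it fits under the cap; otherwise refuse it."""
        cost = request_cost(input_tokens, output_tokens)
        if self.spent_usd + cost > self.limit_usd:
            return False
        self.spent_usd += cost
        return True


# A big refactoring prompt: 100k tokens in, 20k tokens out.
print(round(request_cost(100_000, 20_000), 2))  # 0.45

cap = SpendCap(limit_usd=50.0)
accepted = sum(cap.charge(100_000, 20_000) for _ in range(200))
print(accepted)  # 111
```

In practice you'd call `charge` before forwarding each request and reject or queue anything it refuses. For scale: at these assumed prices, $280 is about 28 million output tokens.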

Developers go rogue with multiple tools. While you're budgeting for GitHub Copilot, your team is secretly using ChatGPT Plus, Claude Pro, and Cursor simultaneously. Each dev might have 3-4 AI subscriptions running because they found different tools work better for different tasks. Shadow IT is real, and it's expensive.
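To see why that matters, here's the worst case sketched out: every developer paying for three consumer tools on top of the sanctioned one. The $20/month figures are assumptions based on those plans' individual list prices at time of writing; plug in whatever actually shows up on your expense reports.

```python
# ASSUMPTION: monthly list prices in USD for individual plans; use your real expense data.
SHADOW_TOOLS = {
    "ChatGPT Plus": 20,
    "Claude Pro": 20,
    "Cursor Pro": 20,
}


def annual_shadow_spend(developers: int, monthly_prices: dict[str, float]) -> float:
    """Yearly cost if every developer pays for each listed tool out of pocket or via expenses."""
    return developers * sum(monthly_prices.values()) * 12


print(annual_shadow_spend(100, SHADOW_TOOLS))  # 72000
```

In that worst case it's $72,000 a year, more than the $46,800 Copilot Enterprise bill, and it lands on expense reports instead of the AI tooling budget.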

Security team will require 47 compliance reviews. Want to use these tools in production? Get ready for security audits, compliance reviews, data isolation requirements, and network restrictions that nobody budgeted for. On-premises deployments can double your infrastructure costs overnight.

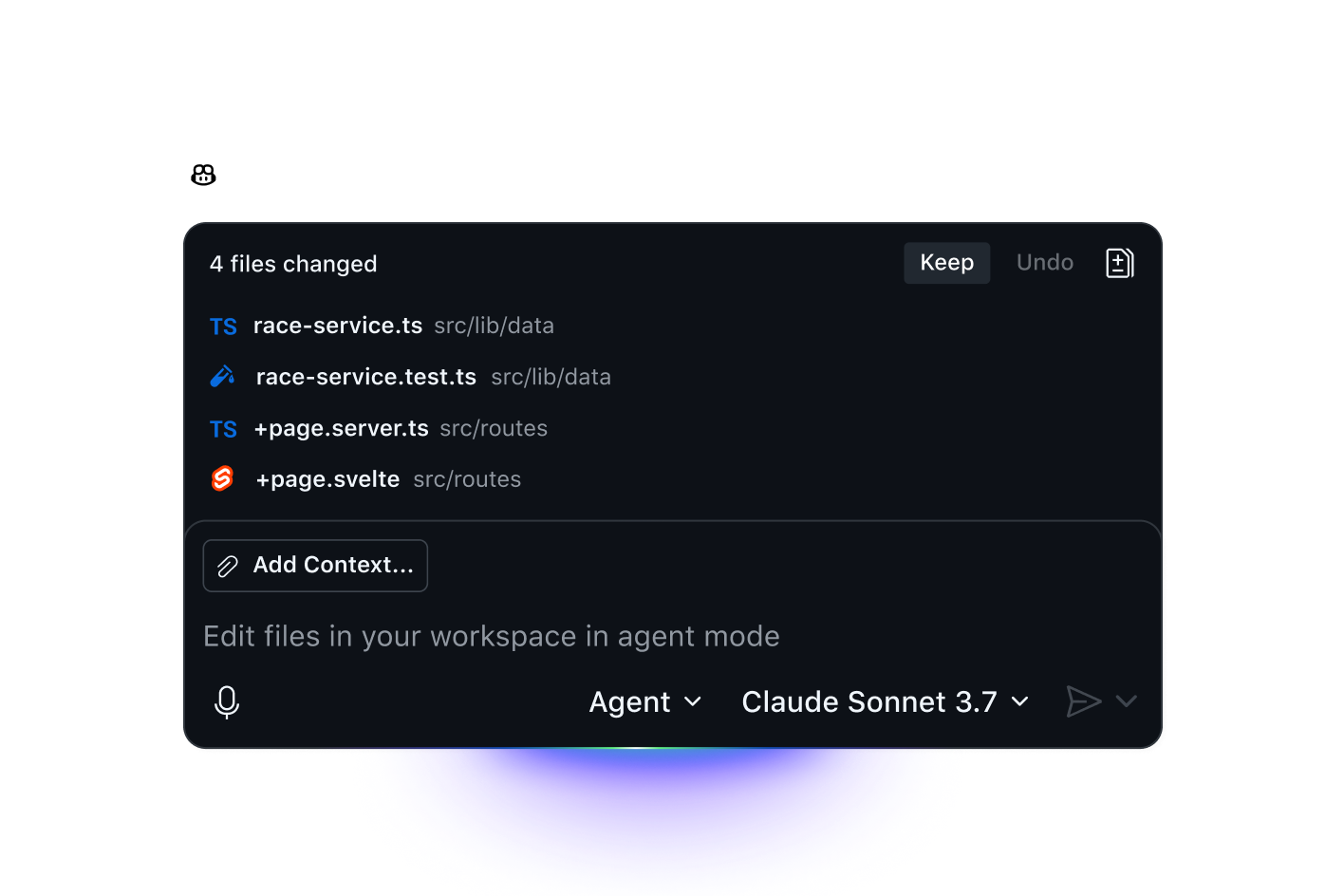

Market Landscape Analysis


The AI coding market is basically a handful of big players screwing enterprises in different ways:

GitHub Copilot leads the market with 77,000+ organizations. Their enterprise pricing at $39 per user monthly includes advanced features like chat personalized to codebases, documentation search, and pull request summaries. Its integration with existing GitHub workflows also makes it painful to switch away.

Cursor wants to replace your entire IDE. At $20/month for Pro ($40/user for Teams), they're betting you'll abandon VS Code for their AI-first editor. Good luck convincing your team to switch: I've tried this migration twice, and both times half the team kept using VS Code anyway while we kept paying for their Cursor licenses.

Tabnine promises privacy but their suggestions are mediocre compared to Copilot. You pay extra for worse code completion just to keep lawyers happy.

Amazon Q Developer pitches tight AWS ecosystem integration at $19/user/month for the Pro tier, and its code transformation feature is billed at $0.003 per line of code beyond the included allowance.
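Transformation overage is easy to underestimate on a big migration, so here's a rough monthly cost model. The seat price and per-line rate come from the paragraph above. `included_lines` is a parameter because the size of the allowance (and whether it's pooled across users) is something to confirm in AWS's pricing docs; the 400,000 used below is purely illustrative.

```python
def q_developer_monthly_cost(
    users: int,
    lines_transformed: int,
    included_lines: int,
    seat_price: float = 19.0,
    overage_per_line: float = 0.003,
) -> float:
    """Pro-tier seat fees plus code-transformation overage for one month.

    included_lines is an ASSUMPTION to fill in from AWS's pricing page.
    """
    overage_lines = max(0, lines_transformed - included_lines)
    return users * seat_price + overage_lines * overage_per_line


# 100 users migrating a 1M-line codebase in one month, with an illustrative 400k-line allowance.
cost = q_developer_monthly_cost(users=100, lines_transformed=1_000_000, included_lines=400_000)
print(f"${cost:,.2f}")  # $3,700.00
```

Seats are $1,900 of that; the other $1,800 is overage, which is why a one-off migration month can cost nearly double a normal one.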

Newer entrants like Windsurf (formerly Codeium) provide competitive pricing starting at $15/month with generous credit allocations, targeting price-sensitive enterprise buyers.

Why half your team won't actually use the damn thing

Marketing promises 50-100% productivity gains, but here's the ugly truth: even high-performing organizations only hit 60-70% adoption rates. You're paying for 100 seats while maybe 65 developers actually use the tools daily.
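The cleanest way to show finance what partial adoption does is to divide the license bill by the people actually using the tool. A small sketch, using the 100 seats, $39 tier, and 65% adoption from this section:

```python
def cost_per_active_user(seats: int, price_per_seat_month: float, adoption_rate: float) -> float:
    """Annual license spend divided by the developers who actually use the tool."""
    if not 0 < adoption_rate <= 1:
        raise ValueError("adoption_rate must be in (0, 1]")
    annual_spend = seats * price_per_seat_month * 12
    return annual_spend / (seats * adoption_rate)


list_price = 39 * 12
effective = cost_per_active_user(seats=100, price_per_seat_month=39, adoption_rate=0.65)
print(f"List: ${list_price}/yr, effective: ${effective:,.0f}/yr")  # List: $468/yr, effective: $720/yr
```

That's $720 per active developer per year instead of the $468 list price, roughly a 54% markup nobody signed off on.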

GitHub's own research with Accenture found similar adoption challenges despite decent productivity gains for active users. The 2025 Stack Overflow survey points to the real problem: positive sentiment toward AI tools fell from over 70% to 60%. Turns out when AI suggestions are wrong often enough, experienced engineers stop trusting them.

90% of employees are using AI tools outside your approved list, creating Shadow IT nightmares. While you're managing GitHub Copilot licenses, your team is paying for ChatGPT Plus, Claude Pro, and whatever new AI tool hit Product Hunt this week. Cost management studies show enterprises struggling with duplicate subscriptions they don't even know about.
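Finding those duplicate subscriptions usually starts with expense data. Here's a minimal sketch that flags employees expensing AI tools outside the approved one. The rows, the vendor lists, and `find_shadow_ai_spend` are all hypothetical, and real merchant strings on card statements are far messier than exact names, so expect to normalize them first.

```python
from collections import defaultdict

# ASSUMPTION: vendor names and the approved tool are illustrative.
APPROVED = {"GitHub Copilot"}
AI_VENDORS = {"GitHub Copilot", "ChatGPT Plus", "Claude Pro", "Cursor Pro"}

# Hypothetical expense-report rows: (employee, vendor, monthly_usd).
expenses = [
    ("alice", "ChatGPT Plus", 20),
    ("alice", "Claude Pro", 20),
    ("alice", "Cursor Pro", 20),
    ("bob", "ChatGPT Plus", 20),
    ("carol", "Figma", 15),
]


def find_shadow_ai_spend(rows):
    """Map each employee expensing unapproved AI tools to (sorted tools, monthly total)."""
    tools = defaultdict(set)
    spend = defaultdict(float)
    for employee, vendor, monthly_usd in rows:
        if vendor in AI_VENDORS and vendor not in APPROVED:
            tools[employee].add(vendor)
            spend[employee] += monthly_usd
    return {emp: (sorted(tools[emp]), spend[emp]) for emp in tools}


for emp, (vendors, total) in sorted(find_shadow_ai_spend(expenses).items()):
    print(emp, vendors, total)
# alice ['ChatGPT Plus', 'Claude Pro', 'Cursor Pro'] 60.0
# bob ['ChatGPT Plus'] 20.0
```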

Bottom line: you pay for 100% of seats, get 65% adoption, and ROI timelines stretch 6-12 months longer than anyone projected. This pattern shows up consistently across organizations.

The solution isn't more comprehensive frameworks or ROI measurement strategies. It's understanding that these tools require significant change management and realistic adoption expectations. Start with pilots, measure actual usage, and budget for the learning-curve chaos.
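Measuring actual usage during a pilot can be as simple as counting who touched the tool recently. The sketch below assumes a hypothetical export of last-activity timestamps per licensed seat (admin consoles, GitHub's Copilot seat management included, generally report something like this, but the structure here is invented) and a 30-day activity window, which is a judgment call rather than a standard.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

Seats = dict[str, Optional[datetime]]

# Hypothetical seat records: who holds a license and when they last used it (None = never).
now = datetime(2025, 6, 30, tzinfo=timezone.utc)
seats: Seats = {
    "alice": now - timedelta(days=1),
    "bob": now - timedelta(days=45),
    "carol": None,
    "dave": now - timedelta(days=3),
}


def adoption_rate(last_activity: Seats, as_of: datetime, window_days: int = 30) -> float:
    """Share of licensed seats with any activity inside the trailing window."""
    if not last_activity:
        return 0.0
    cutoff = as_of - timedelta(days=window_days)
    active = sum(1 for ts in last_activity.values() if ts is not None and ts >= cutoff)
    return active / len(last_activity)


def idle_seats(last_activity: Seats, as_of: datetime, window_days: int = 30) -> list[str]:
    """Seats with no activity in the window: candidates to reclaim before scaling up."""
    cutoff = as_of - timedelta(days=window_days)
    return sorted(user for user, ts in last_activity.items() if ts is None or ts < cutoff)


print(adoption_rate(seats, now))  # 0.5
print(idle_seats(seats, now))     # ['bob', 'carol']
```

Seats that stay idle through the whole pilot are the first ones to reclaim before you expand.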