Windsurf basically keeps getting hungrier the longer you use it. It starts out around 500MB when I first fire it up, but give it a few hours and it's chomping through 3-4GB like it's nothing.

Here's what I've figured out from actually using this thing:

The Real Memory Problem

Windsurf's Cascade feature is pretty smart - it remembers stuff about your project and keeps context from your conversations. But "remembering" means storing that shit in memory, and it never seems to forget anything.

The memory usage breakdown on my 2023 MacBook Pro looks like this:

- Fresh startup: ~500MB (version 1.0.7, not terrible)

- After 2 hours of work: 1.5-2GB (getting heavy, fans start up)

- End of day session: 3-4GB (everything else starts swapping to disk)

The stuff that really kills performance:

- Big codebases make the indexing go nuts

- Every Cascade conversation adds to the memory pile

- Multiple projects open? Forget about it

- Working on a React app with `node_modules`? Good luck

What Actually Works to Fix This

1. Just Restart the Damn Thing

Sounds dumb as hell, but restarting Windsurf every 3-4 hours is the only real fix. Yeah, you lose your conversation history, but your machine stops dying.

Found this out the hard way when Windsurf hit 6GB during a deadline push and took down my Docker containers because the system ran out of memory. Lost 20 minutes of work because I forgot to save. Now I restart religiously - lunch break and end of day, minimum.

2. Make Windsurf Ignore the Junk

Create a `.codeiumignore` file in your project root and tell it to skip the bloat:

node_modules/

dist/

build/

.next/

coverage/

*.log

public/uploads/

This alone cut my memory usage by like 30%. All that generated crap doesn't need AI analysis.
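If you want a rough number for your own project rather than trusting my 30%, here's a small Python sketch that counts how many files those patterns would cover. The matching is a simplification I made up for illustration (directory patterns match by name or root-relative path, everything else is an `fnmatch` on the file name). It is not Windsurf's actual ignore logic, which I'm assuming is gitignore-style.

```python
import fnmatch
import os

# Patterns copied from the .codeiumignore above.
PATTERNS = [
    "node_modules/", "dist/", "build/", ".next/",
    "coverage/", "*.log", "public/uploads/",
]


def is_ignored(rel_path, patterns=PATTERNS):
    """Return True if a project-relative file path matches any pattern.

    Simplified matching (an assumption, not Windsurf's real rules):
    - "name/" matches a directory called name at any depth
    - "a/b/" matches the directory a/b relative to the project root
    - anything else is matched against the file name with fnmatch
    """
    parts = rel_path.replace(os.sep, "/").split("/")
    dirs, name = parts[:-1], parts[-1]
    dir_path = "/".join(dirs)
    for pat in patterns:
        if pat.endswith("/"):
            target = pat.rstrip("/")
            if "/" in target:
                if dir_path == target or dir_path.startswith(target + "/"):
                    return True
            elif target in dirs:
                return True
        elif fnmatch.fnmatch(name, pat):
            return True
    return False


def ignored_share(root, patterns=PATTERNS):
    """Walk root and return (ignored_file_count, total_file_count)."""
    ignored = total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            rel = os.path.relpath(os.path.join(dirpath, filename), root)
            total += 1
            if is_ignored(rel, patterns):
                ignored += 1
    return ignored, total


if __name__ == "__main__":
    ignored, total = ignored_share(".")
    pct = 100 * ignored / total if total else 0.0
    print(f"{ignored} of {total} files ({pct:.0f}%) match the ignore patterns")
```

Run it from the project root. File count is only a proxy for what the indexer has to chew through, so treat the percentage as a hint, not a measurement of memory saved.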

3. Close Projects You're Not Using

Windsurf keeps indexing stuff even when you're not actively working on it. I learned to close projects instead of just switching between them. Memory usage drops immediately.

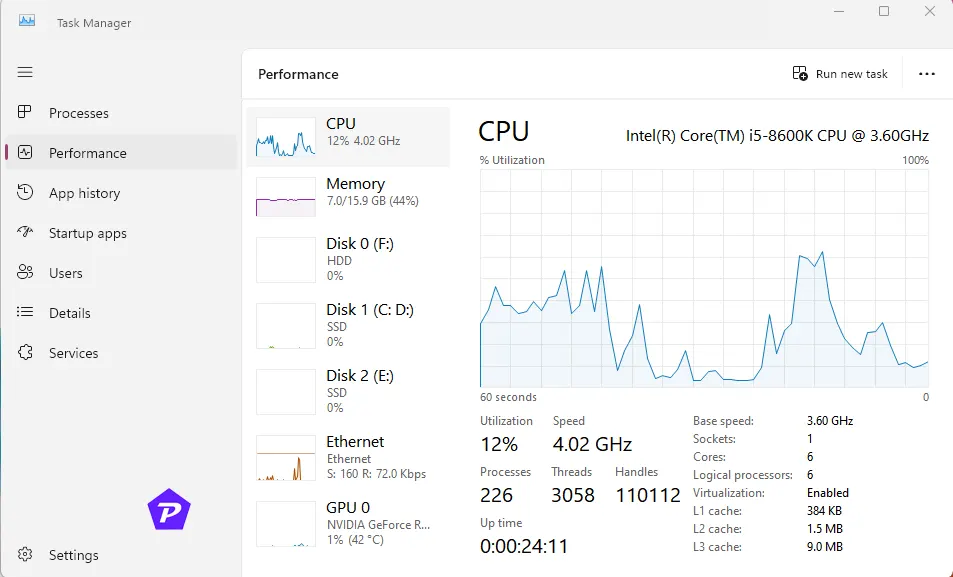

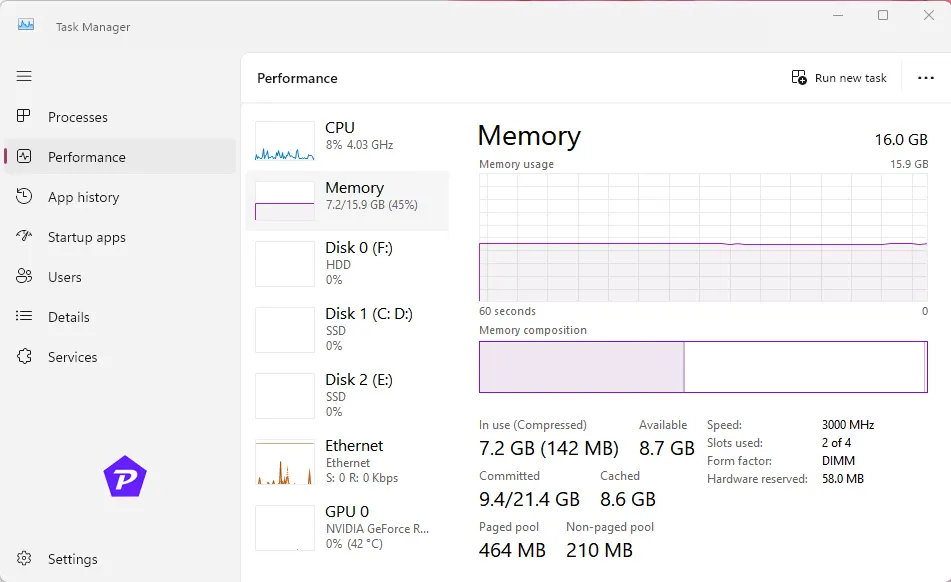

4. Monitor Your RAM Usage

On Mac, I just keep Activity Monitor open on the side. When Windsurf hits 3GB+, that's my cue to restart it before things get ugly.

On Windows: Task Manager works fine. Linux folks probably already know this stuff.
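If you'd rather not eyeball Activity Monitor, here's a rough Python sketch for macOS and Linux that adds up the resident memory of everything that looks like a Windsurf process. The assumption is that the process command contains "windsurf" somewhere (app bundle path on Mac, binary name on Linux), so check what `ps` actually shows on your machine. Summing RSS also double-counts memory shared between helper processes, so the total runs a bit high.

```python
import subprocess


def total_rss_mb(ps_output, needle="windsurf"):
    """Sum resident memory (in MB) of processes whose command contains needle.

    Expects one process per line: RSS in kilobytes, then the command,
    which is what `ps -ax -o rss= -o comm=` prints.
    """
    total_kb = 0
    for line in ps_output.splitlines():
        fields = line.strip().split(None, 1)
        if len(fields) != 2 or not fields[0].isdigit():
            continue  # skip blank or malformed lines
        rss_kb, command = fields
        if needle.lower() in command.lower():
            total_kb += int(rss_kb)
    return total_kb / 1024


def windsurf_memory_mb():
    """Run ps and total up every process that looks like Windsurf."""
    result = subprocess.run(
        ["ps", "-ax", "-o", "rss=", "-o", "comm="],
        capture_output=True, text=True, check=True,
    )
    return total_rss_mb(result.stdout)


if __name__ == "__main__":
    used = windsurf_memory_mb()
    limit = 3 * 1024  # the 3GB restart cue mentioned above
    status = "time to restart" if used >= limit else "ok"
    print(f"Windsurf: {used:.0f} MB ({status})")
```

Stick it in a cron job or a shell loop if you want a nag instead of a glance.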

Working with Large Codebases

If you're working on anything bigger than a typical side project, Windsurf's default behavior will murder your machine. I found this out on a 300k line Rails app where Windsurf spent 45 minutes indexing on startup and used 8GB of RAM before I could even write a line of code.

For big codebases:

- Be super aggressive with `.codeiumignore`

- Focus Cascade on one feature at a time instead of the whole codebase

- Use specific file mentions (@filename) instead of letting it crawl everything

- Consider breaking your work into smaller, focused sessions
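To figure out what to be aggressive about, it helps to know where the files actually are. This Python sketch ranks top-level directories by file count, so the biggest ones become candidates for `.codeiumignore`. File count is my stand-in for indexing cost, which is an assumption. I don't know how Windsurf actually weighs things.

```python
import os
from collections import Counter


def heaviest_dirs(root, top=5):
    """Return [(dir_name, file_count)] for the top-level dirs with most files."""
    counts = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        rel = os.path.relpath(dirpath, root)
        if rel == ".":
            continue  # files sitting directly in the root are not counted
        top_level = rel.split(os.sep)[0]
        counts[top_level] += len(filenames)
    return counts.most_common(top)


if __name__ == "__main__":
    for name, n_files in heaviest_dirs("."):
        print(f"{n_files:>8}  {name}/")
```

Anything near the top that's generated, vendored, or uploaded content probably doesn't need AI analysis.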

When Teams Use It

The memory problem gets way worse with team setups. Everyone's indexing, everyone's context is mixing together, and suddenly Windsurf is using 6-8GB.

Team survival tips:

- Coordinate who's using the heavy AI features when

- Set up dedicated dev machines with more RAM if you can swing it

- Consider using it in smaller, focused sessions rather than all-day marathon coding

Network Performance Issues

Corporate networks will absolutely wreck Windsurf's performance. The AI responses that normally take 2-3 seconds start taking 15-20 seconds or just hanging forever.

Spent a whole morning thinking Windsurf was broken until I realized our IT department had blocked the Codeium API endpoints. The error messages were useless - just said "network error" instead of "your IT team hates AI."

Corporate network fixes:

- Ask your IT to whitelist `*.windsurf.com` and `*.codeium.com`

- If you're behind a proxy, Windsurf's settings need to know about it

- Sometimes VPN routing screws things up - try connecting directly if possible
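Before blaming Windsurf, a quick check from the same machine can save you a morning. This Python sketch tries a plain TCP connection on port 443 and prints whatever proxy settings Python detects. The two hostnames are just the bare domains from the whitelist above, picked as hypothetical probe targets. The endpoints Windsurf really talks to may be different subdomains, and a successful TCP connect doesn't rule out TLS inspection or API-level blocking.

```python
import socket
import time
import urllib.request

# Hypothetical probe targets: the bare domains from the whitelist above.
HOSTS = ["windsurf.com", "codeium.com"]


def probe(host, port=443, timeout=5.0):
    """Try a direct TCP connection; return (reachable, seconds_elapsed)."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, time.monotonic() - start
    except OSError:  # covers DNS failures, timeouts, and refused connections
        return False, time.monotonic() - start


if __name__ == "__main__":
    # Proxy settings Python picks up from the environment or the OS.
    print("proxies:", urllib.request.getproxies() or "none detected")
    for host in HOSTS:
        ok, elapsed = probe(host)
        state = "reachable" if ok else "blocked or unreachable"
        print(f"{host}: {state} ({elapsed:.2f}s)")
```

If the direct connection fails but a proxy shows up in the output, that's a decent hint the traffic has to go through the proxy and Windsurf needs to be told about it.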

The Bottom Line

Windsurf is genuinely useful when it works, but it's not optimized for long coding sessions. The memory leaks are real, and there's no magic setting that completely fixes it.

My workflow now:

- Start Windsurf

- Work for 3-4 hours max

- Restart it during breaks

- Keep `.codeiumignore` files in every project

- Close unused projects aggressively

It's annoying, but until they fix the underlying memory issues, this is what actually keeps it usable. Way better than fighting with a 6GB editor that's crawling along.