Most people use Windsurf wrong. They ask for a function, copy-paste whatever it gives them, then wonder why their app crashes. That's not using AI - that's just paying $15/month to generate broken code faster.

I've been using Windsurf for actual production work since Wave 2 and have shipped maybe a dozen features with it. Here's the workflow that doesn't make you want to throw your laptop out the window. It builds on the official workflow guide and community best practices.

The Workflow That Doesn't Suck

Don't Just Start Typing, Figure Out What You're Building

Biggest mistake: Opening Windsurf and immediately asking it to write a function. Cascade will happily generate code that makes no sense for your project.

Before I even open Cascade, I spend 5-10 minutes getting my head straight:

What exactly am I building? Not "add auth" but "users can sign up with email, log in, reset passwords when they forget them, and stay logged in for a week"

What's already there? I actually read my existing codebase. Crazy concept, right? What database are we using, what's the folder structure, are we already doing auth somewhere else?

Write down the rules: The `.windsurfrules.md` file is where you tell Cascade how your project actually works. Most people skip this, then wonder why it generates Express code for their Next.js app. Check the official Rules directory for examples, and the best practices guide.

Rules that don't suck (learned the hard way):

## Authentication Rules

- We use bcrypt with 12 rounds (tried 10, got hacked)

- JWT expires after 24 hours because users complained about shorter sessions

- Password reset tokens die after 15 minutes (legal department requirement)

- Rate limit to 5 auth attempts per minute or script kiddies will hammer us

## Code Style (stuff that broke in production)

- TypeScript strict mode always - `any` broke prod twice

- async/await everywhere, promise chains are unreadable garbage

- All DB queries through UserService or you'll have SQL injection somewhere

- Error messages need to be user-friendly AND logged for debugging

Start with a plan, not code: Tell Cascade what you're building and let it figure out the approach. It's surprisingly good at architecture if you don't jump straight to implementation.
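Rules like "5 auth attempts per minute" are easier to review when you know roughly what you expect Cascade to produce. Here's a minimal sketch of that rule as a fixed-window limiter. `AuthRateLimiter` and its method names are made up for illustration, the state lives in memory, and a real Express app would more likely use shared storage and existing middleware.

```typescript
// Fixed-window limiter: at most `limit` attempts per key in each window.
// AuthRateLimiter is a hypothetical helper, not a Windsurf or Express API.
class AuthRateLimiter {
  private readonly windows = new Map<string, { start: number; count: number }>();
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly now: () => number;

  constructor(limit = 5, windowMs = 60 * 1000, now: () => number = () => Date.now()) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.now = now; // injectable clock so the behaviour can be tested
  }

  // Records one attempt for `key` (IP, email, ...) and says whether it is allowed.
  tryAttempt(key: string): boolean {
    const t = this.now();
    const current = this.windows.get(key);
    if (current === undefined || t - current.start >= this.windowMs) {
      this.windows.set(key, { start: t, count: 1 }); // open a fresh window
      return true;
    }
    if (current.count >= this.limit) {
      return false; // over the limit until the window rolls over
    }
    current.count += 1;
    return true;
  }
}
```

A fixed window is the simplest option, not the only one. If bursts at window boundaries matter for your app, ask Cascade for a sliding window or token bucket instead.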

Actually Talk to Cascade Like a Human

Stop giving it orders like it's a code monkey. I see people typing "write auth middleware" and then complaining when it generates generic garbage.

Here's what actually works:

Me: "I need to add user auth to this Express app. Looking at what we have, I'm thinking email/password login with JWT sessions. We also need password reset because users are forgetful idiots.

Take a look at our codebase - we're already using PostgreSQL and have a User table. What approach would you suggest?"

Cascade: [Actually analyzes your code, suggests specific patterns that fit your project]

Me: "That makes sense, but what about security? I don't want to get pwned again."

Cascade: [Suggests CSRF protection, input validation, rate limiting based on what you're actually building]

Me: "Alright, let's build this thing. Start with the User model changes we need."

The trick: Let Cascade see the big picture first. It's way better at architecture than you'd expect, but only if you don't jump straight to "write me a function."

Build It in Pieces, Not One Giant Blob

Don't ask for everything at once. I tried that. Cascade generated 400 lines of code that looked perfect and worked for exactly zero of my use cases.

Instead, build it piece by piece (based on meta-cognitive workflow patterns that actually prevent context loss):

Get something working

- "Add email/password fields to the User model"

- "Create basic login/register routes that don't crash"

- "Make a simple auth middleware that checks tokens"

Then make it not terrible

- "Add input validation so people can't inject SQL"

- "Implement rate limiting before we get DDoS'd"

- "Add CSRF protection because the security team will audit us"

Handle all the weird edge cases

- "What happens when the email service is down? (it will be)"

- "Add error handling for expired/invalid tokens"

- "Lock accounts after 10 failed attempts"

The key is each step works on its own. You're not debugging 400 lines of AI-generated spaghetti - you're fixing one small thing at a time.
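To give a feel for how small one of these pieces can be, here's a rough sketch of the "lock accounts after 10 failed attempts" step on its own. `LoginAttemptTracker` is a hypothetical name, and the in-memory map is an assumption to keep the example short. In a real app the counts would live in your database.

```typescript
// Hypothetical in-memory lockout tracker; a real app would persist the counts.
const MAX_FAILED_ATTEMPTS = 10;

class LoginAttemptTracker {
  private readonly failures = new Map<string, number>();

  // Call after every rejected login for this email.
  recordFailure(email: string): void {
    this.failures.set(email, (this.failures.get(email) ?? 0) + 1);
  }

  // A successful login clears the counter.
  recordSuccess(email: string): void {
    this.failures.delete(email);
  }

  // Check this before even comparing passwords.
  isLocked(email: string): boolean {
    return (this.failures.get(email) ?? 0) >= MAX_FAILED_ATTEMPTS;
  }
}
```

Because it depends on nothing else, you can review and test it in a minute, then ask Cascade to wire it into the login route as the next step.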

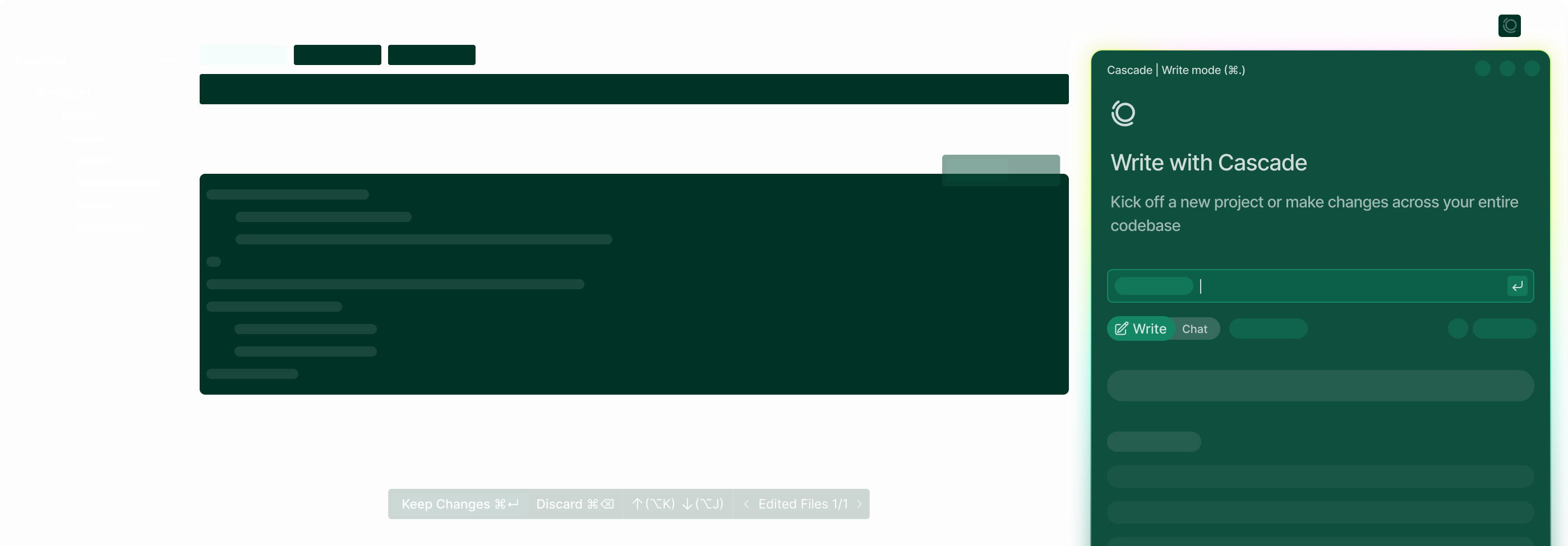

Chat Mode vs Write Mode (And When Each One Doesn't Suck)

Use Chat Mode when:

- You're trying to understand what the hell this legacy code does

- Planning something complex (Cascade is surprisingly good at architecture)

- Debugging weird issues that don't make sense

- Learning how your codebase actually works

Use Write Mode when:

- You know what to build and just need it implemented

- Making changes across multiple files (it's actually decent at this)

- Refactoring without breaking everything

- Adding features that touch several components

The pattern: Chat first (figure out what to do), Write Mode second (actually do it), Chat again when it inevitably breaks, Write Mode to fix it. This approach is covered in the Cascade documentation and developer tips guide.

Reality check: Write Mode sometimes generates code that doesn't match what you discussed in Chat Mode. It's like Cascade has multiple personalities. When this happens, go back to Chat Mode and be more specific about what you want.

Rules and Memory: The Stuff Nobody Sets Up (But Should)

Most people skip Windsurf's memory system entirely, then wonder why Cascade keeps suggesting React code for their Vue project. You need to actually tell it how your project works. Check the memory system guide if you want to do it properly.

Global Rules (The Stuff That Applies to Everything)

Set this up at ~/.codeium/windsurf/memories/global_rules.md. These are your "don't be an idiot" rules that apply to every project. Check practical rules examples and SaaS-specific patterns:

## Code Quality (Things That Bit Me Before)

- Always handle errors or your app will crash in production

- TypeScript everything - `any` types are banned after what happened last month

- Use real variable names, not single letters (debug hell otherwise)

- JSDoc comments for public methods or you'll forget what they do

- Write tests or pray nothing breaks

## Architecture (Lessons Learned)

- Dependency injection everywhere - hard coupling is a nightmare to test

- All database access through repository pattern (SQL injection is real)

- API responses: {success, data, error} format - consistency matters

- Environment variables for all config - hardcoded values will bite you

## Security (Because We Got Pwned Once)

- Validate all inputs - trust nothing from users

- Parameterized queries only - Bobby Tables is still out there

- Log auth failures and weird stuff for forensics

- Never log passwords, tokens, or PII (compliance will audit this)
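A rule like the `{success, data, error}` format works better when there's a type behind it that Cascade can reuse. One possible shape, as a sketch: the names `ApiResponse`, `okResponse`, `errorResponse`, and `summarize` are my own for illustration, not something Windsurf generates or requires.

```typescript
// One possible typing of the {success, data, error} envelope.
type ApiResponse<T> =
  | { success: true; data: T; error: null }
  | { success: false; data: null; error: string };

function okResponse<T>(data: T): ApiResponse<T> {
  return { success: true, data, error: null };
}

// ApiResponse<never> is assignable to any ApiResponse<T>.
function errorResponse(error: string): ApiResponse<never> {
  return { success: false, data: null, error };
}

// Checking `success` narrows the union, so `data` is only readable on success.
function summarize(res: ApiResponse<{ id: number }>): string {
  return res.success ? `user ${res.data.id}` : `error: ${res.error}`;
}
```

The discriminated union means TypeScript strict mode will complain if a handler reads `data` without checking `success` first, which is exactly the kind of consistency the rule is asking for.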

Project Rules (Tell Cascade How This Specific Project Works)

Put this in .windsurfrules.md in your project root. This is where you explain your weird project-specific stuff. See AI coding rules examples and the Windsurf AI prompting guide:

## This Project's Weird Specifics

This is a Node.js/Express API with PostgreSQL database (not MongoDB, stop suggesting Mongoose)

### Database (Specific to Our Setup)

- Use UserService for all user operations - it handles the connection pooling weirdness

- DATABASE_URL is in .env - don't hardcode localhost

- Always run migrations before adding fields or you'll break staging

- Transactions for anything touching multiple tables (learned this the hard way)

### Authentication (How We Actually Do It)

- JWT_SECRET is in .env - don't generate a new one

- Sessions expire after 24 hours because users complained about shorter ones

- Refresh tokens in the database with user_id FK - session store was unreliable

- Password resets: crypto.randomBytes(32) - UUID4 caused collisions somehow

### Testing (Our Messy Setup)

- Unit tests in __tests__ because that's how we started

- Integration tests in tests/integration/ - different pattern, I know

- supertest for API testing - request was deprecated

- Mock all external APIs or tests will fail randomly
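For reference, this is roughly what the password reset rule should turn into. It's a sketch under a few assumptions: the 15-minute expiry comes from the earlier auth rules, the function names are hypothetical, and the random source is passed in as a parameter so the logic can be tested. In Node.js you would pass `(n) => crypto.randomBytes(n)`, since a Buffer is a Uint8Array.

```typescript
// Sketch: 32 random bytes, hex-encoded, valid for 15 minutes.
// RandomBytes is injected; in Node.js pass (n) => crypto.randomBytes(n).
type RandomBytes = (size: number) => Uint8Array;

const RESET_TOKEN_BYTES = 32;
const RESET_TOKEN_TTL_MS = 15 * 60 * 1000;

interface ResetToken {
  token: string; // 64 hex characters
  expiresAt: number; // epoch milliseconds
}

function toHex(bytes: Uint8Array): string {
  return Array.from(bytes, (b) => b.toString(16).padStart(2, "0")).join("");
}

function createResetToken(randomBytes: RandomBytes, now: number): ResetToken {
  return {
    token: toHex(randomBytes(RESET_TOKEN_BYTES)),
    expiresAt: now + RESET_TOKEN_TTL_MS,
  };
}

// A token is dead the moment the clock reaches expiresAt.
function isResetTokenExpired(token: ResetToken, now: number): boolean {
  return now >= token.expiresAt;
}
```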

Workflow Files (.windsurf/workflows/)

The workflow system automates repetitive tasks. Here's a deploy workflow that actually works:

## Deploy to Staging

1. Run all tests to ensure code quality

```bash
npm test
```

2. Check for security vulnerabilities

```bash
npm audit --audit-level=moderate
```

3. Build the production bundle

```bash
npm run build
```

4. Deploy to staging environment

```bash
git push staging main
```

5. Run post-deployment health checks

```bash
curl $STAGING_API_URL/health
```

6. Create deployment log entry with timestamp and commit hash

**Invoke with**: `/deploy-staging` in Cascade

### Handling Complex Features: The Multi-Session Approach

For big features, don't try to do everything in one Cascade session. [Context limits](https://docs.windsurf.com/windsurf/cascade/cascade) are real.

**Session 1: Architecture and Planning**

- Define the feature scope and requirements

- Plan the database changes needed

- Identify which existing code needs modification

- Create the high-level implementation strategy

**Session 2: Core Implementation**

- Implement the main feature logic

- Add database migrations if needed

- Create the basic API endpoints

- Write the core business logic

**Session 3: Integration and Polish**

- Connect frontend to new API endpoints

- Add comprehensive error handling

- Write tests for the new functionality

- Update documentation

**Session 4: Security and Performance**

- Add security validations

- Implement rate limiting if needed

- Optimize database queries

- Add monitoring and logging

Each session starts with a brief recap: "We're implementing user authentication. In the previous session, we created the User model and basic auth middleware. Today we're adding password reset functionality."

### When Your Code Breaks (And It Will)

Production is down, users are complaining, and you have no idea what went wrong. Here's how to debug with Cascade without making it worse:

1. **Don't panic, paste the error**

```

"Everything was working fine, then I deployed the password reset feature and now I'm getting:

TypeError: Cannot read property 'id' of undefined

at /auth/reset-password line 42

What the hell happened?"

```

2. **Let Cascade connect the dots**

```

"Look at the password reset handler and anywhere that accesses user.id. I didn't touch any existing user code, so why is this breaking now?"

```

3. **Get a debugging plan, not random guesses**

```

"Give me a systematic way to figure out where the user object is becoming undefined. What should I check first?"

```

4. **Fix it step by step**

```

"The user is undefined because the token lookup is failing. Fix the password reset handler to handle this case gracefully."

```

**The trick**: Don't just ask "how do I fix this error." Give Cascade the context of what you changed and let it figure out why things broke.
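For the example above, the "handle this case gracefully" fix usually comes down to a guard like the one below. This is only a sketch: `KnownUser`, `resolveResetRequest`, and the lookup callback are hypothetical stand-ins for whatever your handler and UserService actually look like.

```typescript
// Hypothetical shapes; your User model and token lookup will differ.
interface KnownUser {
  id: number;
  email: string;
}

type ResetResult =
  | { ok: true; userId: number }
  | { ok: false; status: number; message: string };

function resolveResetRequest(
  token: string,
  findUserByToken: (token: string) => KnownUser | undefined,
): ResetResult {
  const user = findUserByToken(token);
  // The original crash: reading user.id when the lookup returned undefined.
  if (user === undefined) {
    return { ok: false, status: 400, message: "Reset link is invalid or has expired." };
  }
  return { ok: true, userId: user.id };
}
```

With the lookup typed as `KnownUser | undefined`, strict mode refuses to compile `user.id` without the check, so this class of bug gets caught before deploy.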

### Working with Existing Codebases

Most tutorials assume you're starting from scratch. In reality, you're adding features to existing code that someone else wrote 6 months ago and left no fucking documentation.

**How to not break existing shit:**

1. **Let Cascade figure out what you're dealing with**

```

"I need to understand this codebase before I break everything. Can you look around and tell me:

- What framework are we using and how is it structured?

- How does auth work currently?

- What's our database setup?

- Any obvious problems I should avoid making worse?"

```

2. **Ask how to fit in, don't force your patterns**

```

"I need to add user roles. Looking at how the User model and auth middleware work, what's the least disruptive way to add this?"

```

3. **Get a plan that won't piss off your teammates**

```

"Show me exactly what files I need to touch and what I need to create. I don't want to refactor half the codebase for this feature."

```

4. **Start with the smallest possible change**

```

"Let's just add the roles field to the User model first, exactly like the other fields are done here."

```

**The key**: Make Cascade document the existing patterns before you start. Otherwise you'll spend weeks in code review hell.
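As an illustration of "smallest possible change", a roles field can start out as an optional property plus one helper. Everything here is assumed for the sake of the sketch: the `UserRecord` shape, the role names, and the viewer default. Your real model and conventions should win.

```typescript
// Hypothetical User shape; copy the conventions of your real model instead.
type Role = "admin" | "editor" | "viewer";

interface UserRecord {
  id: number;
  email: string;
  // Optional, so existing rows and code paths keep working untouched.
  roles?: Role[];
}

// Users created before the change are treated as plain viewers (an assumption).
function rolesOf(user: UserRecord): Role[] {
  return user.roles ?? ["viewer"];
}

function hasRole(user: UserRecord, role: Role): boolean {
  return rolesOf(user).includes(role);
}
```

Nothing that already reads `UserRecord` has to change, which is what keeps the PR small.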

### Team Workflow (Or: How Not to Drive Your Colleagues Insane)

Using Windsurf solo is easy. Getting a whole team to use it without chaos? That's harder.

#### Share Your Rules or Everyone Will Have Different Patterns

Put your `.windsurfrules.md` and workflow files in git. When someone figures out the right way to handle auth tokens, everyone's Cascade should know about it. Otherwise you'll have five different auth patterns in the same codebase.

#### Share Your Debugging Wins

Use the [conversation sharing](https://docs.windsurf.com/windsurf/cascade/memories) feature when you figure out something tricky:

"Spent 3 hours debugging why payments were failing in production. Turned out to be a timezone issue with the Stripe webhook. Sharing this so nobody else has to go through this hell."

#### Code Review (Before Your Teammates Roast You)

Before you submit that PR and pray nobody notices the hacky bits:

"Look at my recent changes and tell me what's going to get flagged in code review:

- Any obvious bugs or security issues?

- Performance problems that will bite us?

- Does this follow our project patterns or am I being weird?"

Fix whatever it finds, then:

"Create tests for this user role stuff so QA doesn't find bugs I missed"

And finally:

"Update the API docs so the frontend team stops asking me how this works"

#### Onboarding New Team Members

Create an onboarding workflow:

```markdown
## Team Onboarding

1. Set up development environment following our standards
2. Clone repository and install dependencies
3. Copy shared rules files to Windsurf memories directory
4. Review existing codebase architecture with Cascade
5. Complete starter task: "Add a simple health check endpoint"
6. Get code review from team lead
```

New developers can use Cascade to understand the codebase faster instead of bugging senior developers with basic questions.

Performance and Resource Management

A Windsurf workflow isn't just about features - it's about sustainable productivity.

Managing Context and Memory

- Restart Cascade sessions every 30-40 interactions to prevent context degradation

- Save important insights as memories before restarting

- Use specific file mentions (@filename) to keep context focused

- Close unused projects to prevent Windsurf from indexing every damn thing on your machine

Optimizing for Long Development Sessions

The memory leak issues are real, but manageable:

- Monitor RAM usage and restart Windsurf when it hits 3GB+ (it will)

- Use `.codeiumignore` aggressively to prevent indexing build artifacts

- Work on one feature at a time instead of jumping between projects like a maniac

- Take breaks - both you and Windsurf work better with periodic resets

Integration with Traditional Tools

Windsurf doesn't replace everything:

- Keep a terminal open for git operations and debugging

- Use browser dev tools for frontend debugging

- Keep documentation handy for API references Cascade doesn't know

- Have a backup editor ready for when Windsurf needs to restart

Advanced Workflow Patterns

Documentation First (When You Actually Want Good Documentation)

Instead of building the feature then scrambling to document it later:

"I want to add user notifications - email alerts, preferences, history, mark as read, the whole thing. Help me write a proper spec for this before I start coding."

Then:

"Turn that spec into OpenAPI docs so the frontend team knows what to expect"

Finally:

"Now build the notification system exactly like the docs say it should work"

This approach actually works because you're forced to think through the feature completely before you write a single line of code.

Testing First (When You're Tired of Debugging in Production)

"Write tests for the notification feature I'm about to build - they should all fail since nothing exists yet"

Then implement just enough to make the tests pass. It's slower upfront but prevents the "works on my machine" disasters.
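Here's a tiny sketch of what that looks like for one slice of the notification feature, "mark as read". The `AppNotification` shape and function names are hypothetical, and the test is a plain function rather than a real test runner so the example stays self-contained.

```typescript
// Hypothetical notification shape and function names, for illustration only.
interface AppNotification {
  id: number;
  message: string;
  readAt: number | null; // epoch milliseconds, null while unread
}

type MarkAsRead = (n: AppNotification, now: number) => AppNotification;

// Step 1: written first. It returns a list of failures for any implementation.
function testMarkAsRead(markAsRead: MarkAsRead): string[] {
  const failures: string[] = [];
  const unread: AppNotification = { id: 1, message: "Welcome", readAt: null };
  const read = markAsRead(unread, 5000);
  if (read.readAt !== 5000) failures.push("readAt should be set to the current time");
  if (unread.readAt !== null) failures.push("the input must not be mutated");
  if (markAsRead(read, 9000).readAt !== 5000) failures.push("already-read stays unchanged");
  return failures;
}

// Step 2: just enough implementation to make the test pass.
function markAsRead(n: AppNotification, now: number): AppNotification {
  return n.readAt === null ? { ...n, readAt: now } : n;
}
```

Run the test against a do-nothing stub first to confirm it actually fails, then against the real function. If it passes before you've written anything, the test is the bug.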

Refactoring Without Breaking Everything

"This auth code is a mess. Show me what needs to be refactored and give me a plan that won't break production"

Then do it piece by piece, running tests after every change. Less exciting than a big rewrite, but you actually ship it instead of spending months on a branch that never gets merged.

The Bottom Line: Workflow Over Features

Windsurf has impressive features, but features don't ship products - workflow does.

The developers who get the most out of Windsurf aren't the ones who know every command or have the perfect setup. They're the ones who figured out how to work WITH the AI instead of just using it to generate code they don't understand.

The workflow mindset shift:

- From "generate code" to "collaborative development"

- From "one big request" to "iterative refinement"

- From "fix my bug" to "help me understand the problem"

- From "write a function" to "let's plan this feature together"

This isn't about being dependent on AI - it's about being more effective by using AI as a development partner that actually understands your codebase, your patterns, and your goals.

Master this workflow, and Windsurf becomes the difference between shipping features and shipping features that don't suck.