Rolling out AI coding tools to hundreds of developers isn't like installing Slack. It's more like convincing a room full of cats to use the same litter box - theoretically possible, but prepare for some serious pushback. Enterprise AI deployment challenges are well-documented across the industry.

The Three Deployment Stages (And Where They Usually Fail)

Stage 1: The Honeymoon (Weeks 1-4)

Everything looks great in demos. Procurement approves the budget. IT security grudgingly signs off after you jump through 47 different compliance hoops. You think you're golden.

Then reality hits. Hard.

Stage 2: The Reckoning (Months 2-6)

- Your firewall blocks half the API calls because Windsurf "forgot" to mention `*.codeiumdata.com` in their official setup docs. Took us most of a week to figure that out while developers were losing their shit.

- Rate limiting kicks in during crunch time because they share infrastructure with every other enterprise customer. Nothing like having AI fail when you need it most.

- Senior developers revolt because "AI can't understand our legacy codebase" (they're not wrong - it choked on our 15-year-old Java monolith)

- Junior developers love it, but they produce code that passes tests and then breaks spectacularly in production. One AI-generated database query took down our entire reporting system - lasted maybe 6 hours? Could've been longer, I was too busy fixing shit to check the clock.

- Your security team discovers that "zero data retention" doesn't mean your code isn't flying around the internet. Cue emergency CISO meeting and 6 weeks of legal review.

Stage 3: Acceptance or Abandonment (Months 6-12)

Either you work through the problems and get 10-20% of developers actively using it, or it becomes shelfware that accounting asks about every quarter.

Enterprise AI tool deployment involves multiple security layers and network configurations - most of which will break.

What Actually Breaks During Deployment

Network Infrastructure Pain Points

The documentation lists some domains, but here's what you actually need to whitelist:

- `*.codeium.com` and `*.windsurf.com` (they recommend these)

- `server.codeium.com` (most API requests)

- `web-backend.codeium.com` (dashboard requests)

- `inference.codeium.com` (inference requests)

- `unleash.codeium.com` (feature flags)

- `codeiumdata.com` and `*.codeiumdata.com` (downloads and language servers)

- Plus whatever CDN endpoints change without notice
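Wildcards are where allowlists quietly go wrong. In some proxy and firewall products, `*.codeiumdata.com` covers subdomains but not the bare `codeiumdata.com`, which is presumably why both show up in the list. Here's a minimal offline checker, assuming one specific rule (a `*.` prefix matches one or more subdomain labels, never the apex). Your proxy may match differently, so treat that rule as an assumption and check its docs. The hostnames come from the list; the function names are made up for illustration.

```python
def host_matches(host: str, pattern: str) -> bool:
    """Return True if `host` is covered by a single allowlist `pattern`.

    Assumed semantics: "*.example.com" matches any subdomain of example.com
    (one or more labels) but NOT the bare apex "example.com".
    Real proxies differ; verify against your proxy's documentation.
    """
    host = host.lower().rstrip(".")
    pattern = pattern.lower().rstrip(".")
    if pattern.startswith("*."):
        suffix = pattern[1:]  # e.g. ".codeiumdata.com"
        return host.endswith(suffix) and len(host) > len(suffix)
    return host == pattern


def uncovered_hosts(hosts: list[str], allowlist: list[str]) -> list[str]:
    """Return the hosts that no allowlist entry covers."""
    return [h for h in hosts if not any(host_matches(h, p) for p in allowlist)]


# Concrete hosts from the list (wildcard entries can't be checked directly).
REQUIRED_HOSTS = [
    "server.codeium.com",
    "web-backend.codeium.com",
    "inference.codeium.com",
    "unleash.codeium.com",
    "codeiumdata.com",
]

if __name__ == "__main__":
    # An allowlist that "looks complete" but forgets the bare apex domain.
    draft_allowlist = ["*.codeium.com", "*.windsurf.com", "*.codeiumdata.com"]
    print(uncovered_hosts(REQUIRED_HOSTS, draft_allowlist))  # ['codeiumdata.com']
```

Run it against whatever your network team actually deployed, not what they say they deployed.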

Your VPN will randomly break API calls - specifically, it'll work fine for 2 weeks, then fail during the demo to executives. Your corporate proxy will mangle requests in creative ways that take hours to debug. And when it fails, the error messages are useless: "Network error occurred." Thanks, very fucking helpful.

Pro tip: Keep a debug log of every network failure. You'll need it when the network team insists "everything is working fine" while 50 developers can't get autocomplete to work.
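A debug log doesn't need to be fancy. Below is a rough sketch, assuming you can run a script on a developer machine behind the same VPN and proxy. It tries a plain TCP connection to port 443 and appends each failure as one JSON line with a UTC timestamp. A successful connect only proves the host is reachable. It does not prove your proxy passes HTTPS requests through intact. The function names and the JSON Lines format are our own choices, not anything Windsurf ships.

```python
import json
import socket
from collections import Counter
from datetime import datetime, timezone
from typing import Iterable, Optional


def probe(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Open a plain TCP connection; return None on success, else the error text."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return None
    except OSError as exc:  # DNS failures, refusals and timeouts are all OSError
        return f"{type(exc).__name__}: {exc}"


def make_record(host: str, error: str) -> str:
    """Serialize one failure as a JSON line with a UTC timestamp."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "host": host,
        "error": error,
    })


def log_failure(path: str, host: str, error: str) -> None:
    """Append one failure record to a JSON Lines file."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(make_record(host, error) + "\n")


def failures_by_host(lines: Iterable[str]) -> Counter:
    """Count failures per host from JSON lines (an open log file works too)."""
    return Counter(json.loads(line)["host"] for line in lines if line.strip())


def check_hosts(hosts: Iterable[str], log_path: str) -> None:
    """Probe each host once and log any failure."""
    for host in hosts:
        error = probe(host)
        if error is not None:
            log_failure(log_path, host, error)
```

Call `check_hosts` from cron every few minutes with the hosts from the whitelist. When the network team says everything is fine, open the log file and hand them `failures_by_host(f).most_common()`.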

SSO Integration Hell

"Seamless authentication" my ass. Here's what actually happens:

- Initial SAML setup takes 2-3 weeks because enterprise SSO is never straightforward

- Token expiration behavior is inconsistent between VS Code and the Windsurf editor

- Some developers get stuck in auth loops that require clearing browser cache

- The $10/user/month SSO add-on for the Teams plan isn't negotiable (Enterprise includes it)

The analytics dashboard shows credit usage, but doesn't warn you about the cliff coming at the end of the month.
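You can build the warning yourself. Here's a minimal sketch, assuming you can get per-user credit usage out of the dashboard somehow (exporting it isn't covered here). It uses a straight linear projection: if a user has burned `used` credits in `days_elapsed` days, the monthly allowance runs out on day `allowance * days_elapsed / used`. That ignores weekends and crunch weeks, so read it as an early-warning signal, not a forecast. The function names are hypothetical.

```python
from typing import Optional


def projected_exhaustion_day(allowance: float, used: float, days_elapsed: float) -> Optional[float]:
    """Day of the billing month on which the allowance runs out at the current pace.

    Linear projection: rate = used / days_elapsed, so the allowance lasts
    allowance / rate = allowance * days_elapsed / used days in total.
    Returns None if nothing has been used yet (no rate to project from).
    """
    if days_elapsed <= 0:
        raise ValueError("days_elapsed must be positive")
    if used <= 0:
        return None
    return allowance * days_elapsed / used


def hits_cliff(allowance: float, used: float, days_elapsed: float, days_in_month: int) -> bool:
    """True if, at the current pace, credits run out before the month ends."""
    day = projected_exhaustion_day(allowance, used, days_elapsed)
    return day is not None and day < days_in_month


if __name__ == "__main__":
    # Hypothetical user: 500 of 1,000 credits gone after 7 days of a 30-day month.
    print(projected_exhaustion_day(1000, 500, 7))  # 14.0
    print(hits_cliff(1000, 500, 7, 30))            # True
```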

The Credit System Nobody Explains Properly

The Enterprise plan gives you 1,000 credits per user monthly. Sounds generous until you learn:

- Simple autocomplete: 1 credit (via the new Windsurf Tab system)

- Code generation with Cascade: 1 credit per prompt, plus tool calls

- SWE-1 model usage: Currently promotional (0 credits) but won't stay that way

- GPT-5 High reasoning: 2x credits when promotion ends

- One developer doing heavy AI-assisted development burns through 1,000 credits in two weeks
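To see how a heavy user gets there, here's a rough tally built from the per-action figures in the list. The list doesn't say what a tool call costs, so `credits_per_tool_call` is a hypothetical parameter (defaulting to 1). The 2x GPT-5 High multiplier assumes the post-promotion rate applies per prompt. The usage numbers in the example are invented to illustrate the arithmetic.

```python
from dataclasses import dataclass

# Per-prompt multipliers taken from the list. SWE-1 is 0 only while the
# promotion lasts; GPT-5 High at 2x is the assumed post-promotion rate.
MODEL_MULTIPLIER = {"default": 1.0, "swe-1": 0.0, "gpt-5-high": 2.0}


@dataclass
class Request:
    kind: str               # "autocomplete" or "cascade"
    model: str = "default"  # key into MODEL_MULTIPLIER
    tool_calls: int = 0     # tool calls the Cascade prompt triggered


def credits_for(req: Request, credits_per_tool_call: float = 1.0) -> float:
    """Estimate credits for one request under the assumptions above."""
    if req.kind == "autocomplete":
        return 1.0
    if req.kind == "cascade":
        prompt_cost = 1.0 * MODEL_MULTIPLIER.get(req.model, 1.0)
        return prompt_cost + req.tool_calls * credits_per_tool_call
    raise ValueError(f"unknown request kind: {req.kind!r}")


def daily_total(requests: list[Request], credits_per_tool_call: float = 1.0) -> float:
    """Sum estimated credits over one day's requests."""
    return sum(credits_for(r, credits_per_tool_call) for r in requests)


if __name__ == "__main__":
    # Hypothetical heavy day: 30 autocompletes plus 10 Cascade prompts
    # on GPT-5 High, each triggering 2 tool calls.
    day = [Request("autocomplete")] * 30 + [Request("cascade", "gpt-5-high", 2)] * 10
    per_day = daily_total(day)       # 30 + 10 * (2 + 2) = 70.0
    print(per_day, 1000 / per_day)   # ~14 days to burn 1,000 credits
```

Seventy credits a day doesn't feel like much in the moment, which is exactly why nobody notices until the allowance is gone in two weeks.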

Developer Adoption: It's Not What You Think

Forget the productivity metrics. Here's how adoption actually plays out:

How Developers Actually Use This Thing

Most developers try it once, maybe fuck around with it for a few hours, then forget it exists. Some will use it when they need to generate boring CRUD operations or tests. A handful become obsessed with it and won't shut up about how amazing AI coding is.

The obsessed ones drive most of whatever productivity gains you'll measure. Everyone else just contributes to your adoption statistics while doing the absolute minimum to keep managers happy.
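If you want to check this pattern against your own numbers, two ratios are enough: what share of total usage comes from the top slice of users, and what share of licensed users clear some activity bar. This is a minimal sketch that assumes a mapping of user to monthly usage (credits, accepted suggestions, whatever your analytics exports). The 10% cutoff and the activity threshold are arbitrary choices, and users who never touched the tool need to be in the mapping with 0 or the active share will look better than it is.

```python
def top_share(usage: dict[str, float], top_percent: int = 10) -> float:
    """Fraction of total usage that comes from the top `top_percent`% of users.

    Always counts at least one user, so small teams still get a number.
    """
    total = sum(usage.values())
    if total <= 0:
        return 0.0
    ranked = sorted(usage.values(), reverse=True)
    k = max(1, (len(ranked) * top_percent + 99) // 100)  # integer ceiling
    return sum(ranked[:k]) / total


def active_share(usage: dict[str, float], threshold: float) -> float:
    """Fraction of licensed users whose usage meets a (hypothetical) activity threshold."""
    if not usage:
        return 0.0
    return sum(1 for v in usage.values() if v >= threshold) / len(usage)


if __name__ == "__main__":
    # Hypothetical month: one enthusiast, nine occasional users.
    usage = {"power_user": 900.0, **{f"user{i}": 10.0 for i in range(9)}}
    print(f"top 10% of users: {top_share(usage):.0%} of usage")
    print(f"active (>=100 credits): {active_share(usage, 100):.0%}")
```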

What Developers Actually Complain About

- "It doesn't understand our domain-specific code patterns"

- "The suggestions are wrong more often than helpful"

- "It generates code that works but violates our style guide"

- "I spend more time reviewing AI suggestions than writing code myself"

- "It's just Stack Overflow with extra steps"

What Actually Drives Adoption

- Works reliably for tedious tasks (writing tests, generating boilerplate)

- Doesn't break existing developer workflows

- Handles your specific tech stack without constant configuration

- Provides value immediately, not after a learning curve

The developers who stick with it are usually the ones dealing with legacy systems or writing repetitive code. The ones working on novel problems or highly optimized systems find it less useful.

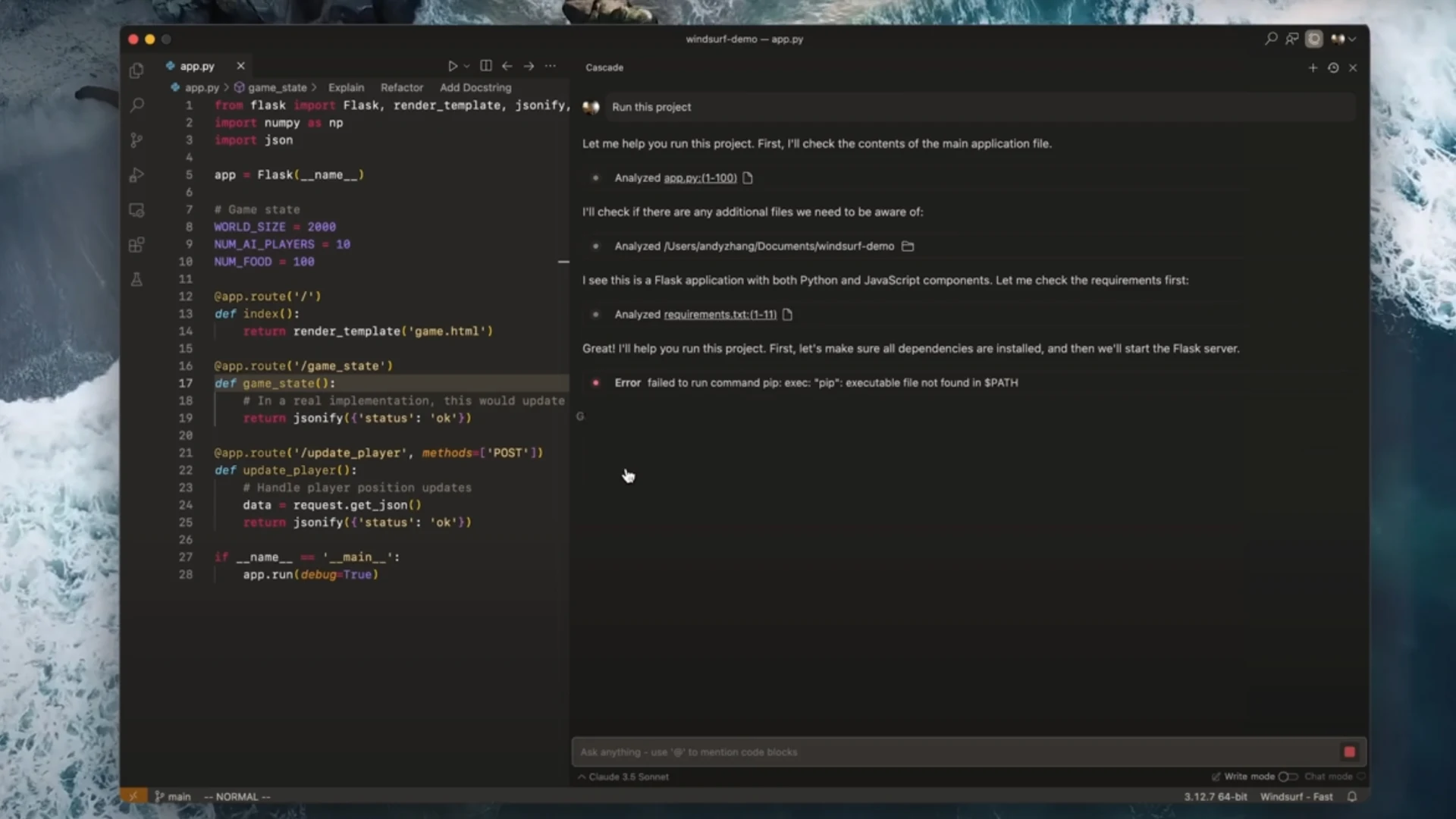

The dashboard makes everything look smooth. Real usage is messier.

Fast Company's take on Windsurf Cascade - the reality involves more debugging and less magic.

Security: What They Don't Tell You Upfront

"Zero data retention" sounds great until you realize it only applies to long-term storage. Your code still transits through their servers, gets processed by third-party LLM providers, and exists in memory/logs for undefined periods.

For most companies, this is fine. For regulated industries or security-paranoid organizations, it's a non-starter, and that usually surfaces only months into the evaluation.

The on-premises deployment option requires 200+ seats and costs significantly more than advertised. One client's quote came back at 3x the listed enterprise pricing once implementation costs were included.

But let's be specific about what this actually costs you. Time to break down the real numbers.