Distributed databases give you more attack surface than regular PostgreSQL, so you need more layers of security. CockroachDB tries to make this less painful than rolling your own security, but you still need to understand what's happening when things break.

Network Encryption: At Least This Works

Everything uses TLS 1.3 by default, which is good because you don't want someone sniffing your database traffic:

- Node-to-node: All the replication and consensus chatter between nodes is encrypted.

- Client connections: Your app connections use the PostgreSQL wire protocol over TLS.

- Web UI: The admin console is HTTPS only.

Certificate hell: Certificate rotation in distributed systems is painful. CockroachDB can reload certs without restarting, but expired certificates can take down entire clusters at 3am when someone forgets to update the rotation job. Set up monitoring for cert expiry dates or you'll learn this the hard way.
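As a rough sketch of what that expiry monitoring could check, the snippet below classifies a certificate by how many days remain before it expires. It assumes you already extract the `notAfter` string yourself (for example with `openssl x509 -enddate -noout`); the function names and the 30-day threshold are illustrative and not part of CockroachDB.

```python
import ssl
from datetime import datetime, timezone

WARN_DAYS = 30  # hypothetical alert threshold; tune it to your rotation schedule


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a certificate's notAfter timestamp.

    `not_after` uses the OpenSSL text format, e.g. "Sep 17 12:00:00 2025 GMT".
    `now` must be a timezone-aware datetime.
    """
    expiry_ts = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    return (expiry_ts - now.timestamp()) / 86400


def expiry_status(not_after: str, now: datetime, warn_days: int = WARN_DAYS) -> str:
    """Classify a certificate as "expired", "warning", or "ok"."""
    days = days_until_expiry(not_after, now)
    if days < 0:
        return "expired"
    if days < warn_days:
        return "warning"
    return "ok"
```

Run something like this from a scheduled job against every node, client, and CA certificate, calling `expiry_status(not_after, datetime.now(timezone.utc))` and paging on anything other than "ok".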

Encryption at Rest: Multiple Layers of Protection

CockroachDB implements encryption at rest through several mechanisms:

Infrastructure-Level Encryption

Cloud deployments automatically benefit from provider-managed encryption:

- AWS: EBS volumes encrypted with AWS KMS keys

- Google Cloud: Persistent disks encrypted with Google-managed keys

- Azure: Managed disk encryption with Azure Key Vault

This provides baseline protection against physical disk theft, but you're trusting the cloud provider's key management.

CockroachDB Enterprise Encryption at Rest

The Enterprise Encryption at Rest feature adds an additional layer using AES-256 encryption. This encrypts data before it hits the storage layer, ensuring that even cloud provider employees with disk access can't read your data.

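Key management: Where the keys live depends on the deployment. In CockroachDB Cloud, customer-managed encryption keys (CMEK) let you control the keys through an external KMS such as AWS KMS or Google Cloud KMS. On self-hosted clusters, the store keys are files that you generate and pass to each node, so protecting and rotating those files is your responsibility. The KMS integration guide covers setup procedures.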

Performance impact: Encryption at rest adds minimal overhead - typically 5-10% performance reduction. The bigger impact is key management complexity, not computational cost. Review the encryption performance analysis for detailed metrics.

Backup Encryption

Backups can be encrypted using KMS providers:

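```sql
-- Encrypt the backup with a key held in AWS KMS.
-- AUTH=implicit assumes the nodes get AWS credentials from their environment.
BACKUP DATABASE company_data INTO 's3://backups/2025-09-17?AUTH=implicit'
    WITH kms = 'aws:///arn:aws:kms:us-east-1:123456789012:key/12345678-1234-1234-1234-123456789012?AUTH=implicit&REGION=us-east-1';
```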

Critical note: Backups taken without the KMS option are NOT encrypted even if you have Encryption at Rest enabled. This caught us by surprise during a compliance audit - make sure your backup procedures explicitly include encryption.
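One way to catch this before an auditor does is to lint the backup statements in your runbooks or scheduled jobs. The sketch below is a deliberately simple text check, assuming statements are available as plain strings; it looks for the `kms` or `encryption_passphrase` options and flags any `BACKUP` that has neither. The function names are hypothetical, and a regex can be fooled by unusual quoting, so treat it as a first-pass guard, not a parser.

```python
import re

# BACKUP options that cause the backup files to be encrypted.
ENCRYPTION_OPTIONS = ("kms", "encryption_passphrase")

_BACKUP_RE = re.compile(r"^\s*BACKUP\b", re.IGNORECASE)
_OPTION_RE = re.compile(
    r"\b(" + "|".join(ENCRYPTION_OPTIONS) + r")\s*=", re.IGNORECASE
)


def is_encrypted_backup(statement: str) -> bool:
    """True if the statement sets one of the encryption options."""
    return bool(_OPTION_RE.search(statement))


def find_unencrypted_backups(statements):
    """Return the BACKUP statements that set no encryption option."""
    return [
        s for s in statements
        if _BACKUP_RE.match(s) and not is_encrypted_backup(s)
    ]
```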

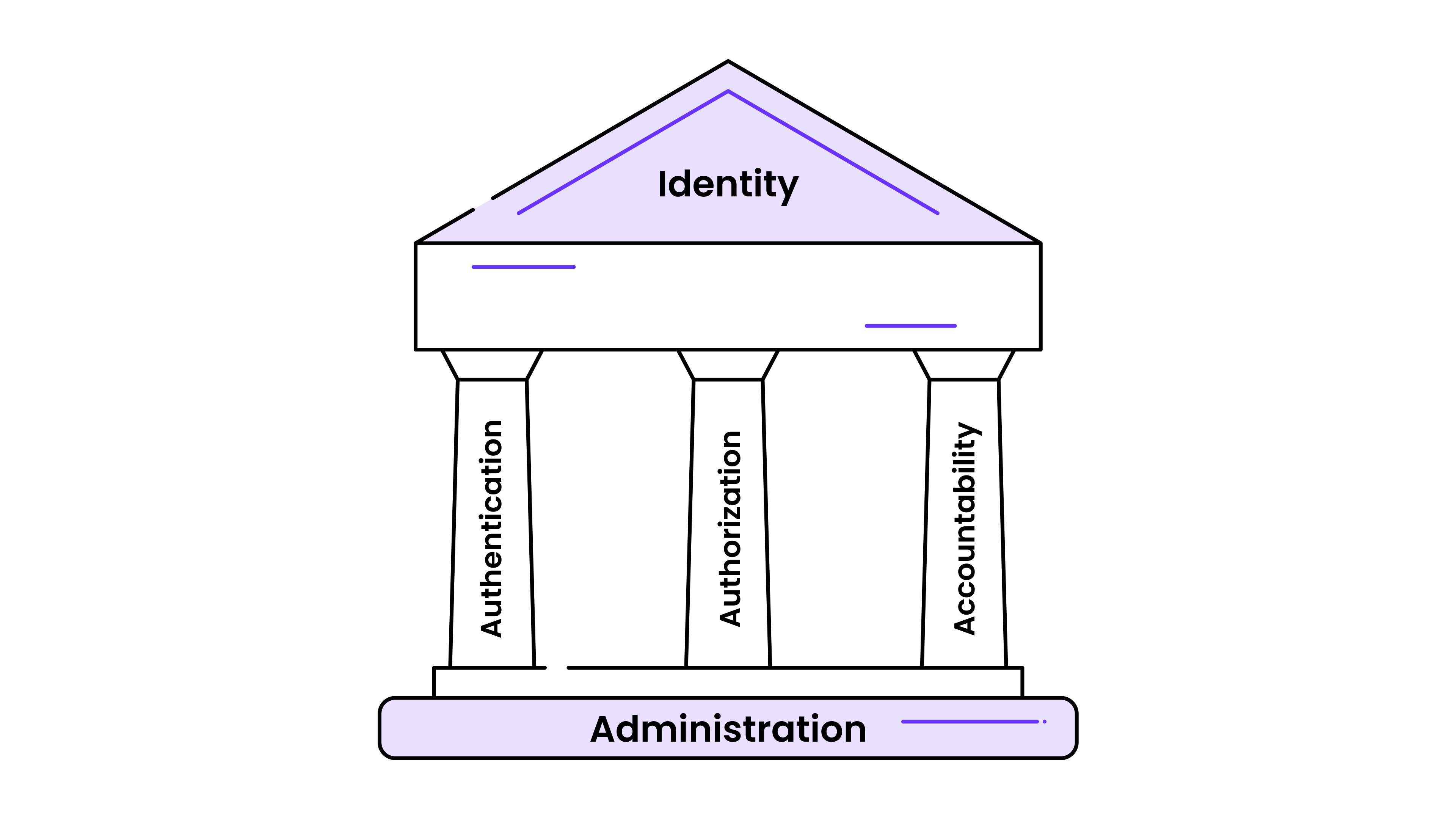

Authentication and Identity Management

Certificate-Based Authentication

CockroachDB uses X.509 certificates for both node and user authentication. This eliminates password-based vulnerabilities for administrative access:

Node certificates: Each CockroachDB node has a unique certificate signed by the cluster's CA. This prevents unauthorized nodes from joining the cluster.

Client certificates: Users can authenticate using client certificates instead of passwords. Essential for service accounts and automated tools that need database access.

Certificate generation: The `cockroach cert` command simplifies certificate creation, but integrate with your existing PKI infrastructure for production deployments.
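For a service account, client-certificate authentication mostly comes down to pointing the driver at the right files. The helper below is a minimal sketch that builds a PostgreSQL-style connection URL using the standard `sslmode`, `sslrootcert`, `sslcert`, and `sslkey` parameters. It assumes the `ca.crt` and `client.<user>.crt`/`.key` file names that `cockroach cert` produces; the host, database, and directory in the usage note are placeholders.

```python
from urllib.parse import urlencode


def build_cert_auth_url(user: str, host: str, database: str,
                        certs_dir: str, port: int = 26257) -> str:
    """Build a connection URL that authenticates with a client certificate."""
    params = {
        # verify-full checks both the CA chain and the server hostname.
        "sslmode": "verify-full",
        "sslrootcert": f"{certs_dir}/ca.crt",
        "sslcert": f"{certs_dir}/client.{user}.crt",
        "sslkey": f"{certs_dir}/client.{user}.key",
    }
    # safe="/" keeps file paths readable instead of percent-encoding slashes.
    query = urlencode(params, safe="/")
    return f"postgresql://{user}@{host}:{port}/{database}?{query}"
```

Calling `build_cert_auth_url("alice", "db.example.internal", "company_data", "/etc/cockroach/certs")` yields a URL with no password in it, which is the point: the private key file is the credential, so lock down its permissions.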

SASL/SCRAM-SHA-256 Authentication

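For password-based authentication, CockroachDB supports SCRAM-SHA-256. The server stores a salted, iterated hash of the password rather than the password itself, and the challenge-response exchange means the cleartext password is never sent to the server, even inside the TLS session. A stolen credential table or a logged handshake therefore does not directly reveal passwords.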
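To make "salted hashes instead of plaintext" concrete, here is a small sketch of the values a SCRAM-SHA-256 server keeps, following the key derivation in RFC 5802 and RFC 7677. It is an illustration of the scheme, not CockroachDB's code: it skips the SASLprep normalization step, and the 4096 iteration count is just the RFC's example value, not CockroachDB's default.

```python
import hashlib
import hmac


def scram_sha256_verifier(password: str, salt: bytes, iterations: int = 4096) -> dict:
    """Derive the SCRAM-SHA-256 values a server stores instead of the password."""
    # SaltedPassword = PBKDF2-HMAC-SHA256(password, salt, iterations)
    salted = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    # ClientKey and ServerKey are HMACs of fixed labels keyed by SaltedPassword.
    client_key = hmac.new(salted, b"Client Key", hashlib.sha256).digest()
    server_key = hmac.new(salted, b"Server Key", hashlib.sha256).digest()
    # Only the hash of ClientKey is stored, so the server cannot impersonate the client.
    stored_key = hashlib.sha256(client_key).digest()
    return {
        "salt": salt,
        "iterations": iterations,
        "stored_key": stored_key,
        "server_key": server_key,
    }
```

In real use the salt would come from `os.urandom(16)` per user. Because the salt differs for every user, identical passwords produce different stored keys, and the iteration count makes offline guessing slower.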

Single Sign-On (SSO) Integration

The web console supports SSO authentication via OpenID Connect (OIDC). Integrate with:

- Azure Active Directory

- Google Workspace

- Okta

- Any OIDC-compliant identity provider

Production tip: The OIDC integration described here covers web console access only, not SQL connections. Applications still need certificate or SCRAM authentication for database access, unless you separately configure cluster SSO with JWTs for SQL clients.

Role-Based Access Control (RBAC)

CockroachDB implements comprehensive RBAC with PostgreSQL-compatible syntax. The role-based security model supports fine-grained permissions and inheritance:

User and Role Management

```sql
-- Create roles for different access levels
CREATE ROLE read_only;
CREATE ROLE data_analyst;
CREATE ROLE application_user;

-- Grant specific permissions
GRANT SELECT ON ALL TABLES IN SCHEMA public TO read_only;
GRANT SELECT, INSERT, UPDATE ON orders, customers TO application_user;

-- Create users and assign roles
CREATE USER alice WITH PASSWORD 'secure_password';
GRANT data_analyst TO alice;
```
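Role inheritance can be pictured as a walk over the membership graph: a user's effective privileges are everything granted to the user plus everything granted to any role reachable through membership. The toy model below shows only that idea; it is not how CockroachDB stores or evaluates privileges, and the data structures are hypothetical.

```python
def effective_privileges(principal, memberships, grants):
    """Collect privileges held directly or through role membership.

    memberships: dict mapping a user/role to the set of roles it is a member of.
    grants: dict mapping a user/role to a set of (privilege, object) tuples.
    """
    seen = set()
    stack = [principal]
    privileges = set()
    while stack:
        current = stack.pop()
        if current in seen:  # guard against cycles and repeated roles
            continue
        seen.add(current)
        privileges |= grants.get(current, set())
        stack.extend(memberships.get(current, ()))
    return privileges
```

For example, if `alice` is a member of `data_analyst` and you additionally ran `GRANT read_only TO data_analyst` (an assumption, not part of the statements above), then `alice` would pick up `SELECT` on the public tables without ever being granted it directly. That indirection is why role and membership changes are worth auditing.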

Granular Permissions

Unlike some NoSQL databases, CockroachDB supports fine-grained permissions:

- Database-level: Control access to entire databases

- Schema-level: Restrict access to specific schemas

- Table-level: Grant permissions on individual tables

- Column-level: Hide sensitive columns from specific roles, for example by granting access to a view that omits them

- Row-level: Filter data based on user context (enterprise feature)

Schema design impact: Design your schema with security in mind. Group sensitive tables into separate schemas and use different service accounts for different application components.

Network Security and Access Control

IP Address Allowlisting

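Restrict client addresses at both the SQL and network levels. CockroachDB has no per-user `allowed_ips` setting; on self-hosted clusters, address restrictions are written as host-based authentication (HBA) rules in a cluster setting:

```sql
-- SQL-level IP restrictions via host-based authentication (first matching rule wins)
CREATE USER external_api WITH PASSWORD 'password' VALID UNTIL '2025-12-31';
SET CLUSTER SETTING server.host_based_authentication.configuration = '
host all external_api 203.0.113.0/24 cert-password
host all external_api 198.51.100.5/32 cert-password
host all external_api all reject
host all all all cert-password';
```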

Network-level filtering: Use cloud provider security groups, firewalls, or VPC configurations to restrict network access. Don't rely solely on application-level controls.
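Allowlists are easy to get subtly wrong (a host address where a network was intended, a missing range), so it helps to test them before they go into a firewall rule or database configuration. The following is a minimal sketch using Python's `ipaddress` module; the function names are illustrative.

```python
import ipaddress


def parse_allowlist(spec: str):
    """Parse a comma-separated list of IPs and CIDR ranges into network objects.

    strict=True rejects entries such as "203.0.113.7/24" whose host bits are set,
    which usually indicates a typo.
    """
    return [
        ipaddress.ip_network(item.strip(), strict=True)
        for item in spec.split(",")
        if item.strip()
    ]


def is_allowed(client_ip: str, allowlist) -> bool:
    """True if client_ip falls inside any allowlisted network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in allowlist)
```

With `parse_allowlist("203.0.113.0/24,198.51.100.5")`, the address `203.0.113.42` is accepted while `198.51.100.6` is rejected, because a bare IP is treated as a single-host `/32` network.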

Private Network Connectivity

For cloud deployments, CockroachDB supports:

- VPC Peering: Connect from your existing cloud VPCs

- AWS PrivateLink: Keep traffic within AWS backbone

- GCP Private Service Connect: Private connectivity within Google Cloud

Security benefit: Private connectivity prevents database traffic from traversing the public internet, reducing attack surface and meeting compliance requirements for data in transit.

Compliance and Regulatory Frameworks

Supported Compliance Standards

CockroachDB Dedicated clusters support the following standards and regulations:

PCI DSS: Payment Card Industry compliance for handling payment card data. Requires enabling specific features and following operational procedures.

SOC 2 Type II: Annual audits verify security controls and processes. CockroachDB's infrastructure and operational procedures meet SOC 2 requirements.

GDPR/CCPA: These are regulations rather than certifications; compliance comes from how you use encryption, access controls, and audit logging. Row-level security limits who can read personal data, and regional tables help with data residency requirements.

Compliance Architecture Considerations

Data residency: Use regional tables to ensure data stays within specific geographic boundaries. Critical for GDPR and similar regulations. The data residency guide covers implementation strategies.

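Right to be forgotten: Implement data deletion procedures that work across distributed replicas. CockroachDB's transactional guarantees ensure a delete is applied consistently on every replica, but older MVCC versions of the rows remain on disk until garbage collection runs (controlled by the `gc.ttlseconds` zone setting), and earlier backups still contain the data, so account for both in your procedure.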

Audit requirements: Enable comprehensive audit logging and ensure logs are tamper-proof and retained according to compliance requirements. The compliance logging guide covers configuration options.

Production Security Hardening

Secure Cluster Configuration

Disable insecure mode: Never run production clusters with `--insecure`. Always use certificates and TLS encryption.

Certificate management: Implement automated certificate rotation. Plan for certificate expiry events and have emergency procedures ready.

Network segmentation: Isolate database clusters in private subnets. Use bastion hosts or VPN connections for administrative access.

Monitoring and Alerting

Set up security-focused monitoring:

- Failed authentication attempts: Alert on suspicious login patterns

- Certificate expiry: Monitor certificate validity periods

- Privilege escalation: Track role and permission changes

- Unusual access patterns: Monitor for off-hours access or unusual geographic locations

Integration tip: CockroachDB exposes its metrics in Prometheus format at each node's `/_status/vars` HTTP endpoint. Build dashboards that show authentication failures, certificate status, and access patterns.
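If you want a quick check outside of a full Prometheus setup, the text format is simple enough to parse by hand. The sketch below reads Prometheus exposition text and reports how many seconds remain before the earliest certificate expiry. The metric name `security_certificate_expiration_node` is an assumption about what your cluster exports (a gauge holding the expiry as a Unix timestamp); confirm the name against your own metrics output before relying on it.

```python
def parse_metrics(text: str) -> dict:
    """Parse Prometheus text exposition into {metric_name: [values]}.

    Labels are dropped and comment lines (# HELP / # TYPE) are skipped.
    """
    metrics = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "{" in line:
            name, _, rest = line.partition("{")
            rest = rest.rpartition("}")[2]  # text after the closing brace
        else:
            name, _, rest = line.partition(" ")
        fields = rest.split()
        if not fields:
            continue
        try:
            value = float(fields[0])  # an optional timestamp may follow; ignore it
        except ValueError:
            continue
        metrics.setdefault(name, []).append(value)
    return metrics


def cert_seconds_remaining(metrics: dict, now_ts: float,
                           metric: str = "security_certificate_expiration_node"):
    """Seconds until the earliest expiry, or None if the metric is absent.

    Values of 0 are skipped on the assumption that they mean "no certificate".
    """
    values = [v for v in metrics.get(metric, []) if v > 0]
    if not values:
        return None
    return min(values) - now_ts
```

Fetch the endpoint over HTTPS with your usual client, pass the body to `parse_metrics`, and alert when `cert_seconds_remaining` drops below your rotation window.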

Security for distributed databases is challenging, but CockroachDB gives you the tools you need without adding much operational pain of its own. The key is understanding how these security features work together and rolling them out in stages instead of trying to implement everything at once.