Claude 3.5 Haiku is Anthropic's fastest AI model, responding in about 0.52 seconds instead of the 2-5 seconds you get with larger models. Released October 22, 2024, it's built for when you need an AI that doesn't make users abandon your app while waiting.

Been using this since it dropped. The 40.6% score on SWE-bench Verified beats GPT-4o and even the original Claude 3.5 Sonnet. Yeah, it's wrong more than half the time on coding tasks, but that's honestly better than some senior developers I've worked with after their third coffee.

Here's what I've figured out:

- Context Window: 200,000 tokens (about 150K words, but rate limits hit way before that)

- Response Time: ~0.52 seconds (benchmarked here, assuming perfect conditions which never happen)

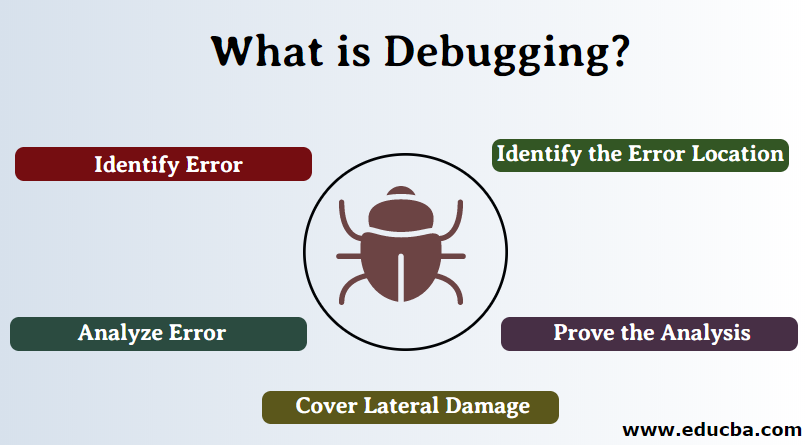

- Capabilities: Code completion, debugging, reasoning, tool use (actually works, unlike some competitors)

- Input Types: Text only (images "coming soon" since forever)

- Pricing: $0.80 input, $4 output per million tokens (expensive enough to make finance teams cry)
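The 200,000-token / 150K-word figure above implies roughly 0.75 words per token. Here's a rough sketch of a pre-flight check built on that ratio. It's a heuristic, not a real tokenizer, and the `reserved_for_output` parameter is a hypothetical knob I made up for leaving room for the reply:

```python
import math

CONTEXT_WINDOW_TOKENS = 200_000
# Implied by "200,000 tokens is about 150K words"; a rough heuristic only.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Approximate the token count from a whitespace word count."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Check whether the prompt plus a reserved reply budget fits the window.

    reserved_for_output is a hypothetical safety margin, not an API setting.
    """
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

Real token counts vary with language and content (code tokenizes very differently from prose), so treat this as a sanity check rather than a guarantee.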

The Part Where Your CFO Starts Crying

At $0.80 in and $4 out per million tokens, Claude 3.5 Haiku costs about 5.3x what GPT-4o Mini charges for input ($0.15) and about 6.7x for output ($0.60). Burned through maybe 10M output tokens last month and got hit with a $40 bill. Scale that to production volumes and you're looking at real money fast. Check out Vantage's cost calculator before committing to anything serious.
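To sanity-check a bill like that, here's a minimal cost sketch using the per-million prices quoted above. It assumes all 10M tokens were output tokens, since that's the only split that lands on $40. The function name is mine, not anything from an SDK:

```python
# Prices in USD per million tokens, taken from the figures quoted above.
INPUT_PRICE_PER_M = 0.80
OUTPUT_PRICE_PER_M = 4.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated bill in USD for a given token volume."""
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# 10M output tokens and no input tokens, matching the $40 bill above.
print(f"${estimate_cost(0, 10_000_000):.2f}")  # $40.00
```

Plug in your own expected input and output volumes before committing. Output-heavy workloads are where the bill climbs fastest.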

What It's Actually Good For

Real companies are betting production workloads on this. Replit uses it for app evaluation - makes sense when you need sub-second responses and can justify the premium. Apollo reports better sales email generation, probably because it's smart enough to avoid sounding like ChatGPT while being fast enough for real-time workflows.

Found the sweet spot in user-facing applications where response time trumps token cost. Code completion in VS Code extensions, chatbots where users notice the difference between 0.5 and 2 seconds, real-time content moderation - anywhere human patience is the bottleneck, not your budget.