Today is Friday, September 05, 2025. AWS has been shitting out new AI features this year like they're trying to win some kind of service launch competition. Most of it is marketing theater, but a few things actually work when you're debugging at 3am and your models are failing in prod. Here's what doesn't suck versus what looks cool in demos but breaks the moment real data touches it.

SageMaker Unified Studio: Finally, A Single Place That Works

SageMaker Unified Studio - Finally, one console to rule them all (theoretically)

Amazon SageMaker Unified Studio went GA in March 2025 and it's probably the most useful thing AWS has shipped for AI teams since the original SageMaker. After years of jumping between seventeen different AWS consoles, hunting for that one dataset Sarah uploaded last month, and debugging IAM permissions that make no fucking sense, having one interface that doesn't make you want to throw your laptop out the window is genuinely revolutionary.

So here's what this thing actually does - it's one workspace where you can query data from S3, Redshift, and whatever other data sources you've got scattered around, all from the same interface. Build ML models without jumping between five different AWS consoles. Share notebooks and datasets across teams without the usual IAM permission hell that makes everyone want to quit.

Production reality: Been using it for six months. The data discovery actually works - no more Slack messages asking "where did Mike put the customer churn data?" that nobody can answer. Compliance finally stopped breathing down my neck about data access because the governance tools actually show what I'm doing instead of the black box nightmare we had before. Visual ETL is fine for basic stuff, but anything interesting still needs actual code because drag-and-drop can't handle real business logic. Plus the visual editor randomly corrupts your workflows and you get InvalidParameterException: Workflow definition contains invalid syntax errors with no indication of what's actually wrong. The troubleshooting guide basically says "try again" for every error.

The catch: It's still SageMaker under the hood, so expect AWS bills that'll give your CFO nightmares if you leave shit running. The QuickSight integration works but building dashboards feels like watching paint dry. And like every "unified" platform AWS has ever built, it's great until you need to do something they didn't think of - then you're back to stitching together twelve different services with IAM policies held together by duct tape and prayers.

Bedrock Multi-Agent Collaboration: Agents That Don't Hate Each Other

Amazon Bedrock Multi-Agent System - Multiple AI agents that (sometimes) coordinate better than your dev team

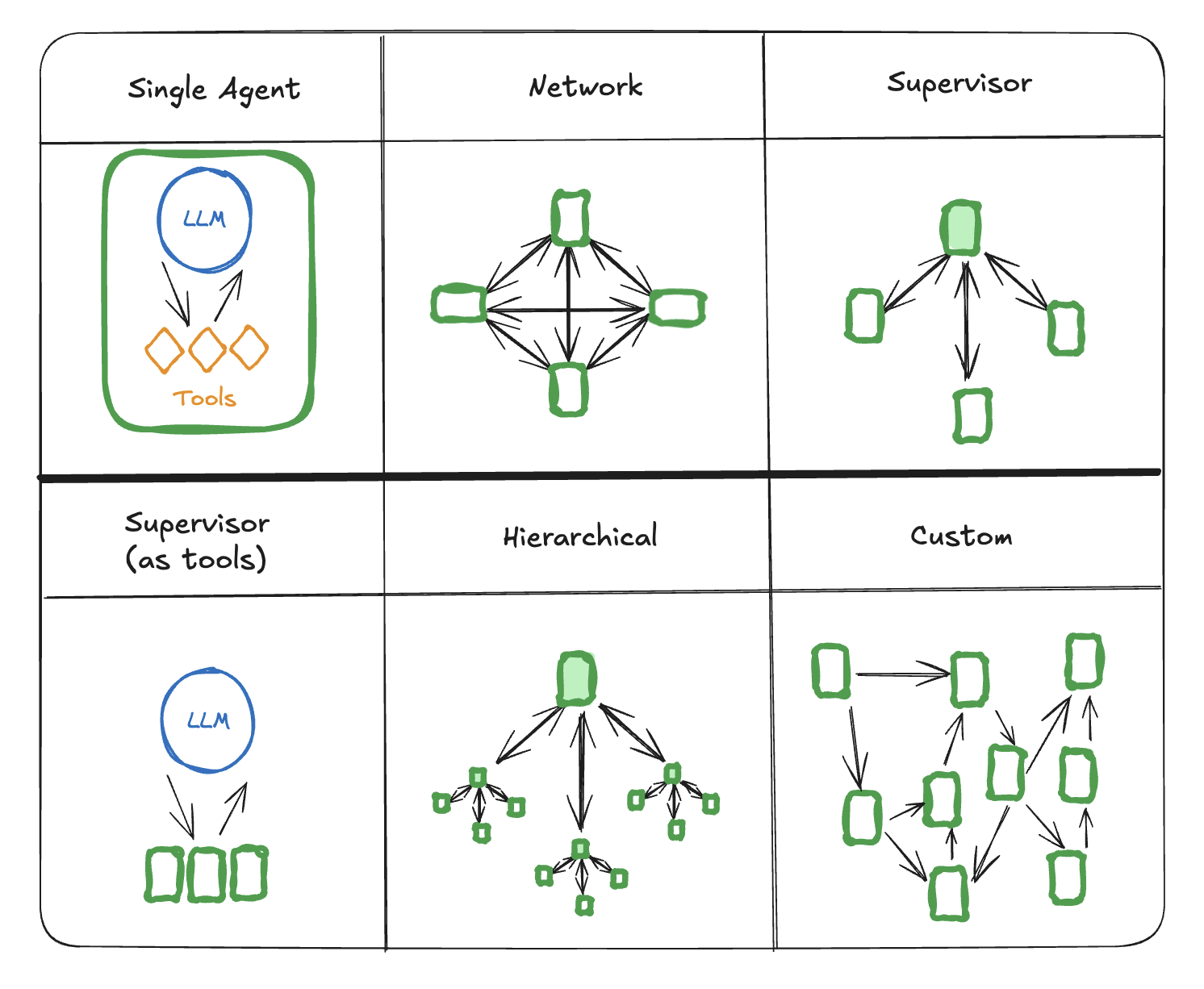

Here's how this multi-agent mess works - a supervisor agent breaks down your request and farms it out to specialist agents that actually know what they're doing in their specific domains, instead of one dumb agent trying to handle everything and failing spectacularly.

Amazon Bedrock multi-agent collaboration became generally available in early 2025. This lets you build AI systems where multiple specialized agents work together instead of trying to cram everything into one massive prompt that breaks when users ask unexpected questions.

The supervisor takes complex requests and delegates them to specialist agents - one handles financial analysis, another deals with regulatory compliance, whatever. Each agent has its own knowledge base and tools, so they can actually be good at their specific job without stepping on each other's toes like your typical cross-functional team.

Real-world usage: We built a customer support system with three agents - one handles technical issues, another processes billing questions, and a third escalates to humans when needed. Way better than our previous single-agent system that was completely fucking useless for anything more complex than "what's the weather today?" The agents can work in parallel, so complex requests that used to take 30-45 seconds now finish in 8-12 seconds when they work. When they don't work, you get delightful errors like Agent execution failed: Unable to determine routing destination with zero helpful documentation on what that actually means.

What breaks: Agent coordination becomes a complete shitshow when requests span multiple domains. Had this one case where the financial and compliance agents got into some weird loop arguing about a purchase approval - supervisor just gave up after 30 seconds and threw a HTTP 500 Internal Server Error. The error handling docs are useless - when agents can't coordinate properly, you're stuck debugging with CloudWatch logs that basically tell you "something went wrong somewhere." Also expensive as hell - our AWS bill tripled even though the system works better overall.

Amazon Nova Model Customization: Fine-Tuning That Actually Works

Amazon Nova customization in SageMaker was announced in late 2024 and became more widely available in 2025. This gives you extensive fine-tuning capabilities for Amazon's foundation models - continued pre-training, supervised fine-tuning, direct preference optimization, reinforcement learning from human feedback, and model distillation.

What makes it different: Previous AWS fine-tuning options were basically prompt engineering with extra steps. Nova customization lets you actually modify the model weights using your data. The model distillation features are particularly useful - you can create smaller, cheaper models that keep most of the performance of the big ones, maybe like 80-90% depending on your use case and how lucky you get.

Production experience: Used Nova fine-tuning for domain-specific legal document analysis. Base model was terrible at understanding contract clauses - kept returning garbage like "this appears to be a legal document" instead of actual analysis. Fine-tuned model actually works now and can spot liability clauses the lawyers care about. The process beats training from scratch but you'll still hit ModelTrainingJobInProgress errors that last for hours with no progress updates. Took two weeks instead of three months, but expect random ValidationException: Training job failed due to client error messages that AWS support can't explain.

Reality check: First training run failed after 18 hours because we hit some undocumented token limit. Second run failed because the training data S3 bucket had versioning enabled and Nova couldn't figure out which version to use - nowhere in the docs does it mention this gotcha. Third run actually worked but produced a model that was somehow worse than the base model. Turned out our eval dataset was contaminated with training examples, so it looked good in validation but was complete shit in real use. Model distillation cut our inference costs by 60% but debugging the distillation pipeline when it breaks is like trying to debug a black box inside another black box.

Cost reality: Fine-tuning will hurt your wallet - we spent like 8 grand on our first training run, then another 12 on the second one when that failed, then 6 more on the third attempt that actually worked. Costs are all over the place depending on dataset size and how many times you have to retry when shit breaks. Could be anywhere from 5 to 20 grand per successful training run. But if you've got specialized requirements that off-the-shelf models can't handle, it beats building from scratch. Would have cost us at least 50k and six months of developer sanity to build our legal doc analyzer the old way.

OpenAI Open Weight Models on AWS: The Models Everyone Actually Wants

OpenAI + AWS Integration - GPT models that you can actually control (for a premium price)

AWS announced in August 2025 that OpenAI's open weight models are available in Bedrock and SageMaker. This includes GPT-OSS-120B and GPT-OSS-20B models that you can run on your own infrastructure or through managed AWS services.

Why this matters: Teams have been asking for GPT-quality models they can actually control. The managed OpenAI API is fast and convenient but doesn't work for regulated industries that need on-premises deployment or custom fine-tuning. Having these models available through AWS closes that gap for enterprise compliance requirements.

Technical details: The models are available through SageMaker JumpStart for self-hosted deployment and through Bedrock for managed inference. Performance is comparable to GPT-4 class models but with the flexibility to modify training data, implement custom guardrails, and maintain data residency requirements.

Early results: Been testing GPT-OSS-120B for the past month. Quality is solid - actually better than Claude 3.5 Sonnet for technical writing, which surprised the hell out of me. Costs are higher than hitting OpenAI's API directly, maybe 10-20% more depending on usage patterns, but you can actually fine-tune it and keep your data from being used to train their next model, which legal finally stopped complaining about.

Bedrock AgentCore: The Modular Agent Platform (Preview)

Amazon Bedrock AgentCore entered preview in July 2025. This is AWS's attempt to build a modular, composable platform for AI agents that works with any model (not just Bedrock) and any open-source agent framework.

The vision: Instead of being locked into AWS's agent architecture, you can use components independently. Want AWS's agent orchestration but with your own models? Fine. Want to use LangGraph for workflow management but AWS's security and scaling? Also fine. The services are designed to work together or separately.

Preview limitations: Don't put this in production yet unless you hate your weekends. The docs assume you have a PhD in agent architectures. Error handling is a coin flip - sometimes it fails gracefully, sometimes it just explodes with a ValidationException that tells you nothing. Integrating with existing systems requires so much custom code you might as well build it yourself.

Worth watching: If AWS doesn't fuck this up (big if), it could solve the vendor lock-in nightmare that keeps enterprise teams awake at night. But it's preview software from the company that gave us AWS Config and Systems Manager, so maybe don't hold your breath. Wait for GA and let other people discover the gotchas first.

What Didn't Make the List (And Why)

S3 Tables integration: Useful for data teams but doesn't fix the core problem that finding data in S3 is still like searching for a needle in a haystack made of more needles.

Bedrock IDE: Still half-baked. It's a basic notebook that doesn't do anything better than Jupyter, plus it has that special AWS flavor where simple things randomly break for no reason.

Lambda model integration: Incremental improvements that fix problems AWS created in the first place. Still doesn't solve the cold start issue that makes serverless ML painful for anything time-sensitive.

Implementation Timeline for Teams

Based on production experience, here's the realistic adoption timeline for these new features:

Month 1-2: Start with SageMaker Unified Studio if your team struggles with data access and discovery across multiple AWS services. The learning curve is manageable and immediate productivity gains are significant.

Month 3-4: Evaluate Bedrock multi-agent collaboration for use cases where single-agent approaches are failing. Start with simple two-agent systems before building complex orchestrations.

Month 6-8: Consider Nova model customization if you have domain-specific requirements and budget for fine-tuning. The ROI is high for specialized use cases but requires dedicated ML engineering resources.

Month 9-12: Test OpenAI open weight models for enterprises with strict data governance requirements. These models provide GPT-class performance with the control and customization that regulated industries need.

The common thread across all these updates: AWS is finally building tools that recognize how teams actually work instead of forcing workflows around service boundaries. The question isn't whether these features are useful - it's whether your organization can adopt them fast enough to maintain competitive advantage.