The Computer Use API costs way more than you think. Fucking API burned through my budget in three days because nobody mentions the token overhead.

What They Don't Tell You About Costs

Anthropic's pricing docs mention Computer Use but the examples are bullshit. Screenshots pile up way faster than their cute little quickstart suggests. The official Computer Use documentation barely covers real-world cost implications.

Every request burns tokens on:

- System prompt overhead: ~800 tokens just to start

- Tool definitions: ~300 more tokens

- Screenshot processing: 1,200+ tokens per screenshot

So that's roughly $0.004 per screenshot, minimum, with Claude 3.5 Sonnet at $3/$15 per MTok (input/output): 1,200 tokens × $3 per million, before the fixed overhead. Doesn't sound like much until you realize this thing takes screenshots every few seconds.
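A quick sanity check of that arithmetic. The ~800/~300/1,200 token counts are this post's rough estimates, not official figures, and the 150 output tokens in the example call are a made-up value for illustration:

```python
# Rough per-request cost for one Computer Use turn.
# Token counts are this post's estimates, not official numbers.
SYSTEM_PROMPT_TOKENS = 800
TOOL_DEFINITION_TOKENS = 300
SCREENSHOT_TOKENS = 1200

INPUT_PRICE_PER_TOKEN = 3 / 1_000_000    # Claude 3.5 Sonnet: $3 per million input tokens
OUTPUT_PRICE_PER_TOKEN = 15 / 1_000_000  # $15 per million output tokens


def screenshot_cost(screenshot_tokens: int = SCREENSHOT_TOKENS) -> float:
    """Input-side cost of sending one screenshot."""
    return screenshot_tokens * INPUT_PRICE_PER_TOKEN


def request_cost(output_tokens: int, screenshots: int = 1) -> float:
    """Fixed overhead + screenshots going in, plus the model's reply coming out."""
    input_tokens = (
        SYSTEM_PROMPT_TOKENS
        + TOOL_DEFINITION_TOKENS
        + screenshots * SCREENSHOT_TOKENS
    )
    return input_tokens * INPUT_PRICE_PER_TOKEN + output_tokens * OUTPUT_PRICE_PER_TOKEN


if __name__ == "__main__":
    print(f"Per screenshot: ${screenshot_cost():.4f}")  # $0.0036
    print(f"Per request (150 output tokens): ${request_cost(150):.4f}")
```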

Why Costs Explode

Computer Use screenshots everything. And I mean everything. Page loads? Screenshot. Button clicked? Screenshot. Waiting for something to load? More screenshots.

I set up automation to fill some forms and went to grab coffee. Came back to see 200+ screenshots for what should've been a 5-step process.

What makes costs explode:

- Retry loops when Claude gets stuck on buttons

- High-res displays, because bigger images = more tokens

- Modern web apps that confuse the hell out of it

- Leaving this shit running unattended overnight
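There's a multiplier behind all of these: the Messages API is stateless, so if your agent loop keeps the full conversation, every earlier screenshot gets billed again on every later request. A minimal sketch of what that does to input tokens, assuming one new screenshot per turn, the rough overhead figures above, no prompt caching, and ignoring Claude's own replies that also pile up in history. The `keep_last_n` pruning option is a hypothetical policy for comparison, not a built-in setting:

```python
from typing import Optional

# Assumptions: fixed per-request overhead (system prompt + tool definitions),
# one new screenshot per turn, no caching, assistant text tokens ignored.
OVERHEAD_TOKENS = 800 + 300
SCREENSHOT_TOKENS = 1200
INPUT_PRICE_PER_TOKEN = 3 / 1_000_000


def total_input_tokens(turns: int, keep_last_n: Optional[int] = None) -> int:
    """Sum of input tokens across all turns of one run.

    keep_last_n: if set, only the N most recent screenshots are resent
    each turn (hypothetical pruning policy); None means full history.
    """
    total = 0
    for turn in range(1, turns + 1):
        in_context = turn if keep_last_n is None else min(turn, keep_last_n)
        total += OVERHEAD_TOKENS + in_context * SCREENSHOT_TOKENS
    return total


if __name__ == "__main__":
    for turns in (5, 50, 200):
        full = total_input_tokens(turns) * INPUT_PRICE_PER_TOKEN
        pruned = total_input_tokens(turns, keep_last_n=3) * INPUT_PRICE_PER_TOKEN
        print(f"{turns:>3} turns: ${full:.2f} full history, "
              f"${pruned:.2f} keeping the last 3 screenshots")
```

With full history the screenshot term grows with the square of the number of turns, which is why a 200-screenshot run hurts so much more than 40 five-screenshot runs.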

Lower Resolution = Lower Bills

Higher resolution screenshots cost way more tokens. Docs barely mention this but it's huge.

```bash
# Drop your resolution and save money
docker exec computer-use xrandr --output VNC-0 --mode 1024x768

# Note: breaks on some older xrandr versions (pre-1.5.0) with:
# "xrandr: cannot find display"
```

Hacky but works. Switched from 1440p to 1024x768 and bills dropped about 40%. UI elements are still big enough for Claude to find.
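Why resolution matters so much: Anthropic's vision docs give a rule of thumb of roughly width × height / 750 tokens per image, and (as I read them) images over about 1,600 tokens or with a long edge over 1,568 px get downscaled first. A rough estimator under those assumptions; the cap is approximate because the real resize preserves aspect ratio:

```python
# Approximate image tokens per screenshot at a given resolution.
# Rule of thumb: tokens ~= width * height / 750.
# Assumes oversized images are downscaled to roughly 1,600 tokens, so we cap there.
PIXELS_PER_TOKEN = 750
MAX_IMAGE_TOKENS = 1600


def estimate_image_tokens(width: int, height: int) -> int:
    return min(round(width * height / PIXELS_PER_TOKEN), MAX_IMAGE_TOKENS)


if __name__ == "__main__":
    for w, h in [(1024, 768), (1280, 800), (2560, 1440)]:
        print(f"{w}x{h}: ~{estimate_image_tokens(w, h)} tokens per screenshot")
```

Anthropic's Computer Use docs also, as far as I know, recommend staying at XGA (1024x768) or WXGA (1280x800), since anything bigger gets resized anyway and the resizing can throw off click coordinates.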

Don't Let Workflows Run Forever

Long workflows fail more and cost more. Every failure means starting over with fresh screenshots.

Break shit into smaller pieces:

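```python
# Hard limits before going broke
MAX_SCREENSHOTS = 15


def run_workflow(workflow_steps, execute_step, save_progress):
    # execute_step and save_progress are your own helpers; execute_step is
    # assumed to return an object with .worked and .screenshots_taken
    screenshot_count = 0
    for step in workflow_steps:
        if screenshot_count >= MAX_SCREENSHOTS:
            print("Hit screenshot limit, bailing out")
            break
        try:
            result = execute_step(step)
        except Exception as e:
            print(f"Step failed: {e}")
            break
        # Without this increment the limit never triggers
        screenshot_count += result.screenshots_taken
        if result.worked:
            save_progress(step)
```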

Forces you to design workflows that bail out instead of burning money on infinite retries.
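`save_progress` is left undefined in the loop above. One way to fill it in, so a failed run resumes instead of redoing (and re-screenshotting) finished steps, is a small JSON checkpoint file. This is a sketch: the file name and helper names are hypothetical, and it assumes each step has a stable string ID:

```python
import json
from pathlib import Path

# Hypothetical checkpoint location; use one file per workflow.
CHECKPOINT = Path("workflow_progress.json")


def load_completed(path: Path = CHECKPOINT) -> set[str]:
    """IDs of steps that finished in a previous run."""
    if not path.exists():
        return set()
    return set(json.loads(path.read_text()))


def save_progress(step_id: str, path: Path = CHECKPOINT) -> None:
    """Record a finished step so a rerun can skip it."""
    completed = load_completed(path)
    completed.add(step_id)
    path.write_text(json.dumps(sorted(completed)))


def remaining_steps(step_ids: list[str], path: Path = CHECKPOINT) -> list[str]:
    """Steps still to do, in their original order."""
    done = load_completed(path)
    return [s for s in step_ids if s not in done]
```

Feed `remaining_steps(...)` into the capped loop instead of the full step list, and a crash at step 4 costs you step 4's screenshots, not steps 1 through 4 again.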

Caching Reality Check

Prompt caching helps if you're doing similar tasks repeatedly, but screenshots change every time so it's limited. Check Anthropic's prompt caching guide and their recent token-saving updates for more optimization strategies.

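```python
# Cache the system prompt if doing similar tasks.
# The `system` parameter takes a list of content blocks, so wrap the block
# in a list and pass it as system=cached_system in your messages.create call.
cached_system = [
    {
        "type": "text",
        "text": "Your computer use system prompt...",
        "cache_control": {"type": "ephemeral"},
    }
]
```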
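To see what caching the fixed prefix actually buys you, here's a rough comparison. It assumes Anthropic's published multipliers for the default 5-minute cache (writes at 1.25× the base input price, reads at 0.1×), that every request after the first is a cache hit, and that screenshots are never cached. Also per Anthropic's docs, prefixes shorter than the model's minimum (1,024 tokens for Sonnet models) aren't cached at all:

```python
# Input cost with vs. without caching a fixed prompt prefix.
# Assumptions: 5-minute cache pricing, all requests after the first hit the
# cache, only the prefix is cached (screenshots billed at the normal rate).
BASE_INPUT_PRICE = 3 / 1_000_000  # Claude 3.5 Sonnet, $ per input token
CACHE_WRITE_MULTIPLIER = 1.25
CACHE_READ_MULTIPLIER = 0.10


def uncached_cost(requests: int, prefix_tokens: int, other_tokens: int) -> float:
    return requests * (prefix_tokens + other_tokens) * BASE_INPUT_PRICE


def cached_cost(requests: int, prefix_tokens: int, other_tokens: int) -> float:
    if requests == 0:
        return 0.0
    write = prefix_tokens * CACHE_WRITE_MULTIPLIER                   # first request
    reads = (requests - 1) * prefix_tokens * CACHE_READ_MULTIPLIER   # later hits
    fresh = requests * other_tokens                                  # screenshots etc.
    return (write + reads + fresh) * BASE_INPUT_PRICE


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(f"{n:>3} requests: ${uncached_cost(n, 1100, 1200):.4f} uncached, "
              f"${cached_cost(n, 1100, 1200):.4f} cached")
```

A single request is slightly more expensive with caching (you pay the write premium and never read it back), and the bigger the screenshot share of each request, the smaller the relative saving.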

Works best for repetitive workflows. If every task is different, caching won't save much. For web stuff, just use Selenium: it's way cheaper because there's no per-screenshot token bill. ChromeDriver 118+ handles most sites Computer Use struggles with anyway, and Playwright is often even faster than Selenium for modern web automation.

Don't Screenshot When Nothing Changed

Obviously don't send Claude another screenshot when the screen hasn't changed. Grabbing one locally to compare is free; sending it is what burns tokens.

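```python
import hashlib
import time

last_screenshot_hash = None


def should_take_screenshot(current_screenshot_data: bytes) -> bool:
    """Return True only if the screen changed since the last call."""
    global last_screenshot_hash
    # MD5 is fine here: we only need a fast fingerprint, not security
    current_hash = hashlib.md5(current_screenshot_data).hexdigest()
    if current_hash == last_screenshot_hash:
        time.sleep(2)  # nothing new; wait before checking again
        return False
    last_screenshot_hash = current_hash
    return True
```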

Screen hasn't changed? Don't burn tokens on the same image. This saved me about $15/day when I was debugging a flaky Docker Desktop setup that kept randomly stopping.
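Exact hashing only catches byte-identical captures, so a blinking cursor or a clock in the taskbar counts as "changed". A hedged variant, assuming you compare raw, same-sized pixel buffers rather than compressed PNG bytes, treats the screen as unchanged when only a tiny fraction of bytes differ. The 0.5% threshold is an arbitrary starting point, not a tested value:

```python
from typing import Optional

# Near-duplicate check on raw pixel buffers (e.g. decoded RGB bytes).
# Threshold is an assumption; tune it for your screen and apps.
CHANGE_THRESHOLD = 0.005  # fraction of differing bytes that counts as a change


def changed_fraction(prev: bytes, curr: bytes) -> float:
    """Fraction of positions where two buffers differ."""
    if len(prev) != len(curr):
        return 1.0  # size changed: treat as a full change
    if not curr:
        return 0.0
    diff = sum(a != b for a, b in zip(prev, curr))
    return diff / len(curr)


def screen_changed(prev: Optional[bytes], curr: bytes) -> bool:
    if prev is None:
        return True  # first capture always counts
    return changed_fraction(prev, curr) > CHANGE_THRESHOLD
```

This pure-Python loop is slow on full-screen buffers; NumPy would be the obvious swap if it shows up in profiling.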

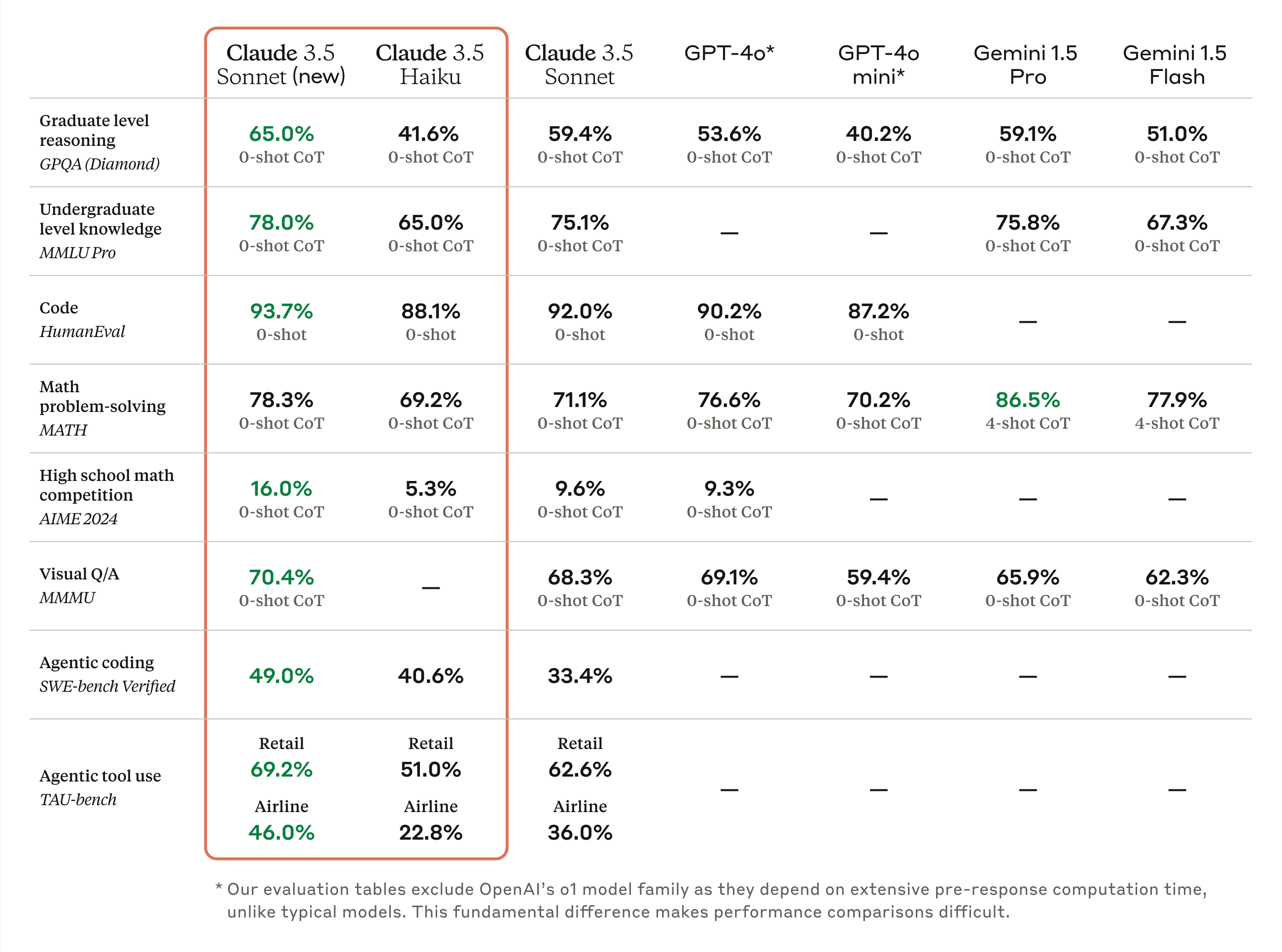

Model Selection Reality

| Model | Input Cost | Output Cost | What Actually Happens |
|---|---|---|---|
| Claude 3.5 Haiku | $0.80/MTok | $4/MTok | Cheap but misses obvious buttons |
| Claude 3.5 Sonnet | $3/MTok | $15/MTok | Costs more but usually works |

Tried Haiku to save money. Kept missing buttons and retrying. "Savings" got eaten by retry loops. Just use Sonnet unless you're doing really simple stuff.
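The retry math behind that: if one attempt costs `c` and succeeds with probability `p`, you expect about `1/p` attempts, so each completed task costs about `c/p`. A sketch assuming both models burn the same number of tokens per attempt (so the per-MTok price works as a relative cost; Haiku is about 0.27× Sonnet on both input and output). The success rates are placeholders, not measurements:

```python
# Expected cost per *completed* task when failed attempts are retried.
# Geometric model: expected attempts = 1 / success_rate.
def effective_cost(cost_per_attempt: float, success_rate: float) -> float:
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


def break_even_success_rate(cheap_cost: float, pricey_cost: float,
                            pricey_success: float) -> float:
    """Success rate the cheaper model needs to match the pricier one."""
    return pricey_success * cheap_cost / pricey_cost


if __name__ == "__main__":
    # Placeholder success rates, for illustration only.
    haiku = effective_cost(cost_per_attempt=0.80, success_rate=0.20)
    sonnet = effective_cost(cost_per_attempt=3.00, success_rate=0.90)
    print(f"Relative cost per completed task: Haiku {haiku:.2f}, Sonnet {sonnet:.2f}")
    print(f"Haiku breaks even at {break_even_success_rate(0.80, 3.00, 0.90):.0%} success")
```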

Track Your Spending or Go Broke

Set up cost tracking or wake up to scary bills:

```python
# Track spending before going broke
DAILY_BUDGET = 50  # dollars
current_spend = 0.0


def track_cost(input_tokens, output_tokens):
    global current_spend
    # Claude 3.5 Sonnet pricing: $3 / $15 per million input / output tokens
    cost = input_tokens * 3e-6 + output_tokens * 15e-6
    current_spend += cost
    if current_spend > DAILY_BUDGET * 0.8:
        print(f"WARNING: ${current_spend:.2f} spent today")
    if current_spend > DAILY_BUDGET:
        # Actually stop the automation: let this propagate out of the agent loop
        raise RuntimeError("EMERGENCY: Daily budget exceeded!")
```

Need some way to kill runaway automation before it drains your account. Pro tip: AWS billing alerts take 6+ hours to trigger, so don't rely on them alone. Check out rate limits documentation for API-level controls.
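Rather than counting tokens yourself, you can feed a tracker the `usage` object that comes back with every Messages API response. In the Anthropic Python SDK it has `input_tokens` and `output_tokens`, plus `cache_creation_input_tokens` and `cache_read_input_tokens` when prompt caching is on. A sketch: prices are hardcoded for Claude 3.5 Sonnet, cache tokens use the 1.25×/0.1× multipliers, and `BudgetExceeded` is a hypothetical exception your loop would let bubble up to stop the run:

```python
# Budget tracker fed from the API's usage object (e.g. response.usage).
# Prices are Claude 3.5 Sonnet list prices; adjust for your model.
INPUT_PRICE = 3 / 1_000_000
OUTPUT_PRICE = 15 / 1_000_000
CACHE_WRITE_PRICE = INPUT_PRICE * 1.25
CACHE_READ_PRICE = INPUT_PRICE * 0.10


class BudgetExceeded(RuntimeError):
    """Raised once spending passes the budget (hypothetical, define your own)."""


class CostTracker:
    def __init__(self, daily_budget: float, warn_at: float = 0.8):
        self.daily_budget = daily_budget
        self.warn_at = warn_at
        self.spent = 0.0

    def record(self, usage) -> float:
        """Add one response's cost; raise BudgetExceeded past the budget."""
        # Cache fields may be missing or None when caching isn't used
        cache_write = getattr(usage, "cache_creation_input_tokens", 0) or 0
        cache_read = getattr(usage, "cache_read_input_tokens", 0) or 0
        cost = (
            usage.input_tokens * INPUT_PRICE
            + usage.output_tokens * OUTPUT_PRICE
            + cache_write * CACHE_WRITE_PRICE
            + cache_read * CACHE_READ_PRICE
        )
        self.spent += cost
        if self.spent > self.daily_budget:
            raise BudgetExceeded(
                f"${self.spent:.2f} spent, budget ${self.daily_budget:.2f}")
        if self.spent > self.daily_budget * self.warn_at:
            print(f"WARNING: ${self.spent:.2f} of ${self.daily_budget:.2f} spent today")
        return cost
```

Call `tracker.record(response.usage)` right after each API call, before executing whatever action Claude asked for, so the budget check happens before the next screenshot is taken.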

What's Actually Improved

Computer Use accuracy has gotten better - fewer retry loops now. Documentation has more cost optimization stuff. Alternative tools like Selenium are still way cheaper for web automation. Here's a comprehensive cost optimization guide that covers hidden API costs.

Bottom line: Computer Use is expensive but more reliable now. Traditional browser automation tools still make more financial sense for most stuff. Check out Anthropic's official engineering blog for the latest optimization techniques.