Every vendor shows you the same bullshit math: 200 developers × $39/month × 12 months = about $93K annually. Except that $39 never includes the security review that takes 6 months, the SSO integration that destroys your auth stack, or the compliance audit that finds 17 ways your tool violates policy.

This Is Where Everything Goes to Shit

The fintech I worked with budgeted around $120K for GitHub Copilot Enterprise. Simple math - 250 developers, $39 each, done.

About 18 months later they were somewhere north of $350K and still fighting with compliance about data residency. The tool worked OK when it wasn't getting rate-limited or blocked by security policies. Actually, that's being generous - it worked maybe 60% of the time.
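
For the record, here's that gap as arithmetic. This is a minimal sketch: the function names are mine, $39 is the list price quoted above, and $350K is my rough number, not an audited total. Keep in mind the quote is annual while the spend covers about 18 months.

```python
def list_price_annual(seats: int, price_per_seat_month: float) -> float:
    """Annual license cost from the vendor's per-seat math."""
    return seats * price_per_seat_month * 12


def overrun_multiple(actual_spend: float, budgeted: float) -> float:
    """How many times the original budget was actually spent."""
    return actual_spend / budgeted


quoted = list_price_annual(250, 39)           # 117000, the "around $120K" budget
multiple = overrun_multiple(350_000, quoted)  # approximate, since $350K is approximate
print(f"quoted ${quoted:,.0f}, spent about {multiple:.1f}x that")
```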

They weren't the only ones. Here's where it fell apart for everyone else:

European Company Discovers GDPR the Hard Way

This European tech company with maybe 300 developers tried to deploy GitHub Copilot globally. Budgeted something like $140K based on the per-seat math.

Last I heard they were around $400K and still fighting with lawyers.

The whole thing imploded when their Brussels legal team figured out GitHub processes EU developer data in US cloud regions. EU privacy regs meant they needed separate regional deployments, except GitHub doesn't really support that despite what sales promised.

Where It All Went Wrong:

GDPR Compliance Hell

- External lawyers charged something like $80K just to review GitHub's data processing agreements

- Each EU subsidiary needed its own Data Protection Impact Assessment (DPIA)

- German ops demanded data never leave EU borders (which GitHub Copilot can't do)

- Months of back-and-forth with GitHub legal about regional data isolation

SSO Integration Nightmare

Their existing Active Directory setup worked fine until they flipped on Copilot Enterprise SSO. Authentication started randomly failing across their entire dev environment with unhelpful "SAML_RESPONSE_ERROR" messages. Took weeks and probably $25K in SAML consulting to fix.

The Rate Limiting Bullshit

GitHub Copilot Enterprise has these undocumented rate limits, maybe 150-200 requests per hour per user. Their senior engineers hit these constantly during crunch time, making the tool basically useless when you need it most. GitHub's helpful response: "upgrade to premium support for another $50K annually." I wanted to punch their sales rep.
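
To put that range in perspective, here's a back-of-envelope sketch. It assumes the 150-200 figure is in the right ballpark (it's my estimate, not a published limit) and that each completion or chat call counts as one request, which is also an assumption.

```python
def seconds_per_request(limit_per_hour: int) -> float:
    """Average spacing between requests that just stays under an hourly cap."""
    return 3600 / limit_per_hour


for limit in (150, 200):
    spacing = seconds_per_request(limit)
    print(f"{limit}/hour allows one request every {spacing:.0f} seconds")
```

One request every 18 to 24 seconds sounds fine until you remember that inline completions can fire many times a minute while you type.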

Developer Revolt

Half their frontend team switched to Cursor anyway because Copilot's suggestions sucked for React 18 with TypeScript 5.0. Now they're paying for both tools - GitHub for compliance, Cursor for actual productivity. Classic enterprise clusterfuck.

Why Banks Get Screwed on AI Tool Pricing

This major US bank wanted AI coding for their 400-person dev team. Regulatory requirements meant zero code could leave their network - no cloud AI, no external APIs, nothing.

Tabnine was the only vendor offering true air-gapped deployment. Sales pitch: $39/user/month, same as everyone else.

First-year cost ended up around $1.2M or so. That's like $250/month per developer for what's basically a worse version of the autocomplete everyone else gets for $20.

Why Air-Gapped Costs 10x More:

The GPU Infrastructure Nobody Mentions

Tabnine's air-gapped deployment needs dedicated GPU servers to run models locally. Sales never mentioned this fucking detail. Bank ended up buying something like $180K worth of NVIDIA A100s just to make the tool work.

Security Theater Costs

- Maybe $120K for penetration testing of their internal Tabnine deployment

- Around $80K annually for SOC 2 audits of infrastructure they control anyway

- Internal security team spent months documenting AI-specific risk controls

- Compliance demanded separate network segments, probably another $60K in hardware

The Support Desert

Air-gapped means basically no vendor support. Model updates arrive monthly via encrypted USB drives (I shit you not - like we're back in 1995). When stuff breaks, the bank's team has to figure it out themselves. They hired 2 additional platform engineers at around $180K each just to babysit Tabnine.
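
Adding up just the line items named in this section gets you most of the way to that $1.2M. This is a rough tally, assuming list-price licenses for all 400 seats and a full year of salary for both platform hires (both are assumptions). The months of internal security team time aren't priced here because no figure is given for them.

```python
# First-year line items quoted in this section, in USD.
# Assumptions: list-price licenses for all 400 seats, and a full year of
# salary for both platform hires.
line_items = {
    "licenses (400 seats x $39 x 12)": 400 * 39 * 12,
    "NVIDIA A100 servers": 180_000,
    "penetration testing": 120_000,
    "SOC 2 audits": 80_000,
    "network segmentation hardware": 60_000,
    "platform engineers (2 x $180K)": 2 * 180_000,
}

total = sum(line_items.values())
per_dev_month = total / 400 / 12

for name, cost in line_items.items():
    print(f"{name:<36} ${cost:>9,}")
print(f"{'itemized total':<36} ${total:>9,}")
print(f"per developer per month: ${per_dev_month:,.0f}")
```

Licenses come to less than a fifth of the itemized total. Everything else is stuff the sales deck never mentioned.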

Model Quality Reality Check

Tabnine's air-gapped models are garbage compared to GPT-4 or Claude. Developers complained constantly about useless suggestions. But compliance doesn't care about developer satisfaction - only about keeping proprietary code internal.

How Tool Sprawl Destroyed a Startup's Budget

This startup with maybe 50 engineers started simple: GitHub Copilot Business at $19/user/month. Around $950/month total. Seemed reasonable.

About 18 months later they had grown to like 180 engineers and were burning $8K+ per month across 6 different AI tools. Nobody could really explain how it happened.

The Slow-Motion Budget Explosion:

Frontend Team Switched to Cursor

GitHub Copilot sucked for React/TypeScript work, so the frontend team (12 devs) switched to Cursor Pro at $20/month each. Fine, whatever.

Then Cursor launched their Team plan with better context sharing. Frontend team upgraded to $40/month per user. Still basically the same price as Copilot Enterprise at $39, right?

Wrong. Cursor's credit system meant heavy users were burning $200-400/month in additional charges. Nobody warned them about this. Fucking sneaky if you ask me.
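
Here's roughly what that does to a 12-seat bill. This is a sketch only: the $200-400 overage range is from above, but the count of heavy users is a made-up number for illustration, and the function name is mine.

```python
def monthly_bill_range(seats, seat_price, heavy_users, overage_low=200, overage_high=400):
    """Low and high monthly totals: base seats plus per-heavy-user overage."""
    base = seats * seat_price
    return base + heavy_users * overage_low, base + heavy_users * overage_high


# 12 frontend seats at $40; 4 heavy users is a hypothetical count.
low, high = monthly_bill_range(seats=12, seat_price=40, heavy_users=4)
print(f"${low:,} to ${high:,} per month instead of ${12 * 40:,}")
```

Even with only a third of the team counted as heavy users, the overage dwarfs the seat price.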

Backend Team Tool Shopping

Backend engineers hated Cursor but GitHub Copilot didn't understand their Go microservices architecture. Half switched to Claude Pro ($20/month), others tried Amazon Q Developer ($19/month).

Now they had engineers using 4 different tools with completely different interfaces and capabilities.

The Management Tax

- IT spent 2 days/month reconciling licenses across multiple vendors

- Finance couldn't track which tool was generating actual value

- Security team demanded compliance reviews for each new tool ($25K external audit)

- Department VPs kept requesting usage analytics nobody could provide

Developer Productivity Nightmare

Engineers switching between projects had to learn different tools. A senior dev moving from a Cursor project to a Copilot project lost 2-3 hours relearning the interface and hotkeys.

Code quality became inconsistent because different AI models suggested completely different patterns for the same problems.

Government Contractors: Where Good Budgets Go to Die

Defense contractor with around 120 developers needed AI coding tools. GitHub Copilot Enterprise has FedRAMP authorization, so deployment should be straightforward, right?

Something like 23 months and close to $900K later, they had a working system. For 120 developers. That's about $7,500 per developer, or roughly $325/month per developer across those 23 months, for GitHub fucking Copilot. I've seen smaller countries with lower defense budgets.

Why Government Procurement is Hell:

FedRAMP Authorization Doesn't Mean Shit

GitHub has FedRAMP authorization, but that doesn't authorize YOUR specific use case. Every deployment needs separate approval through the ATO (Authority to Operate) process.

This took 9 months. Nine. Months. For autocomplete software.

Security Clearance Nightmare

Every developer using the tool needed security clearance verification. 34 of their contractors failed clearance reviews and couldn't use the tool they'd already paid for.

The Documentation Black Hole

Government contracts require NIST 800-53 compliance documentation. They hired 3 technical writers at around $140K each for like 8 months just to document their AI tool deployment.

The final documentation package was thousands of pages. For a coding assistant.

Infrastructure Overkill

- Dedicated government cloud instance (costs 3x normal pricing)

- Separate network segments with government-approved monitoring

- Air-gapped backup systems that nobody will ever use

- Specialized logging that captures every AI interaction for audit trails

Operational Clusterfuck

System administration requires security-cleared personnel. They hired 2 cleared sysadmins at $180K each just to manage the Copilot deployment.

Every software update requires formal change management approval. GitHub pushes Copilot updates weekly. Each update requires a 2-week approval process.
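
Do the math on that cadence and it never catches up. This is a minimal sketch, assuming approvals are processed strictly one at a time and every weekly update goes through the full process. Both are my simplifications, and the function name is hypothetical.

```python
def pending_updates(weeks_elapsed: int, release_interval: int = 1, approval_time: int = 2) -> int:
    """Updates released but not yet approved, with approvals handled one at a time."""
    released = weeks_elapsed // release_interval
    approved = min(released, weeks_elapsed // approval_time)
    return released - approved


for weeks in (4, 12, 52):
    print(f"after {weeks} weeks: {pending_updates(weeks)} updates waiting")
```

Under those assumptions the backlog grows by one update every two weeks, so in practice you end up batching or skipping versions.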

Healthcare: Where HIPAA Compliance Kills Common Sense

Large hospital system with around 200 developers wanted GitHub Copilot Enterprise. Microsoft has a legit Business Associate Agreement for HIPAA compliance, so they figured they were covered.

Initial budget: around $94K annually for licenses.

Actual first-year cost: something like $340K.

Why Healthcare IT is Expensive Paranoia:

Legal Review Hell

Their legal team spent 6 months reviewing Microsoft's BAA. Every single clause required healthcare-specific modifications. Legal fees: $85K.

The final contract took 14 months to negotiate. For autocomplete software.

PHI Panic Mode

Compliance team discovered that AI models sometimes suggest variable names like patient_ssn or diagnosis_code. Even though this isn't actual patient data, they treated every AI suggestion as potential PHI exposure.

This led to mandatory code reviews for ALL AI-generated code. They hired 3 additional senior engineers at $145K each just to review AI suggestions.

Technical Overkill

- Data loss prevention system configured to block AI tools if they detect healthcare-related terms ($60K setup)

- Enhanced logging that captures every single AI interaction ($25K annually)

- Separate development environments isolated from production networks ($80K infrastructure)

- Role-based access controls that required 2-factor auth for AI tool access
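
The DLP rule and the variable-name panic above come down to the same thing: matching on terms, not on data. Here's a minimal sketch of why that over-blocks. The term list and function name are hypothetical, and real DLP products use their own dictionaries and detection logic.

```python
import re

# Hypothetical term list for illustration only.
PHI_TERMS = ("patient", "ssn", "diagnosis", "mrn")
PATTERN = re.compile("|".join(PHI_TERMS), re.IGNORECASE)


def flags_as_phi(snippet: str) -> bool:
    """True if a naive term match would block this snippet."""
    return PATTERN.search(snippet) is not None


# Identifier names only: there is no patient data anywhere in this string.
suggestion = "def lookup(patient_ssn: str, diagnosis_code: str) -> dict: ..."

print(flags_as_phi(suggestion))
print(flags_as_phi("total = a + b"))
```

The first snippet gets flagged even though it contains nothing but field names, which is exactly the kind of code a healthcare codebase is full of.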

The Compliance Tax

Dedicated HIPAA compliance officer spent 40% of her time on AI tool oversight. Annual audits now include AI-specific compliance reviews that add another $45K.

The Ironic Result:

After 14 months and $340K in deployment costs, developers barely used the tool. The code review requirements made AI suggestions slower than just writing code manually.

Most developers disabled Copilot and went back to Stack Overflow.

What I've Learned from These Clusterfucks

Every enterprise AI coding deployment I've worked on has gone massively over budget. Not by 20% or 30% - more like 3-5x the original estimate.

The pattern is always the same:

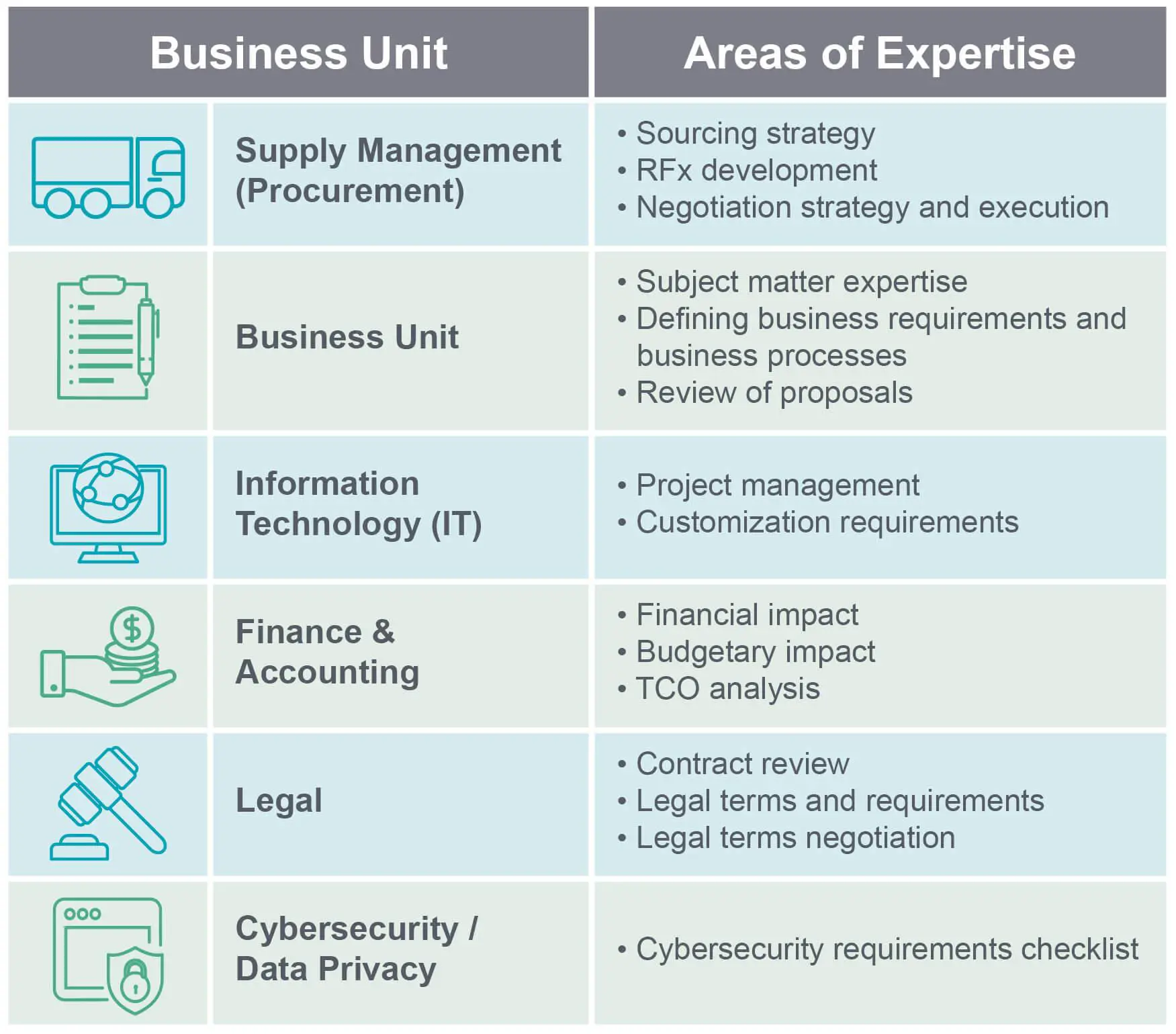

- Some VP sees a demo and gets excited

- Procurement quotes simple per-seat math

- Legal, security, and compliance teams find out about it

- Costs explode when everyone realizes enterprise software isn't just "adding users"

The Real Cost Multipliers:

- Regular companies: 2-3x advertised pricing

- Global companies: 3-4x (data residency is expensive)

- Banks: 4-6x (air-gapped deployments cost a fortune)

- Healthcare: 3-5x (legal reviews take forever)

- Government: 5-10x (bureaucracy multiplies everything)
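
If you want a number to put in front of finance, the multipliers above turn into a quick range estimate. This is a rough sketch: the category keys and function name are mine, and the multipliers are rules of thumb from the deployments described here, not benchmarks.

```python
# (low, high) multipliers on advertised annual pricing, taken from the list above.
MULTIPLIERS = {
    "regular": (2, 3),
    "global": (3, 4),
    "bank": (4, 6),
    "healthcare": (3, 5),
    "government": (5, 10),
}


def realistic_budget(seats: int, price_per_seat_month: int, category: str) -> tuple:
    """Return (low, high) first-year budget estimates in dollars."""
    low, high = MULTIPLIERS[category]
    list_price = seats * price_per_seat_month * 12
    return list_price * low, list_price * high


low, high = realistic_budget(200, 39, "healthcare")
print(f"budget ${low:,} to ${high:,}, not ${200 * 39 * 12:,}")
```

For 200 seats at $39 in healthcare that gives $280,800 to $468,000, which brackets the hospital's roughly $340K.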

The Depressing Reality:

Most "successful" enterprise AI tool deployments cost more than they save. Companies spend $500K deploying tools to make developers 15% more productive, then wonder why their engineering budgets are exploding.

The companies that actually get value from AI coding tools are the ones that:

- Budget 3-4x the advertised pricing from day one

- Plan for 18-24 month deployment timelines

- Accept that compliance requirements will dominate costs

- Choose tools based on what compliance approves, not what developers prefer

If your company thinks deploying AI coding tools is simple, you're about to learn an expensive lesson about enterprise software procurement.