Here's why this matters: EEG signals suck. They're noisy, weak, and picking up your brain's commands through your skull is like trying to hear a whisper through a concrete wall. That's why everyone assumed you needed to drill holes and stick electrodes directly into brain tissue like Neuralink does.

UCLA's team figured out that if you throw enough AI at the problem, you can make shitty EEG signals work almost as well as implanted electrodes. The AI co-pilot system watches what you're trying to do and fills in the gaps where the brain signals are unclear.

Here's What Actually Happened

They tested it on four participants - three regular folks and one guy paralyzed from the waist down. Two tasks: move a cursor on screen, and control a robotic arm to grab stuff.

The paralyzed guy couldn't finish the robot arm task at all without AI. With AI? It took him forever, roughly six minutes going by their demo video. Still, going from completely unable to getting blocks moved around is pretty damn impressive.

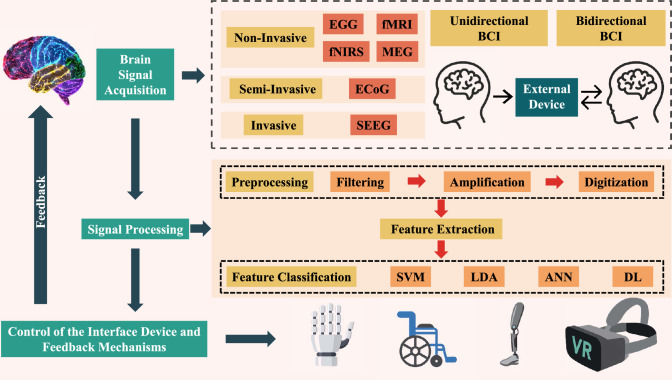

The system uses two types of AI working together: one that decodes what your brain is trying to say from the EEG signals, and another that watches the environment through cameras and computer vision to work out what you're trying to accomplish.
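To make the two-AI idea concrete, here's a rough sketch of shared control. This is not UCLA's actual code or method. It assumes a decoder that spits out a noisy 2D velocity and a "co-pilot" that already knows where the candidate targets are (the part a vision system would supply). The function name `blend_velocity`, the `assist` weight, and the target-guessing rule are all made up for illustration.

```python
import math

def blend_velocity(decoded, cursor, targets, assist=0.5):
    """Mix a noisy decoded velocity with a pull toward the likeliest target.

    decoded: (vx, vy) from a hypothetical EEG decoder
    cursor:  (x, y) current cursor position
    targets: list of (x, y) candidate goals (assumed to come from vision)
    assist:  0.0 = pure brain signal, 1.0 = pure co-pilot
    """
    speed = math.hypot(decoded[0], decoded[1])

    def alignment(target):
        # Cosine similarity between the decoded direction and the direction to the target
        dx, dy = target[0] - cursor[0], target[1] - cursor[1]
        dist = math.hypot(dx, dy)
        if dist == 0 or speed == 0:
            return 0.0
        return (dx * decoded[0] + dy * decoded[1]) / (dist * speed)

    # Guess the intended goal: the target the user seems to be steering toward
    goal = max(targets, key=alignment)
    dx, dy = goal[0] - cursor[0], goal[1] - cursor[1]
    dist = math.hypot(dx, dy)

    # Co-pilot suggestion: same speed as the user, aimed straight at the goal
    if dist == 0:
        copilot = (0.0, 0.0)  # already on the target, so hold still
    else:
        copilot = (speed * dx / dist, speed * dy / dist)

    # Weighted blend of what the user asked for and what the co-pilot suggests
    return tuple((1 - assist) * d + assist * c for d, c in zip(decoded, copilot))


if __name__ == "__main__":
    # The user is drifting mostly right with some upward noise; targets sit right and up
    print(blend_velocity((1.0, 0.2), (0.0, 0.0), [(10.0, 0.0), (0.0, 10.0)]))
```

With `assist=0` you get the raw decoded velocity back, and with `assist=1` the co-pilot takes over completely. A real system has to choose that balance carefully so the user still feels in control.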

Why This Isn't More Hype

Most brain-computer interface research is academic masturbation - cool in the lab, useless in reality. But UCLA's Jonathan Kao published this in Nature Machine Intelligence, which means the peer reviewers actually checked their work.

The real test isn't whether it works in perfect lab conditions. It's whether someone can use it at home without a team of PhD students adjusting it every five minutes. We don't know that yet, but the fact that one paralyzed person could operate a robot arm after minimal training suggests they're onto something real. Compare that to other BCI systems, which can take months of training.