Look, Supabase realtime runs on WebSockets. When they break, your chat app turns into a refresh-fest from 2005. I learned this the hard way when our product demo died in front of investors.

Here's What's Actually Going Wrong

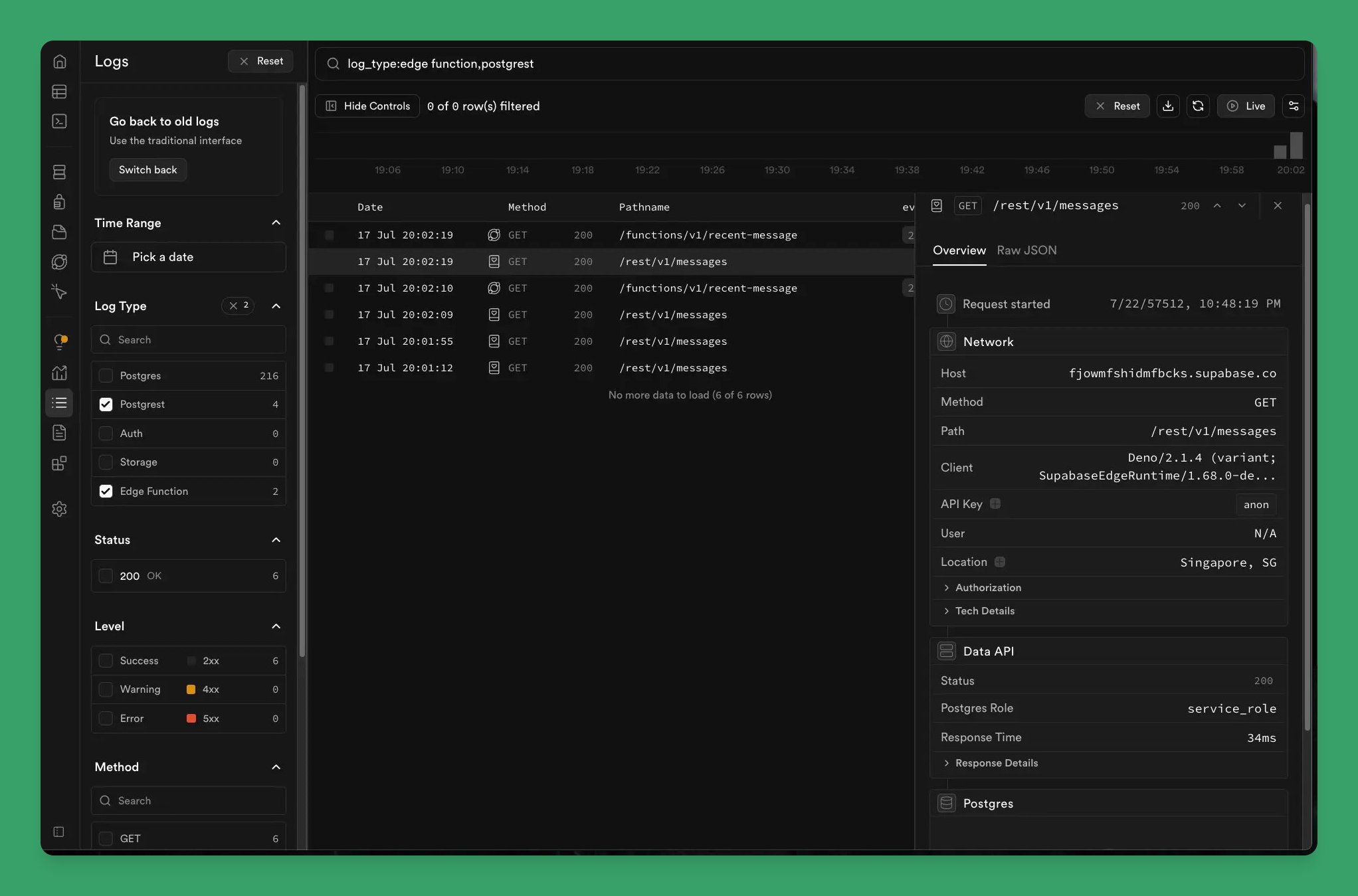

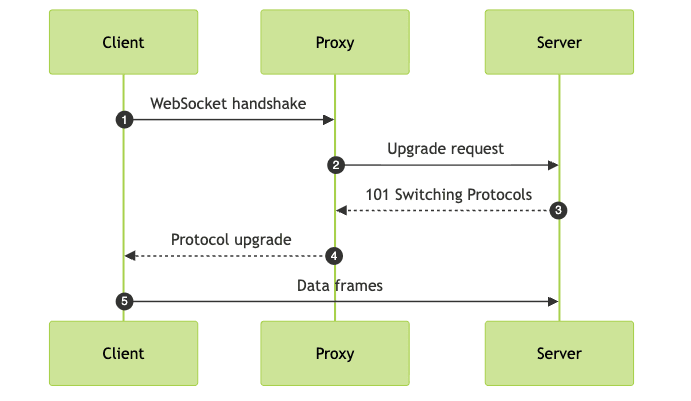

Your WebSocket connects to wss://[project-ref].supabase.co/realtime/v1/websocket, authenticates with a JWT, subscribes to channels, waits for database changes, and has to stay open the whole time. Simple enough, right?

Wrong. Every step can fail spectacularly:

Step 1 breaks because: Corporate firewalls hate WebSockets more than they hate productivity.

Step 2 breaks because: JWTs expire. Supabase doesn't tell you when this happens. You find out when users complain their chat stopped working.

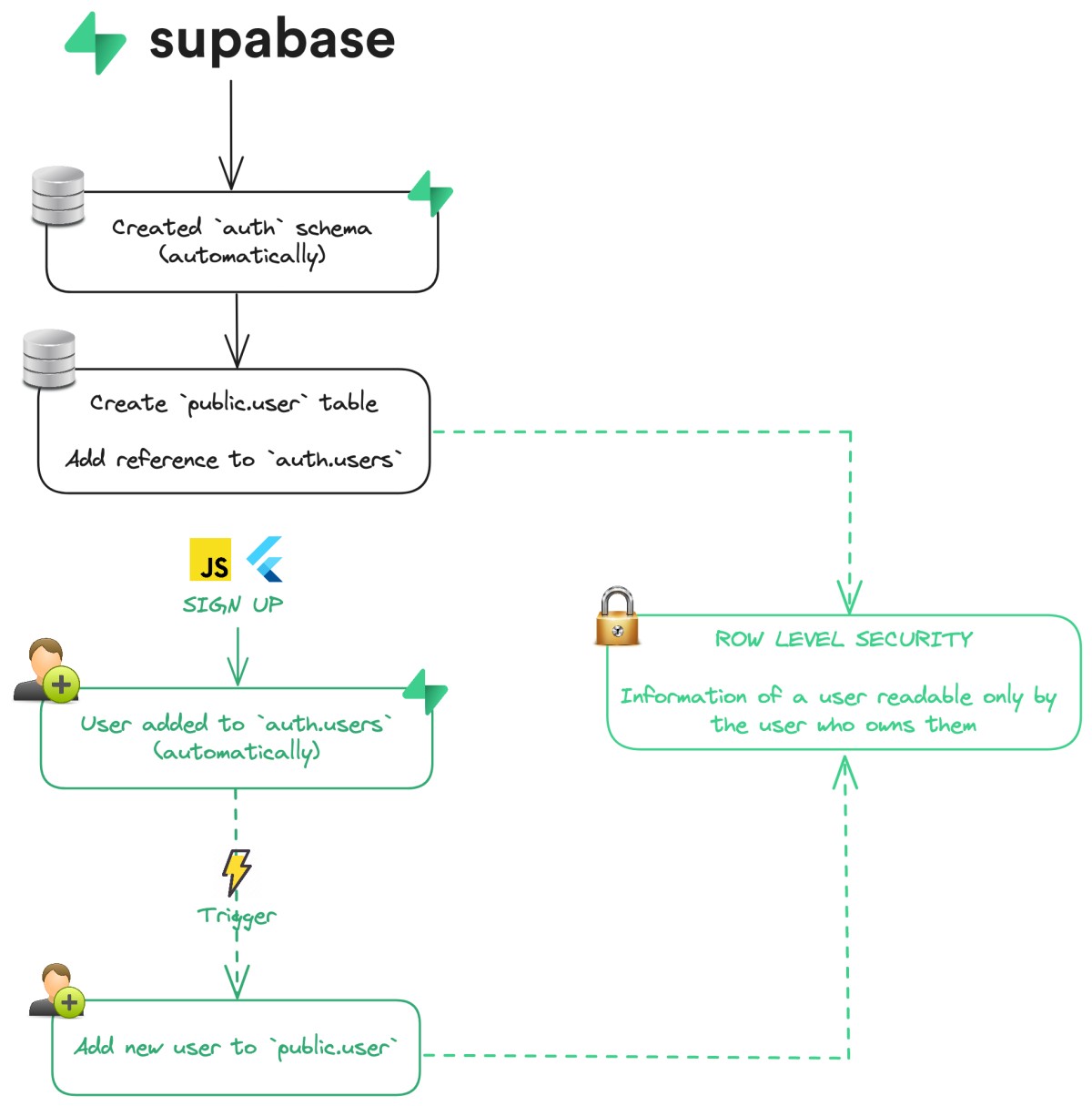

Step 3 breaks because: RLS policies that work fine for regular queries suddenly block realtime subscriptions. Why? Because Supabase's realtime server checks permissions differently than your app.

Step 4 breaks because: You hit connection pool limits and Supabase just says "unable to connect to database" like that's helpful.

Step 5 breaks because: Mobile OSes kill background connections faster than you can say "battery optimization".
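Step 2 is the one you can at least see coming. Here's a rough sketch, assuming the supabase-js v2 shapes `auth.onAuthStateChange` and `realtime.setAuth`: forward every fresh access token to the realtime socket, and check how long the current one has left. The `AuthLike`, `RealtimeLike`, and `SessionLike` interfaces and both function names are stand-ins invented for this example. Recent supabase-js releases may already do the forwarding for you, so treat this as an illustration of the mechanism, not required wiring.

```typescript
// Simplified stand-ins for the supabase-js session, auth, and realtime objects.
interface SessionLike {
  access_token: string;
  expires_at?: number; // unix time in seconds
}

interface AuthLike {
  onAuthStateChange(
    callback: (event: string, session: SessionLike | null) => void,
  ): unknown;
}

interface RealtimeLike {
  setAuth(token: string): unknown;
}

// Push every fresh access token to the realtime socket so open channels
// keep authenticating after the old JWT expires.
function forwardTokenToRealtime(auth: AuthLike, realtime: RealtimeLike): void {
  auth.onAuthStateChange((event, session) => {
    if ((event === "SIGNED_IN" || event === "TOKEN_REFRESHED") && session) {
      realtime.setAuth(session.access_token);
    }
  });
}

// Seconds left before the session's JWT expires, or null if unknown.
// Negative values mean the token is already expired.
function secondsUntilExpiry(
  session: SessionLike,
  nowMs: number = Date.now(),
): number | null {
  if (session.expires_at === undefined) return null;
  return session.expires_at - Math.floor(nowMs / 1000);
}
```

In a real app you would pass `supabase.auth` and `supabase.realtime` once at startup (possibly with a cast, since these interfaces are simplified), and log `secondsUntilExpiry` whenever a channel errors so an expired token shows up in your logs before it shows up in your support inbox.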

Common Failure Patterns You'll Encounter

Mobile Background Hell

Your app works perfectly. User locks their phone for 5 seconds. App comes back to a dead connection that refuses to reconnect.

iOS and Android kill your WebSocket connections to save battery. They don't ask permission. They don't send a goodbye message. Your connection just dies.

I spent 3 days debugging this before finding GitHub issue #1088 where someone else suffered through the same shit: "minimize the mobile app and lock the phone for 3 seconds. After reverting back to the app you should get two CHANNEL_ERROR subscription statuses."

Translation: Your app is fucked and you need to handle app lifecycle events properly.
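What "handle app lifecycle events properly" can look like, as a minimal sketch: when the app returns to the foreground, tear down whatever channels were left behind and subscribe again. Everything named here (`ForegroundSource`, `Resubscribable`, `resubscribeOnForeground`) is hypothetical. The source of "I'm back in the foreground" events is left abstract because it depends on the platform: `visibilitychange` on the web, `AppState` change events in React Native.

```typescript
// Registers a callback that fires when the app returns to the foreground.
// Returns a function that unregisters it.
type ForegroundSource = (onForeground: () => void) => () => void;

// Whatever owns your channels. Both methods are assumptions for this sketch.
interface Resubscribable {
  teardown(): Promise<void>; // remove the (probably dead) channels
  subscribe(): void; // create fresh channels
}

function resubscribeOnForeground(
  source: ForegroundSource,
  sub: Resubscribable,
): () => void {
  let busy = false;

  const rebuild = async (): Promise<void> => {
    try {
      await sub.teardown();
    } catch {
      // The old channel may already be gone; carry on and resubscribe.
    }
    sub.subscribe();
  };

  return source(() => {
    if (busy) return; // ignore bursts of foreground events
    busy = true;
    rebuild()
      .catch((err) => console.error("resubscribe failed", err))
      .finally(() => {
        busy = false;
      });
  });
}
```

The `busy` flag matters because a single unlock can fire more than one foreground event, and two overlapping rebuilds are a good way to end up with duplicate channels.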

Reconnection Death Spirals

Your app gets stuck in SUBSCRIBED → CLOSED → TIMED_OUT → SUBSCRIBED loops forever. Users watch the loading spinner while you hemorrhage 5-star reviews.

This happens because you're not cleaning up failed channels before creating new ones. The server thinks you're already connected while your client thinks it's connecting. Classic distributed systems nightmare.

One developer on GitHub put it best: "I had to wrap the channel handling because it does not reconnect the channels, I manually have to remove them and subscribe again."

The "proper" way is in the docs. This is what actually works: nuke the old channel completely before creating a new one.
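Here's roughly what "nuke it first" looks like. This is a sketch under assumptions, not the official pattern: `ClientLike` and `ChannelLike` are cut-down stand-ins for the supabase-js client and channel (which do expose `channel()`, `removeChannel()`, and `subscribe()` with these status strings), while `keepSubscribed`, `backoffMs`, and the backoff numbers are made up for this example.

```typescript
type SubscribeStatus = "SUBSCRIBED" | "TIMED_OUT" | "CLOSED" | "CHANNEL_ERROR";

interface ChannelLike {
  subscribe(callback: (status: SubscribeStatus) => void): ChannelLike;
}

interface ClientLike {
  channel(name: string): ChannelLike;
  removeChannel(channel: ChannelLike): Promise<unknown>;
}

// Exponential backoff capped at maxMs: 1s, 2s, 4s, ... (arbitrary defaults).
function backoffMs(attempt: number, baseMs = 1000, maxMs = 30000): number {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Keeps one channel alive. On any failure status it removes the old channel
// completely, waits, then creates a brand new one. Returns a stop function.
function keepSubscribed(
  client: ClientLike,
  name: string,
  configure: (channel: ChannelLike) => void = () => {},
  schedule: (fn: () => void, ms: number) => unknown = (fn, ms) => setTimeout(fn, ms),
): () => Promise<void> {
  let current: ChannelLike | null = null;
  let attempt = 0;
  let stopped = false;

  const connect = (): void => {
    if (stopped) return;
    const channel = client.channel(name);
    current = channel;
    configure(channel); // attach your .on(...) handlers here
    channel.subscribe((status) => {
      // Ignore callbacks from channels we already gave up on.
      if (stopped || channel !== current) return;
      if (status === "SUBSCRIBED") {
        attempt = 0;
        return;
      }
      // TIMED_OUT, CLOSED, or CHANNEL_ERROR: remove first, reconnect after.
      current = null;
      const delay = backoffMs(attempt);
      attempt += 1;
      client
        .removeChannel(channel)
        .catch(() => undefined) // a failed removal should not block the retry
        .then(() => schedule(connect, delay));
    });
  };

  connect();

  return async () => {
    stopped = true;
    const channel = current;
    current = null;
    if (channel) await client.removeChannel(channel);
  };
}
```

Two details do the real work. `current` is cleared before `removeChannel` runs, so the `CLOSED` status that the removal itself can trigger is ignored instead of starting a second retry. And the new channel is only created after the removal settles, so there is never a moment where the old and new channel both exist under the same name.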

Connection Pool Exhaustion

"Unable to connect to the project database" is Supabase's way of saying "you're fucked, figure it out yourself."

Free tier gets 200 connections, Pro gets 500. Sounds like a lot until your Next.js app opens 50 connections per API route. I learned this during our Black Friday launch when our chat went down and 500 angry customers couldn't complain to each other.

Connection pooling should be default. Instead it's buried in docs nobody reads.
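Pooling itself is configured on the Supabase side, but there is a related app-side habit that helps: stop every API route from building its own client. A minimal sketch, with everything in it hypothetical (`once`, `createDbClient`, and `DbClient` stand in for whatever client your app really constructs):

```typescript
// Runs an expensive factory once, then hands back the same instance
// on every later call.
function once<T>(factory: () => T): () => T {
  let cached: { value: T } | undefined;
  return () => {
    if (!cached) cached = { value: factory() };
    return cached.value;
  };
}

// Stand-in for a real database or Supabase client. `openConnections` exists
// only so the example can show how many clients were built.
let openConnections = 0;

interface DbClient {
  id: number;
}

function createDbClient(): DbClient {
  openConnections += 1;
  return { id: openConnections };
}

// Module scope: every API route that imports this shares one client.
const getDb = once(createDbClient);

// Two "routes" asking for a client get the same instance.
const fromRouteA = getDb();
const fromRouteB = getDb();
console.log(fromRouteA === fromRouteB, openConnections); // prints: true 1
```

This only deduplicates within one process. On serverless hosts each instance is its own process, so it lowers the connection count per instance but does not replace a real pooler.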

RLS Policies That Secretly Hate Realtime

Your subscription says SUBSCRIBED. No errors. No data. Just... nothing.

Here's the thing nobody tells you: RLS policies that work fine for regular queries can completely block realtime subscriptions. The realtime server checks permissions differently than your app.

I spent 4 hours debugging this before finding out the realtime service needs SELECT permissions that my "secure" policy was blocking. My policy worked for everything else. Just not the one thing I actually needed.

Version-Specific Fuckups

supabase-js v2.50.1 is cursed. It has a critical bug that causes "unable to connect to database" errors. Pin your versions to v2.50.0 or suffer random production failures. GitHub discussion #36641 has the gory details.

I've been burned by auto-updating dependencies too many times. Now I check the GitHub issues before upgrading anything Supabase-related.

Network Bullshit

Corporate firewalls block WebSocket connections because security teams think it's 1995. Mobile networks drop connections when switching between cell towers. VPNs interfere with WebSocket upgrades. Browsers implement WebSockets differently and some are shit.

Your beautiful local setup works perfectly. Production is where dreams go to die and WebSocket connections go to time out.

The Problem: Your RLS policies are blocking the realtime service. This is the most common fuck-up.
