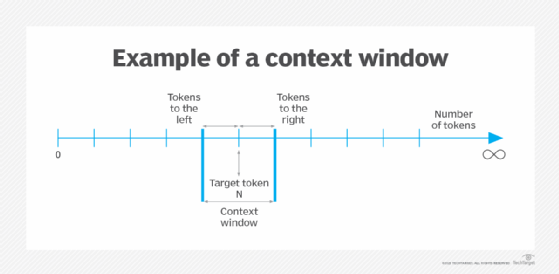

Context window exhaustion doesn't crash like a segfault. It's way worse - your AI just gets progressively dumber and you keep thinking it's helping.

I've seen this kill entire debugging sessions. Your AI starts great, then 30 minutes later it's suggesting try-catch blocks for everything and asking you to repeat the error message you pasted 10 minutes ago.

The Depressing Truth About AI "Productivity"

Here's the fucked up part about AI coding: you feel productive while actually getting slower. I've seen this happen to entire teams - developers feel like they're flying while actually taking longer to ship stuff.

I've lived this shit firsthand. Spent 3 hours debugging auth middleware that looked perfect because my AI forgot we use passport.js. The code ran clean and the tests passed, but login was completely fucked: I kept getting "req.user is undefined" even after a supposedly successful authentication. Fast responses from your AI trigger that dopamine hit that screams "I'm being productive!" even when you're implementing solutions for the wrong tech stack.

The warning signs (that you'll probably miss):

- Suggestions become useless ("use proper error handling" - thanks, genius)

- AI asks for shit you already told it

- Code suggestions ignore your actual project structure

- Generated code compiles but breaks everything when you integrate it

- Debugging help becomes "have you tried console.log?"

Why Your Brain Falls for This Crap

Your brain is terrible at noticing gradual quality decline. We adapt to bad AI suggestions the same way we adapt to a dimming screen - the change is so gradual you don't notice until someone points it out.

It scales up to teams, too. Developers trust generated code without proper review, especially when fast responses make them feel productive. Your brain sees quick answers and thinks "helpful AI" even when the suggestions are garbage. The pattern is always the same: you feel more productive while actually being less effective.

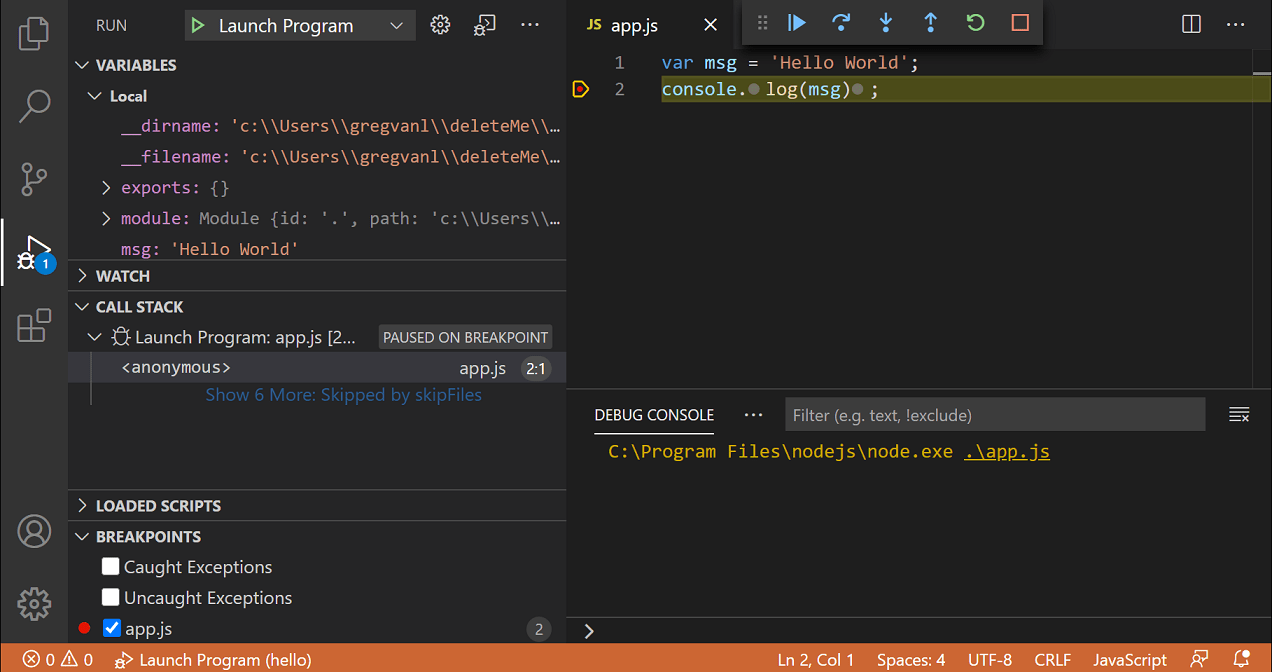

When Your AI Breaks Production (True Story)

Had this auth bug where Claude suggested middleware that let users access stuff they shouldn't. Took us way too long to figure out why random people could see admin panels. Turns out the generated middleware broke our permission checks: it was checking req.user.role === 'admin' when our actual check is req.user.permissions.includes('admin').
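For illustration, here is a minimal sketch of a guard built around the permissions check mentioned above. It is not the middleware from that project: requirePermission, createFakeResponse, and checkAccess are hypothetical names, and the sketch assumes req.user.permissions is an array of strings. The fake response object is only there so the example runs without Express installed.

```javascript
// Sketch only: requirePermission is a hypothetical helper, not the project's real middleware.
// Assumption: req.user.permissions is an array of permission strings.
function requirePermission(permission) {
  return function permissionGuard(req, res, next) {
    const user = req.user;
    const permissions = user && Array.isArray(user.permissions) ? user.permissions : [];

    // Fail closed: a missing user or a missing permissions array means no access.
    if (!permissions.includes(permission)) {
      return res.status(403).json({ error: 'Forbidden' });
    }
    return next();
  };
}

// Minimal stand-in for an Express response object, so the sketch runs without Express.
function createFakeResponse() {
  return {
    statusCode: 200,
    body: null,
    status(code) { this.statusCode = code; return this; },
    json(payload) { this.body = payload; return this; },
  };
}

// Runs the guard against a given user and reports what happened.
function checkAccess(user, permission) {
  const res = createFakeResponse();
  let allowed = false;
  requirePermission(permission)({ user }, res, () => { allowed = true; });
  return { allowed, statusCode: res.statusCode };
}

console.log(checkAccess({ permissions: ['admin'] }, 'admin'));
console.log(checkAccess({ role: 'admin' }, 'admin'));
```

The second call is the interesting one: a user object that only carries a role field gets a 403, because the guard looks at permissions and nothing else. Writing the check once in a helper like this also gives you something concrete to paste back into the conversation when the AI starts inventing its own auth model.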

The AI wasn't trying to screw us over - it just couldn't remember our auth setup from earlier in the conversation. Started treating our Express app like some generic tutorial project instead of the actual enterprise clusterfuck we'd been discussing. You don't need research to tell you that forgetting your system architecture is dangerous as hell.

How Context Loss Screws You Over

The "Almost Right" Trap: Your AI writes perfect-looking code that compiles clean and passes unit tests, then explodes spectacularly when you actually run it. Without context about how your microservices communicate, the AI optimizes for the isolated function you showed it, not your actual distributed clusterfuck.

Lost another 3 hours to "TypeError: Cannot read property 'user' of undefined" because my AI forgot our API responses get wrapped in {success: true, data: {...}}. The function looked right but kept failing because it read response.user instead of response.data.user.
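A small sketch of the wrapped-response shape, assuming the {success: true, data: {...}} envelope described above. unwrapResponse is a hypothetical helper and the fields inside data are made up for the example.

```javascript
// Hypothetical helper: unwraps the { success: true, data: {...} } envelope.
// It throws early so a wrong shape fails loudly at the boundary instead of deep in the caller.
function unwrapResponse(response) {
  if (!response || response.success !== true || response.data == null) {
    throw new Error('Unexpected API response shape: expected { success: true, data: {...} }');
  }
  return response.data;
}

// Illustrative payload; the contents of data are invented for this sketch.
const response = { success: true, data: { user: { id: 42, name: 'Ada' } } };

const { user } = unwrapResponse(response);
console.log(user.name);     // the user lives under response.data
console.log(response.user); // undefined: there is no user at the top level
```

Funnel every API call through one unwrap function and the envelope lives in a single place, which makes it a lot harder for generated code to quietly skip the data layer.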

The Thing Forgets Your Decisions: Your AI suggests patterns you deprecated months ago. Doesn't remember you ditched Redux, keeps suggesting Redux shit anyway. This problem gets worse with bigger codebases.

The Dependency Bullshit: Your AI can't see your package.json, so it suggests libraries you removed ages ago. You waste half a day trying to integrate something that's not even installed. Without context, AI tools become productivity drains.
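One cheap guard is to check suggested packages against package.json before writing any integration code. The sketch below is just that, a sketch: findMissingDependencies and loadManifest are hypothetical helpers, the example manifest is invented, and it only looks at the dependencies and devDependencies fields.

```javascript
const fs = require('node:fs');

// Hypothetical helper: returns the names that are NOT declared in the manifest.
// Only dependencies and devDependencies are considered in this sketch.
function findMissingDependencies(manifest, packageNames) {
  const declared = new Set([
    ...Object.keys(manifest.dependencies || {}),
    ...Object.keys(manifest.devDependencies || {}),
  ]);
  return packageNames.filter((name) => !declared.has(name));
}

// Hypothetical helper: reads and parses a package.json from disk.
function loadManifest(path = 'package.json') {
  return JSON.parse(fs.readFileSync(path, 'utf8'));
}

// Invented manifest so the example runs without touching the filesystem.
const exampleManifest = {
  dependencies: { express: '^4.18.2', passport: '^0.7.0' },
  devDependencies: { jest: '^29.7.0' },
};

// Packages an AI suggestion wants to import.
const suggested = ['express', 'redux', 'jest'];
console.log(findMissingDependencies(exampleManifest, suggested)); // [ 'redux' ]
```

In a real project you would call findMissingDependencies(loadManifest(), suggested) from the repo root. Pasting the dependency list into the conversation up front attacks the same problem from the other side.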

The worst part: when your AI gets stupid, it feels like you're the problem. You think your requirements suck or your project is too complex. Nope - your AI just forgot everything about your codebase.