Ever had your fraud system block your CEO's corporate card while letting obvious bot accounts waltz right through? Yeah, that's why Sift exists. Most fraud detection is still stuck in 2015 with rule-based systems that treat every transaction from Ohio like it's suspicious.

The Problem with Rule-Based Fraud Detection

Traditional fraud systems work like this: IF purchase > $100 AND new_zipcode = true THEN flag_for_review. Brilliant, right? Your legitimate customers get blocked, your fraud team drowns in false positives, and the actual fraudsters figure out your rules in about a week.
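To make that concrete, here's a minimal Python sketch of the rule above. The `Transaction` class, its field names, and the two sample customers are made up for illustration; no real system is this simple, but the failure mode is the same.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    # Hypothetical fields, named after the rule in the text.
    amount_usd: float
    new_zipcode: bool


def flag_for_review(tx: Transaction) -> bool:
    """Static rule: flag any purchase over $100 from a ZIP code we haven't seen."""
    return tx.amount_usd > 100 and tx.new_zipcode


# A long-time customer buying a gift while travelling trips the rule...
traveller = Transaction(amount_usd=250.0, new_zipcode=True)
# ...while a card tester making $1 charges stays under the threshold.
card_tester = Transaction(amount_usd=1.0, new_zipcode=True)

print(flag_for_review(traveller))
print(flag_for_review(card_tester))
```

The rule only sees two fields, so it can't tell a traveller from a thief, and anyone who learns the threshold can stay under it.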

I spent 6 months at a previous company trying to tune these rules. Every time we fixed one false positive pattern, we'd break something else. Black Friday was a nightmare - half our legitimate customers got blocked while credit card testers ran wild.

How Sift Actually Works (The Technical Reality)

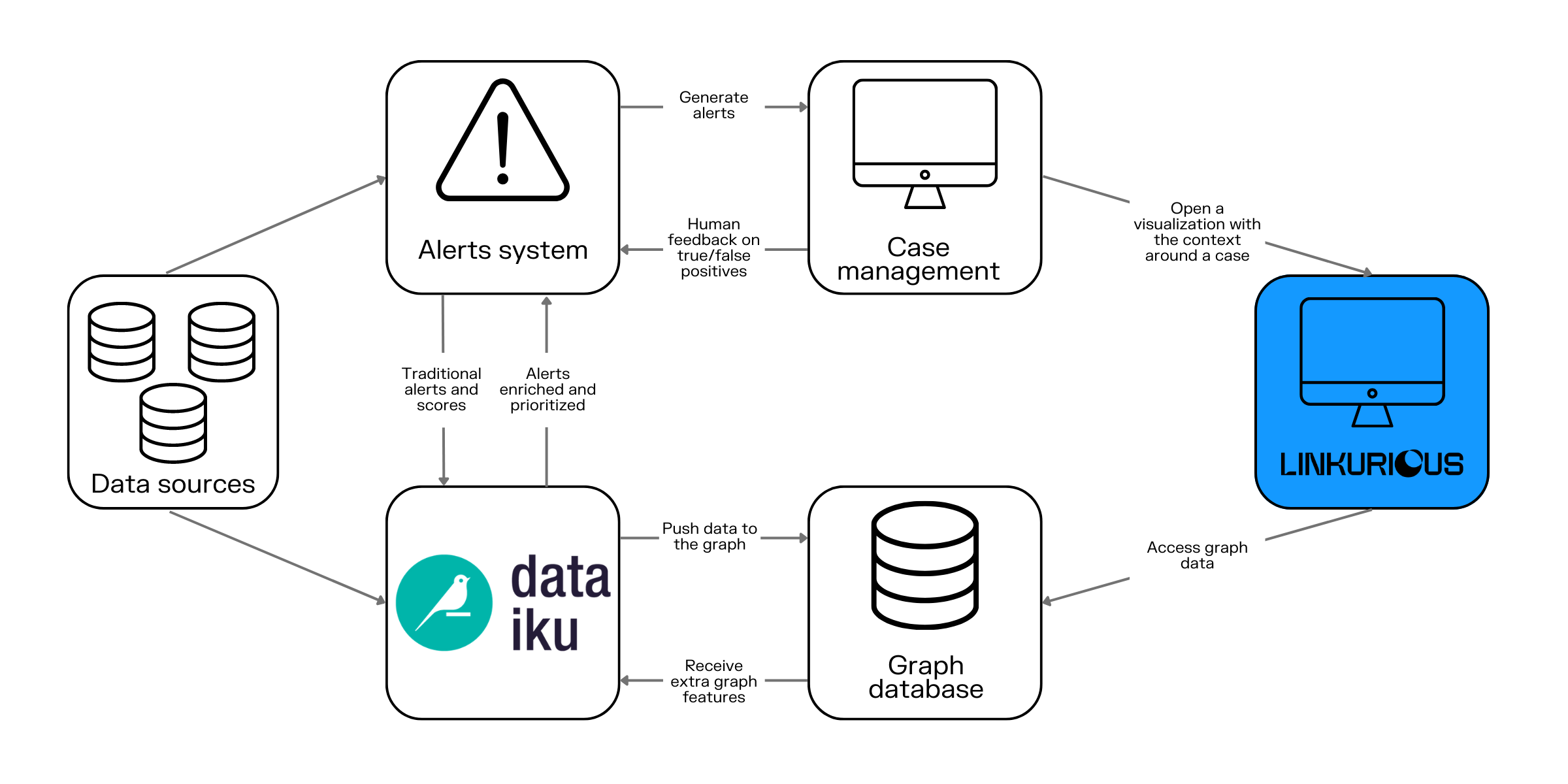

Machine learning fraud detection typically follows this workflow: data collection → feature engineering → model training → real-time scoring → decision automation.

After getting burned by rule-based systems, I tried Sift. They use machine learning trained on data from a shitload of sites - they claim some huge number but honestly, who knows the exact count. Instead of hardcoded rules, they build behavioral profiles. In my experience, the system actually learned that our power user who always buys from different countries isn't fraud, but 50 accounts created with sequential email addresses probably are.

Their global network processes some ridiculous number of events - they claim over a trillion, but that sounds like marketing bullshit. That said, the network effect is real. When a fraudster hits one site, the network learns and protects others. It's like crowdsourced fraud intelligence.

From what I can tell, their ML looks at:

- Device fingerprints (harder to fake than you'd think)

- Behavioral patterns (how fast they type, mouse movements - creepy but effective)

- Network analysis (VPN detection, suspicious IP ranges)

- Account relationships (are these "different" accounts using the same fucking device?)
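The account-relationship signal is the easiest one to picture in code. This is a toy Python sketch of the idea, not how Sift does it internally (I have no visibility into that): the `accounts_sharing_devices` function, the `(account_id, device_id)` input format, and the threshold of 3 are all assumptions for illustration.

```python
from collections import defaultdict


def accounts_sharing_devices(logins, min_accounts=3):
    """Group accounts by device and keep devices used by many distinct accounts.

    `logins` is an iterable of (account_id, device_id) pairs. The result maps
    each suspicious device ID to the sorted list of accounts seen on it.
    """
    by_device = defaultdict(set)
    for account_id, device_id in logins:
        by_device[device_id].add(account_id)
    return {
        device: sorted(accounts)
        for device, accounts in by_device.items()
        if len(accounts) >= min_accounts
    }


logins = [
    ("user1@example.com", "device-A"),
    ("user2@example.com", "device-A"),
    ("user3@example.com", "device-A"),
    ("alice@example.com", "device-B"),
    ("alice@example.com", "device-C"),  # one person, two devices: fine
]
print(accounts_sharing_devices(logins))
```

A real system would weigh this against the other signals, since shared devices also show up in households and offices.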

What Sift Actually Covers

Sift's platform integrates multiple fraud detection modules: payment fraud, account takeover, content abuse, and chargeback management - all feeding into a unified risk scoring system.

Unlike single-purpose tools, Sift handles multiple fraud types:

Payment Fraud: The obvious one - stolen cards, chargebacks, fake transactions. In my experience, it works better than most because it correlates payment data with account behavior.

Account Takeover: Detects when someone's account gets hijacked. Looks for login anomalies, device changes, behavioral shifts. Actually useful, unlike most ATO tools that just check if the IP changed (looking at you, legacy security tools).

Content Integrity: Catches fake reviews, spam, bot content. This one surprised me - it actually helped us clean up our user-generated content problem when nothing else worked.

Dispute Management: Automates chargeback responses. Saves me hours of manual work fighting disputes.

Real-World Implementation Reality

Here's what nobody tells you: Sift works, but implementation isn't trivial. What I scoped as a 2-week project took me 8 weeks because I kept discovering new events I needed to track. Their API is decent but assumes you understand their event taxonomy - which I definitely didn't.
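For a feel of what "event taxonomy" means, here's a rough Python sketch that builds an order event payload. The `$`-prefixed reserved field names and the amount-in-micros convention are my recollection of Sift's public Events API, so treat them as assumptions and check the current docs before relying on them. The `build_create_order_event` helper is hypothetical, and the sketch only builds the JSON body; it doesn't send anything.

```python
import json


def build_create_order_event(api_key, user_id, session_id, amount_usd, currency="USD"):
    """Build the JSON body for an order event (field names are assumptions)."""
    return {
        "$type": "$create_order",
        "$api_key": api_key,
        "$user_id": user_id,
        "$session_id": session_id,
        # Assumed convention: amounts are integers in micros (1 USD = 1,000,000).
        "$amount": round(amount_usd * 1_000_000),
        "$currency_code": currency,
    }


event = build_create_order_event(
    api_key="YOUR_API_KEY",      # placeholder, not a real key
    user_id="user-123",
    session_id="session-abc",
    amount_usd=49.99,
)
print(json.dumps(event, indent=2))
```

Multiply this by every login, signup, cart change, and payment attempt in your app and you can see where the extra six weeks went.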

The Score API has quirks - like Error 54 when you call it immediately after sending user events. Learned this the hard way during a production deployment when everything started failing. You need to wait 4-800ms or build retry logic. Took me 3 hours of debugging to find this buried in their support docs.
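The retry logic can be as simple as the sketch below. It assumes you already have some function that calls the Score API and returns the parsed response as a dict with a `status` field; `fetch_score`, `score_with_retry`, and the delay values are hypothetical names and numbers, not anything from Sift's SDK.

```python
import time

NO_SCORABLE_EVENTS = 54  # the error code described above


def score_with_retry(fetch_score, user_id, attempts=4, base_delay=0.2, sleep=time.sleep):
    """Call fetch_score(user_id), backing off and retrying while it returns Error 54.

    Returns the first response whose status is not 54, or the last response
    if every attempt hit Error 54.
    """
    response = None
    for attempt in range(attempts):
        response = fetch_score(user_id)
        if response.get("status") != NO_SCORABLE_EVENTS:
            return response
        if attempt < attempts - 1:
            # Exponential backoff: 0.2s, 0.4s, 0.8s with the defaults.
            sleep(base_delay * 2 ** attempt)
    return response


# Usage with a stand-in for the real API call: fails twice, then succeeds.
canned = iter([{"status": 54}, {"status": 54}, {"status": 0, "score": 0.12}])
result = score_with_retry(lambda user_id: next(canned), "user-123", sleep=lambda s: None)
print(result)
```

Passing `sleep` as a parameter keeps the function testable without real waits. Whether you retry inline like this or push scoring onto a queue depends on how much latency your checkout flow can tolerate.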

Pricing is "contact sales" which means expensive. My manager nearly choked when he saw the quote - expect mid-five figures minimum if you have any meaningful volume. But if it prevents a few major fraud incidents, it pays for itself. We had one weekend where fraudsters got through our old system and cost us $30K in chargebacks.

The platform works best when you feed it quality data. Garbage in, garbage out. I spent a month fine-tuning event instrumentation because I was getting false positives on half our European customers. Do this right or you'll just have an expensive way to piss off legitimate users.