Most AI coding tools are just ChatGPT with some code examples thrown in. This one was built for coding from the ground up, which is why it doesn't lecture you about "best practices" when you just want to fix a fucking bug.

Actually Built for Coding, Not Just Adapted

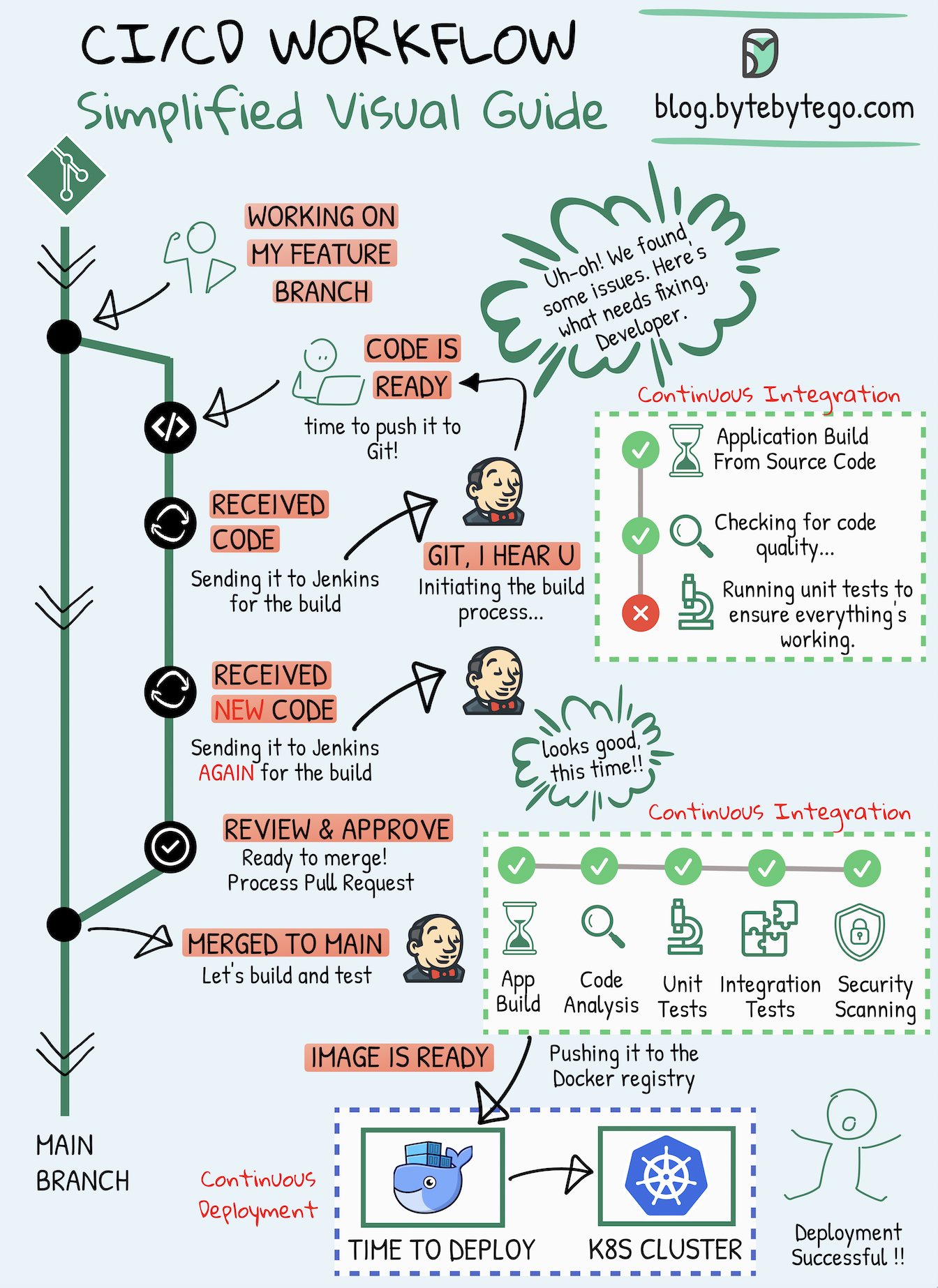

Instead of starting with a general chatbot and teaching it to code, xAI trained this model on programming data from the beginning. They used real pull requests and actual developer workflows, not synthetic examples. Working with tools like Cursor and Cline during training probably helped; it shows they understood what developers actually need.

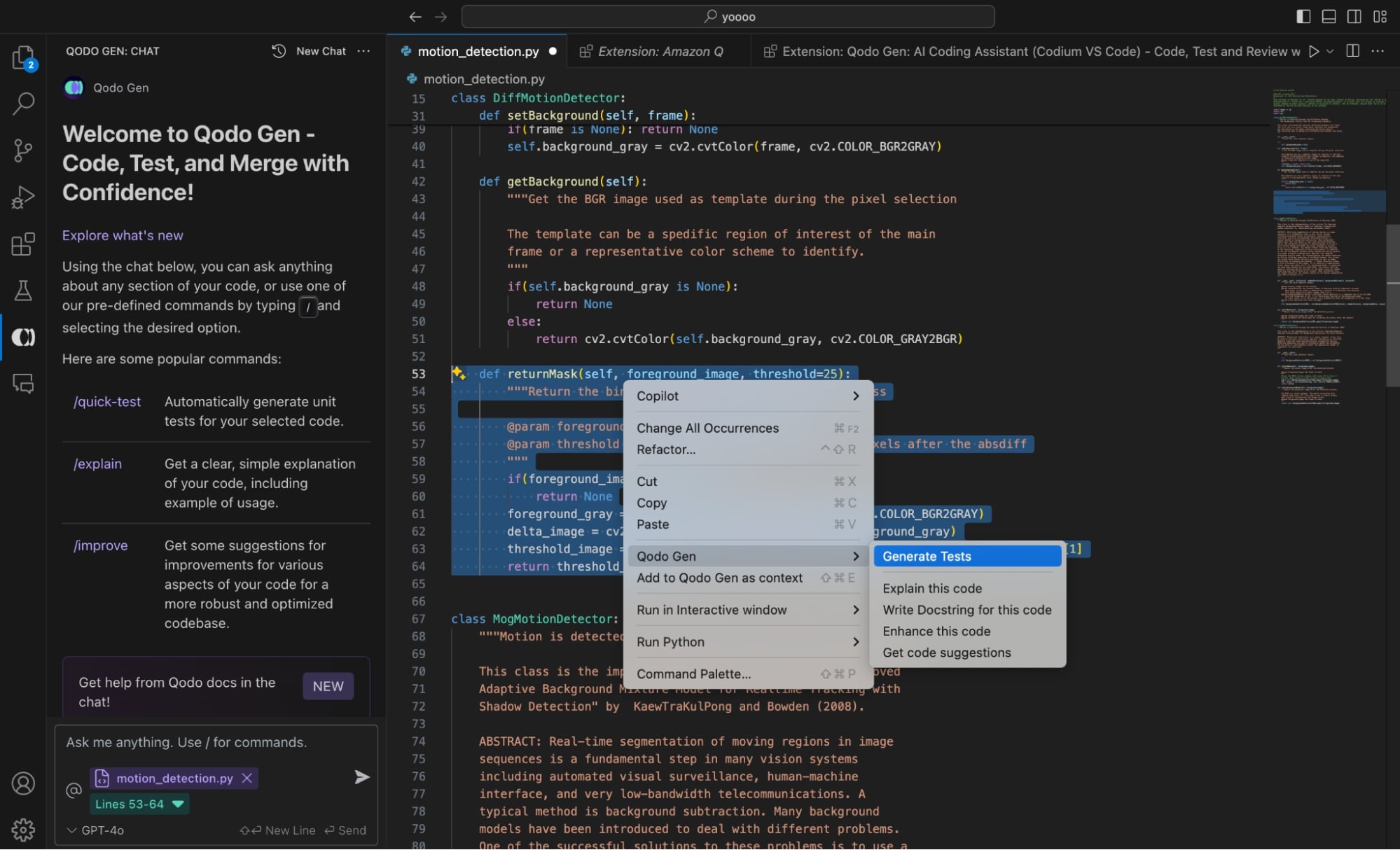

It knows what grep and git are without me explaining version control like it's a fucking intern. When I say "fix this test," it actually edits the test file instead of giving me a lecture about testing frameworks.

Don't expect miracles. It chokes on anything more complex than fixing a React component. I asked it to refactor our authentication flow and it suggested storing JWT tokens in localStorage, with a cheerful comment about "modern web development best practices." Great idea, right up until a single XSS bug lets an injected script read every token and your security audit turns into a nightmare. It also sometimes writes a useEffect with no dependency array that sets state, which triggers an infinite re-render loop. Classic tutorial mistake, and it pegged my CPU for 20 minutes while I figured out why my React app was using 100% of everything.
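The usual alternative to localStorage is to have the server hand the token back as an HttpOnly cookie, which page JavaScript (including an XSS payload) can't read. Here's a minimal sketch of building that `Set-Cookie` header value; `buildSessionCookie` and its options are hypothetical names, and this is not a complete auth design: XSS can still fire requests as the user, it just can't walk off with the token.

```typescript
// Hypothetical helper: build a Set-Cookie header value for a session token.
// HttpOnly hides the cookie from document.cookie, unlike localStorage,
// which any script running on the page can read.
interface SessionCookieOptions {
  name: string;
  token: string;
  maxAgeSeconds: number;
}

function buildSessionCookie({ name, token, maxAgeSeconds }: SessionCookieOptions): string {
  const attributes = [
    `${name}=${encodeURIComponent(token)}`,
    "Path=/",
    `Max-Age=${maxAgeSeconds}`,
    "HttpOnly", // not exposed to page JavaScript
    "Secure", // only sent over HTTPS
    "SameSite=Strict", // not sent on cross-site requests, which helps against CSRF
  ];
  return attributes.join("; ");
}

const header = buildSessionCookie({ name: "session", token: "abc.def.ghi", maxAgeSeconds: 3600 });
console.log(header); // session=abc.def.ghi; Path=/; Max-Age=3600; HttpOnly; Secure; SameSite=Strict
```

Your server framework sends this string as the `Set-Cookie` response header after login; the browser then attaches the cookie automatically, so the frontend never touches the token at all.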

Fast Enough to Actually Use Interactively

At 92 tokens per second, this thing responds fast enough that you can actually have a conversation with it. Compare that to Claude or GPT-4 where you craft a perfect prompt, wait 45 seconds, then spend another 10 minutes adapting their response to your actual codebase.
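To put 92 tokens per second in wall-clock terms, here's the back-of-the-envelope arithmetic. It's a rough sketch that only divides output length by throughput and ignores network latency and time-to-first-token, so real responses will take a bit longer.

```typescript
// Rough streaming time for a response: output tokens / throughput.
// Ignores network latency and time-to-first-token.
function generationSeconds(outputTokens: number, tokensPerSecond: number): number {
  return outputTokens / tokensPerSecond;
}

const tps = 92; // throughput figure quoted above
for (const tokens of [200, 1000, 4000]) {
  console.log(`${tokens} tokens: ~${generationSeconds(tokens, tps).toFixed(1)} s`);
}
// 200 tokens: ~2.2 s
// 1000 tokens: ~10.9 s
// 4000 tokens: ~43.5 s
```

A typical few-hundred-token fix lands in a couple of seconds, which is what makes the back-and-forth usable. A 4,000-token rewrite still takes around 43 seconds, so the speed advantage is biggest on small, targeted asks.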

With Grok, I can ask "fix this function," see what it does, then immediately follow up with "actually, make it handle edge case X" without losing my train of thought. The caching means second requests on the same project are nearly instant.
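Provider-side prompt caches generally reuse work for an identical leading prefix of the prompt, so the trick is to keep the big, unchanging stuff (system prompt, pasted project files) at the front and only append new turns. This is a sketch under that assumption; check xAI's docs for how their cache actually keys requests. The system prompt text and helper names are hypothetical.

```typescript
// Assumption: the cache reuses an identical leading prefix of the prompt.
// Keep the expensive, stable context first and only ever append new turns.
type Role = "system" | "user" | "assistant";
interface Message { role: Role; content: string; }

function buildConversation(projectContext: string, turns: Message[]): Message[] {
  return [
    { role: "system", content: "You are a coding assistant." }, // hypothetical system prompt
    { role: "user", content: `Project files:\n${projectContext}` },
    ...turns, // new turns go after the shared prefix, never before it
  ];
}

// Count how many leading messages two requests share verbatim.
function sharedPrefixLength(a: Message[], b: Message[]): number {
  let i = 0;
  while (i < a.length && i < b.length && a[i].role === b[i].role && a[i].content === b[i].content) {
    i++;
  }
  return i;
}

const context = "export function login(user: string) { return user.length > 0; }";
const first = buildConversation(context, [{ role: "user", content: "fix this function" }]);
const second = buildConversation(context, [
  { role: "user", content: "fix this function" },
  { role: "assistant", content: "(previous answer)" },
  { role: "user", content: "actually, make it handle edge case X" },
]);
console.log(sharedPrefixLength(first, second)); // 3: the follow-up reuses everything the first request sent
```

If you edit the pasted files between requests, the prefix changes from that point on and the cache can only help with whatever comes before the edit.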

This completely changes your workflow. Instead of crafting perfect prompts and praying, you can actually iterate: ask questions, get answers, tweak, repeat. It's like pair programming with someone who types fast but occasionally has no idea what you're actually trying to build.

The Specs That Actually Matter

The 256K context window sounds big until you paste in a few React components and realize you've used 80K tokens on what feels like a tiny project. The reasoning traces actually help debug what it's thinking, unlike other models that just spit out code with no explanation.
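If you want a rough idea of how much of the window a paste will eat before you send it, the common rule of thumb is about 4 characters per token for English text and code. This is a heuristic sketch, not the model's real tokenizer, so treat the numbers as ballpark.

```typescript
// Rough context-budget check. ~4 chars/token is a common heuristic;
// the model's actual tokenizer will give different counts.
const CONTEXT_WINDOW_TOKENS = 256_000;
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

function contextUsage(files: string[]): { tokens: number; fraction: number } {
  const tokens = files.reduce((sum, f) => sum + estimateTokens(f), 0);
  return { tokens, fraction: tokens / CONTEXT_WINDOW_TOKENS };
}

// Stand-ins for two large source files (120K and 200K characters).
const fakeFiles = ["x".repeat(120_000), "y".repeat(200_000)];
const usage = contextUsage(fakeFiles);
console.log(usage.tokens, usage.fraction); // 80000 0.3125
```

By this estimate, 80K tokens is around 320,000 characters, a bit under a third of the window, and conversation history and the model's own output count against the same budget.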

314 billion parameters sounds impressive until you realize it's a mixture-of-experts model, so only a fraction of those parameters are active for each token it processes. It still performs well: it scored 70.8% on SWE-Bench Verified, which puts it among the stronger coding models on that benchmark.
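Here's a toy version of what "only a fraction run" means. In a mixture-of-experts layer, a router scores every expert for each token, only the top-k experts are actually evaluated, and their outputs are blended with softmax weights. This is a generic illustration of top-k routing, not xAI's architecture: the 8 experts, k = 2, the fixed router scores, and the scalar "experts" are all made up for clarity.

```typescript
// Toy top-k mixture-of-experts routing for a single token.
// Real experts are feed-forward networks and router scores come from a learned
// projection of the token's hidden state; here both are simplified.
type Expert = (x: number) => number;

function softmax(xs: number[]): number[] {
  const max = Math.max(...xs); // subtract max for numerical stability
  const exps = xs.map((v) => Math.exp(v - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

function moeForward(x: number, routerScores: number[], experts: Expert[], k: number) {
  // Indices of the k highest router scores.
  const topK = routerScores
    .map((score, i) => ({ score, i }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
  const weights = softmax(topK.map((t) => t.score));
  let output = 0;
  topK.forEach((t, j) => {
    output += weights[j] * experts[t.i](x); // only these k experts are evaluated
  });
  return { output, activeExperts: topK.map((t) => t.i) };
}

const experts: Expert[] = Array.from({ length: 8 }, (_, i) => (x: number) => x * (i + 1));
const result = moeForward(1, [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0], experts, 2);
console.log(result.activeExperts); // [ 4, 1 ]
```

With 2 of 8 experts chosen, the other six experts' weights sit idle for that token. Shared parts of the network such as attention always run, so the active fraction of the total parameter count is somewhat higher than 2/8.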

The tool integration is the real win. It can actually run git commands and edit files instead of making you copy-paste everything. Though it sometimes gets confused about file paths and will confidently edit the wrong file while acting like it knows exactly what it's doing.
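If you're wiring up your own file-edit tool for an agent, a cheap guardrail is to resolve whatever path the model asks for against the workspace root and refuse anything outside it. This hypothetical sketch only catches edits that escape the repo (via `..` or an absolute path); it won't stop the model from confidently picking the wrong file inside the repo, though logging the resolved path before writing makes that easier to spot. It handles POSIX-style paths only and does not resolve symlinks.

```typescript
// Hypothetical guard for an agent's file-edit tool (POSIX paths, no symlink handling).
function normalizePosix(path: string): string {
  const parts: string[] = [];
  for (const segment of path.split("/")) {
    if (segment === "" || segment === ".") continue;
    if (segment === "..") parts.pop(); // step up one directory
    else parts.push(segment);
  }
  return "/" + parts.join("/");
}

// Returns the absolute target path, or null if it would land outside the root.
function resolveInWorkspace(root: string, requested: string): string | null {
  const base = normalizePosix(root);
  const target = requested.startsWith("/")
    ? normalizePosix(requested)
    : normalizePosix(`${base}/${requested}`);
  const prefix = base === "/" ? "/" : `${base}/`;
  const inside = target === base || target.startsWith(prefix);
  return inside ? target : null;
}

console.log(resolveInWorkspace("/repo", "src/auth.ts")); // /repo/src/auth.ts
console.log(resolveInWorkspace("/repo", "../other-repo/auth.ts")); // null
```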

The Reality Check

The 70.8% on SWE-Bench Verified sounds impressive until you realize it probably never had to debug a React useEffect that re-renders infinitely because it references a function defined inside the component. SWE-Bench Verified is built from GitHub issues in Python repositories, not 'why the fuck is my component updating 500 times per second.' In practice, it's good at straightforward tasks and completely loses its shit on complex refactoring. It works well for React components, less well for fixing distributed systems issues.
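Here's why that useEffect bug happens. React decides whether to re-run an effect by comparing each dependency with `Object.is` against its value from the previous render, and a function defined inside the component is a brand-new object on every render. The sketch below is a simplified model of that check, not React's source; `shouldRunEffect` and `render` are hypothetical names.

```typescript
// Simplified model of React's dependency check (not the real implementation).
// prevDeps === undefined stands in for the first mount; nextDeps === undefined
// means "no dependency array", which re-runs the effect after every render.
function shouldRunEffect(prevDeps: unknown[] | undefined, nextDeps: unknown[] | undefined): boolean {
  if (prevDeps === undefined || nextDeps === undefined) return true;
  if (prevDeps.length !== nextDeps.length) return true;
  return nextDeps.some((dep, i) => !Object.is(dep, prevDeps[i]));
}

// Each "render" creates a fresh function object, just like an inline function in a component body.
function render(): unknown[] {
  const loadData = () => "data";
  return [loadData];
}

console.log(shouldRunEffect(render(), render())); // true: deps "change" on every render

const stableLoadData = () => "data"; // defined once, outside the component
console.log(shouldRunEffect([stableLoadData], [stableLoadData])); // false
```

If that effect also calls a state setter, the state update triggers a render, the render creates a new function, the effect runs again, and you're in the loop. The standard fixes are to move the function outside the component, define it inside the effect, or wrap it in `useCallback` with its own correct dependencies.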

Does it actually make you faster? For me, yeah - mostly because I can iterate quickly instead of waiting 30 seconds between each attempt. But if you're building a complex distributed system, you'll still need actual human brains to figure out the architecture.

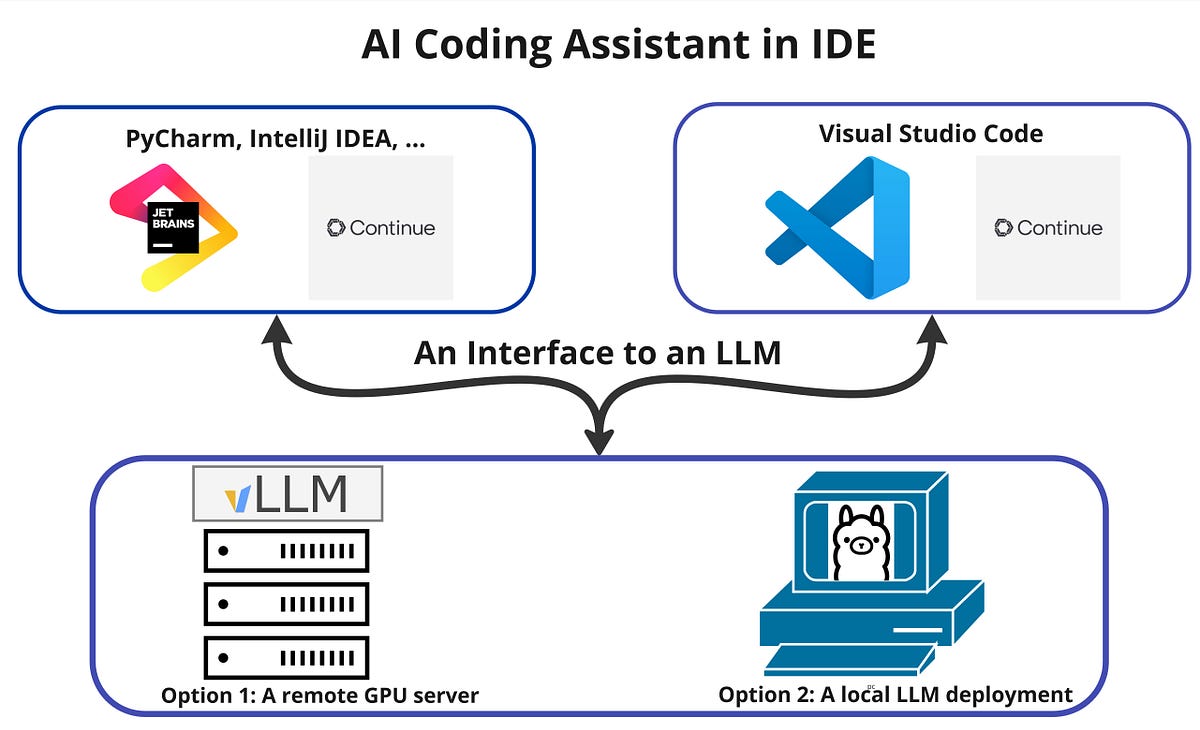

Where You Can Actually Use It

Available through Cursor, Cline, GitHub Copilot, and some other tools. Free until September 2025, then $0.20 per million input tokens and $1.50 per million output tokens. The pricing is reasonable unless you're doing massive refactoring all day.
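To sanity-check what "reasonable" means for your own usage, here's the cost arithmetic at those list prices. It's a sketch that ignores any cached-input discount and the free period, and the daily usage numbers are hypothetical.

```typescript
// Cost at list prices: $0.20 per 1M input tokens, $1.50 per 1M output tokens.
// Ignores cached-input pricing and free periods.
const INPUT_USD_PER_MILLION = 0.2;
const OUTPUT_USD_PER_MILLION = 1.5;

function costUsd(inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens / 1_000_000) * INPUT_USD_PER_MILLION +
    (outputTokens / 1_000_000) * OUTPUT_USD_PER_MILLION
  );
}

// Hypothetical heavy day: 200 requests, each sending 50K tokens of context and getting 2K back.
const requests = 200;
const daily = costUsd(requests * 50_000, requests * 2_000);
console.log(daily.toFixed(2)); // 2.60
```

Output tokens cost 7.5× more per token, but in context-heavy agent work the input side usually dominates: here $2.00 of the $2.60 is the 10M tokens of context you keep resending.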

API compatible with OpenAI SDKs, so switching is usually just changing the endpoint URL. Though their docs are garbage - three examples total and none of them work with the newer OpenAI SDK v4.52.0+ because of breaking changes. Expect some trial and error during setup.
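Since the SDK examples are flaky, it can help to see the raw request an OpenAI-compatible client sends, with nothing hidden behind SDK versions. This sketch builds (but doesn't send) a chat completions request; the base URL `https://api.x.ai/v1` and the model id `grok-code-fast-1` are assumptions to verify against xAI's current docs, and `buildChatRequest` is a hypothetical helper.

```typescript
// Builds an OpenAI-style chat completions request without sending it.
// Assumed values (check xAI's docs): base URL https://api.x.ai/v1, model "grok-code-fast-1".
interface ChatMessage { role: "system" | "user" | "assistant"; content: string; }

interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildChatRequest(baseUrl: string, apiKey: string, model: string, messages: ChatMessage[]): HttpRequest {
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`, // same path as OpenAI's API
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  };
}

const req = buildChatRequest("https://api.x.ai/v1", "YOUR_XAI_API_KEY", "grok-code-fast-1", [
  { role: "user", content: "fix this test" },
]);
console.log(req.url); // https://api.x.ai/v1/chat/completions
// To send it (Node 18+ or a browser):
// const res = await fetch(req.url, { method: req.method, headers: req.headers, body: req.body });
```

With the official `openai` npm package, the equivalent switch is passing `baseURL` to the client constructor. Given the version breakage mentioned above, pin the SDK version you tested against.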

Whether this is worth switching depends on how much waiting around pisses you off. For quick prototyping and debugging, the speed difference is huge. For complex architectural work or anything involving legacy systems, you'll still need Claude or actual human brains.