The Reality of Enterprise-Scale Discovery

Standard Migration Center setup breaks in predictable ways at enterprise scale. You have 3 ways to deploy this. Use option 2 unless you hate yourself. Here's what actually works after you've been burned twice by the default approach in environments with 5,000+ assets, complex networks, and legacy systems that haven't been touched since 2019.

Discovery Client Architecture for Scale

The discovery client 6.3.7 has performance improvements, but Google designed this thing for toy environments with 1,000 servers in a single data center. Real enterprises with multiple vCenters and legacy infrastructure? Yeah, you're on your own. The August 6, 2025 release fixed ZFS partition issues and floppy disk drives showing up in Linux scans (seriously, who still has floppy drives in 2025?), but they still haven't fixed the core scaling problems.

Deploy Multiple Discovery Clients Strategically

Instead of trying to scan everything from one discovery client, deploy multiple instances with clear boundaries:

- One discovery client per major data center - Network latency and firewall rules make cross-data center scanning unreliable

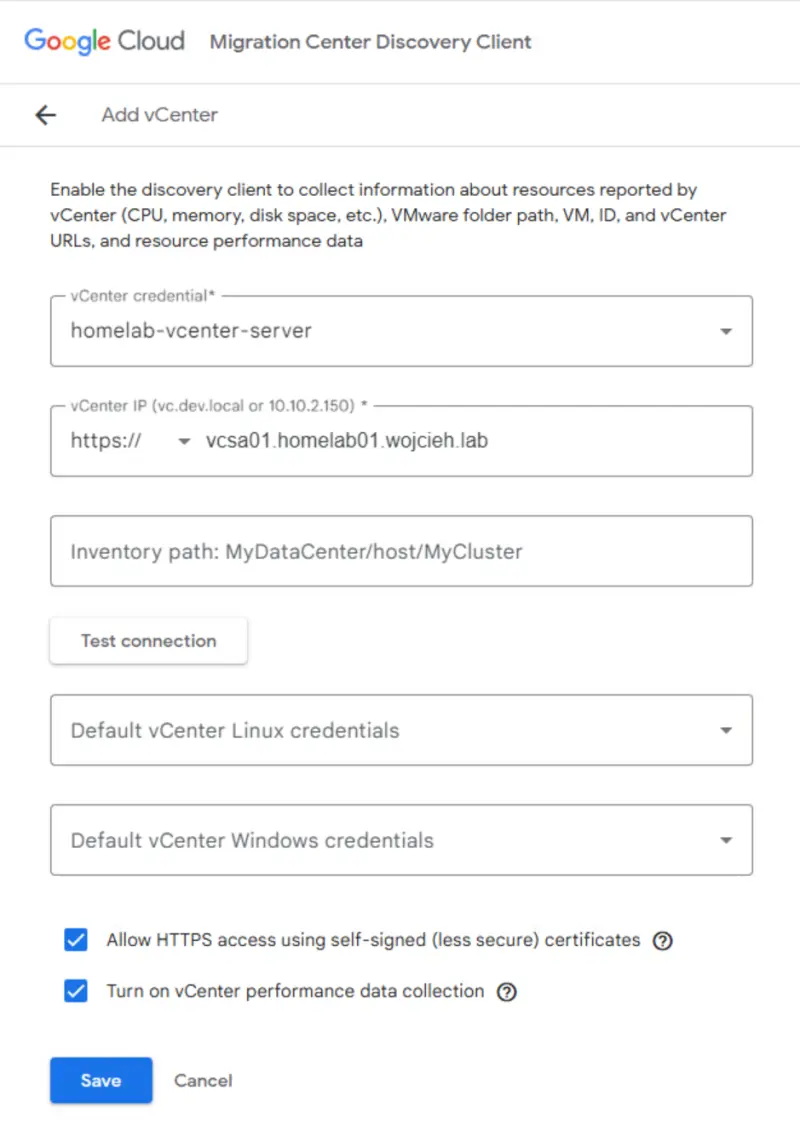

- Separate discovery clients for different credential domains - VMware environments with different service accounts, AWS accounts with different IAM roles

- Dedicated discovery clients for performance-sensitive environments - Production databases and critical applications should be scanned during maintenance windows with separate agents

Each discovery client maintains its own database and scan schedule, but all upload to the same Migration Center project. This approach prevents the single-point-of-failure issues that plague large monolithic discovery deployments.

Optimize Scan Scheduling for Production Impact

The custom scheduling feature introduced in version 6.3.0 lets you define opt-out schedules per server. This is critical for production environments where even the lightweight guest collection scripts can impact performance during peak hours.

What actually works in production:

- Development/test servers: Scan during business hours (9 AM - 5 PM)

- Production applications: Scan only during maintenance windows (Sunday 2-6 AM)

- Database servers: Scan during low-transaction periods (avoid month-end, quarter-end)

- Legacy systems: Manual coordination with application owners before any scanning

The discovery client collects performance metrics over time, so inconsistent scanning schedules will result in incomplete utilization data and inaccurate rightsizing recommendations.

Network and Credential Management at Scale

Managing Credentials Without Losing Your Mind

Most large discoveries die here. Google's credential management works great if you have one AD domain and a simple setup. Got 12 different AD forests, legacy systems with local accounts, and a security team that changes passwords weekly? Good luck.

Segmentation approach:

- Domain credentials: One set per AD domain/forest

- Local admin accounts: Separate credentials for servers not joined to domain

- Service accounts: Dedicated accounts for VMware vCenter, database instances

- Cloud credentials: AWS IAM roles, Azure service principals

Store credentials in enterprise password managers, not in the discovery client interface. Use the credential reset functionality to rotate accounts monthly as part of security compliance.

Network Dependencies and Firewall Planning

The discovery client needs network access on multiple protocols, and enterprise firewalls rarely have the ports configured correctly from the start. Plan for these network requirements:

Outbound from discovery client:

- TCP 443: HTTPS to Google Cloud APIs (required for uploading data)

- TCP 443: HTTPS to vCenter APIs (for VMware discovery)

- TCP 22: SSH to Linux servers (for guest-level collection)

- TCP 135, 445: WMI to Windows servers (for guest-level collection)

- TCP 3389: RDP for Windows troubleshooting (optional but recommended)

Inbound to target servers:

- Network scanning requires ICMP ping responses

- SSH daemon must accept connections from discovery client IP

- Windows Firewall must allow WMI traffic from discovery client subnet

Most enterprise network teams will push back on opening these ports broadly. The compromise solution is to deploy discovery clients inside each network segment, minimizing firewall rule changes.

Data Collection Optimization

Performance Impact Mitigation

Even with the performance improvements in version 6.3.4, guest-level collection can impact target systems during collection. The discovery client sets Linux collection scripts to run with higher nice levels and optimizes Windows collection, but this still causes CPU spikes on resource-constrained systems.

What actually happens to your servers:

- CPU impact: 5-15% CPU spike for 30-60 seconds (or until your monitoring explodes)

- Memory usage: 50-100MB temporary increase (watch out on 2GB legacy boxes)

- Disk I/O: "Minimal" until it hits that ancient Windows 2008 server with a dying disk

- Network bandwidth: 1-5MB per server (multiply by 2,000 servers during peak hours)

I learned this the hard way when discovery scanning triggered a cascade failure on a production ERP system running at 95% CPU. That was a fun Saturday morning explaining to the CTO why payroll was down.

Storage and Database Performance

The discovery client stores collected data in a local SQLite database before uploading to Migration Center. For large environments, this database can grow to several gigabytes and become a performance bottleneck.

Database maintenance practices:

- Monitor database size: Check

C:\ProgramData\Google\mcdc\data\folder size weekly - Storage requirements: Plan for 2-5MB per discovered server for historical data

- Backup strategy: Export collected data to Migration Center before major discovery client upgrades

- Database corruption: Use the recovery commands if database issues occur

The discovery client periodically cleans up old data, but environments with frequent re-scanning can accumulate significant data volumes.

Integration with Enterprise Tools

CMDB and Asset Management Integration

Migration Center doesn't integrate directly with enterprise Configuration Management Databases (CMDBs), but you can bridge this gap with the CSV import functionality. Export server data from ServiceNow, BMC Remedy, or other CMDB tools and import into Migration Center to supplement automated discovery.

Common integration scenarios:

- Asset ownership data: Application owners, business units, cost centers

- Compliance classifications: PCI, HIPAA, SOX scope servers

- Change management schedules: Maintenance windows, upgrade schedules

- Business criticality: Tier 1/2/3 application classifications

This manual data enrichment is essential for meaningful migration wave planning and risk assessment.

Monitoring and Alerting Integration

The discovery client generates logs that you can forward to Cloud Logging, but enterprise environments need integration with existing monitoring tools. Export discovery client logs to SIEM platforms like Splunk or elastic stack for centralized monitoring.

Key metrics to monitor:

- Discovery success rates: Percentage of servers successfully scanned per cycle

- Authentication failures: Credential expiration or permission changes

- Network timeouts: Firewall or connectivity issues preventing discovery

- Resource utilization: Discovery client CPU, memory, and storage usage

Set up alerts for discovery success rates below 95% - this indicates systematic issues that need immediate attention.

Essential Resources for Scale Operations:

- Discovery Client Performance Tuning - Configuration best practices for large environments

- Enterprise Migration Architecture - Multi-phase planning approach for complex migrations

- Credential Management Guide - Security controls and rotation strategies

- Network Dependencies Analysis - Planning integration points and mapping

- Cloud Migration Best Practices - Organizational readiness and validation techniques

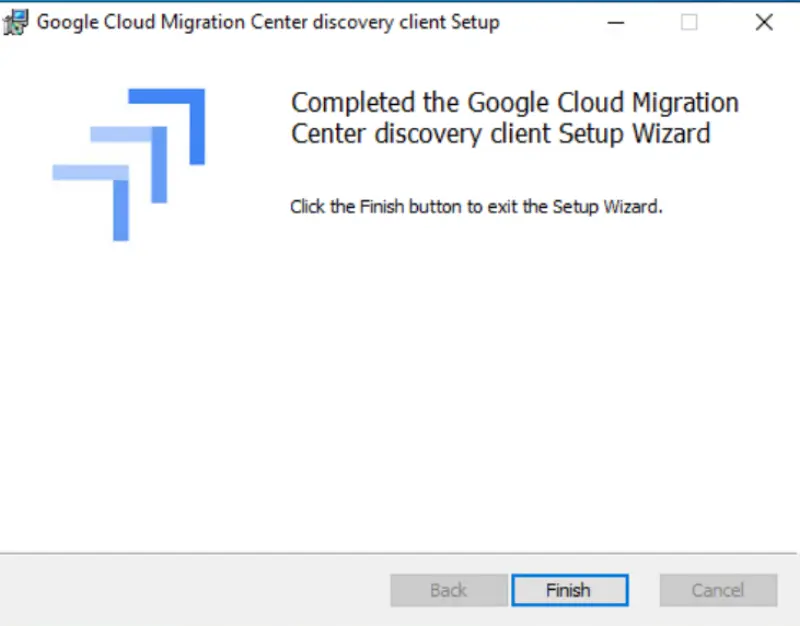

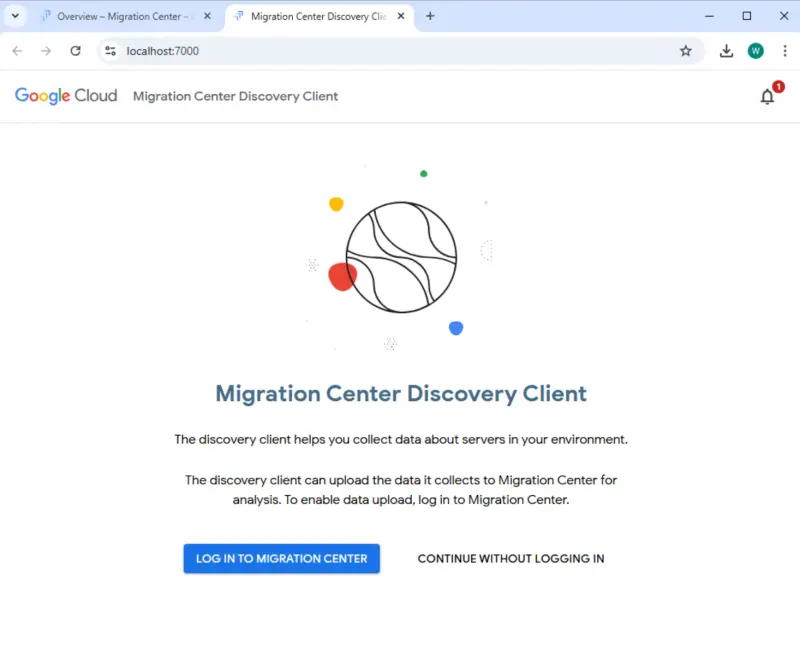

- Discovery Client Installation Process - Step-by-step deployment instructions

- Discovery Client Installation Requirements - System requirements and network planning

- Discovery Client Collection Methods - WMI, SSH, and credential configuration

- Start Asset Discovery - Server and VM discovery configuration

- Google Cloud Security Best Practices - Enterprise security configurations and controls

- Migration Center Service Perimeter - VPC Security Controls for enterprise environments

- Disconnected Environment Support - Air-gapped network deployment