The Enterprise AI Reality Check: It's Already Happening


Here's what's actually going down in September 2025. Your senior engineers are copy-pasting from ChatGPT into production code. Your junior devs are using Claude to write entire modules. Your security team has no idea what AI-generated code is shipping to customers.

20 million developers are already using some form of Copilot, and Gartner says GitHub is winning the AI coding war. But here's the thing nobody mentions in those polished reports: most enterprises have zero control over this shit.

Why Enterprise Actually Matters (And Why You'll Hate It)

Repository indexing sounds amazing until it backfires: The AI learns from your entire codebase, which is great until it starts suggesting patterns from that 2015 legacy PHP monolith, or recommends mysql_real_escape_string for everything (an API PHP 7 removed outright) because that's what it learned from your technical debt.

I watched one team get excited about organization-wide context until Copilot started suggesting their deprecated internal API methods in new code. The AI was technically right - this IS how the company writes code. It just learned from all the wrong examples.
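The cheapest defense is a dumb check at review time that flags added lines using patterns you've already deprecated, so suggestions the AI picked up from your legacy code get caught before they merge. Here's a minimal sketch, assuming you feed it a unified diff; the deny-list is illustrative, and the `legacy_api.` prefix is a hypothetical stand-in for whatever internal API your team killed off:

```python
import re

# Hypothetical deny-list of deprecated patterns -> advice for the reviewer.
# mysql_real_escape_string comes from the legacy PHP example; legacy_api. is a made-up internal prefix.
DEPRECATED_PATTERNS = {
    r"\bmysql_real_escape_string\s*\(": "use parameterized queries instead",
    r"\blegacy_api\.\w+": "deprecated internal API, use the v2 client",
}


def flag_deprecated(diff_text: str) -> list[tuple[int, str, str]]:
    """Return (diff_line_number, added_code, advice) for each added line matching a deprecated pattern."""
    findings = []
    for lineno, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect lines the change adds; '+++' is the file header, not code.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        for pattern, advice in DEPRECATED_PATTERNS.items():
            if re.search(pattern, added):
                findings.append((lineno, added.strip(), advice))
    return findings


if __name__ == "__main__":
    sample_diff = """\
--- a/user.php
+++ b/user.php
@@ -1,2 +1,3 @@
 $name = $_POST['name'];
+$safe = mysql_real_escape_string($name);
+$ok = strlen($safe) > 0;
"""
    for lineno, code, advice in flag_deprecated(sample_diff):
        print(f"diff line {lineno}: {code} -> {advice}")
```

Wire it into a pre-merge job and fail the build when the list is non-empty. Regexes won't catch every variant, but they're cheap enough to run on every PR.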

Administrative control is security theater: Sure, you get centralized policies, audit logging, and SAML integration. But here's what actually happens: audit logs generate 50,000 entries of noise per day, security teams can't tell which code is AI-generated without manually reviewing every commit, and your SAML integration breaks every time Microsoft updates something.
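Before the audit logs eat your Splunk license, find out which events are actually the noise. A minimal sketch, assuming an export in JSON Lines format with an `action` field on each event (GitHub's audit log events carry that field, but check your own export); the `copilot.example_noise_event` name in the sample is made up:

```python
import json
from collections import Counter


def top_actions(jsonl_lines, n=3):
    """Count audit-log events by their 'action' field and return the n most frequent (action, count) pairs."""
    counts = Counter()
    for raw in jsonl_lines:
        raw = raw.strip()
        if not raw:
            continue  # tolerate blank lines in the export
        event = json.loads(raw)
        counts[event.get("action", "<missing>")] += 1
    return counts.most_common(n)


if __name__ == "__main__":
    # Sample events; copilot.example_noise_event is a made-up action name.
    sample = [
        '{"action": "copilot.example_noise_event", "actor": "alice"}',
        '{"action": "copilot.example_noise_event", "actor": "bob"}',
        '{"action": "repo.create", "actor": "carol"}',
        '{"action": "copilot.example_noise_event", "actor": "alice"}',
    ]
    for action, count in top_actions(sample):
        print(f"{count:>6}  {action}")
```

For a real export, pass an open file handle instead of the sample list. Once you know the top few actions, filter them at the forwarder instead of paying to index them.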


Copilot Spaces replaces Knowledge Bases (September 12, 2025): Knowledge bases are getting killed off in favor of Copilot Spaces. The migration is "automatic," but what they don't tell you is that half your curated internal documentation will get scrambled in the transition. Plan to spend 2 weeks fixing broken links and missing context.
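If you're the one cleaning up after the migration, at least let a script find the dead links before your developers do. A minimal sketch, assuming the curated docs exist as Markdown files in a local directory; it only checks inline relative links (`[text](path)`), not external URLs or heading anchors:

```python
import re
import tempfile
from pathlib import Path

# Inline Markdown links: [text](target). Reference-style links are not handled.
LINK_RE = re.compile(r"\[[^\]]*\]\(([^)\s]+)\)")
# Anything with a URL scheme (https:, mailto:, ...) is external and skipped.
SCHEME_RE = re.compile(r"^[a-zA-Z][a-zA-Z0-9+.-]*:")


def broken_relative_links(docs_root: str) -> list[tuple[str, str]]:
    """Return (markdown_file, link_target) pairs whose relative target does not exist on disk."""
    root = Path(docs_root)
    broken = []
    for md_file in sorted(root.rglob("*.md")):
        text = md_file.read_text(encoding="utf-8")
        for target in LINK_RE.findall(text):
            if SCHEME_RE.match(target) or target.startswith("#"):
                continue  # external URL or in-page anchor
            path_part = target.split("#", 1)[0]  # drop a '#section' suffix
            if not (md_file.parent / path_part).exists():
                broken.append((str(md_file.relative_to(root)), target))
    return broken


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Tiny docs tree: one good link, one dead link, one external URL.
        Path(tmp, "setup.md").write_text("# Setup\n", encoding="utf-8")
        Path(tmp, "index.md").write_text(
            "[Setup](setup.md) [Old KB](kb/auth.md#tokens) [Docs](https://docs.github.com)\n",
            encoding="utf-8",
        )
        for source, target in broken_relative_links(tmp):
            print(f"{source}: broken link -> {target}")
```

It won't tell you which context went missing, but it turns "2 weeks of clicking around" into a checklist.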

The Features That Actually Work (And The Ones That Don't)

Custom models take 6 months and still suck: Custom model training sounds like the holy grail - AI that understands YOUR code patterns. Reality check: it takes 6 months to train, requires your senior architects to babysit the process, and then breaks spectacularly when someone refactors the main architecture.

One company spent $500K training a model on their Java microservices. The AI learned perfectly... including all their Spring Security configuration mistakes from 2019. Now it confidently suggests vulnerable authentication patterns.

IP indemnification is mostly meaningless: GitHub offers copyright indemnification, which sounds reassuring until you read the fine print tying coverage to having the duplicate-detection filter (the one that blocks suggestions matching public code) turned on. Sure, they'll defend you in court, but you'll still spend millions in legal fees proving the code was actually generated by Copilot rather than copied by a developer. Good luck with that audit trail.
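Nothing in the code itself records who (or what) wrote it, so any audit trail has to be captured at commit time. One option is a Git commit trailer. The `AI-Assisted` key below is a hypothetical team convention, not something Copilot or Git adds for you, and it only helps if developers actually set it. A minimal sketch that reads it back out of commit messages, using a stricter trailer rule than Git's own parser:

```python
import re

# Hypothetical team convention: commits with AI-generated code end with "AI-Assisted: <tool>".
TRAILER_KEY = "AI-Assisted"
TRAILER_RE = re.compile(r"^([A-Za-z0-9-]+):\s*(.+)$")


def parse_trailers(message: str) -> dict[str, str]:
    """Parse 'Key: value' lines from the last paragraph of a commit message.

    Stricter than Git's own rules: the paragraph counts as a trailer block
    only if every line in it looks like a trailer.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # a subject line alone has no trailer block
    trailers = {}
    for line in paragraphs[-1].strip().splitlines():
        match = TRAILER_RE.match(line.strip())
        if match is None:
            return {}  # ordinary prose, not a trailer block
        trailers[match.group(1)] = match.group(2).strip()
    return trailers


def ai_assisted_commits(messages: dict[str, str]) -> list[str]:
    """Return the commit IDs whose messages carry the AI-Assisted trailer."""
    return [sha for sha, msg in messages.items() if TRAILER_KEY in parse_trailers(msg)]


if __name__ == "__main__":
    commits = {
        "a1b2c3d": "Add retry logic to payment client\n\nAI-Assisted: copilot\nReviewed-by: Dana <dana@example.com>",
        "e4f5a6b": "Fix typo in README",
        "c7d8e9f": "Refactor session cache\n\nMoved eviction into its own module.",
    }
    print(ai_assisted_commits(commits))  # ['a1b2c3d']
```

If you'd rather not maintain a parser, `git interpret-trailers --parse` will extract trailers from a message for you.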

Enterprise "integration" means more complexity: Yes, it works with GitHub Advanced Security and Actions. What they don't mention is that every integration point is another failure mode. Your CI/CD pipeline that took 3 minutes now takes 8 minutes because of AI security scanning. Your Advanced Security alerts go from 100/day to 500/day because the AI generates code that triggers every possible warning.

The ROI Bullshit vs. Reality

GitHub's research claims amazing productivity gains. Here's what those numbers actually mean:

"55% faster coding" = mostly autocomplete: Yeah, developers type boilerplate faster. But they spend 3x longer debugging the AI's creative interpretations of what they actually wanted. The AI writes perfectly syntactically correct code that does completely the wrong thing.
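The arithmetic is worth doing before the headline number ends up in a budget deck. A back-of-the-envelope sketch: the 55% speedup and the 3x debugging multiplier are the figures above, reading "55% faster" as "55% less coding time" is an assumption, and the hours are a made-up example, so plug in your own:

```python
def net_hours(coding_h: float, debugging_h: float,
              coding_speedup: float = 0.55, debug_multiplier: float = 3.0) -> tuple[float, float]:
    """Return (baseline_hours, ai_hours) for one task.

    Assumes "55% faster" means coding time drops by 55%, and that debugging
    time gets multiplied by debug_multiplier once AI-generated code is involved.
    """
    baseline = coding_h + debugging_h
    with_ai = coding_h * (1 - coding_speedup) + debugging_h * debug_multiplier
    return baseline, with_ai


def break_even_debug_ratio(coding_speedup: float = 0.55, debug_multiplier: float = 3.0) -> float:
    """Debugging/coding ratio above which the AI makes the task slower overall.

    Solves c*(1 - s) + d*m = c + d for d/c, giving d/c = s / (m - 1).
    Only meaningful for debug_multiplier > 1.
    """
    return coding_speedup / (debug_multiplier - 1)


if __name__ == "__main__":
    # Made-up task profiles: 10 h of writing code, plus light or heavy debugging.
    for debug_h in (2, 4):
        baseline, with_ai = net_hours(10, debug_h)
        print(f"{debug_h} h debugging: {baseline:.1f} h without AI, {with_ai:.1f} h with AI")
    print(f"break-even debugging/coding ratio: {break_even_debug_ratio():.3f}")
```

With those numbers, the 10 h + 2 h task drops to 10.5 h, but the 10 h + 4 h task grows to 16.5 h. The AI only comes out ahead when debugging normally takes less than about 27.5% as long as writing the code; past that, the "faster" team is slower.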

"39% code quality improvement" = debatable: The AI writes consistent code. Unfortunately, it's consistently bad. It doesn't understand your performance requirements, your error handling patterns, or that you stopped using jQuery in 2018. Quality depends on whether you consider "follows the same bad patterns" an improvement.

"68% positive developer experience" = Stockholm syndrome: Developers like AI assistance the same way they like Stack Overflow - it saves time until it doesn't. Ask the same developers 6 months later, when they're debugging AI-generated race conditions, and see how positive they feel.

Mercedes-Benz says developers use saved time for "creative problem-solving." Translation: they spend the time they saved on boilerplate fixing the creative problems the AI introduced.

What Actually Changed in 2025 (And What Broke)

Multiple AI models = choice paralysis: Now you get GPT-4o, Claude 3.5, o1-preview, and Gemini. Sounds great until your team spends 2 hours in Slack debating which model is best for React vs. Python. Worse, they all suggest slightly different approaches to the same problem, creating consistency nightmares in code reviews.

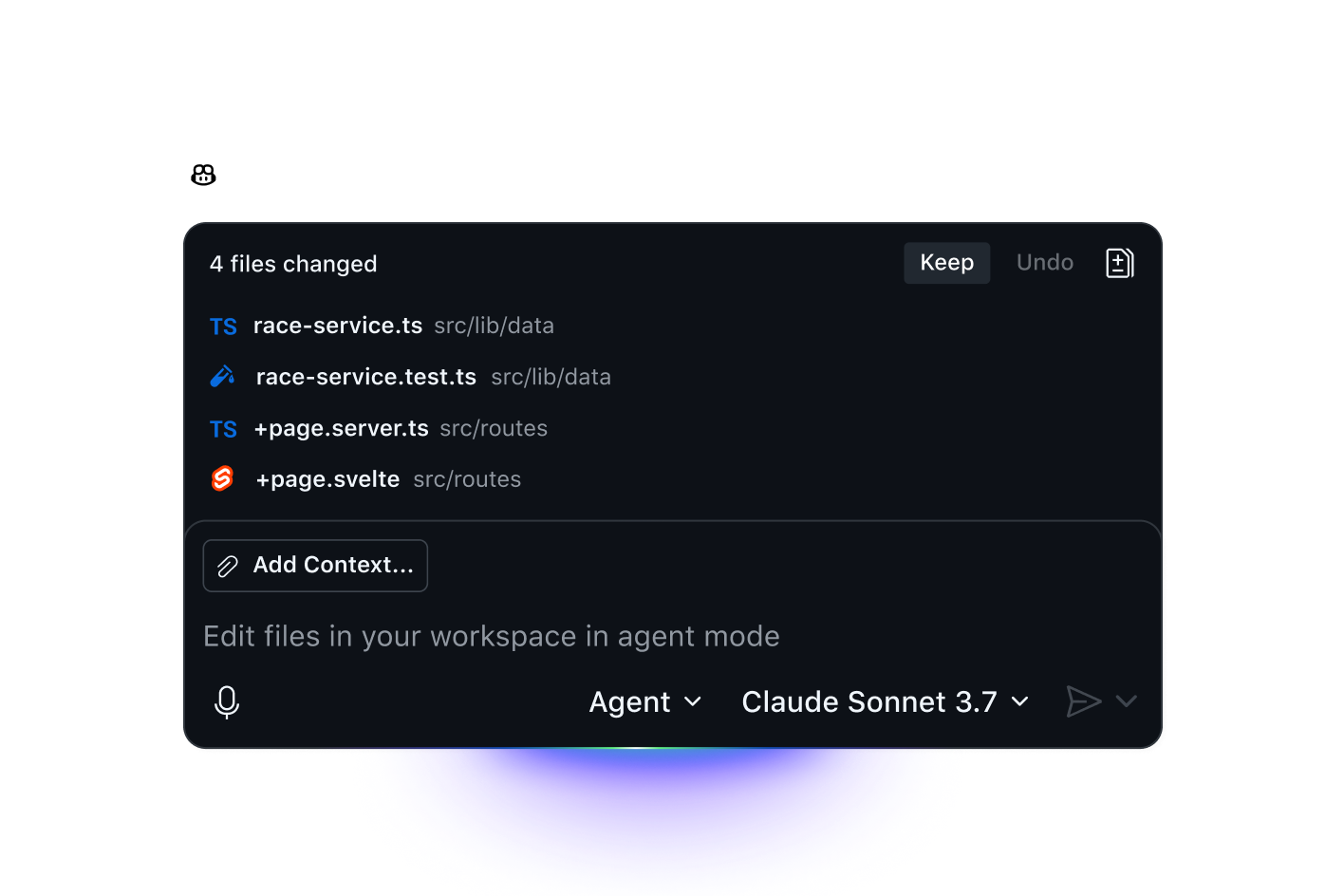

Coding agents are terrifying: Copilot coding agent can be assigned GitHub issues and opens PRs automatically. I watched one agent close 50 issues as "working as intended" because it learned from the support team's responses. Another agent spent 3 days implementing a feature that had been cancelled 6 months earlier because nobody updated the issue labels.
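If you're going to hand issues to an agent anyway, put a dumb gate in front of it. A minimal sketch, assuming you've already pulled issues into plain records; the `Issue` type, the label names (including the `agent-ok` opt-in), and the 90-day staleness cutoff are hypothetical choices, not part of any GitHub API:

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical policy knobs; rename to match your own labels.
BLOCKING_LABELS = {"cancelled", "wontfix", "needs-triage"}
OPT_IN_LABEL = "agent-ok"
MAX_STALENESS = timedelta(days=90)


@dataclass
class Issue:
    number: int
    title: str
    labels: set[str] = field(default_factory=set)
    updated_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def safe_for_agent(issue: Issue, now: datetime) -> tuple[bool, str]:
    """Decide whether an issue may be handed to a coding agent, and say why."""
    blocked = issue.labels & BLOCKING_LABELS
    if blocked:
        return False, f"blocked by label(s): {', '.join(sorted(blocked))}"
    if now - issue.updated_at > MAX_STALENESS:
        return False, f"stale: no updates in over {MAX_STALENESS.days} days"
    if OPT_IN_LABEL not in issue.labels:
        return False, f"missing the '{OPT_IN_LABEL}' opt-in label"
    return True, "ok"


if __name__ == "__main__":
    now = datetime(2025, 9, 15, tzinfo=timezone.utc)
    issues = [
        Issue(101, "Add CSV export", {"agent-ok"}, now - timedelta(days=3)),
        Issue(102, "Old billing feature", {"agent-ok"}, now - timedelta(days=200)),
        Issue(103, "Login flow bug", {"agent-ok", "cancelled"}, now - timedelta(days=1)),
    ]
    for issue in issues:
        ok, reason = safe_for_agent(issue, now)
        print(f"#{issue.number}: {'assign' if ok else 'skip'} ({reason})")
```

The explicit opt-in label is the design choice that matters: stale or cancelled work never reaches the agent by default, which is exactly the failure in the cancelled-feature story.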

"Enterprise governance" = bureaucracy nightmare: Sure, you can control which models teams access and exclude repositories. What you can't control is developers using their personal ChatGPT accounts when Copilot fails them. Your "comprehensive policies" become security theater while the real work happens outside your governance.

The Reality of Enterprise Implementation

Forget those bullshit "strategic phases." Here's what actually happens:

Weeks 1-8: Fighting with procurement and security - Your CISO wants a 47-page security review. Procurement needs three vendor comparisons. Legal wants to understand copyright liability. By week 8, your developers have already moved on to Claude Pro subscriptions.

Weeks 9-24: Configuration hell - Content exclusion policies break your CI/CD because everything depends on shared libraries. Audit logging fills your Splunk license with noise. SAML integration fails every other Tuesday.

Months 6-12: The AI learns your bad habits - Custom models trained on your codebase start suggesting all your deprecated patterns. Repository indexing means the AI confidently recommends code from that intern project that should never have been committed.

Month 12+: Maintenance nightmare - Models drift, APIs change, integrations break. You now have a full-time AI platform engineer whose job is keeping this shit running.

The brutal truth: Enterprise AI isn't about technology maturity. It's about organizational readiness to debug AI-generated problems at 3AM while your CEO asks why the AI revolution hasn't transformed productivity yet.