Automated Policy Management and SCIM Integration

Moving Beyond Manual Registry Hell

Manual policy management works for small teams, but enterprise-scale deployments require automation or you'll spend your life updating allowlists.

Managing Docker Registry Access Management (RAM) by hand is fine until you hit about 50 developers. Then it becomes a nightmare of constant policy updates, developers complaining they can't pull images, and admins who hate their lives. Trust me, by the time you hit 100 developers, manual registry management is your personal hell. SCIM provisioning with Docker Business automatically syncs user and group changes from your identity provider (IdP), which sounds great until it breaks. When employees join a team, SCIM should grant registry access automatically. When they leave, access should disappear immediately. In practice, sync tends to fail during team transitions and nobody notices until someone can't deploy. The SCIM 2.0 specification (RFC 7643 and RFC 7644) defines the protocol details, but implementation gotchas are common.

SCIM Integration Architecture:

Here's how SCIM actually works (when it's not broken): Your IdP talks to Docker, Docker updates policies. In theory, when someone joins Platform Engineering, they get infrastructure registry access instantly. In practice, SCIM shits itself whenever someone changes teams.

What Actually Happens:

- SCIM sync fails silently when someone moves between departments

- Policy propagation takes anywhere from 5 minutes to "fuck it, I'm manually restarting everything"

- Emergency registry additions require jumping through approval hoops while production burns

- The allowlist becomes a mess of registries that nobody remembers adding, let alone why
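
Silent sync failures are easier to catch when something compares group membership on both sides on a schedule. Here is a minimal sketch, assuming you can already export membership from your IdP and from Docker as plain dictionaries. The function name and data shape are made up for illustration and are not part of any Docker or IdP API.

```python
def find_membership_drift(idp_groups, docker_groups):
    """Compare group membership exported from the IdP and from Docker.

    Both arguments map group name -> iterable of user identifiers.
    Returns {group: {"missing_in_docker": [...], "stale_in_docker": [...]}}
    for every group whose membership differs between the two sides.
    """
    drift = {}
    for group in sorted(set(idp_groups) | set(docker_groups)):
        idp_members = set(idp_groups.get(group, ()))
        docker_members = set(docker_groups.get(group, ()))
        missing = sorted(idp_members - docker_members)  # should have access, doesn't
        stale = sorted(docker_members - idp_members)    # has access, shouldn't
        if missing or stale:
            drift[group] = {"missing_in_docker": missing, "stale_in_docker": stale}
    return drift


# Hypothetical export: bob moved from web to platform-eng, but sync missed it
idp = {"platform-eng": ["alice", "bob"], "web": ["carol"]}
docker = {"platform-eng": ["alice"], "web": ["carol", "bob"]}
print(find_membership_drift(idp, docker))
```

Anything that shows up as stale is a user who still has access they should have lost, which is the failure mode that matters most after a team change.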

Policy-as-Code Reality Check:

GitOps sounds great on paper, but try explaining to your CTO why the hotfix is delayed for a fucking pull request review. Great for governance, terrible for "the build is broken and needs this registry NOW."

SCIM 2.0 integration with Docker Business syncs user and group changes, when it works. Rapid back-to-back team changes are where it falls over most often.

Advanced SCIM Configuration Patterns:

    {
      "schemas": [
        "urn:ietf:params:scim:schemas:core:2.0:Group",
        "urn:docker:2.0:scim:extension:group"
      ],
      "displayName": "Engineering-Platform-Team",
      "members": [
        {
          "value": "user123",
          "$ref": "https://api.docker.com/v2/scim/Users/user123"
        }
      ],
      "urn:docker:2.0:scim:extension:group": {
        "registryAllowlist": [
          "internal.company.com",
          "123456789012.dkr.ecr.us-west-2.amazonaws.com",
          "harbor.platform.internal"
        ],
        "inheritFromParentGroup": true,
        "effectiveDate": "2024-03-15T00:00:00Z"
      }
    }

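Dynamic Allowlist Management: Use SCIM group attributes to automatically manage registry access based on team membership. Platform teams get infrastructure registries, application teams get their specific namespaces, and security teams get vulnerability scanning registries. Treat the extension attributes above as a pattern rather than a guaranteed schema, and check which group attributes your SCIM endpoint actually accepts before building on them.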
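
One way to reason about team-driven access is to compute the union of registries across every group a user belongs to. The sketch below assumes a group-to-registry mapping that lives in your own tooling. The mapping, the function name, and `scanner.company.com` are all hypothetical.

```python
# Hypothetical mapping maintained in your own tooling, not a Docker API object
GROUP_REGISTRIES = {
    "Engineering-Platform-Team": ["internal.company.com", "harbor.platform.internal"],
    "Security-Team": ["internal.company.com", "scanner.company.com"],
}


def effective_allowlist(user_groups, group_registries=GROUP_REGISTRIES):
    """Return the sorted union of registries granted by the user's groups.

    Groups that are not in the mapping contribute nothing.
    """
    allowed = set()
    for group in user_groups:
        allowed.update(group_registries.get(group, ()))
    return sorted(allowed)


print(effective_allowlist(["Engineering-Platform-Team", "Security-Team"]))
```

Returning an empty list for unknown groups keeps the default closed: a user whose groups failed to sync gets no registries rather than all of them.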

Identity Provider Integration Patterns:

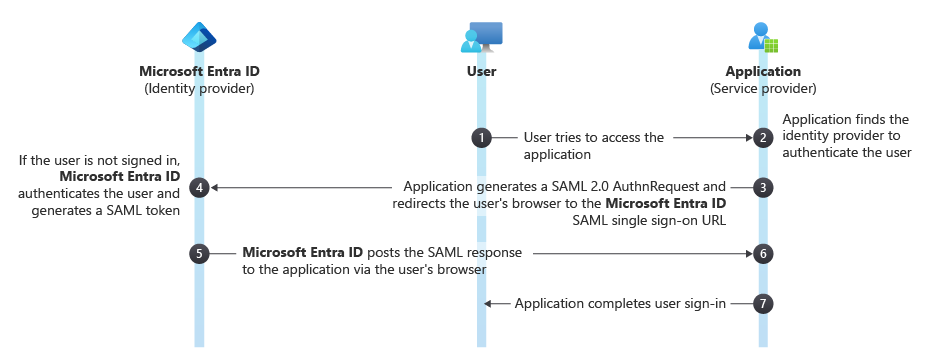

For Microsoft Entra ID integration, you map Entra ID (formerly Azure AD) groups to Docker access. Platform team gets Kubernetes stuff, web devs get Node.js registries. Works fine until some asshole in IT decides to "optimize" the group structure without telling anyone, then nobody can pull images for a week.

Policy-as-Code Implementation

GitOps Security Workflow: Registry policies are stored in Git → CI/CD pipeline validates changes → Automated deployment to Docker Business → Policy propagation to all users. This makes sure every registry change goes through proper paperwork hell.

GitOps-Driven Registry Management:

    # docker-ram-policies.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: docker-ram-policies
      namespace: docker-admin
    data:
      production-registries.json: |
        {
          "allowlist": [
            "docker.io",
            "registry.company.com",
            "123456789012.dkr.ecr.us-west-2.amazonaws.com",
            "harbor.prod.company.com"
          ],
          "environment": "production",
          "lastUpdated": "2024-03-15T04:00:00Z",
          "approvedBy": "platform-team"
        }
      development-registries.json: |
        {
          "allowlist": [
            "docker.io",
            "registry.company.com",
            "dev.company.com",
            "harbor.dev.company.com",
            "quay.io",
            "gcr.io"
          ],
          "environment": "development",
          "lastUpdated": "2024-03-15T04:00:00Z"
        }

Automated Policy Updates: Deploy registry policy changes through CI/CD pipelines with approval workflows, automated testing, and rollback capabilities. When new registries are approved through change management, they're automatically added to the appropriate environment allowlists.

Policy Validation and Testing: Before deploying registry policy changes, automated tests verify that critical base images remain accessible and that newly blocked registries don't affect production workflows.
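
A pre-merge check along these lines could run in the CI pipeline. This is a sketch, assuming policy files shaped like the JSON documents in the ConfigMap above. The set of critical registries and the function name are assumptions for illustration.

```python
# Assumption: registries that production base images are pulled from
CRITICAL_REGISTRIES = frozenset({"docker.io", "registry.company.com"})


def validate_policy_change(old_policy, new_policy, critical=CRITICAL_REGISTRIES):
    """Return a list of human-readable problems with a proposed policy.

    An empty list means the change passes. Each policy is a dict with an
    "allowlist" key holding a list of registry hostnames.
    """
    old = set(old_policy["allowlist"])
    new = set(new_policy["allowlist"])
    problems = []

    # Hard failure: a registry that base images depend on would be blocked
    for registry in sorted(critical - new):
        problems.append(f"critical registry missing: {registry}")

    # Softer warning: something was removed and may still be in use
    for registry in sorted((old - new) - critical):
        problems.append(f"registry removed, confirm nothing still pulls from it: {registry}")

    if len(new_policy["allowlist"]) != len(new):
        problems.append("allowlist contains duplicate entries")

    return problems
```

A CI job would load the old and new JSON, call `validate_policy_change`, and fail the build when the returned list is non-empty.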

Performance Optimization and Policy Propagation

Accelerating Policy Distribution

Docker's default policy propagation window of up to 24 hours is complete garbage for production environments.

Seriously, who designed this? 24 hours to update a fucking allowlist? I've had production outages because policy changes took most of a day to propagate. Picture this: hotfix ready to deploy, registry blocked, site's down, and Docker's like "policy will update sometime tomorrow, probably." Forcing a refresh through sign-out/sign-in automation, plus some strategic configuration, can cut this to under 5 minutes, but you'll need to fight Docker's terrible defaults. Registry performance optimization and load balancing also matter at enterprise scale. The Docker Registry HTTP API documentation covers the mechanics, though community write-ups often provide more practical fixes.

Immediate Policy Propagation Strategies:

- Automated Sign-out/Sign-in Orchestration: Deploy scripts that force Docker Desktop policy refresh across the organization:

    #!/bin/bash
    # Nuclear option when policies won't update

    if pgrep -f "Docker Desktop" > /dev/null; then
        echo "Signing out and back in to force a policy refresh..."

        # Try to save the current context
        CURRENT_CONTEXT=$(docker context show 2>/dev/null)

        docker logout
        sleep 2

        # Re-auth with SSO (if the token still works)
        if [ -f ~/.docker/sso-token ]; then
            docker login --username "$(cat ~/.docker/sso-username)" --password-stdin < ~/.docker/sso-token
        else
            echo "SSO token missing, manual login required"
        fi

        # Restore the context
        if [ -n "$CURRENT_CONTEXT" ]; then
            docker context use "$CURRENT_CONTEXT" || echo "context restore failed"
        fi
    else
        echo "Docker Desktop not running"
    fi

- Registry Mirror Configuration: Deploy internal registry mirrors for frequently-used base images. The mirror hosts should be on the allowlist themselves. Two gotchas: registry-mirrors only applies to Docker Hub pulls, and the hosts key in daemon.json is the list of sockets the daemon listens on, not a registry map. Images from gcr.io or quay.io therefore have to be pulled through a proxy cache by its own name (for example mirror.company.com/gcr and mirror.company.com/quay):

    {
      "registry-mirrors": [
        "https://mirror.company.com",
        "https://docker-mirror.internal.com"
      ],
      "insecure-registries": [
        "internal.company.com:5000"
      ]
    }

Registry Mirror Benefits:

- Cuts pull latency for cached images

- Provides availability during Docker Hub outages

- Enables air-gapped environment support

- Reduces external bandwidth consumption

Advanced Monitoring and Performance Metrics

Real-Time Policy Effectiveness Monitoring:

Container security monitoring architecture: Application containers → Runtime monitoring (Falco) → Security events → Alert aggregation → Incident response. This provides visibility into registry policy violations and container behavior anomalies.

    # Prometheus alerting rules for Docker RAM
    # The metric names below are assumed to come from your own exporter
    groups:
      - name: docker-ram-performance
        rules:
          - alert: DockerRAMPolicyLag
            expr: |
              (time() - docker_ram_policy_last_updated) > 300
            for: 2m
            annotations:
              summary: "Docker RAM policy propagation delayed"
              description: "Policy updates not propagated within 5 minutes"
          - alert: DockerRAMHighDenialRate
            expr: |
              rate(docker_registry_denied_total[5m]) > 0.1
            for: 5m
            annotations:
              summary: "High Docker registry denial rate"
              description: "{{ $value }} registry denials per second"

Performance Analytics Dashboard:

Track key metrics that indicate RAM operational effectiveness:

- Policy propagation time across different user segments

- Registry denial rates by team and time period

- Authentication failure patterns indicating configuration issues

- Network latency impact on registry operations
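
The denial-rate metric can be derived from whatever registry access log you already collect. Here is a small sketch, assuming each event is a dictionary with a `team` name and an `allowed` flag. That event shape is an assumption, not a Docker log format.

```python
from collections import Counter


def denial_rate_by_team(events):
    """Return {team: fraction of that team's registry requests that were denied}.

    events is an iterable of dicts with "team" (str) and "allowed" (bool) keys.
    """
    totals = Counter()
    denied = Counter()
    for event in events:
        totals[event["team"]] += 1
        if not event["allowed"]:
            denied[event["team"]] += 1
    # Every team in totals has at least one event, so the division is safe
    return {team: denied[team] / totals[team] for team in totals}


sample = [
    {"team": "web", "allowed": True},
    {"team": "web", "allowed": False},
    {"team": "platform", "allowed": True},
]
print(denial_rate_by_team(sample))
```

A team whose rate jumps right after a policy change is usually the first sign that a registry they depend on was dropped from the allowlist.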

Enterprise Identity Integration and SSO Hardening

Advanced Single Sign-On Configuration

SSO integration goes beyond basic SAML connectivity - when it works, which isn't always.

Docker Business SSO supports SAML and OIDC. Sounds great on paper. Reality? Some asshole in IT will "improve" the IdP config during lunch and not tell anyone. Suddenly nobody can pull images, deployments are fucked, and guess who gets the angry Slack messages? Not the IT guy who broke it. Docker's SSO also has this amazing ability to fail precisely when you're pushing a critical hotfix at 2am.

SAML 2.0 Advanced Configuration:

    <!-- Enhanced SAML configuration with custom claims -->
    <saml2:AttributeStatement>
      <saml2:Attribute Name="http://schemas.docker.com/enterprise/team">
        <saml2:AttributeValue>platform-engineering</saml2:AttributeValue>
      </saml2:Attribute>
      <saml2:Attribute Name="http://schemas.docker.com/enterprise/registries">
        <saml2:AttributeValue>internal.company.com,harbor.platform.company.com</saml2:AttributeValue>
      </saml2:Attribute>
      <saml2:Attribute Name="http://schemas.docker.com/enterprise/access-level">
        <saml2:AttributeValue>advanced</saml2:AttributeValue>
      </saml2:Attribute>
    </saml2:AttributeStatement>

Multi-Organization Management:

Large enterprises often require multiple Docker organizations with different policies:

- Production Organization: Restricted registry allowlist, enhanced security policies

- Development Organization: Broader registry access, relaxed policies for experimentation

- Contractor Organization: Limited registry access, time-based access controls

Identity Provider Attribute Mapping:

Map organizational attributes to Docker registry policies automatically:

    {
      "attributeMapping": {
        "department": {
          "engineering": {
            "registries": ["internal.company.com", "harbor.eng.company.com"],
            "permissions": ["pull", "push"]
          },
          "security": {
            "registries": ["*"],
            "permissions": ["pull", "audit"]
          },
          "contractors": {
            "registries": ["public-only.company.com"],
            "permissions": ["pull"],
            "expires": "90d"
          }
        }
      }
    }
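
To show how a mapping like this might be consumed, the sketch below resolves a department to its registries and permissions and turns a relative expiry such as "90d" into an absolute timestamp. The resolver and its return shape are assumptions. Only the mapping data comes from the example above.

```python
from datetime import datetime, timedelta, timezone

# Department section of the mapping above
DEPARTMENT_MAPPING = {
    "engineering": {
        "registries": ["internal.company.com", "harbor.eng.company.com"],
        "permissions": ["pull", "push"],
    },
    "security": {"registries": ["*"], "permissions": ["pull", "audit"]},
    "contractors": {
        "registries": ["public-only.company.com"],
        "permissions": ["pull"],
        "expires": "90d",
    },
}


def resolve_access(department, granted_at, mapping=DEPARTMENT_MAPPING):
    """Look up registry access for a department.

    Returns None for unknown departments. An "expires" value of the form
    "<N>d" is converted to granted_at + N days; no expiry yields None.
    """
    entry = mapping.get(department)
    if entry is None:
        return None
    expires_at = None
    expires = entry.get("expires")
    if expires is not None:
        if not expires.endswith("d"):
            raise ValueError(f"unsupported expiry format: {expires!r}")
        expires_at = granted_at + timedelta(days=int(expires[:-1]))
    return {
        "registries": list(entry["registries"]),
        "permissions": list(entry["permissions"]),
        "expires_at": expires_at,
    }


print(resolve_access("contractors", datetime(2024, 3, 15, tzinfo=timezone.utc)))
```

Unknown departments resolve to None on purpose, so a typo in the IdP attribute fails closed instead of falling through to a default grant.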

Zero-Trust Network Integration

Network Security Layers: Network policies create multiple security boundaries: Pod-level egress rules → Namespace isolation → Service mesh policies → External firewall rules. This creates defense-in-depth for registry access beyond just Docker Business controls.

Network-Level Registry Controls:

Combine Docker RAM with network-level controls for defense-in-depth. The Kubernetes network policy docs and the Calico documentation provide implementation guidance. For broader hardening, follow NIST SP 800-190 (the Application Container Security Guide) and the CIS Docker Benchmark. A Calico policy for registry egress looks roughly like this:

    # Calico NetworkPolicy for registry access control
    apiVersion: projectcalico.org/v3
    kind: NetworkPolicy
    metadata:
      name: docker-registry-access
      namespace: development
    spec:
      selector: app == 'docker-desktop'
      types:
        - Egress
      egress:
        - action: Allow
          protocol: TCP  # required whenever ports are listed
          destination:
            nets:
              - 10.0.0.0/8  # Internal registries
            ports:
              - 443
              - 5000
        - action: Allow
          destination:
            # Calico selectors are expressions, not key/value maps
            namespaceSelector: name == 'approved-registries'
        - action: Deny
          destination: {}

Certificate-Based Registry Authentication:

Deploy client certificates for additional registry security:

    #!/bin/bash
    # Deploy client certificates for enhanced registry security
    set -euo pipefail

    CERT_DIR=~/.docker/certs.d/registry.company.com

    # Generate client certificate for registry access
    openssl genrsa -out client.key 4096
    openssl req -new -key client.key -out client.csr \
        -subj "/CN=docker-client/O=company/OU=engineering"

    # Sign with company CA
    openssl x509 -req -in client.csr -CA company-ca.crt -CAkey company-ca.key \
        -CAcreateserial -out client.crt -days 365

    # Install in Docker Desktop. Docker treats *.crt files in this directory as
    # CA roots, so the client certificate has to use the .cert extension.
    mkdir -p "$CERT_DIR"
    cp client.crt "$CERT_DIR/client.cert"
    cp client.key "$CERT_DIR/client.key"
    cp company-ca.crt "$CERT_DIR/ca.crt"

Enhanced Container Isolation Integration

ECI Reality Check: Registry controls stop most bad stuff, but ECI is your insurance policy for when something malicious still makes it through. Because it will.

Combining RAM with ECI (When It Actually Works)

ECI is supposed to prevent container breakouts even if sketchy images slip past your registry filters. In practice, it also breaks half your development workflows.

Enhanced Container Isolation adds runtime security on top of registry filtering, which sounds great until developers start complaining that their legitimate containers can't access the files they need. The goal is defense-in-depth, but the reality is debugging why perfectly normal containers are getting blocked by overly aggressive isolation policies.

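ECI Configuration for RAM Environments (an illustrative policy model; in Docker Desktop itself, ECI is turned on and locked through the enhancedContainerIsolation setting in admin-settings.json):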

    {
      "eci": {
        "enabled": true,
        "settings": {
          "privilegedAccess": false,
          "hostNetworking": false,
          "hostPidNamespace": false,
          "hostFileSystem": "read-only",
          "syscallFilter": "restricted",
          "capabilities": "minimal"
        },
        "registryPolicies": {
          "trustedRegistries": [
            "internal.company.com",
            "harbor.company.com"
          ],
          "untrustedRegistryRestrictions": {
            "networkAccess": "none",
            "fileSystemAccess": "none",
            "privilegeEscalation": false
          }
        }
      }
    }

Runtime Security Monitoring:

Combine ECI with runtime monitoring to detect policy violations:

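    # Falco rules for RAM + ECI monitoring
    # The three macros below are illustrative; match hosts and ports to your allowlist.
    # container, outbound and spawned_process come from Falco's default ruleset.

    - macro: registry_port
      condition: fd.sport in (443, 5000)

    - macro: registry_allowed
      condition: >
        fd.sip.name in ("internal.company.com", "harbor.company.com")

    - macro: image_from_allowed_registry
      condition: >
        (container.image.repository startswith "internal.company.com/" or
         container.image.repository startswith "harbor.company.com/")

    - rule: Unauthorized Registry Access Attempt
      desc: Detect attempts to access non-allowlisted registries
      condition: >
        container and
        outbound and
        registry_port and
        not registry_allowed
      output: >
        Unauthorized registry access (user=%user.name command=%proc.cmdline
        connection=%fd.name container=%container.name)
      priority: ERROR  # Falco has no HIGH level

    - rule: Container Privilege Escalation with External Registry
      desc: Detect privilege escalation in containers from external registries
      condition: >
        spawned_process and
        container and
        proc.name in (sudo, su, doas) and
        not image_from_allowed_registry
      output: >
        Privilege escalation attempt in external registry container
        (user=%user.name command=%proc.cmdline container=%container.name)
      priority: CRITICAL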

Air-Gapped and Disconnected Environment Configuration

Complete Registry Isolation:

For highly secure environments, combine RAM with air-gapped containers:

    {
      "air-gapped": {
        "enabled": true,
        "allowedRegistries": [
          "internal.company.com",
          "offline.registry.company.com"
        ],
        "externalRegistryBlocking": "enforced",
        "offlineImageManagement": {
          "importPath": "/secure-storage/docker-images",
          "verificationRequired": true,
          "signatureValidation": "mandatory"
        }
      }
    }

Image Import and Verification Workflow:

    #!/bin/bash
    # Secure image import for air-gapped environments
    set -euo pipefail

    # Verify image signature before import
    docker trust inspect "external-registry.com/app:v1.0" || {
        echo "Image signature verification failed"
        exit 1
    }

    # Export verified image
    docker save "external-registry.com/app:v1.0" | gzip > app-v1.0.tar.gz

    # Transfer to air-gapped environment (secure media)

    # Import on air-gapped system
    docker load < app-v1.0.tar.gz

    # Tag for internal registry
    docker tag "external-registry.com/app:v1.0" "internal.company.com/app:v1.0"

    # Push to internal registry
    docker push "internal.company.com/app:v1.0"

    # Update RAM allowlist to include only internal registry
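
The transfer step is where an archive can be corrupted or swapped, so it helps to record a checksum on the connected side and verify it before loading on the air-gapped side. This is a minimal sketch using only the standard library. The function names are illustrative, and it complements signature verification rather than replacing it.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Stream a file through SHA-256 so large image archives fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_archive(path, expected_sha256):
    """Return True when the archive matches the checksum recorded at export time."""
    return sha256_of_file(path) == expected_sha256.strip().lower()
```

On the connected host, record `sha256_of_file("app-v1.0.tar.gz")` and carry it separately from the media. On the air-gapped host, call `verify_archive` and refuse to run the load step if it returns False.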

Operational Excellence and Incident Response Automation

Automated Incident Response Procedures

Manual incident response for registry access issues doesn't scale. Automation reduces resolution time from "crying in the datacenter" to "actually fixing things".

At 3am when production is down and your deployment is blocked because some registry got removed from the allowlist, you don't want to be manually adding it through Docker's admin UI. I've been that guy - site's burning, everyone's on the incident call, and I'm trying to navigate Docker's portal while half asleep. It sucks.

Automated Emergency Registry Addition:

    #!/usr/bin/env python3
    # Emergency registry addition script

    from datetime import datetime, timedelta, timezone

    import requests  # used by whatever add_registry_to_allowlist ends up calling


    class DockerRAMEmergencyManager:
        def __init__(self, api_key, org_name):
            self.api_key = api_key
            self.org_name = org_name
            self.base_url = "https://hub.docker.com/v2"

        def emergency_registry_addition(self, registry_url, justification, approver):
            # Log the emergency request and set a review date 7 days out
            now = datetime.now(timezone.utc)
            emergency_log = {
                "timestamp": now.isoformat(),
                "registry": registry_url,
                "justification": justification,
                "approver": approver,
                "status": "emergency_approved",
                "review_date": (now + timedelta(days=7)).isoformat(),
            }

            # Add to allowlist
            response = self.add_registry_to_allowlist(registry_url)

            if response.status_code == 200:
                self.schedule_emergency_review(emergency_log)
                self.force_policy_refresh()
                return {
                    "success": True,
                    "message": f"Emergency registry {registry_url} added",
                    "review_scheduled": emergency_log["review_date"],
                }
            return {
                "success": False,
                "error": f"Failed to add registry: {response.text}",
            }

        def add_registry_to_allowlist(self, registry_url):
            # Placeholder: wire this to the admin API or automation your org uses.
            # No specific Docker endpoint is assumed here.
            raise NotImplementedError

        def schedule_emergency_review(self, emergency_log):
            # Placeholder: e.g. open a ticket due on emergency_log["review_date"]
            raise NotImplementedError

        def force_policy_refresh(self):
            # Trigger policy propagation
            # TODO: implement this
            pass
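
Emergency additions only stay safe if the promised review actually happens. A small helper along these lines could run daily and flag entries whose review date has passed. It assumes the log entries are stored somewhere as dictionaries with the `registry` and `review_date` fields used above. The function name is made up.

```python
from datetime import datetime, timezone


def overdue_reviews(emergency_logs, now=None):
    """Return registries whose emergency approval is past its review date.

    review_date is an ISO 8601 string; timestamps without an offset are
    treated as UTC.
    """
    now = now or datetime.now(timezone.utc)
    overdue = []
    for entry in emergency_logs:
        review_date = datetime.fromisoformat(entry["review_date"])
        if review_date.tzinfo is None:
            review_date = review_date.replace(tzinfo=timezone.utc)
        if review_date <= now:
            overdue.append(entry["registry"])
    return overdue
```

Anything this returns is either due for a proper approval or due for removal. Leaving it in place is how the allowlist fills up with registries nobody can explain.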

Intelligent Registry Request Analysis:

    def analyze_registry_request(registry_url, team, use_case):
        """AI-assisted registry request analysis and risk assessment"""
        # The helper functions called below, including calculate_risk_score,
        # are placeholders to implement against your own data sources.
        risk_factors = {
            "domain_reputation": check_domain_reputation(registry_url),
            "ssl_certificate": verify_ssl_certificate(registry_url),
            "registry_type": classify_registry_type(registry_url),
            "team_history": analyze_team_request_history(team),
            "use_case_validity": validate_use_case(use_case),
        }

        risk_score = calculate_risk_score(risk_factors)

        if risk_score < 30:
            return "auto_approve"
        elif risk_score < 70:
            return "manual_review_required"
        else:
            return "deny_with_security_review"
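
The scoring step is left undefined above. One plausible shape is a weighted average scaled to 0-100, so that the 30 and 70 thresholds have something concrete to compare against. This sketch assumes each factor has already been normalized to a value between 0.0 (no concern) and 1.0 (maximum concern). The weights are invented for illustration and would need tuning.

```python
# Hypothetical weights; they sum to 100 so the result lands on a 0-100 scale
RISK_WEIGHTS = {
    "domain_reputation": 30,
    "ssl_certificate": 20,
    "registry_type": 20,
    "team_history": 10,
    "use_case_validity": 20,
}


def calculate_risk_score(risk_factors, weights=RISK_WEIGHTS):
    """Weighted average of factor scores, scaled to 0-100.

    Missing factors count as maximally risky, and out-of-range values are
    clamped to [0.0, 1.0].
    """
    total_weight = sum(weights.values())
    weighted = 0.0
    for name, weight in weights.items():
        value = risk_factors.get(name, 1.0)
        value = min(max(value, 0.0), 1.0)
        weighted += weight * value
    return round(100 * weighted / total_weight, 1)
```

Treating a missing factor as worst-case means a failed reputation lookup pushes a request toward manual review instead of quietly auto-approving it.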

Advanced Monitoring and Alerting Automation

Predictive Policy Management:

    # Advanced monitoring configuration
    monitoring:
      registry_usage_analytics:
        enabled: true
        prediction_window: "30d"
        auto_suggest_additions: true
        usage_threshold: 0.1  # Suggest adding if >10% of requests
      security_monitoring:
        enabled: true
        threat_intel_integration: true
        automated_blocking:
          suspicious_domains: true
          newly_registered_domains: true
          known_malicious_registries: true
      compliance_reporting:
        enabled: true
        export_format: ["json", "csv", "pdf"]
        schedule: "weekly"
        recipients: ["security@company.com", "compliance@company.com"]
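
The `usage_threshold` setting implies a simple rule: suggest a registry when it is not yet allowlisted and accounts for more than 10% of all requests. Here is a sketch of that rule, assuming request counts per registry are available from your own logs. The function name and input shape are hypothetical.

```python
def suggest_additions(request_counts, allowlist, usage_threshold=0.1):
    """Suggest non-allowlisted registries with a large share of requests.

    request_counts maps registry hostname -> number of requests in the
    analysis window. A registry is suggested when its share of all requests
    is strictly greater than usage_threshold.
    """
    total = sum(request_counts.values())
    if total == 0:
        return []
    allowed = set(allowlist)
    return sorted(
        registry
        for registry, count in request_counts.items()
        if registry not in allowed and count / total > usage_threshold
    )


counts = {"docker.io": 80, "ghcr.io": 15, "quay.io": 5}
print(suggest_additions(counts, allowlist=["docker.io"]))
```

The output is a suggestion list for a human to review, not an automatic addition, which keeps the approval step in the loop.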

Automated Policy Optimization:

    def optimize_registry_allowlist(current_allowlist):
        """Suggest allowlist changes based on usage patterns"""
        # get_registry_usage_last_90_days, get_denial_statistics and
        # analyze_business_need are placeholders for your own data sources.
        usage_data = get_registry_usage_last_90_days()

        recommendations = {
            "unused_registries": [],
            "frequently_denied": [],
            "consolidation_opportunities": [],
        }

        # Allowlisted registries with no pulls are candidates for removal
        for registry in current_allowlist:
            if usage_data.get(registry, {}).get("pulls", 0) == 0:
                recommendations["unused_registries"].append(registry)

        # get_denial_statistics() is assumed to return {registry: denial_count}
        for registry, denials in get_denial_statistics().items():
            if denials > 50:  # High denial count
                recommendations["frequently_denied"].append({
                    "registry": registry,
                    "denials": denials,
                    "business_justification": analyze_business_need(registry),
                })

        return recommendations

The advanced configuration patterns covered here enable enterprise-scale Docker Registry Access Management that's both secure and operationally efficient. These approaches reduce administrative overhead while providing the security controls required for regulated industries and large-scale development organizations.

Next steps: Implement these advanced patterns gradually, starting with automation of your most common administrative tasks, then expanding to include predictive management and sophisticated security integrations.