Google should ship the Pixel 10 with C2PA Content Credentials built in, but knowing Google, they'll probably announce it, get everyone excited, then kill it in two years like they did with Google Reader. If they actually followed through, Content Credentials would work like a digital receipt that proves your photo is real and not AI-generated bullshit.

What the Hell Is C2PA?

C2PA (the Coalition for Content Provenance and Authenticity standard) is basically a cryptographic signature system for photos and videos. When you snap a pic with the Pixel 10, the phone would sign it with a digital certificate that says "yes, this was taken by a real camera at this exact time and place." The crypto stuff behind this is solid - it's standard public-key signing with X.509 certificates, the same family of math that secures HTTPS and Bitcoin transactions.

The signature covers a timestamp, location (if you allow it), device info, and a hash of the actual image data. If someone edits the photo, the hash no longer matches what was signed, verification fails, and you know it's been tampered with.
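To make the "hash plus signature" idea concrete, here's a minimal sketch. It is not the real C2PA format: actual manifests use certificate-based signatures, while this toy version swaps in an HMAC with a made-up `DEVICE_KEY` so it runs on the standard library alone. The function names and manifest fields are my own invention for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical stand-in for the private key locked inside the phone's secure hardware.
DEVICE_KEY = b"demo-device-key"


def sign_photo(image_bytes, timestamp, device, location=None):
    """Build a toy manifest that includes the image hash, then sign the manifest."""
    manifest = {
        "timestamp": timestamp,
        "device": device,
        "location": location,  # stays None if the user declined location access
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode()
    # HMAC is a simplification; real C2PA uses asymmetric signatures.
    signature = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return manifest, signature


def verify_photo(image_bytes, manifest, signature):
    """Return True only if the manifest is untouched and the pixels still match it."""
    payload = json.dumps(manifest, sort_keys=True).encode()
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        return False  # manifest was altered or signed with a different key
    return hashlib.sha256(image_bytes).hexdigest() == manifest["image_sha256"]


original = b"\xff\xd8 pretend these are JPEG bytes \xff\xd9"
manifest, signature = sign_photo(original, "2025-01-01T12:00:00Z", "Pixel 10")
```

Change a single byte of `original`, or any field in `manifest`, and `verify_photo` returns `False`. That's the whole trick: the signature doesn't stop edits, it just makes them detectable.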

The Reality Check - What Actually Works

If Google actually implemented C2PA properly (big if), here's what that would theoretically give you:

The good news: photos would get tamper-evident signatures that are genuinely hard to forge. Hardware-based signing would keep the private key inside the phone's crypto chip, making it nearly impossible to fake with software alone.

The bad news: Even if it worked, you'd probably only see it inside Google's ecosystem. You'd get a checkmark in Google Photos showing authenticity, but share it via WhatsApp or Instagram? The signature gets stripped along with the rest of the metadata the moment the app recompresses your JPEG.

The realistic impact: by my rough estimate, C2PA would add about 15KB to each photo, which is tiny per shot but adds up for heavy camera users. Battery impact would be minimal, but verification checks would probably slow down the gallery app.
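To put the 15KB figure in perspective, here's some back-of-the-envelope arithmetic. The 15KB number is the rough estimate from above, not a measurement, and the 3MB photo size in the usage note is just an assumed typical JPEG.

```python
MANIFEST_BYTES = 15 * 1024  # rough per-photo estimate from the text, not a measured value


def manifest_overhead_mb(photo_count, manifest_bytes=MANIFEST_BYTES):
    """Total extra storage, in MiB, for a given number of signed photos."""
    return photo_count * manifest_bytes / (1024 * 1024)


def overhead_percent(photo_bytes, manifest_bytes=MANIFEST_BYTES):
    """Manifest size as a percentage of one photo's size."""
    return 100 * manifest_bytes / photo_bytes
```

For example, `manifest_overhead_mb(10_000)` comes out a bit under 150 MiB for a ten-thousand-photo library, and `overhead_percent(3 * 1024 * 1024)` is roughly half a percent of an assumed 3MB photo. Noticeable on a big library, but not what fills your phone.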

This would be amazing if Google delivered on it. But given Google's track record with walled gardens, I guarantee the verification would work perfectly in Google Photos and disappear faster than free pizza at a startup the moment you share anywhere else. Classic Google - build something technically impressive that only works in their ecosystem.

Why This Matters (And Why It Doesn't)

Look, AI-generated images are getting scary good. Midjourney and DALL-E 3 can create photos that fool most people. With C2PA, Google would be fighting back with the one thing AI can't fake: hardware-level cryptographic signatures.

But here's the problem - this only helps if platforms actually check the signatures. Adobe, Microsoft, and Meta all say they support C2PA, but implementation has been slow as hell.

The C2PA specification is technically solid, and the Content Authenticity Initiative has backing from major players. But platform adoption requires cooperation from Twitter/X, TikTok, Snapchat, and other social media giants who haven't fully committed to verification workflows.

The User Experience (If It Existed)

Taking photos would feel exactly the same - the signing would happen automatically in the background. Theoretically, you'd get some kind of authenticity indicator in Google Photos showing whether images are verified, edited, or unverified.

The UX would probably be decent, assuming Google doesn't fuck it up like they did with Google Buzz. Most crypto features require a PhD to understand, but Google's good at making complex things simple.

The real problem would be explaining to your mom why the "verified" badge vanishes when her photos are shared outside Google Photos. Good luck with that conversation.

Pro tip: If this actually existed and you wanted to test C2PA, you'd discover that WhatsApp strips it, Telegram strips it, and pretty much anything else that recompresses your image strips it too. Sending the original file untouched is usually the only way it survives. This would be a nightmare to debug when platforms strip signatures without warning. Only Google Photos and maybe Adobe's tools would preserve the signatures.

I guarantee you'd spend 2 hours wondering why your "verified authentic" photo shows as unverified after sharing it to Slack, only to discover that Slack's image processing pipeline strips all metadata including C2PA signatures. Good luck explaining that to your non-technical colleagues.

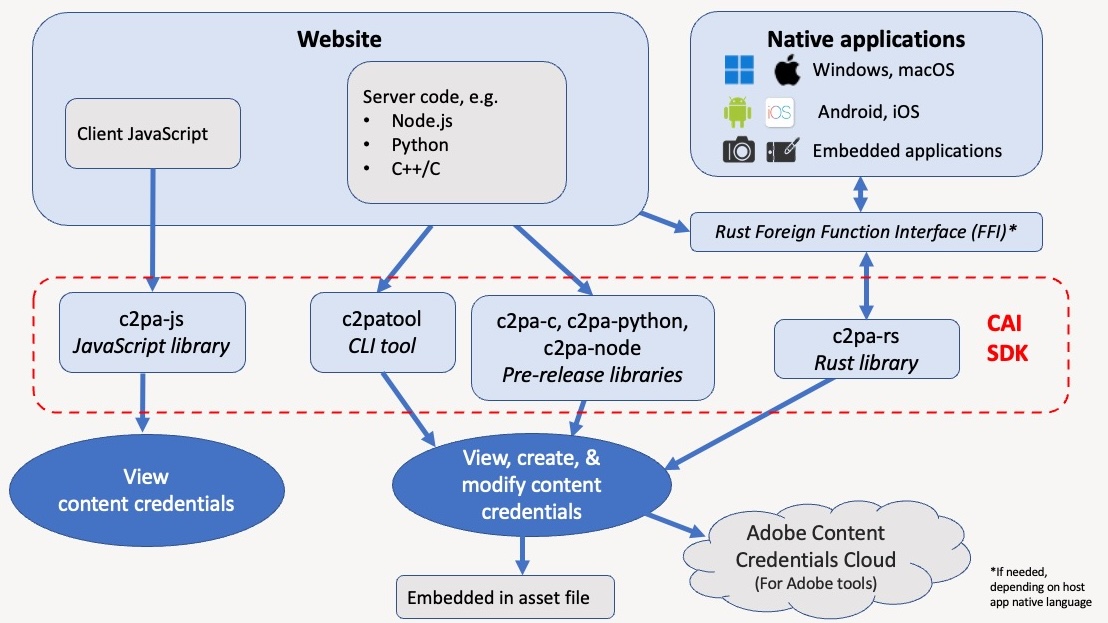

For technical details, check the C2PA Technical Specification, Project Origin's implementation, and Adobe's open source tools. The Coalition for Content Provenance and Authenticity (C2PA) maintains the standard, while Digimarc's digital watermarks provide another layer of protection.