The Billing Setup Will Fail Twice Before It Works

Here's what actually happens when you try to get Claude API working:

First, you'll hit console.anthropic.com thinking this will be quick. Nope. The sign-up form rejects your perfectly valid email because their spam filter hates custom domains. Learned this after trying 3 different work emails. Just use Gmail - it's the only thing that works reliably.

Real account setup process: Check Anthropic's account setup guide for the official steps, but expect delays not mentioned in their docs.

Step 1: Navigate The Billing Maze

Email verification takes forever - not the "instant" bullshit they promise. Then you hit the billing wall. Claude API has zero free tier, unlike OpenAI's $5 credit. You're dropping $5 minimum before making one goddamn API call. Check the current Claude pricing structure for the latest minimum billing requirements.

The credit card rejection dance:

- Your card gets declined the first time (fraud protection)

- Second attempt works, but charges you immediately

- Phone verification randomly required (usually for US cards)

- International cards need manual review (24-48 hour delay)

Pro tip: Use a business credit card if you have one - personal cards trigger more fraud alerts. This pattern is common across AI services - see Google Cloud AI pricing and Azure OpenAI billing for comparison. If you're an enterprise, prepare for even more bureaucracy with Anthropic's Claude for Work.

Step 2: The Phone Verification Trap

Here's the fun part: phone verification is "optional" until it isn't. Random accounts get flagged and you'll get locked out until you verify. SMS codes take 5-15 minutes to arrive, and the form times out after 10 minutes.

If you're outside the US: Good luck. International SMS is spotty and customer support takes forever to respond. I learned this the hard way when trying to set up Claude for a London client - what should have been a 10-minute setup turned into a three-day ordeal. Their SMS system kept fucking up the verification codes, and customer support took 4 days to manually verify the account.

Gotcha that cost me an hour: The console sometimes breaks in older browser versions. Had to switch to Firefox to even see the verification form properly. Of course this isn't mentioned anywhere in their docs.

Step 3: API Key Generation (The Part That Actually Works)

Once you're past the billing nightmare:

- Hit API Keys in the sidebar

- Click Create Key

- Name it something useful like "dev-testing" or "prod-client-project"

- Copy it NOW - they don't show it again and recovering keys is a pain

Your key starts with sk-ant-api03- followed by a long random string.

Security stuff that actually matters (not the usual "best practices" bullshit):

- Stick it in a

.envfile:ANTHROPIC_API_KEY=sk-ant-api03-your-key-here - Add

.envto.gitignoreNOW before you accidentally commit it (I've done this twice) - For production, use AWS Secrets Manager or HashiCorp Vault - not environment variables on the server

The Models and Costs That'll Surprise You

Current models as of September 2025 (pricing changes monthly, so check the console):

| Model | What It's Actually Good For | Input (per 1M tokens) | Output (per 1M tokens) |

|---|---|---|---|

| Claude Haiku 3.5 | Quick responses, cheap experiments | $0.80 | $4.00 |

| Claude Sonnet 4 | Most stuff, best balance of speed and intelligence | $3.00 | $15.00 |

| Claude Opus 4.1 | Complex reasoning, expensive as hell | $15.00 | $75.00 |

Reality: I've been using Sonnet 4 in production since June. It's good enough for most tasks and won't bankrupt you like Opus will.

Reality check: My first production deploy in July - estimated like $50/month, actual bill ended up being $170 or $180, something crazy like that. Why? Token counting is a mindfuck. A "simple question" is ~50 tokens, but Claude loves to ramble and hits 500+ tokens in the response. One customer support chatbot we built burned through a bunch of money in a single day when a user started asking it to write entire Python scripts. Compare with GPT-4 pricing and Gemini pricing for context.

Token counting is bullshit: Their "4 characters = 1 token" is approximate. JSON, code blocks, and special characters screw with the count. Always budget 25% more than estimates. Use Anthropic's tokenizer for actual counts.

Testing Your Setup (And Why It'll Fail)

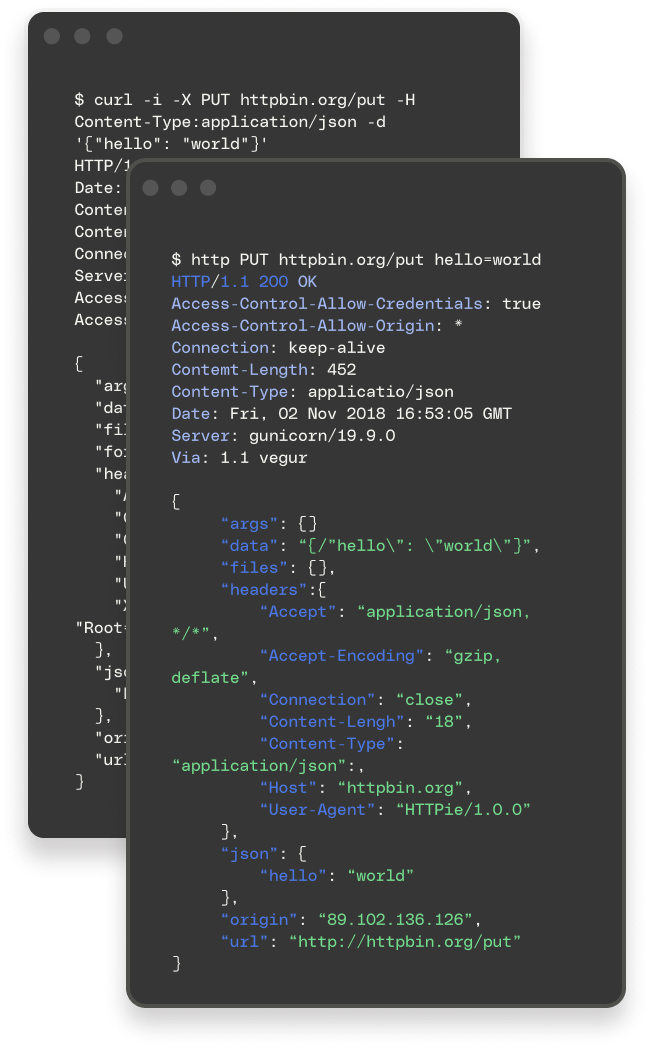

Here's the cURL. It'll probably fail because everything does:

## Test API endpoint - requires POST with authentication headers

curl -X POST \"https://api.anthropic.com/v1/messages\" \

-H \"Content-Type: application/json\" \

-H \"x-api-key: $ANTHROPIC_API_KEY\" \

-H \"anthropic-version: 2023-06-01\" \

-d '{ \

\"model\": \"claude-sonnet-4-20250514\",\

\"max_tokens\": 100,\

\"messages\": [\

{\"role\": \"user\", \"content\": \"Hello! Is this working?\"}\

]\

}'

When it fails (and it will):

- 401 Unauthorized: Your API key is wrong or you typoed it

- 402 Payment Required: Billing isn't set up properly (most common)

- 429 Rate Limited: You hit their 50 requests/minute limit

- 400 Bad Request: Missing the

anthropic-versionheader (this one's stupid but required)

Nuclear option: If nothing works, delete your API key and make a new one. Sometimes their key generation glitches. Check Anthropic's status page first.

What's Next

Assuming you got a JSON response back (congrats!), you're ready for the real pain: actually integrating this into your app. The next sections cover how to do this in Python and JavaScript without losing your mind.

![How to Get Claude API Key EASILY (FULL GUIDE) [2024] by WiseUp thumbnail](/_next/image?url=https%3A%2F%2Fimg.youtube.com%2Fvi%2F5yf-8Wz1CDM%2Fmaxresdefault.jpg&w=3840&q=75)