Before MCP, connecting AI tools to your actual data was like having a different key for every door in a 50-story building. Every integration was a special snowflake - custom API wrappers, authentication handling, and data formatting for each service. Want Claude to read your Slack messages? Build a custom integration. Need it to query your database? Another fucking custom integration. Scale this across an enterprise with dozens of systems and you're looking at months of engineering work just to get basic connectivity working.

The Real Problem MCP Addresses

Integration Hell: We tried connecting Claude to our customer database last year. Took 3 weeks to build a secure wrapper that could handle our OAuth setup, another week to make it not crash when someone queried a large table, and 2 more weeks of security review. By the time we were done, the use case had evolved and we needed different data access patterns.

Authentication Chaos: Every AI tool integration needed its own auth flow. Our Okta admin was getting pinged constantly for new service accounts, API keys were scattered across config files, and nobody knew which tokens had access to what. One intern accidentally committed database credentials to GitHub because the auth setup was so convoluted.

Maintenance Burden: Six months after building custom integrations, half of them were broken. APIs changed, authentication methods got updated, and the error handling wasn't robust enough for production load. We were spending more time maintaining AI integrations than building new features.

What MCP Actually Does

MCP standardizes the boring stuff so you can focus on the useful stuff. It's JSON-RPC 2.0 with a specific schema for how AI applications discover and use external tools and data sources.

Standardized Discovery: Instead of hardcoding which tools are available, MCP clients can ask servers "what can you do?" and get back a structured list of capabilities. This means you can swap out backend systems without changing client code.
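To make the "what can you do?" exchange concrete, here's a minimal sketch of the discovery round trip as raw JSON-RPC. The `tools/list` method and the `name` / `description` / `inputSchema` fields come from the MCP spec; the `query_customers` tool and the sample response are made up for illustration, and a real client would normally use an SDK instead of hand-rolling messages.

```python
import json


def make_list_tools_request(request_id: int) -> str:
    """Build the JSON-RPC 2.0 request that asks an MCP server for its tools."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def tool_names(response_text: str) -> list[str]:
    """Pull tool names out of a tools/list response, raising on a JSON-RPC error."""
    response = json.loads(response_text)
    if "error" in response:
        message = response["error"].get("message", "unknown error")
        raise RuntimeError(f"server returned an error: {message}")
    return [tool["name"] for tool in response["result"]["tools"]]


# Hypothetical response from an imaginary customer-database server.
SAMPLE_RESPONSE = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "query_customers",
                "description": "Look up a customer record by email",
                "inputSchema": {
                    "type": "object",
                    "properties": {"email": {"type": "string"}},
                    "required": ["email"],
                },
            }
        ]
    },
})

print(make_list_tools_request(1))
print(tool_names(SAMPLE_RESPONSE))
```

The client never hardcodes `query_customers`. It learns the name and the input schema at runtime, which is what lets you swap the backend without touching client code.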

Unified Authentication: Authorization for remote (HTTP) MCP servers is built on OAuth 2.1, so your existing identity infrastructure can sit in front of them. No more per-service auth flows or scattered API keys.

Transport Flexibility: Local servers use stdio (fast, simple), remote servers use HTTP with Server-Sent Events for streaming. Same protocol, different pipes.
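A rough sketch of "same protocol, different pipes": the same JSON-RPC message gets a trailing newline for stdio, or becomes a POST body for HTTP. The endpoint URL is a placeholder, the helper names are mine, and the HTTP request is only built here, not sent. Treat the headers as my reading of the HTTP transport, not a complete client.

```python
import json
import urllib.request

# Hypothetical endpoint; a real remote server publishes its own URL.
REMOTE_URL = "https://mcp.example.com/mcp"


def encode_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message.

    json.dumps escapes newlines inside strings, so the output is a single line.
    """
    return json.dumps(message).encode("utf-8")


def frame_for_stdio(message: dict) -> bytes:
    """stdio transport: one newline-terminated message written to the server's stdin."""
    return encode_message(message) + b"\n"


def build_http_request(message: dict, url: str = REMOTE_URL) -> urllib.request.Request:
    """HTTP transport: the same bytes become a POST body (built here, not sent)."""
    return urllib.request.Request(
        url,
        data=encode_message(message),
        headers={
            "Content-Type": "application/json",
            # The server may answer with plain JSON or with an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


ping = {"jsonrpc": "2.0", "id": 1, "method": "ping"}
stdio_frame = frame_for_stdio(ping)
http_request = build_http_request(ping)
```

Everything above the transport (message shape, method names, ids) stays identical, which is why moving a server from local to remote is mostly a deployment question.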

Real Implementation Gotchas You'll Hit

SSO Integration Pain: The MCP OAuth spec is solid but implementing it with enterprise SSO providers like Okta takes patience. The token validation flow broke 4 times during our pilot because our identity team changed token claim formats without warning.
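One defensive habit that would have saved us a couple of those breakages: normalize claims in one place and fail loudly on shapes you don't recognize. This is only a sketch. It assumes the token signature, issuer, and expiry were already verified elsewhere, that group membership arrives in a claim called `groups` (a hypothetical name, yours may differ), and that group names contain no spaces or commas.

```python
def extract_groups(claims: dict, claim_name: str = "groups") -> list[str]:
    """Return a sorted, de-duplicated group list from already-verified token claims.

    Accepts either a list of strings or a single comma/space-delimited string,
    and raises ValueError on anything else instead of guessing.
    """
    raw = claims.get(claim_name)
    if raw is None:
        raise ValueError(f"token has no '{claim_name}' claim")
    if isinstance(raw, str):
        parts = raw.replace(",", " ").split()
    elif isinstance(raw, (list, tuple)) and all(isinstance(g, str) for g in raw):
        parts = [g.strip() for g in raw]
    else:
        raise ValueError(f"unexpected shape for '{claim_name}': {type(raw).__name__}")
    return sorted({p for p in parts if p})


print(extract_groups({"groups": ["support-agents", "billing-admins"]}))
print(extract_groups({"groups": "support-agents, billing-admins"}))
```

The point is less the parsing and more the single choke point: when the identity team changes a format again, you get one clear error in one function instead of silent permission drift.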

Permission Modeling Complexity: MCP servers need to understand your existing RBAC models. We spent 2 weeks figuring out how to map Okta groups to database access permissions in a way that didn't require hardcoding business logic into the MCP server.
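The shape we were aiming for looks roughly like this: the group-to-permission mapping lives in data, and the server only knows how to evaluate it. The group names, table names, and the `Grant` type are all hypothetical, and the policy is inlined here only to keep the sketch self-contained. In practice it would be loaded from config or a policy service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One permission: which actions are allowed on which table."""
    table: str
    actions: frozenset


# Hypothetical policy, keyed by identity-provider group name.
GROUP_POLICY = {
    "support-agents": [Grant("customers", frozenset({"read"}))],
    "billing-admins": [
        Grant("customers", frozenset({"read"})),
        Grant("invoices", frozenset({"read", "write"})),
    ],
}


def is_allowed(groups, table, action, policy=GROUP_POLICY) -> bool:
    """True if any of the caller's groups grants `action` on `table`.

    Unknown groups grant nothing, so the default is deny.
    """
    return any(
        grant.table == table and action in grant.actions
        for group in groups
        for grant in policy.get(group, [])
    )


print(is_allowed(["support-agents"], "customers", "read"))
print(is_allowed(["support-agents"], "invoices", "write"))
```

Changing who can touch what then means editing the policy data, not redeploying the MCP server with new business logic baked in.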

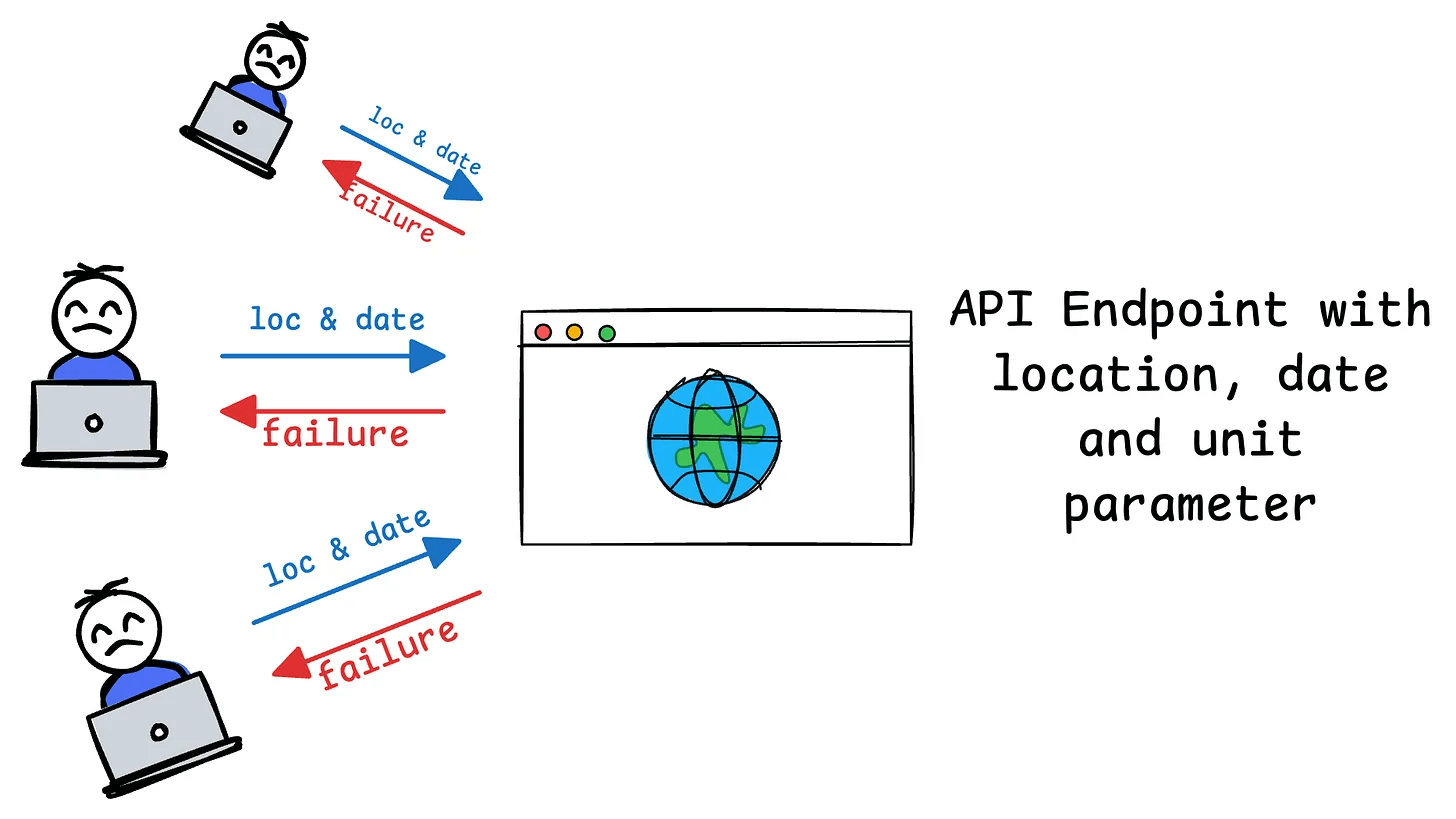

Error Handling Reality: The docs say MCP servers should gracefully handle errors, but they don't tell you Claude will hammer your backend with retries when shit breaks. Found this out the hard way when a database timeout triggered 3 retries with exponential backoff that basically DDoSed our own API. Rate limiting at the MCP server level isn't a nice-to-have, it's survival.
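For the rate limiting, a token bucket in front of the backend call is about the simplest thing that works. This is a minimal single-process sketch, not what we run in production: the class name and numbers are illustrative, it isn't thread-safe, and a multi-instance deployment would need shared state (Redis or similar).

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then at most `rate_per_sec` calls per second."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Refill based on elapsed time, then spend one token if one is available."""
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Illustrative numbers: bursts of 5, then 2 backend calls per second.
bucket = TokenBucket(rate_per_sec=2.0, capacity=5)
decisions = [bucket.allow() for _ in range(7)]
print(decisions)
```

When `allow()` returns False, the server should answer with an error right away instead of queueing the call, so a retry storm hits the limiter and not the database.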

Monitoring and Debugging: When MCP connections fail, the error messages are about as useful as a chocolate teapot. "Connection closed unexpectedly" could mean network timeout, permissions issue, or the server just decided to take a nap. Building actual logging and monitoring into your MCP servers isn't optional - it's the difference between debugging for 5 minutes vs spending your whole weekend figuring out what broke.
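Here's roughly what "actual logging" can look like: a decorator that wraps each tool handler and emits one structured line per call with the tool name, a call id, the duration, and the exception type on failure. The decorator, logger name, and field names are my own choices, not part of any MCP SDK.

```python
import json
import logging
import time
import uuid
from functools import wraps

logger = logging.getLogger("mcp_server")  # hypothetical logger name


def logged_tool(name: str):
    """Wrap a tool handler so every call logs one JSON line, success or failure."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            entry = {"tool": name, "call_id": uuid.uuid4().hex[:8]}
            start = time.monotonic()
            try:
                result = func(*args, **kwargs)
            except Exception as exc:
                entry.update(
                    status="error",
                    error_type=type(exc).__name__,
                    error=str(exc),
                    duration_ms=round((time.monotonic() - start) * 1000, 1),
                )
                logger.error(json.dumps(entry))
                raise  # re-raise so the caller can still turn it into a protocol error
            entry.update(
                status="ok",
                duration_ms=round((time.monotonic() - start) * 1000, 1),
            )
            logger.info(json.dumps(entry))
            return result
        return wrapper
    return decorator


@logged_tool("query_customers")
def query_customers(email: str) -> dict:
    """Stand-in handler; a real one would hit the database."""
    return {"email": email, "plan": "enterprise"}
```

With the exception type in the log, "Connection closed unexpectedly" on the client side can be matched to a `TimeoutError` or `PermissionError` on the server side in minutes.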

The official Anthropic announcement makes MCP sound simple, and conceptually it is. But like most protocols, the devil is in the production deployment details.

Enterprise Reality: Why Generic MCP Advice Falls Short

Most MCP tutorials assume you're a solo developer building personal projects. Enterprise deployments are different beasts entirely. You need OAuth integration with existing identity providers, audit logging for compliance teams, role-based access controls that map to your org chart, and monitoring that alerts the right people when things break.

I've seen teams scale from pilot to dozens of production MCP servers, and the operational complexity grows fast. Key insight: the protocol itself is straightforward, but the operational infrastructure around it determines success or failure. Security reviews, deployment automation, monitoring, and incident response procedures matter more than the JSON-RPC message format.