Containers have been breaking out of their cages all year. CVE-2025-9074 is just the latest example of how Docker's security model is fundamentally fucked, but it's the worst one yet.

CVE-2025-9074: Docker Desktop's Epic Fail

CVE-2025-9074 is a CVSS 9.3 nightmare that makes Docker Desktop on Windows basically useless for anything important. Any container, no matter how locked down you think it is, can hit the unauthenticated Docker Engine API at 192.168.65.7:2375 and get full admin access to your machine.

The official CVE entry describes it as "Server-Side Request Forgery (SSRF) vulnerability in Docker Desktop", but that's corporate bullshit language. This is a container escape, plain and simple. Docker's security advisory tries to downplay it, but Docker still had to rush out Docker Desktop 4.44.3 specifically to fix this clusterfuck.

Felix Boulet's research showed exactly how stupid easy this exploit is. Two HTTP requests and you've basically rooted the host:

1. POST /containers/create with a bind mount of / to /host
2. POST /containers/{id}/start

That's it. No privilege escalation needed, no fancy kernel exploits, just Docker being Docker and exposing its API where containers can reach it.
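If you want to know whether your own containers can see that API, here's a minimal Go sketch. It assumes the default 192.168.65.7:2375 address from the write-ups and only sends GET /_ping, the Engine API's read-only health check (it answers "OK"). It never creates or starts anything. The function names are made up for this example. Run it from inside a container: "EXPOSED" on an unpatched Docker Desktop means you have this bug.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"strings"
	"time"
)

// isPingOK reports whether a /_ping response looks like the Docker Engine API,
// which answers with HTTP 200 and the body "OK".
func isPingOK(status int, body string) bool {
	return status == http.StatusOK && strings.TrimSpace(body) == "OK"
}

// dockerAPIReachable sends a single read-only GET /_ping to baseURL. It never
// creates or starts containers. Any network error counts as "not reachable".
func dockerAPIReachable(baseURL string) bool {
	client := &http.Client{Timeout: 2 * time.Second}
	resp, err := client.Get(strings.TrimRight(baseURL, "/") + "/_ping")
	if err != nil {
		return false // refused, timed out, no route, ...
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(io.LimitReader(resp.Body, 64)) // /_ping bodies are tiny
	if err != nil {
		return false
	}
	return isPingOK(resp.StatusCode, string(body))
}

func main() {
	// Default Docker Desktop address from the CVE-2025-9074 write-ups
	// (assumption: your install uses the default subnet).
	const target = "http://192.168.65.7:2375"
	if dockerAPIReachable(target) {
		fmt.Println("EXPOSED: this container can reach the Docker Engine API at", target)
	} else {
		fmt.Println("not reachable:", target)
	}
}
```

Patched or not, a container that can answer this probe has a line to the daemon, and that's the thing to fix.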

On Windows, this is catastrophic because Docker runs through WSL2. Once an attacker mounts your filesystem, they can read your SSH keys, browser saved passwords, crypto wallets, everything. They can even overwrite system DLLs to get permanent admin access. BleepingComputer's analysis breaks down the full impact.

The real kicker? Enhanced Container Isolation doesn't do shit against this. Docker marketed ECI as solving container escape problems, but CVE-2025-9074 walks right through it like it's not even there.

CVE-2024-45310: The Race Condition That Shouldn't Matter But Does

CVE-2024-45310 affects runc 1.1.13 and earlier. It "only" scores 3.6 on CVSS, but don't let that fool you: it's still dangerous in the right circumstances. The official CVE details are available if you want the technical breakdown.

The bug is in how runc handles volumes shared between containers. A race condition around os.MkdirAll lets an attacker create files or directories at arbitrary locations on the host filesystem. Sure, they're only empty files and directories, but that's enough for privilege escalation if you know what you're doing.

This one hits Docker, Kubernetes, basically everything that uses runc. Which is everything. Check the runc security releases page to see if your version is vulnerable.
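For a quick triage script, here's a hedged Go sketch that checks a runc version number (the one `runc --version` prints) against the "1.1.13 and earlier" range above. It deliberately rejects pre-release tags like 1.2.0-rc2 instead of guessing; check those against the runc security releases by hand. The function names are hypothetical.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// firstFixed is the first release outside the "1.1.13 and earlier" range.
var firstFixed = [3]int{1, 1, 14}

// parseVersion accepts plain "MAJOR.MINOR.PATCH" strings such as "1.1.13".
// Anything else (pre-release tags, build suffixes) is rejected, not guessed at.
func parseVersion(s string) ([3]int, error) {
	var v [3]int
	parts := strings.Split(strings.TrimPrefix(strings.TrimSpace(s), "v"), ".")
	if len(parts) != 3 {
		return v, fmt.Errorf("unsupported version %q", s)
	}
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil || n < 0 {
			return v, fmt.Errorf("unsupported version %q", s)
		}
		v[i] = n
	}
	return v, nil
}

// affectedByCVE202445310 reports whether version is older than firstFixed.
func affectedByCVE202445310(version string) (bool, error) {
	v, err := parseVersion(version)
	if err != nil {
		return false, err
	}
	for i := range v {
		if v[i] != firstFixed[i] {
			return v[i] < firstFixed[i], nil
		}
	}
	return false, nil // exactly the first fixed release
}

func main() {
	for _, ver := range []string{"1.1.12", "1.1.13", "1.1.14", "1.2.0", "1.2.0-rc2"} {
		affected, err := affectedByCVE202445310(ver)
		if err != nil {
			fmt.Println(ver, "-> check manually:", err)
			continue
		}
		fmt.Println(ver, "-> affected:", affected)
	}
}
```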

Real Talk: Container "Isolation" is Security Theater

Here's what actually happens when containers "escape":

Privileged containers are the obvious problem. If you're running --privileged, you deserve what's coming. Might as well just run everything as root on the host.

Namespace fuckery is getting more sophisticated. Recent kernel bugs let attackers break out of PID, USER, and NETWORK namespaces. Linux namespaces were never designed to be a security boundary anyway. Container security research explains the fundamental limitations.
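One concrete piece of the USER namespace story: unless a user namespace remaps UIDs, root in the container is root on the host. This Go sketch parses /proc/self/uid_map (each line is inside-ID, outside-ID, range length) and reports whether UID 0 maps to UID 0 in the parent namespace, which is the host in the usual single-level setup. It's Linux-only, the helper name is made up, and it says nothing about whether the kernel itself is exploitable.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"strings"
)

// rootIsHostRoot parses uid_map contents and reports whether UID 0 inside the
// namespace maps to UID 0 in the parent namespace. An unmapped UID 0 returns false.
func rootIsHostRoot(uidMap string) (bool, error) {
	for _, line := range strings.Split(strings.TrimSpace(uidMap), "\n") {
		fields := strings.Fields(line)
		if len(fields) != 3 {
			return false, fmt.Errorf("malformed uid_map line %q", line)
		}
		var nums [3]uint64
		for i, f := range fields {
			n, err := strconv.ParseUint(f, 10, 64)
			if err != nil {
				return false, fmt.Errorf("malformed uid_map line %q: %w", line, err)
			}
			nums[i] = n
		}
		inside, outside, length := nums[0], nums[1], nums[2]
		// IDs are unsigned, so a range covers UID 0 only if it starts at 0.
		if inside == 0 && length > 0 {
			return outside == 0, nil
		}
	}
	return false, nil // UID 0 is not mapped at all
}

func main() {
	data, err := os.ReadFile("/proc/self/uid_map") // Linux only
	if err != nil {
		fmt.Println("cannot read uid_map:", err)
		return
	}
	hostRoot, err := rootIsHostRoot(string(data))
	if err != nil {
		fmt.Println(err)
		return
	}
	if hostRoot {
		fmt.Println("UID 0 here is UID 0 in the parent namespace: no user-namespace remapping")
	} else {
		fmt.Println("UID 0 here is remapped (or unmapped) relative to the parent namespace")
	}
}
```

On a stock Docker setup without userns-remap you'll typically see the identity map, i.e. "no remapping".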

Syscall exploitation keeps happening because container runtimes have to talk to the kernel somehow. Every syscall is a potential attack vector, and seccomp profiles are a pain in the ass to get right. The NIST container security guide (SP 800-190) covers these attack vectors in excruciating detail.

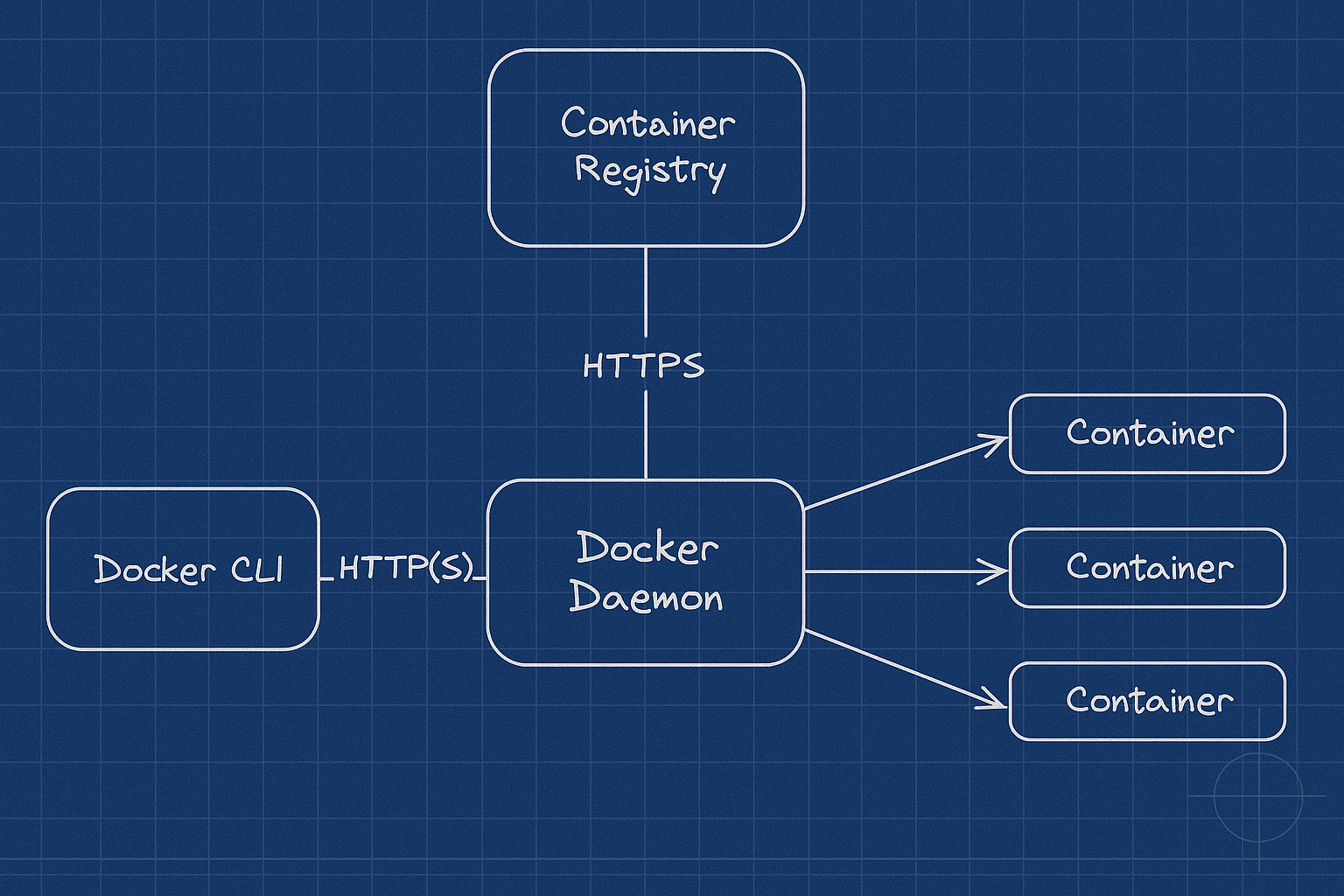

Volume mounts are the classic footgun. Mount /var/run/docker.sock into a container and congratulations, you just gave that container full Docker API access. Mount /proc or /sys and you've basically given up.
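Catching these footguns before they ship is mostly a matter of reading `docker inspect` output. Here's a hedged Go sketch that takes the JSON array `docker inspect` prints, flags containers with HostConfig.Privileged set, and flags bind mounts of the Docker socket, /proc, /sys, or the host root. The path list is illustrative, not complete, and the function names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"os"
	"strings"
)

// inspected holds only the `docker inspect` fields this check needs.
type inspected struct {
	Name       string `json:"Name"`
	HostConfig struct {
		Privileged bool `json:"Privileged"`
	} `json:"HostConfig"`
	Mounts []struct {
		Type   string `json:"Type"`
		Source string `json:"Source"`
	} `json:"Mounts"`
}

// riskyHostPaths is an illustrative, non-exhaustive list of host paths to flag.
var riskyHostPaths = []string{"/var/run/docker.sock", "/run/docker.sock", "/proc", "/sys", "/"}

// isRisky matches a risky path exactly or anything beneath it ("/" only exactly).
func isRisky(source string) bool {
	for _, p := range riskyHostPaths {
		if source == p || (p != "/" && strings.HasPrefix(source, p+"/")) {
			return true
		}
	}
	return false
}

// auditInspect returns one finding per privileged container or risky bind mount.
func auditInspect(raw []byte) ([]string, error) {
	var containers []inspected
	if err := json.Unmarshal(raw, &containers); err != nil {
		return nil, err
	}
	var findings []string
	for _, c := range containers {
		if c.HostConfig.Privileged {
			findings = append(findings, c.Name+": runs --privileged")
		}
		for _, m := range c.Mounts {
			if m.Type == "bind" && isRisky(m.Source) {
				findings = append(findings, c.Name+": bind-mounts "+m.Source)
			}
		}
	}
	return findings, nil
}

func main() {
	// Usage (hypothetical file name): docker inspect $(docker ps -q) | go run audit.go
	raw, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	findings, err := auditInspect(raw)
	if err != nil {
		fmt.Fprintln(os.Stderr, "not docker inspect JSON:", err)
		os.Exit(1)
	}
	for _, f := range findings {
		fmt.Println("RISK", f)
	}
}
```

Run it in CI or on a cron and that "just for this one debugging container" socket mount stops being invisible.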

The real problem is that containers aren't actually isolated. They share the kernel with the host, so any kernel bug becomes a container escape. We've known this for years but keep pretending containers provide real security boundaries.

I've seen prod environments get owned because someone mounted the Docker socket "just for this one debugging container" that never got cleaned up. Container escapes are getting automated now - threat actors have exploit chains that reliably break out and pivot to the host. CyberArk's container security research documents these automated attack chains.

The OWASP Container Security Top 10 covers the most critical vulnerabilities, while the SANS container security guide provides comprehensive threat modeling. Docker's official security documentation explains their defense mechanisms, but Trail of Bits' container security audit shows how easily these defenses fail in practice.

The Kubernetes security documentation outlines cluster-level protections, but CISA's Kubernetes Hardening Guide reveals the gap between theory and operational security. Microsoft's container security research maps actual attack vectors, while Aqua Security's threat intelligence tracks emerging exploitation techniques.