When your Python app is slowly eating through server memory and you need answers fast, Pympler is the tool to reach for. It's pure Python, so it works everywhere Python works, with no compilation required. It's not the fastest profiler, but it's the one that actually installs without fighting your system for an hour. It has been around since August 2008 and still gets regular updates: version 1.1 dropped in June 2024 with Python 3.12 support.

The real value isn't the fancy architecture. It's that when your Django app is eating 4GB of RAM and you have no idea why, Pympler actually tells you what's wrong. I spent most of a Saturday debugging a memory leak that was killing our production server every 12 hours. It turned out to be 10,000 unclosed database connections, because some genius decided to create a new connection pool in a class initializer that got called on every request. The leak brought down production during our busiest shopping weekend.

Three Tools That Actually Do Different Things

asizeof - Finally tells you how much memory your objects are really using. Unlike sys.getsizeof(), which only reports the shallow size of the object itself and ignores everything it references, asizeof recursively adds up the referenced objects to give you the actual footprint. When you think a dictionary is 200 bytes but it's actually 20MB because of all the shit it references, asizeof shows you the truth.

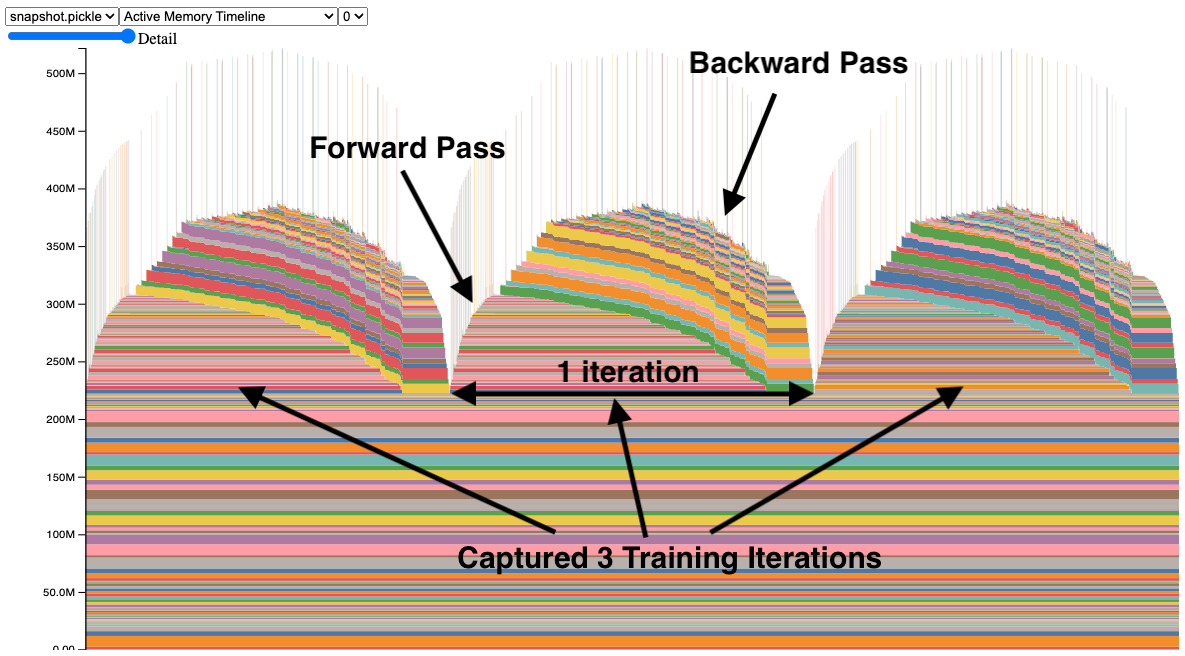

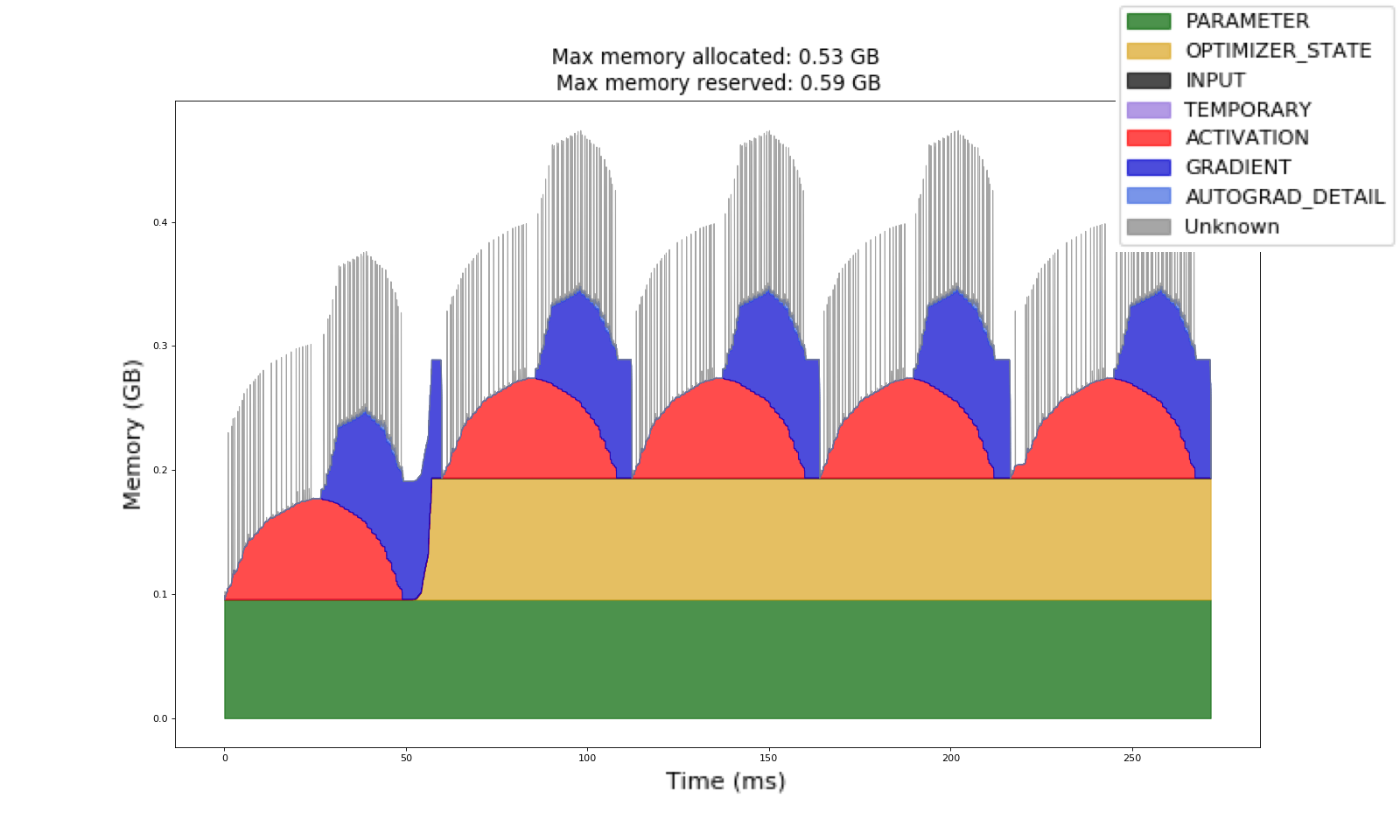

muppy - Tracks memory at runtime so you can see what's growing over time. Perfect for finding those slow leaks that only show up after your app runs for days. It will tank your performance if you run it constantly, because every scan walks all the live objects the garbage collector knows about. We trigger it only when memory usage spikes above 80%, which works great for finding the endpoints that are memory hogs.

Class Tracker - Monitors instances of specific classes throughout their lifecycle. Essential when you need to know why instances of certain classes never get garbage collected. It saved us when we discovered a third-party library was creating thousands of uncollected objects in a background thread.

The Web Interface That Doesn't Suck

The web interface is actually useful, which is rare. Instead of dumping raw data to your terminal, you get interactive charts showing memory growth, object counts, and garbage collection stats. Your PM can actually understand the output without learning command-line profiling. Plus it runs in any browser, so you can debug memory issues on remote servers through SSH tunneling.

Works On Everything (Even Windows)

Python 3.6 through 3.12 on Linux, macOS, and Windows. On Windows you need pywin32, but pip usually handles that. The pure Python implementation means no compilation hell: it just works. Memray is technically better, but it only supports Linux and macOS and ships as a native extension, so good luck if there's no prebuilt wheel for your setup. Sometimes "just works" beats "technically superior."

Production Reality Check

Don't run this constantly in production unless you hate performance. It's going to slow things down, maybe 20-30% if you're monitoring everything. We've seen Flask APIs drop from normal throughput to a crawl with full monitoring turned on. Use it during maintenance windows or when debugging specific memory spikes. The overhead is worth it when you're trying to figure out why your app gets OOMKilled every few hours.