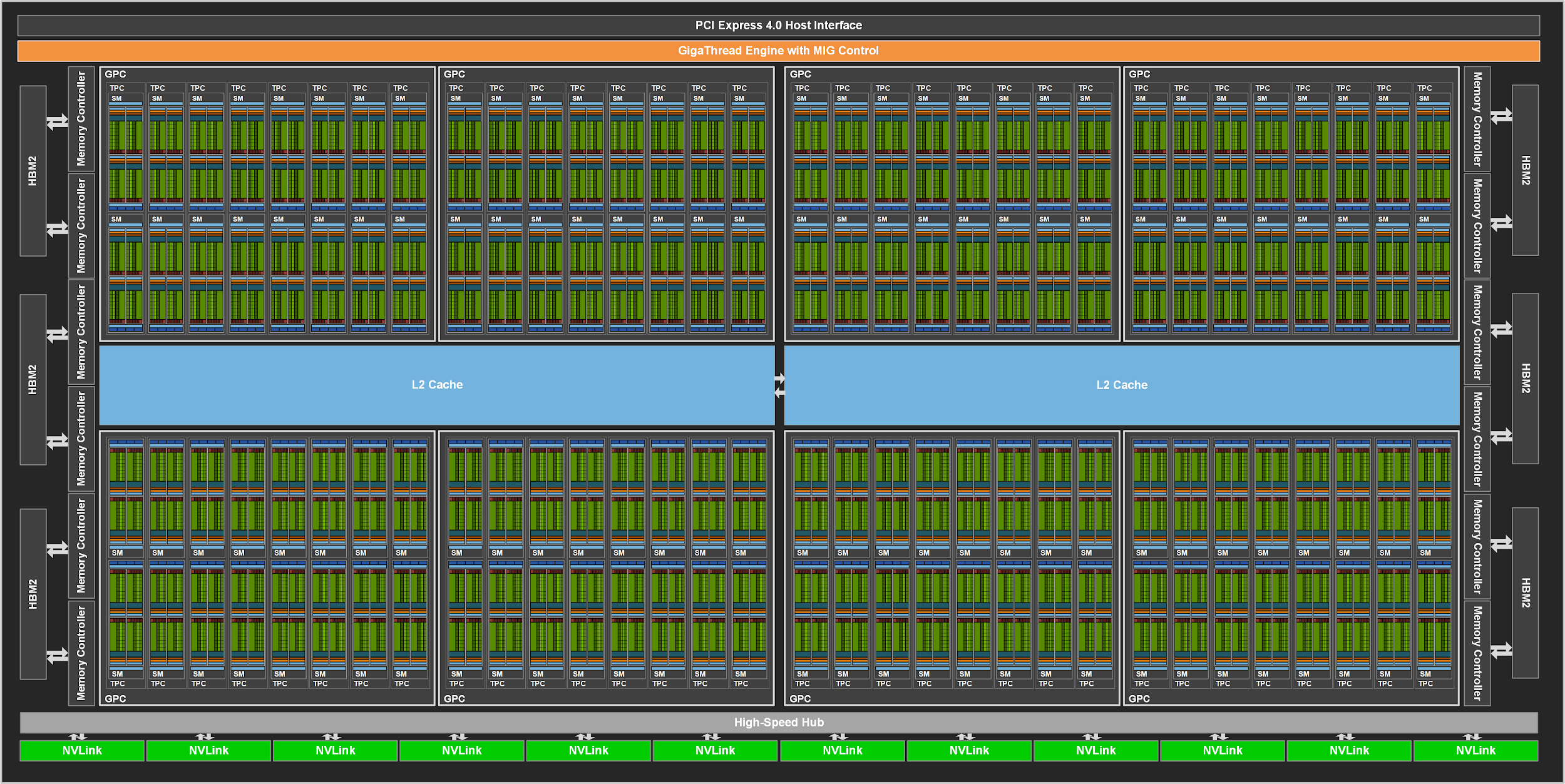

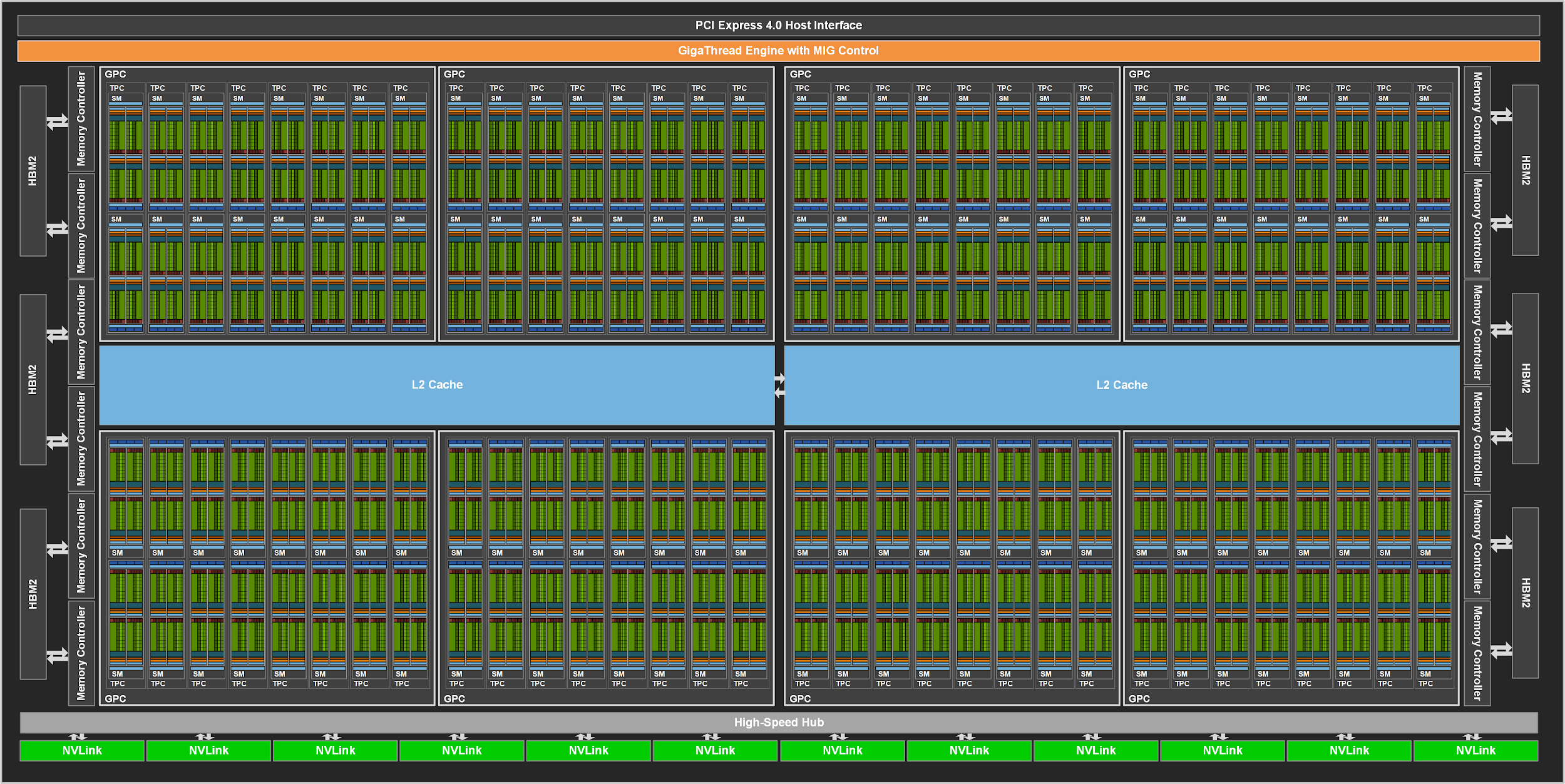

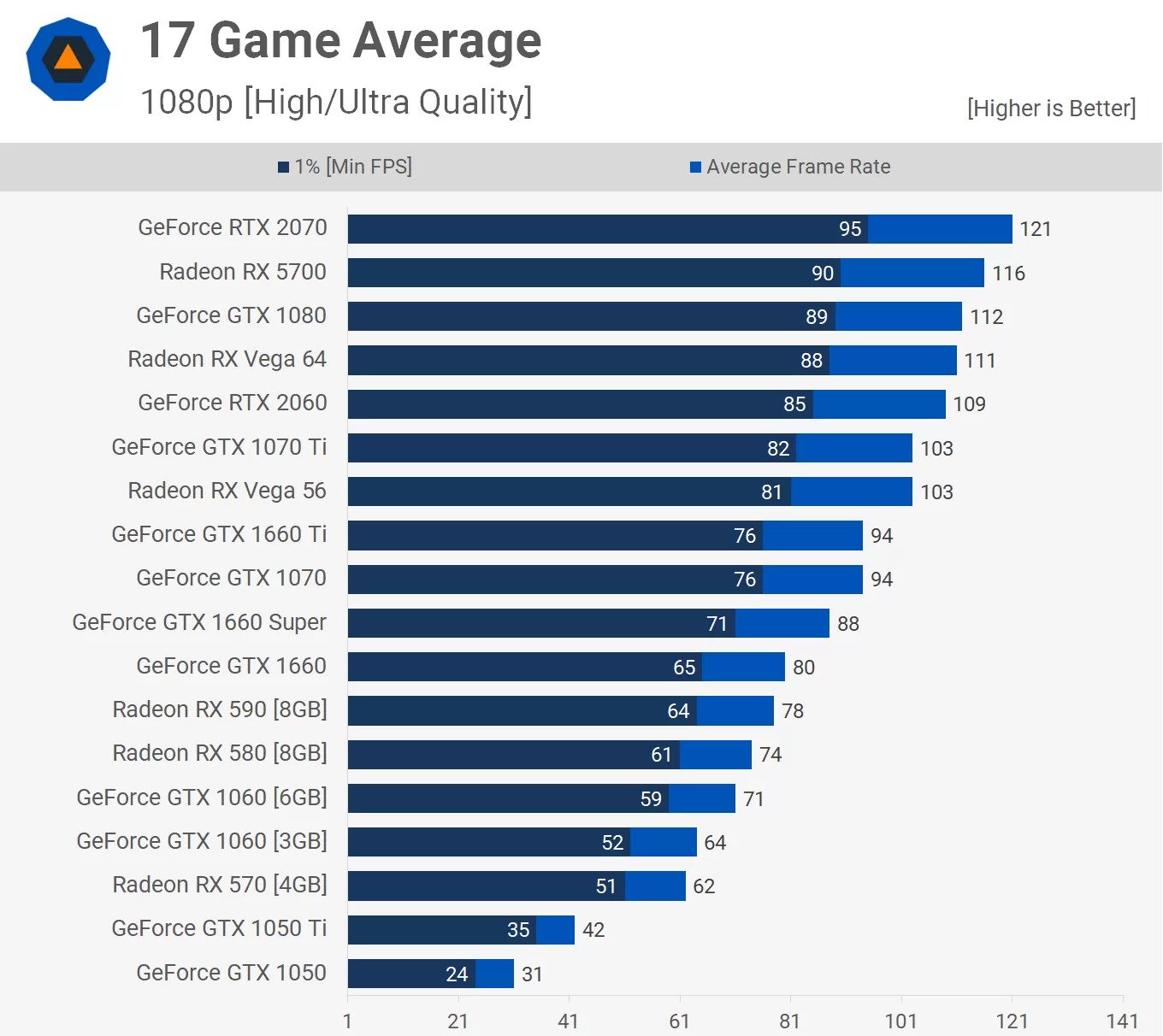

This model needs serious hardware. 140GB+ VRAM for full precision means you're either dropping $20k+ on GPUs or using cloud APIs. RTX 4090 isn't enough - you'll get OOM errors immediately.

The Hardware Reality Check

What you actually need for local deployment:

- GPU Memory: 140GB+ VRAM for full precision (good luck affording that)

- System RAM: 32GB minimum, but get 64GB or you'll hate your life during long conversations

- Storage: 150GB+ just for the model, plus whatever OS bloat you're running

- Realistic Setup: 2x A100 80GB ($15k each), 4x RTX 4090 ($1600 each), or pray to the quantization gods

Quantization: Your Wallet's Best Friend

- 4-bit: Drops requirements to ~40GB VRAM. Quality takes a small hit, but your bank account survives.

- 8-bit: Need ~70GB VRAM. Better quality, still expensive.

- CPU-only: Don't. Just don't. You'll get 2-4 tokens/second and question your life choices.

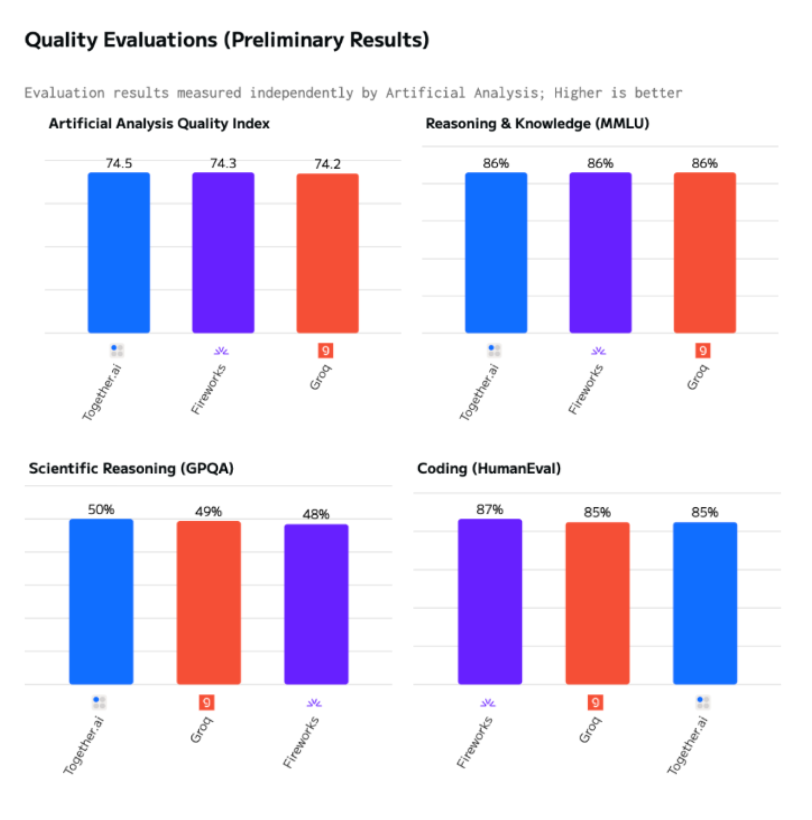

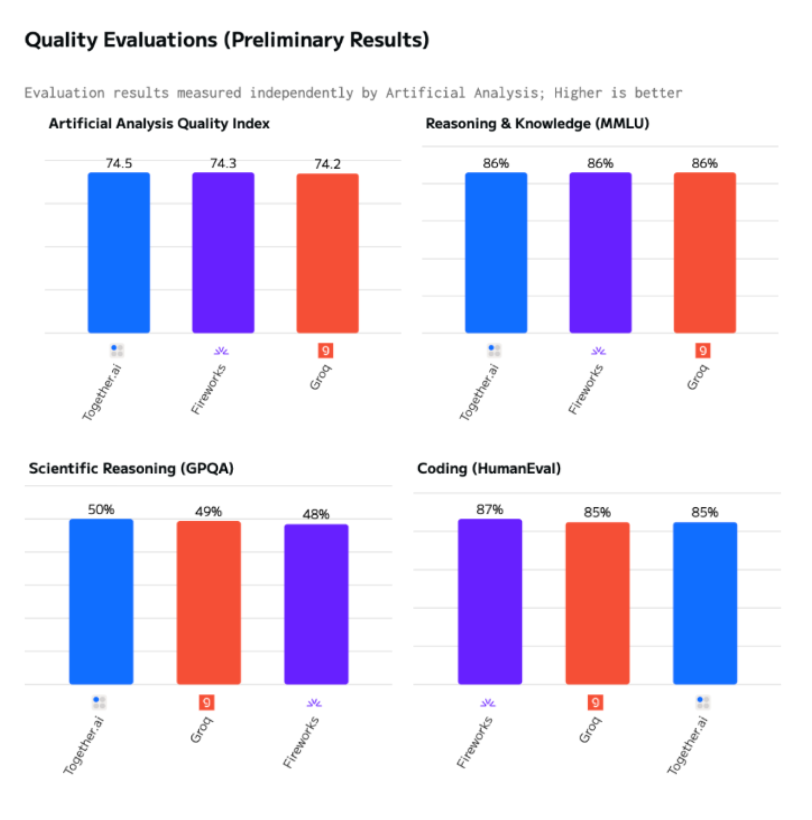

Start with APIs unless you enjoy debugging CUDA drivers. Together AI (pricing) or Groq (speed tests) work reliably. Migrate to local later when API costs get annoying. Cost comparison.

API Integration That Actually Works

Start with APIs (seriously, start here):

Skip the hardware pain and use API providers. Here's what actually works in production:

## Together AI - reliable, reasonably priced

import openai

client = openai.OpenAI(

base_url="https://api.together.xyz/v1",

api_key="your-together-api-key" # Get this from their dashboard

)

## Pro tip: Set timeouts or you'll hang forever on slow responses

response = client.chat.completions.create(

model="meta-llama/Llama-3.3-70B-Instruct-Turbo",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Explain quantum computing in simple terms."}

],

max_tokens=1000,

temperature=0.7,

timeout=30 # Add this or regret it later

)

AWS Bedrock Integration:

For enterprise deployments requiring compliance features and guaranteed SLAs, AWS Bedrock provides managed access:

import boto3

bedrock = boto3.client('bedrock-runtime', region_name='us-east-1')

response = bedrock.invoke_model(

modelId='meta.llama3-3-70b-instruct-v1:0',

body=json.dumps({

"prompt": "Your prompt here",

"max_gen_len": 1000,

"temperature": 0.7,

"top_p": 0.9

})

)

Local Deployment (Prepare for Pain)

Ollama: The "Easy" Way

Ollama says it makes local deployment simple. It does, if you consider downloading 140GB and watching your GPU melt "simple":

## Install Ollama (this part actually works)

curl -fsSL https://ollama.com/install.sh | sh

## Download Llama 3.3 70B - grab coffee, this takes forever

ollama run llama3.3:70b

## When you inevitably get OOM errors, try this:

ollama run llama3.3:70b-q4 # 4-bit quantized version

## Test it (if your GPU survived)

## This API call works when Ollama is running locally on port 11434

Reality check: Ollama claims it works with 16GB RAM. Complete fucking lie. You'll get CUDA out of memory: 140GB requested, 6GB available errors or watch your system swap itself to death while you wait 5 minutes for "Hello World." I learned this the hard way at 2am trying to demo to a client - spent 3 hours debugging why the model kept crashing with RuntimeError: CUDA error: device-side assert triggered, only to realize Windows 11 was hogging 8GB for its bloated UI and background bullshit. Restarted Ollama 15 times, kept having to redownload the entire 140GB because corrupted downloads. Docker Desktop was eating another 4GB. Get 32GB minimum or you'll hate your life, and if you're on Windows with WSL2, add another 8GB because Windows will steal your memory like a fucking vampire. Also, Ollama v0.1.47 breaks with CUDA 12.3 - you need exactly CUDA 12.1 or it silently fails with zero useful error messages.

Fun fact: WSL2 adds around 8GB memory overhead that nobody mentions in the docs, and Docker Desktop on top of that steals another 2-3GB. Your "16GB" machine suddenly has 6GB usable. Hardware requirements and optimization tips.

vLLM: For When You Have Real Hardware

If you've got the GPU budget and want maximum performance, vLLM is your friend:

## Install vLLM (make sure you have CUDA 11.8+ or it'll break)

pip install vllm

## Start inference server - adjust tensor-parallel-size based on your GPU count

python -m vllm.entrypoints.openai.api_server \

--model meta-llama/Llama-3.3-70B-Instruct \

--tensor-parallel-size 4 \

--dtype float16 \

--max-model-len 32768 # Reduce if you run out of memory

🚨 CRITICAL WARNING: vLLM will devour every byte of VRAM like it's starving and crash your entire system without mercy. You'll see RuntimeError: CUDA out of memory followed by a completely frozen desktop that requires a hard reboot. Set --gpu-memory-utilization 0.8 or watch your desktop environment die horribly when you try to open anything else.

Also, if you get ModuleNotFoundError: No module named 'vllm._C', you probably installed the wrong CUDA version. vLLM v0.3.2+ is picky as hell about CUDA versions - needs exactly CUDA 11.8 or 12.1, anything else breaks in mysterious ways. CUDA 12.4? Nope. CUDA 11.7? Fuck no. I've seen people spend entire weekends reinstalling CUDA drivers because they tried to run vLLM v0.3.3 with CUDA 11.7 and got ImportError: /usr/local/lib/python3.10/site-packages/vllm/_C.cpython-310-x86_64-linux-gnu.so: undefined symbol: _ZN3c104cuda20CUDACachingAllocator9allocatorE - completely useless error message that just means "wrong CUDA version, genius." Installation guide and performance tuning.

Llama 3.3 70B uses a specific chat format that must be followed for optimal performance:

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

You are a helpful assistant.<|eot_id|><|start_header_id|>user<|end_header_id|>

What is machine learning?<|eot_id|><|start_header_id|>assistant<|end_header_id|>

Effective Prompt Patterns:

For Code Generation:

<|start_header_id|>system<|end_header_id|>

You are an expert Python developer. Write clean, well-documented code.

<|eot_id|><|start_header_id|>user<|end_header_id|>

Create a function to calculate the Fibonacci sequence up to n terms.

<|eot_id|><|start_header_id|>assistant<|end_header_id|>

For Analysis Tasks:

<|start_header_id|>system<|end_header_id|>

You are a data analyst. Provide clear, actionable insights.

<|eot_id|>user<|end_header_id|>

Analyze the following sales data and identify trends...

<|eot_id|><|start_header_id|>assistant<|end_header_id|>

Speed depends on where you run it:

Provider performance varies significantly:

Context Window: 128K Tokens of Joy and Pain

The 128K context window is great until it isn't. After 100K tokens, inference slows to a crawl and the model gets Alzheimer's - it'll forget your initial instructions completely. Spent hours debugging why the model kept contradicting itself - turned out it forgot what framework we were using. Trimming context fixed it instantly:

def manage_context(conversation_history, max_tokens=120000):

"""Keep conversation sane or watch performance die"""

token_count = count_tokens(conversation_history)

if token_count > max_tokens:

# Nuclear option: keep system prompt + recent stuff

return conversation_history[:1] + conversation_history[-10:]

# Pro tip: trim at 100K, not 128K, trust me

if token_count > 100000:

return conversation_history[:1] + conversation_history[-8:]

return conversation_history

Batch Processing for Efficiency:

For high-throughput applications, batch processing significantly improves efficiency:

## Process multiple requests in parallel

import asyncio

async def process_batch(prompts, model_client):

tasks = [model_client.complete(prompt) for prompt in prompts]

return await asyncio.gather(*tasks)

Fine-tuning and Customization

One of Llama 3.3 70B's significant advantages over proprietary models is the ability to fine-tune for specific domains or use cases:

Parameter-Efficient Fine-tuning (PEFT):

- LoRA (Low-Rank Adaptation): Efficient fine-tuning with minimal computational requirements

- QLoRA: Quantized LoRA for even lower resource usage

- Adapter Methods: Task-specific adaptation layers

Domain Specialization Examples:

- Legal Document Analysis: Fine-tuned on legal texts for contract review

- Medical Applications: Adapted for clinical decision support (with appropriate validation)

- Code Generation: Specialized for specific programming languages or frameworks

- Scientific Research: Optimized for technical paper analysis and generation

Production Deployment Considerations

Monitoring and Observability:

Production deployments require comprehensive monitoring:

## Example monitoring integration

import logging

from datetime import datetime

def log_inference(prompt, response, latency, cost):

logging.info({

"timestamp": datetime.now().isoformat(),

"prompt_length": len(prompt),

"response_length": len(response),

"latency_ms": latency,

"estimated_cost": cost,

"model": "llama-3.3-70b"

})

Cost Management:

Implement cost controls to prevent unexpected expenses:

- Rate Limiting: Control requests per user/application

- Response Length Limits: Prevent excessively long generations

- Caching: Store and reuse responses for repeated queries

- Model Routing: Use smaller models for simple tasks, Llama 3.3 70B for complex ones

Security and Compliance:

- Input Sanitization: Validate and clean user inputs before processing

- Output Filtering: Screen generated content for inappropriate material

- Data Privacy: Implement proper data handling for sensitive information

- Audit Logging: Maintain comprehensive logs for compliance requirements

Bottom Line: Is It Worth It?

If you're tired of burning money on OpenAI and want something you actually control, Llama 3.3 70B is your best bet. It's not perfect - you'll still hit edge cases and weird failures - but it's the first open model that actually competes with GPT-4 while costing a fraction to run.

Start with APIs, test it on your use cases, and migrate to local deployment when the monthly bills get scary. Just don't expect it to work flawlessly out of the box - budget time for troubleshooting, especially if you go the local route.

The hardware requirements are brutal, but the cost savings over proprietary models make it compelling for any serious development work. Plus, when OpenAI inevitably raises prices again, you'll be glad you're not locked into their ecosystem.

Still have questions about whether this thing is worth the hassle? Here are the answers to everything you're probably wondering about Llama 3.3 70B.

![]()

Cloud providers: Groq (300+ tokens/sec), Together AI (80-90 tokens/sec), AWS Bedrock (40-60 tokens/sec). Local hardware: 2x A100 80GB (20-25 tokens/sec), 4x RTX 4090 (12-18 tokens/sec), 2x RTX 3090 with quantization (8-12 tokens/sec). CPU-only: 2-4 tokens/sec (not practical for most applications). Speed varies based on prompt length, model quantization, and concurrent request load.

Cloud providers: Groq (300+ tokens/sec), Together AI (80-90 tokens/sec), AWS Bedrock (40-60 tokens/sec). Local hardware: 2x A100 80GB (20-25 tokens/sec), 4x RTX 4090 (12-18 tokens/sec), 2x RTX 3090 with quantization (8-12 tokens/sec). CPU-only: 2-4 tokens/sec (not practical for most applications). Speed varies based on prompt length, model quantization, and concurrent request load.