The Docker CLI is just making HTTP calls over the Unix socket at /var/run/docker.sock. That's literally it. Run docker ps? Boom, GET /containers/json. Docker marking your container as "unhealthy" for the 50th fucking time today? That status comes straight out of the API too, and it's lying to your face.

The current API is version 1.51 in recent Docker Engine releases. But here's the shit they don't mention in the marketing: version compatibility is an absolute nightmare. Your CI runs 1.41, prod is stuck on 1.43, and your local machine is on 1.51. Good fucking luck making that work consistently.

What You Can Actually Do With This Thing

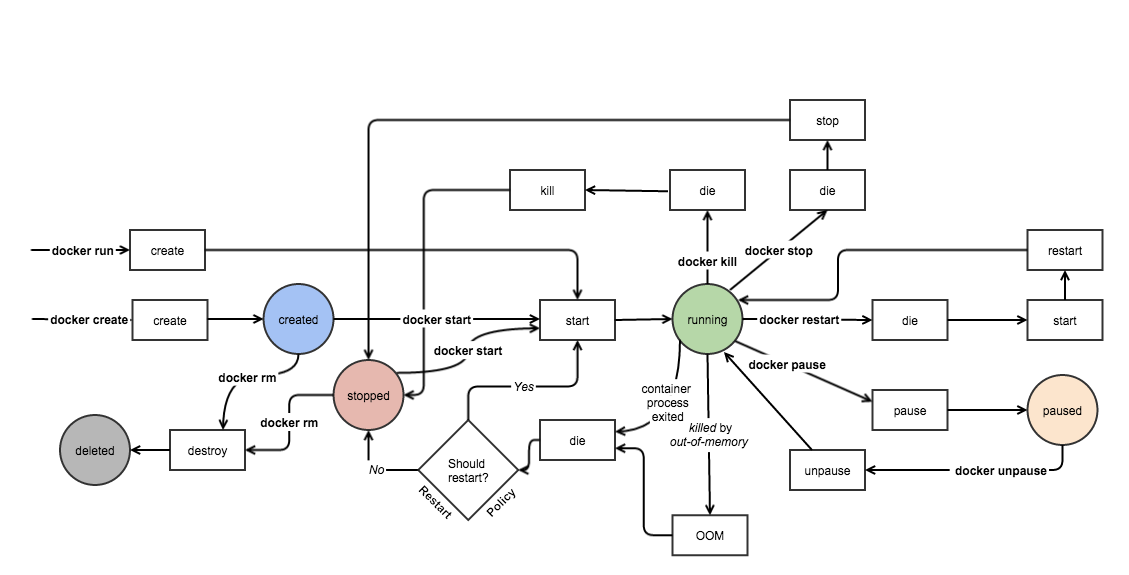

Container Management: Start containers, watch them crash, restart them, watch them crash again. The container lifecycle endpoints work great until they don't. When they fail, you get helpful error messages like:

    Error response from daemon: OCI runtime create failed:
    container_linux.go:380: starting container process caused:
    exec: "app": executable file not found in $PATH: unknown

Which tells you the binary is missing and absolutely nothing useful about why.
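Under the wall of text, the daemon's error responses are plain JSON with a single message field, so you can at least make them readable. Here's a minimal sketch of that idea. The HINTS table and the explain_error name are made up for illustration, and the hints are educated guesses, not anything Docker ships.

```python
import json

# Hypothetical hint table: substrings seen in daemon errors mapped to
# plain-English guesses. Extend it with whatever bites you most often.
HINTS = {
    "executable file not found in $PATH":
        "the image has no such binary; check Cmd/Entrypoint and the image contents",
    "pull access denied":
        "the image name is wrong or the registry needs 'docker login'",
    "toomanyrequests":
        "Docker Hub rate limit; authenticate or use a registry mirror",
}


def explain_error(status: int, body: bytes) -> str:
    """Turn an Engine API error response into one readable line."""
    try:
        # Error bodies normally look like {"message": "..."}.
        message = str(json.loads(body)["message"])
    except (ValueError, KeyError, TypeError):
        # Not JSON (or not the expected shape): fall back to the raw text.
        message = body.decode("utf-8", errors="replace").strip()
    for needle, hint in HINTS.items():
        if needle in message:
            return f"HTTP {status}: {message} (hint: {hint})"
    return f"HTTP {status}: {message}"
```

Feed it the status code and raw body of any failed call; unknown errors pass through unchanged, so you never lose the original message.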

Image Operations: Pull images and pray to whatever deity you believe in that you don't hit the Docker Hub rate limit. The image API endpoints pull images just fine until boom - ERROR: toomanyrequests: You have reached your pull rate limit. For years anonymous users got 100 measly pulls per 6 hours and authenticated free accounts got 200; Docker has changed the numbers since, so check the current limits before you plan around them. Because fuck your CI pipeline, I guess.

Docker Hub Rate Limit Error Example:

    ERROR: toomanyrequests: You have reached your pull rate limit.
    You may increase the limit by authenticating and upgrading.
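You don't have to find out about the limit by hitting it. Docker Hub reports your quota in ratelimit-limit and ratelimit-remaining response headers on manifest requests, with values shaped like 100;w=21600 (count, then window in seconds). This is a small sketch of parsing them, assuming that documented format; fetching the headers (token and all) is left out, and the function names are my own.

```python
from typing import Dict, Optional, Tuple


def parse_ratelimit(value: str) -> Tuple[int, Optional[int]]:
    """Parse a header value like '100;w=21600' into (count, window_seconds)."""
    count_part, *params = [p.strip() for p in value.split(";")]
    window = None
    for param in params:
        key, _, val = param.partition("=")
        if key == "w":
            window = int(val)
    return int(count_part), window


def quota_from_headers(headers: Dict[str, str]) -> dict:
    """Summarize pull quota from a dict of response headers (any casing)."""
    lowered = {k.lower(): v for k, v in headers.items()}
    limit, window = parse_ratelimit(lowered["ratelimit-limit"])
    remaining, _ = parse_ratelimit(lowered["ratelimit-remaining"])
    return {
        "limit": limit,
        "remaining": remaining,
        "window_seconds": window,
        "exhausted": remaining <= 0,
    }
```

Wire this into CI as a pre-flight check and fail early with a sane message instead of halfway through a build.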

Network Bullshit: Docker networking is where hope goes to die. The network API lets you create networks with /networks/create, but good luck figuring out why containers can't talk to each other. The bridge driver works until it doesn't. The host driver exposes everything. Custom networks break randomly.
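Nine times out of ten, "containers can't talk to each other" means they aren't on the same network, or they only share the default bridge, which has no DNS by container name. A rough sketch of checking that from two container inspect responses (GET /containers/{id}/json), which list attached networks under NetworkSettings.Networks. The function names are hypothetical and the diagnosis is deliberately simplistic.

```python
def networks_of(inspect: dict) -> dict:
    """Map network name -> IP address from a container inspect response."""
    nets = (inspect.get("NetworkSettings") or {}).get("Networks") or {}
    return {name: (cfg or {}).get("IPAddress", "") for name, cfg in nets.items()}


def why_cant_they_talk(a: dict, b: dict) -> str:
    """First-pass diagnosis of connectivity between two inspected containers."""
    nets_a, nets_b = networks_of(a), networks_of(b)
    shared = sorted(set(nets_a) & set(nets_b))
    if not shared:
        return f"no shared network: {sorted(nets_a)} vs {sorted(nets_b)}"
    if shared == ["bridge"]:
        # The default bridge gives IP reachability but no name resolution.
        return "only the default bridge is shared: reachable by IP, but no DNS by container name"
    return "shared network(s): " + ", ".join(shared)
```

It won't catch firewall rules or a service bound to 127.0.0.1 inside the container, but it rules out the boring cause first.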

Volume Management: Volumes work fine until you need them on Windows, then it's pure hell. The /volumes/create endpoint creates volumes, but Windows paths will make you want to defenestrate your laptop: "C:\Users\dev\AppData\Local\Docker\wsl\data\ext4.vhdx" because of course it's buried 5 levels deep in some bullshit WSL directory.

System Info: The system endpoints tell you Docker is "fine" right before it crashes and takes down your entire development environment. The system events stream is great for watching everything break in real time.

Where This API Actually Gets Used (And Breaks)

CI/CD Pipelines: GitLab CI uses this API and it works great until your runner dies. GitHub Actions calls the API too, but don't expect helpful error messages when your workflow randomly fails with Error response from daemon: pull access denied for private-repo, repository does not exist or may require 'docker login'.

Monitoring Disasters: Monitoring agents poll /containers/{id}/stats to get container metrics (cAdvisor talks to the Docker API too, though it pulls most of its numbers straight from cgroups). Great in theory. In practice, it'll crash your monitoring stack when Docker stats goes nuts and returns 50GB/s network usage for a container doing nothing.
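A lot of the insane numbers come from treating cumulative counters as rates. The stats payload carries running totals (cpu_stats vs precpu_stats, rx_bytes per interface), so you compute deltas yourself and throw away samples where the counter went backwards. A sketch under those assumptions: the CPU formula follows the one in the API reference, while the "discard on reset" rule in rx_rate is my own choice.

```python
from typing import Optional


def cpu_percent(stats: dict) -> float:
    """CPU usage in percent from one /containers/{id}/stats sample."""
    cpu, pre = stats["cpu_stats"], stats["precpu_stats"]
    cpu_delta = cpu["cpu_usage"]["total_usage"] - pre["cpu_usage"]["total_usage"]
    system_delta = cpu.get("system_cpu_usage", 0) - pre.get("system_cpu_usage", 0)
    if cpu_delta <= 0 or system_delta <= 0:
        return 0.0  # first sample or counter reset: nothing sensible to report
    return cpu_delta / system_delta * cpu.get("online_cpus", 1) * 100.0


def rx_rate(prev: dict, curr: dict, seconds: float) -> Optional[float]:
    """Bytes/sec received across all interfaces, or None if the sample is bogus."""
    def total(sample: dict) -> int:
        return sum(n["rx_bytes"] for n in (sample.get("networks") or {}).values())

    delta = total(curr) - total(prev)
    if seconds <= 0 or delta < 0:
        return None  # counters went backwards, e.g. the container restarted
    return delta / seconds
```

Returning None instead of a number forces the caller to decide what a gap looks like on the dashboard, which beats graphing garbage.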

Docker Desktop UI: Docker Desktop is just a pretty wrapper around these same API calls. When the UI shows "Starting" for 10 fucking minutes, it's because the API call is hanging like a Windows 95 application. Force quit and restart - it's literally always the answer.

Custom Tooling: We built a deployment tool that calls /containers/create and /containers/start. Worked fine for months until Docker Engine 24.0.6 had a bug where containers would start but immediately go into restart loops. Downgraded to 24.0.5, everything worked again.

How to Talk to This Thing

Raw HTTP: Hit /var/run/docker.sock directly. On Linux, it just works. On Mac with Docker Desktop, it works until it randomly doesn't and you spend 3 hours troubleshooting. On Windows... good fucking luck with named pipes (the daemon listens on //./pipe/docker_engine) - may the odds be ever in your favor.

    # This works most of the time - lists all containers
    curl --unix-socket /var/run/docker.sock \
      "http://localhost/v1.51/containers/json"

    # This fails with cryptic errors when Docker is having a bad day
    # (CONTAINER_ID is a placeholder: set it to a real container ID or name)
    curl --unix-socket /var/run/docker.sock -X POST \
      "http://localhost/v1.51/containers/$CONTAINER_ID/start"

These examples show the actual Docker API endpoints you'll be hitting. The /containers/json endpoint lists containers, while the /containers/{id}/start endpoint starts them. For complete API reference documentation, check the official Docker docs.
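If you'd rather not shell out to curl, the same raw calls fit in a few lines of standard-library Python. This is a minimal sketch, not a replacement for an SDK: UnixHTTPConnection, api_path and docker_get are names I made up, the default version is just the 1.51 used in the examples here, and there's no streaming, auth, or retry logic.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """http.client connection that dials a Unix socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def api_path(endpoint: str, version: str = "1.51") -> str:
    """Prefix an endpoint with the API version, e.g. /v1.51/containers/json."""
    return f"/v{version}/{endpoint.lstrip('/')}"


def docker_get(endpoint: str, socket_path: str = "/var/run/docker.sock"):
    """GET an endpoint and return (status_code, decoded_json_body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", api_path(endpoint))
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read() or b"null")
    finally:
        conn.close()
```

Calling docker_get("/containers/json") on a box with a running daemon should give you roughly what docker ps shows, as a list of dicts. Expect PermissionError if your user can't read the socket.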

Python SDK: Use the docker package (the SDK formerly known as docker-py). It's decent but the documentation lies about error handling. You'll get APIError: 500 Server Error: Internal Server Error and have to guess what actually went wrong.

Go SDK: The official Go client is what the Docker CLI uses. If it's good enough for them, it's good enough for you. Still crashes on weird edge cases like containers with malformed labels.

Node.js: dockerode works fine until you need to stream container logs and it decides to buffer everything in memory. Your app will OOM on large log files.
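The fix for the OOM problem is the same in any language: parse the log stream frame by frame instead of collecting it. For containers without a TTY, the Engine multiplexes stdout and stderr into frames with an 8-byte header (one byte of stream type, three zero bytes, then a big-endian uint32 payload length). Here's that idea sketched in Python; demux is a name I picked, and it assumes you already have an iterable of raw byte chunks from the HTTP response.

```python
import struct
from typing import Iterable, Iterator, Tuple

STREAMS = {0: "stdin", 1: "stdout", 2: "stderr"}


def demux(chunks: Iterable[bytes]) -> Iterator[Tuple[str, bytes]]:
    """Yield (stream_name, payload) frames from a multiplexed log stream."""
    buf = bytearray()
    for chunk in chunks:
        buf += chunk
        while len(buf) >= 8:
            # Header: stream type (1 byte), 3 padding bytes, size (uint32 BE).
            stream_id, size = struct.unpack(">BxxxL", buf[:8])
            if len(buf) < 8 + size:
                break  # frame incomplete; wait for more bytes
            payload = bytes(buf[8:8 + size])
            del buf[:8 + size]
            yield STREAMS.get(stream_id, "unknown"), payload
```

Memory use stays at roughly one frame plus one chunk, no matter how many gigabytes of logs the container has spewed.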

Everything Else: There are Java, Ruby, and PHP libraries. They're all variations on "HTTP client that wraps Docker API calls". Some handle errors better than others. None of them handle the soul-crushing reality of Docker's random failures.

Version Hell and Compatibility Nightmares

"Backward compatible" is Docker's most audacious lie. Yeah, API v1.24 technically works with newer engines, but good fucking luck when your old client tries to use features that don't exist or behave completely differently. The version matrix looks all neat and organized until you discover the edge cases that will ruin your week aren't documented anywhere.

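Version Negotiation: SDKs try to auto-negotiate API versions. Sometimes this works. Sometimes your app mysteriously breaks because it negotiated down to v1.38 and now volumes don't mount properly. Pin your versions: set DOCKER_API_VERSION in production to a version every daemon you talk to actually supports (DOCKER_API_VERSION=1.43 if prod tops out at 1.43, not 1.51). Pin higher than the daemon speaks and the client just refuses with a "client version is too new" error.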
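Negotiation itself is not magic: the daemon's GET /version response includes ApiVersion (the newest it speaks) and MinAPIVersion (the oldest), and the client picks the highest version both sides support. A small sketch of that logic plus a helper for choosing a pin across a fleet. Function names are mine, and the 1.24 default for the minimum is an assumption you should replace with what your daemon reports.

```python
from typing import Iterable, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """'1.43' -> (1, 43), so that 1.9 sorts before 1.10."""
    return tuple(int(part) for part in version.split("."))


def negotiate(client_max: str, server_max: str, server_min: str = "1.24") -> str:
    """Pick the highest API version both sides speak, or raise if none exists."""
    chosen = min(client_max, server_max, key=parse_version)
    if parse_version(chosen) < parse_version(server_min):
        raise ValueError(
            f"client max {client_max} is older than daemon minimum {server_min}"
        )
    return chosen


def safe_pin(daemon_versions: Iterable[str]) -> str:
    """Highest version that every daemon in the fleet supports."""
    return min(daemon_versions, key=parse_version)
```

For the CI/prod/laptop mix from the intro, safe_pin(["1.41", "1.43", "1.51"]) gives "1.41", which is the value you'd put in DOCKER_API_VERSION if one client has to talk to all three.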

Breaking Changes: Docker claims "rare breaking changes" but forgets to mention the subtle behavior changes that aren't technically breaking but will ruin your day. API v1.41 "changed" restart policies - meaning your containers stop restarting after updates. Fun times.

Security Stuff That'll Bite You

Socket Permissions: /var/run/docker.sock is basically root access. Adding users to the docker group gives them root access to the host. Don't do this in production. Use rootless Docker or docker-socket-proxy if you must.
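The idea behind a socket proxy is boring and effective: sit between the client and the socket and refuse everything that isn't on an allowlist. This sketch shows only the filtering decision, with a hypothetical read-only policy. It is not how docker-socket-proxy is implemented or configured, just the concept in a dozen lines.

```python
import re

# Hypothetical read-only policy: (method, path pattern) pairs a client may call.
ALLOWED = [
    ("GET", re.compile(r"^/_ping$")),
    ("GET", re.compile(r"^/version$")),
    ("GET", re.compile(r"^/containers/json$")),
    ("GET", re.compile(r"^/containers/[A-Za-z0-9][A-Za-z0-9_.-]*/json$")),
]

# Matches an optional API version prefix such as /v1.51.
VERSION_PREFIX = re.compile(r"^/v\d+\.\d+(?=/)")


def is_allowed(method: str, path: str) -> bool:
    """Return True if the request matches the allowlist, ignoring query and version."""
    path = path.split("?", 1)[0]
    path = VERSION_PREFIX.sub("", path)
    return any(m == method.upper() and p.match(path) for m, p in ALLOWED)
```

Default-deny is the point. A monitoring agent that only needs to list containers has no business being able to POST /containers/create.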

Remote Access: Don't expose the Docker API to the internet. Ever. Even with TLS authentication. Some genius will find a way to break out of containers and pwn your host. Use SSH tunnels or VPNs.

Container Breakouts: Containers aren't VMs. Privileged containers can escape. Even non-privileged ones can break out if you're not careful with capabilities. The API doesn't stop you from creating dangerous containers - that's your job.
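Since the API won't say no, something in front of it has to. A rough sketch of auditing a /containers/create request body before it reaches the daemon. The HostConfig field names (Privileged, CapAdd, Binds, PidMode, NetworkMode, IpcMode) are the real ones; the list of "dangerous" capabilities and paths is my own opinionated and incomplete pick.

```python
from typing import List

# Assumption: a short, non-exhaustive list of capabilities worth flagging.
DANGEROUS_CAPS = {"ALL", "SYS_ADMIN", "SYS_MODULE", "SYS_PTRACE", "NET_ADMIN"}
DANGEROUS_BIND_SOURCES = {"/", "/var/run/docker.sock"}


def audit_create_request(body: dict) -> List[str]:
    """Return human-readable findings for risky settings in a create payload."""
    host = body.get("HostConfig") or {}
    findings = []
    if host.get("Privileged"):
        findings.append("Privileged: true")
    caps = set()
    for cap in host.get("CapAdd") or []:
        cap = cap.upper()
        caps.add(cap[4:] if cap.startswith("CAP_") else cap)
    for cap in sorted(caps & DANGEROUS_CAPS):
        findings.append(f"CapAdd: {cap}")
    for bind in host.get("Binds") or []:
        source = bind.split(":", 1)[0]  # binds look like source:target[:options]
        if source in DANGEROUS_BIND_SOURCES:
            findings.append(f"Binds: {source}")
    for key in ("PidMode", "NetworkMode", "IpcMode"):
        if host.get(key) == "host":
            findings.append(f"{key}: host")
    return findings
```

An empty list doesn't mean the container is safe, only that it avoided the obvious footguns. Treat it as a lint pass, not a security boundary.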

Production Reality Check

High Availability: Multiple Docker engines behind a load balancer? Sure, but containers are tied to specific hosts. When that host dies, your containers are gone. Use Kubernetes if you need real HA.

Monitoring: The /events endpoint (what docker system events reads) sounds great for monitoring until it starts spamming your logs with 10,000 events per second because someone decided to restart all containers at once. Rate limit it or your log bill will bankrupt you.
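Rate limiting the events stream can be as dumb as "pass the first N events each second, count the rest". A sketch of that, written as a generator you can wrap around whatever iterator is feeding you decoded events. The throttle name, the one-second window, and the synthetic {"Type": "throttle"} marker are all my inventions, not part of the API.

```python
import time
from typing import Callable, Iterable, Iterator


def throttle(
    events: Iterable[dict],
    max_per_second: int,
    clock: Callable[[], float] = time.monotonic,
) -> Iterator[dict]:
    """Pass at most max_per_second events per one-second window; count the rest."""
    window_start = clock()
    passed = dropped = 0
    for event in events:
        now = clock()
        if now - window_start >= 1.0:
            if dropped:
                # Report how much was suppressed instead of dropping silently.
                yield {"Type": "throttle", "dropped": dropped}
            window_start, passed, dropped = now, 0, 0
        if passed < max_per_second:
            passed += 1
            yield event
        else:
            dropped += 1
    if dropped:
        yield {"Type": "throttle", "dropped": dropped}
```

The clock parameter exists so you can test it without sleeping. In real use you'd leave the default and log the throttle markers so you know a storm happened.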

When Everything Breaks: Docker daemon crashes are inevitable. Fucking plan for it. Have monitoring that actually detects when the API stops responding. Have restart scripts that work. Have backups of your container configs. Have your resume updated and a different job lined up.
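"Detects when the API stops responding" can start as a timeout around GET /_ping, the endpoint the daemon exposes for exactly this kind of liveness check. Below is a minimal sketch using a raw socket, so it has nothing to break besides the daemon. daemon_alive is a hypothetical name, the 2-second timeout is arbitrary, and reading only the first 64 bytes assumes the status line arrives in the first chunk.

```python
import socket


def daemon_alive(socket_path: str = "/var/run/docker.sock", timeout: float = 2.0) -> bool:
    """Return True only if GET /_ping answers with HTTP 200 within the timeout."""
    request = b"GET /_ping HTTP/1.0\r\nHost: localhost\r\n\r\n"
    try:
        with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            sock.connect(socket_path)
            sock.sendall(request)
            status_line = sock.recv(64).split(b"\r\n", 1)[0]
    except OSError:
        # Missing socket, refused connection, permission denied, or timeout.
        return False
    parts = status_line.split()
    return len(parts) >= 2 and parts[1] == b"200"
```

Run it from cron or your supervisor and alert on a few consecutive failures. A hung daemon usually still accepts the connection, which is why the timeout matters more than the connect.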

This API is powerful but fragile. It'll work great for months, then randomly break in production at 3 AM on a Friday. Now that you understand what you're getting into, let's see how it compares to the alternatives (spoiler: they all suck in different ways).