The Multi-Model Problem We All Had

The multi-model nightmare is real: you're building an agent and end up with GPT-4 for quick responses, o1 for complex questions, and a mess of routing logic that breaks at 3am. You're dealing with different APIs, handling failovers when one is down, and your error handling looks like shit because you're managing two completely different systems.

I spent 6 hours last month debugging why our agent kept timing out - turns out we were hitting o1's rate limits during peak usage and our fallback logic was broken. DeepSeek V3.1 actually fixes this by giving you both modes in one model. Same API endpoints, same error handling, same monitoring. Just flip a switch between deepseek-chat and deepseek-reasoner.
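To make the "flip a switch" part concrete, here's a minimal sketch of what the request side can look like. The two model names are the ones mentioned above; the endpoint URL and payload shape assume DeepSeek's OpenAI-compatible chat completions API, and `build_request` is a made-up helper name, not anything from their SDK.

```python
import json

# Model names come from the post; the URL and body shape are assumptions
# based on DeepSeek's OpenAI-compatible chat completions API.
API_URL = "https://api.deepseek.com/chat/completions"
MODELS = {"fast": "deepseek-chat", "thinking": "deepseek-reasoner"}


def build_request(messages, thinking=False):
    """Return the JSON body for one chat call; only the model name changes."""
    return {
        "model": MODELS["thinking" if thinking else "fast"],
        "messages": list(messages),
    }


if __name__ == "__main__":
    history = [{"role": "user", "content": "Why does this test flake?"}]
    print(json.dumps(build_request(history, thinking=True), indent=2))
```

Everything else (auth header, HTTP client, error handling) stays identical between the two modes, which is the whole point.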

How the Mode Switching Actually Works

The whole thing works through chat templates - basically DeepSeek's way of letting you toggle between 'fast and wrong' and 'slow but actually thinks'. If you run the weights yourself, the template decides whether the reply opens with a <think> block; on the hosted API you just pick the model name. Fast mode responds in 3-4 seconds. Thinking mode takes 30-60 seconds but shows you its entire thought process, assuming it doesn't time out on you. Under the hood it's one set of weights trained to do both, not two models hiding behind a router.

Why DeepSeek's Architecture Actually Works

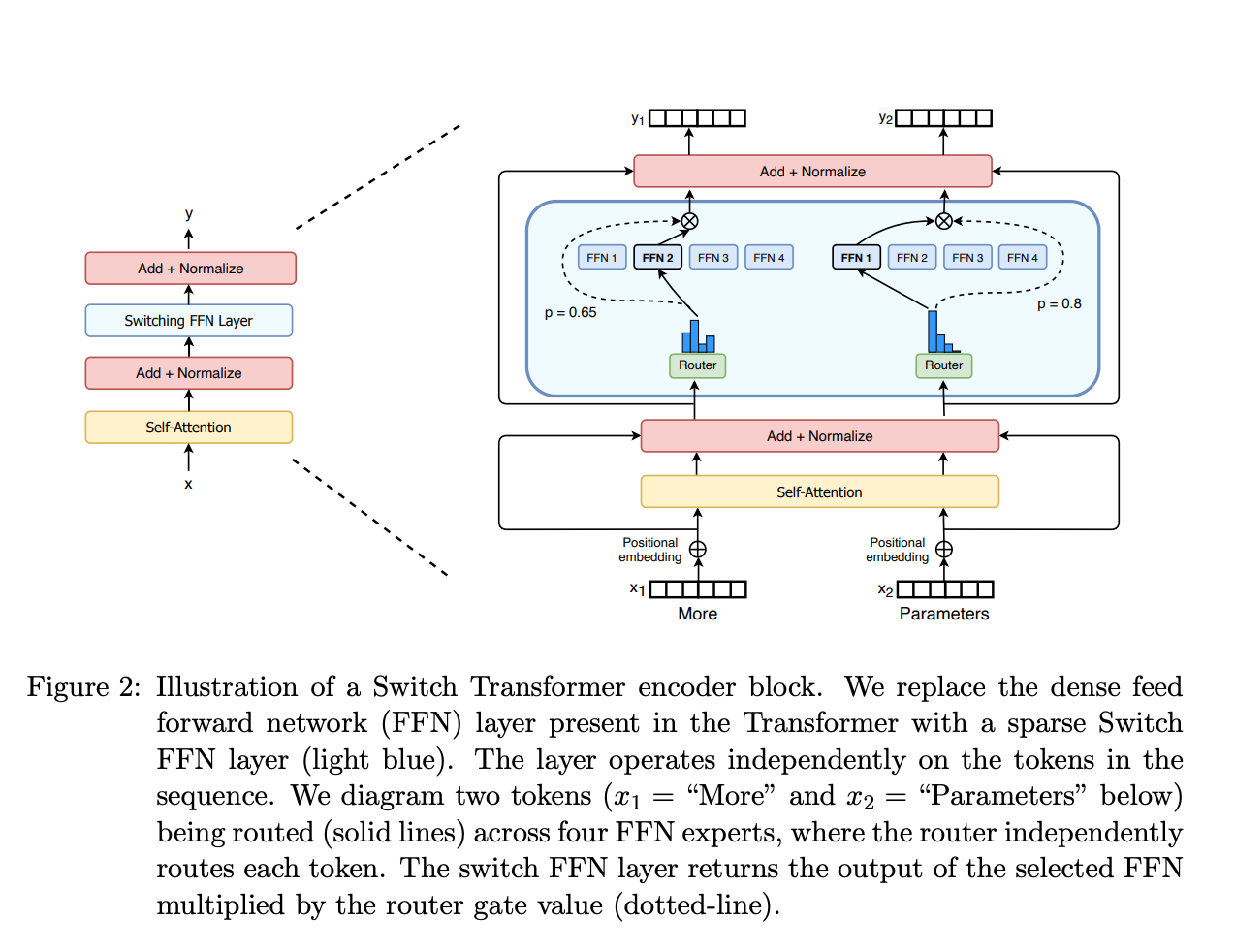

DeepSeek's API randomly returns 502s during peak hours (usually the middle of the night PST - because nothing ever breaks during business hours), and their rate limiting resets at weird times. But when it works, the MoE architecture is solid: 671B parameters total but only 37B active per token (about 5.5% of the model), so you get massive model capacity without the computational nightmare.
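For the random 502s, retrying with exponential backoff and a bit of jitter is the usual move. This is a sketch, not what we literally run: `TransientError` is a hypothetical exception your HTTP layer would raise on a 502/503, and `send` is whatever function actually makes the call.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error your HTTP layer raises for 502/503 responses."""


def call_with_backoff(send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call send() until it succeeds, backing off exponentially with jitter."""
    for attempt in range(max_attempts):
        try:
            return send()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, let the caller see the failure
            # 1s, 2s, 4s... plus up to 50% jitter so clients don't retry in lockstep
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))
```

The `sleep` parameter is only there so you can swap in a fake during tests instead of actually waiting.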

Here's what actually happens:

- Non-thinking mode: 3-second responses, good enough for most queries, will confidently bullshit you on complex stuff

- Thinking mode: 45-60 second responses, shows step-by-step reasoning, catches its own mistakes

- Mode switching: Works mid-conversation, but your UI needs to handle the latency difference

- Context preservation: 128K tokens maintained across switches (actually works, unlike some models)

Look, non-thinking mode is basically GPT-4 speed with DeepSeek quality. Thinking mode is where it shines - you can see exactly where the reasoning goes wrong instead of getting a confident wrong answer. But thinking mode will time out on super complex problems and sometimes gets stuck in reasoning loops.

Benchmarks That Actually Matter

The Aider coding tests show V3.1 hitting a 71.6% pass rate in thinking mode, which edges out Claude Opus (70.6%). That's impressive because Aider tests real code generation, not toy problems. In practice, thinking mode is overkill for simple code completion but crucial for debugging complex logic.

Our thinking mode requests started taking 180+ seconds during peak hours. Turns out DeepSeek throttles response speed based on load, but doesn't document this anywhere. Found out through Discord complaints after we spent 6 hours debugging what looked like connection timeouts - their official support is useless.

SWE-bench Verified results are more interesting: 66.0% vs R1's 44.6%. This benchmark tests fixing real GitHub issues from open source projects. The fact that V3.1 outperforms its pure reasoning predecessor suggests the hybrid approach isn't just convenient - it's actually better.

Cost breakdown: Even with thinking mode's 1.5x pricing, you're still paying about $1 per million tokens vs GPT-4's $30 per million. Our API bill dropped by about 85% after switching from GPT-4 to DeepSeek V3.1, and that's with heavy thinking mode usage.

What This Means for Building Agents

The big advantage is you don't need separate models anymore. Before V3.1, I was running GPT-4 for chat and o1 for complex reasoning, which meant:

- Two different API keys and rate limits to manage

- Separate error handling for each model

- Different response formats and timing expectations

- Users getting confused when response times varied wildly

With V3.1, you get both in one package. Start conversations in fast mode, escalate to thinking mode when needed, and your users aren't waiting 60 seconds for "What's the weather like?"
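Here's roughly what "start fast, escalate when needed" can look like in code. The keyword list is a made-up heuristic for illustration (tune it against your own traffic or replace it with a classifier), and both function names are hypothetical. The important bit is that the message history carries over unchanged when the mode flips.

```python
# Hypothetical keyword heuristic; tune it against your own traffic.
HARD_HINTS = ("debug", "prove", "step by step", "why does", "refactor")


def pick_model(user_message, force_thinking=False):
    """Choose a model name for the next turn of a conversation."""
    text = user_message.lower()
    needs_thinking = force_thinking or any(hint in text for hint in HARD_HINTS)
    return "deepseek-reasoner" if needs_thinking else "deepseek-chat"


def next_request(history, user_message, force_thinking=False):
    """Append the new turn and build the request; history carries across modes."""
    messages = history + [{"role": "user", "content": user_message}]
    return {"model": pick_model(user_message, force_thinking), "messages": messages}
```

The `force_thinking` flag is for a "think harder" button in the UI, so users can escalate themselves when the fast answer looks wrong.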

The catch: Mode switching adds complexity to your agent. You'll spend time deciding when to use thinking mode, figuring out how to handle 60-second delays without users giving up, and planning for when thinking mode just... stops working. Your UI needs to show progress for 60-second responses, and your retry logic needs to handle both modes differently.

Production Deployment Reality

V3.1 simplifies deployment in some ways and complicates it in others. The good: one model to deploy, manage, and monitor. The bad: thinking mode can randomly take 90+ seconds, and you need timeout handling that doesn't suck.

Our production deployment handles this by:

- Default 15-second timeout for non-thinking mode

- 120-second timeout for thinking mode (it will hit this sometimes)

- Automatic fallback to non-thinking if thinking mode fails

- User messaging that sets expectations for thinking mode delays
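A stripped-down sketch of the timeout-plus-fallback logic from that list. The timeouts are the ones above; `call_model(model, messages, timeout)` is a hypothetical wrapper around whatever HTTP client you use, and it's assumed to raise on timeouts and server errors.

```python
TIMEOUTS = {"deepseek-chat": 15, "deepseek-reasoner": 120}  # seconds, from the list above


def ask(call_model, messages, thinking=False):
    """Return (model_used, reply), falling back to fast mode if thinking fails.

    call_model(model, messages, timeout) is a hypothetical function wrapping
    your HTTP client; it should raise on timeouts and server errors.
    """
    if not thinking:
        reply = call_model("deepseek-chat", messages, TIMEOUTS["deepseek-chat"])
        return "deepseek-chat", reply
    try:
        reply = call_model("deepseek-reasoner", messages, TIMEOUTS["deepseek-reasoner"])
        return "deepseek-reasoner", reply
    except Exception:
        # Thinking mode timed out or errored: degrade rather than fail the turn.
        reply = call_model("deepseek-chat", messages, TIMEOUTS["deepseek-chat"])
        return "deepseek-chat", reply
```

Returning the model that actually answered lets the UI tell the user when they got the fast answer instead of the thought-through one.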

The cost savings are real - we went from $800/month in GPT-4 costs to $120/month with DeepSeek V3.1, including thinking mode usage. But the engineering time to handle dual modes properly took about a week.
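If you want to sanity-check the savings against your own bills, the arithmetic is one line. The two dollar figures are the monthly numbers quoted above; `percent_reduction` is just an illustrative helper.

```python
def percent_reduction(before, after):
    """Percentage drop from one monthly bill to another."""
    return (before - after) / before * 100


# Monthly figures quoted above, in USD.
print(f"{percent_reduction(800, 120):.0f}% lower")
```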