The compiler is your first and most important performance tool. I'm using GCC 12 and Clang 15 on my dev box - the optimization passes have been pretty stable since GCC 10, so unless you're hitting specific bugs, the version doesn't matter much. These compilers contain decades of optimization research, but they're still dumb as rocks when it comes to understanding your actual intent. You need to understand what they can and can't do, and how to give them the information they need without them shitting the bed.

Optimization Levels: The Good, The Bad, and The Debugging Nightmare

-O0 (No optimization): What you get by default. Produces slow, debugger-friendly code that directly translates your C statements to assembly. Every variable gets its own memory location, every calculation happens in source order. Perfect for debugging, terrible for performance.

-O1 (Basic optimization): Eliminates obvious waste without changing program structure much. Removes dead code, eliminates common subexpressions, does basic register allocation. Modest performance gains, and still reasonably debuggable, although some variables will show up as optimized out in the debugger. This is what you want during development when you need both speed and debuggability.

-O2 (Aggressive optimization): The sweet spot for most production code. Enables aggressive loop optimizations, function inlining, and instruction scheduling. GCC enables about 50 different optimization passes at this level. Can break poorly written code that relies on undefined behavior.

-O3 (Maximum optimization): Adds more aggressive auto-vectorization and inlining (GCC 12 and Clang already do some cost-conservative vectorization at -O2). Can make your binary significantly larger and sometimes slower due to instruction cache pressure. I've hit compiler bugs where higher optimization levels made things slower instead of faster. Use it only when benchmarks prove it helps your specific workload, and be prepared to debug weird shit.

-Ofast: Enables -O3 plus fast math optimizations that violate IEEE floating-point standards. Will break code that depends on precise floating-point behavior, but can dramatically speed up numerical computations.

-Os (Optimize for size): Prioritizes smaller code size over speed. Essential for embedded systems with memory constraints. Often faster than -O2 on systems with small instruction caches.

The Flags That Actually Matter

Here's what actually works. I've wasted enough time on broken optimization flags:

```bash
# Development builds
gcc -O1 -g3 -Wall -Wextra -fno-omit-frame-pointer

# Production builds
gcc -O2 -DNDEBUG -march=native -mtune=native \
    -flto -fuse-linker-plugin

# Performance-critical inner loops
gcc -O3 -march=native -mtune=native -funroll-loops \
    -fprefetch-loop-arrays -ffast-math
```

-march=native -mtune=native: Optimizes for your specific CPU architecture. Can provide 10-20% performance improvements by using newer instructions. (Strictly speaking, -march=native already implies -mtune=native, so the second flag is redundant but harmless.) Don't use this for distributable binaries unless you control the deployment environment.

-flto (Link-Time Optimization): Enables cross-translation-unit optimizations. The compiler can inline functions across source files and eliminate dead code globally. LTO can provide 5-15% performance improvements with minimal effort using whole-program analysis. Fair warning: LTO occasionally breaks in weird ways with large static data, and the error messages are usually cryptic as hell.

-ffast-math: Trades IEEE compliance for speed. Allows the compiler to reorder floating-point operations and assume no NaN/infinity values. Can break numerical code, but essential for high-performance computing.
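Why does "reordering" matter so much? Floating-point addition isn't associative, so the regrouping that -ffast-math permits can change the answer, not just the speed. A minimal sketch (the function names are made up for illustration), assuming it's compiled without -ffast-math so strict IEEE 754 semantics apply:

```c
/* The same three additions, grouped two different ways.
   Under strict IEEE 754 rules the compiler must keep the grouping
   you wrote; -ffast-math lets it regroup them. */
double sum_left(double a, double b, double c)
{
    return (a + b) + c;   /* large values cancel first, then add c */
}

double sum_right(double a, double b, double c)
{
    return a + (b + c);   /* c is added to a huge value first */
}
```

With a = 1e20, b = -1e20, c = 1.0, sum_left returns 1.0, while sum_right returns 0.0: adding 1.0 to -1e20 rounds straight back to -1e20, because 1.0 is far smaller than the spacing between adjacent doubles near 1e20. Code that depends on one of those answers is exactly the numerical code -ffast-math can break.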

Profile-Guided Optimization: The Secret Weapon

Profile-Guided Optimization (PGO) uses runtime profiling data to inform compiler optimizations. It's the closest thing to magic in performance optimization - often providing 10-30% improvements with zero code changes.

```bash
# Step 1: Build with profiling instrumentation
gcc -O2 -fprofile-generate program.c -o program

# Step 2: Run a typical workload to collect profile data
./program < typical_input_data

# Step 3: Rebuild using the profile data
gcc -O2 -fprofile-use program.c -o program_optimized
```

PGO works by telling the compiler which branches are taken most frequently, which functions are hot, and how data flows through your program. The compiler uses this information for better instruction scheduling, branch prediction optimization, and function layout.
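To make "which branches are taken most frequently" concrete, here's a hypothetical hot function of the kind PGO helps with. Nothing in the source says which branch is the common one; that depends entirely on the input. The function name and the byte classes are illustrative assumptions, not taken from any real parser:

```c
#include <stddef.h>

/* Counts of each byte class seen in a buffer (illustrative only). */
struct byte_counts {
    size_t digits;
    size_t letters;
    size_t other;
};

/* Classifies every byte in buf. Which branch dominates depends on the
   data: numeric input mostly takes the first branch, prose mostly the
   second. With -fprofile-use, the compiler can use the recorded
   branch frequencies to lay out the hot path as the fall-through. */
struct byte_counts classify_bytes(const char *buf, size_t len)
{
    struct byte_counts c = {0, 0, 0};
    for (size_t i = 0; i < len; i++) {
        unsigned char ch = (unsigned char)buf[i];
        if (ch >= '0' && ch <= '9') {
            c.digits++;
        } else if ((ch >= 'a' && ch <= 'z') || (ch >= 'A' && ch <= 'Z')) {
            c.letters++;
        } else {
            c.other++;
        }
    }
    return c;
}
```

That input dependence cuts both ways: a profile trained on mostly numeric data can steer the layout toward the wrong branch if production traffic is mostly text.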

I once used PGO on a JSON parser and got a decent performance improvement - I think it was around 20-something percent, mostly from better branch prediction. The compiler reorganized the code so that the most common parsing paths had better instruction cache behavior. Here's the brutal reality though: PGO works great until your training data changes, then suddenly everything is way slower and you have no idea why. I've debugged production incidents where PGO-optimized code performed like shit because the real workload didn't match the training profiles.

Link-Time Optimization: Whole-Program Analysis

LTO defers optimization until link time, allowing the compiler to see your entire program at once. This enables interprocedural optimizations impossible during normal compilation:

- Cross-module inlining: Inline functions defined in other source files

- Dead code elimination: Remove unused functions across the entire program

- Better register allocation: Global register usage optimization

- Constant propagation: Propagate constants across translation units
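A sketch of the cross-module inlining case. Pretend the two halves below live in separate files, a hypothetical util.c and main.c. Compiled separately without LTO, the compiler building main.c only sees the declaration of scale, so it has to emit a real call on every loop iteration; with -flto on both the compile and link steps, it can inline scale and fold the constant multiply into the loop. (Pasted into a single file, as here, the compiler can inline it anyway, which is exactly the visibility LTO restores across files.)

```c
/* ---- util.c (hypothetical) ---- */
int scale(int x)
{
    return x * 3;
}

/* ---- main.c (hypothetical) ---- */
/* Without LTO, this declaration is all main.c knows about scale(). */
int scale(int x);

/* Sums scale(i) for i in [0, n). Across separate translation units,
   only link-time optimization lets the compiler inline scale() here. */
long sum_scaled(int n)
{
    long total = 0;
    for (int i = 0; i < n; i++) {
        total += scale(i);
    }
    return total;
}
```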

The performance impact varies wildly by codebase. I've seen programs get 5% faster and others get 25% faster, depending on how much cross-module optimization opportunity exists. The downside? Build times can go from 5 minutes to 45 minutes when you enable LTO. Your CI/CD pipeline will hate you, your developers will hate you, and ops will definitely hate you when every build takes forever.

When Optimization Goes Wrong

Debug builds expose different bugs than release builds. I've debugged production crashes that only happened with -O2 because the optimizer exploited undefined behavior that happened to "work" in debug builds. Always test optimized builds extensively.
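A classic example of code that "works" unoptimized and quietly changes meaning at -O2. Signed integer overflow is undefined behavior in C, so the optimizer is allowed to assume x + 1 never wraps and fold the first check below to a constant 0. The function names are made up for illustration:

```c
#include <limits.h>

/* BROKEN: relies on signed overflow wrapping, which is undefined
   behavior. Unoptimized code on a two's-complement machine may happen
   to wrap and return 1 for INT_MAX; an optimizing compiler is free to
   compile the whole function as "return 0". */
int will_overflow_ub(int x)
{
    return x + 1 < x;
}

/* Well-defined: compare against the limit before doing arithmetic. */
int will_overflow(int x)
{
    return x == INT_MAX;
}
```

The fix is to test against the limit instead of performing the overflow and inspecting the result.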

Aggressive optimization can hurt performance. -O3 can make programs slower by increasing code size and instruction cache pressure. I've seen 10% performance regressions from too much inlining. Always benchmark your specific workload, because the compiler's idea of "optimization" might be your performance nightmare.

Architecture-specific optimizations aren't portable. Code compiled with -march=native on a Zen 4 processor can use AVX-512 instructions that crash CPUs without AVX-512 support with a spectacular SIGILL. I've seen production deployments fail because someone compiled with -march=native on their shiny new dev machine, then deployed to servers running 5-year-old Xeons. Use generic optimization flags for distributable software unless you enjoy 3am emergency calls.
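If you want a quick check of what a given box supports before shipping it a -march=native build, GCC and Clang on x86 provide the __builtin_cpu_supports builtin. A minimal sketch, assuming an x86 target and using AVX-512F as the example feature; on other architectures it simply reports 0:

```c
/* Returns 1 if the CPU running this code supports AVX-512F, else 0.
   __builtin_cpu_supports is a GCC/Clang builtin for x86 targets, so
   other architectures fall back to reporting 0. */
int cpu_has_avx512f(void)
{
#if defined(__x86_64__) || defined(__i386__)
    return __builtin_cpu_supports("avx512f") != 0;
#else
    return 0;
#endif
}
```

A 1 on the dev machine and a 0 on the production servers is a strong hint that a -march=native binary from that dev machine may hit SIGILL there. AVX-512 is only one example; other instruction set extensions can cause the same failure.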

The Compiler Isn't Magic

Modern compilers are incredibly sophisticated, but they're not miracle workers. They can't fix algorithmic complexity, they can't optimize away inherently cache-hostile access patterns, and they can't vectorize code with complex control flow.

What compilers excel at:

- Eliminating redundant calculations

- Optimizing register usage

- Scheduling instructions for modern pipelines

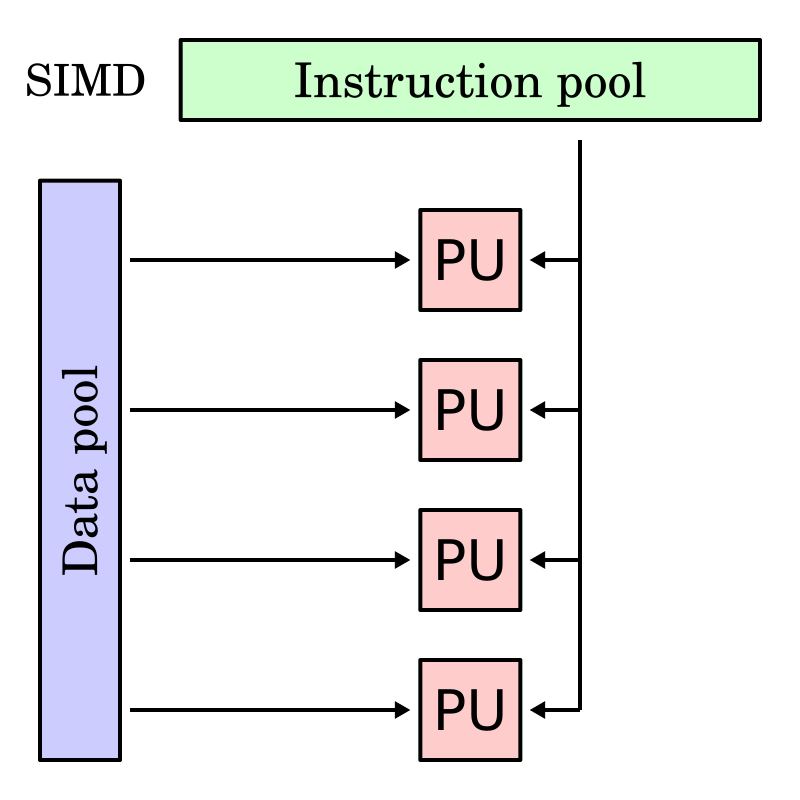

- Auto-vectorizing simple loops

- Inlining function calls

What compilers struggle with:

- Understanding higher-level data structure relationships

- Optimizing across abstraction boundaries

- Predicting cache behavior for complex access patterns

- Parallelizing loops with dependencies

- Optimizing for specific hardware quirks
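Two loops that sit on either side of the line between "auto-vectorizing simple loops" and "parallelizing loops with dependencies". The first has independent iterations, so GCC and Clang will typically vectorize it at -O3; the second has a loop-carried dependency (each element needs the previous result), which blocks straightforward vectorization. A sketch; the function names are illustrative:

```c
#include <stddef.h>

/* Independent iterations: out[i] depends only on in[i].
   A good auto-vectorization candidate. */
void scale_array(float *out, const float *in, float k, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        out[i] = in[i] * k;
    }
}

/* Loop-carried dependency: a[i] needs the freshly updated a[i - 1],
   so iterations can't simply run side by side in SIMD lanes. */
void prefix_sum(float *a, size_t n)
{
    for (size_t i = 1; i < n; i++) {
        a[i] += a[i - 1];
    }
}
```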

The best performance comes from writing code that's compiler-friendly: simple control flow, predictable memory access patterns, and clear data dependencies. Give the compiler clean, straightforward code and it will generate surprisingly fast machine code.
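One concrete way to make data dependencies clear in C is the C99 restrict qualifier. Without it, the compiler must assume a store to dst[i] might modify a or b, so it either emits runtime overlap checks or gives up on vectorizing. A minimal sketch with an illustrative function name:

```c
#include <stddef.h>

/* restrict promises that dst, a and b never overlap, so the compiler
   can vectorize the loop without checking for aliasing at runtime. */
void add_arrays(float *restrict dst, const float *restrict a,
                const float *restrict b, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        dst[i] = a[i] + b[i];
    }
}
```

The promise is on you: passing overlapping buffers to a function declared this way is undefined behavior, which puts you right back in the optimization-goes-wrong territory above.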

Practical Optimization Workflow

- Start with -O2 for all production code unless you have specific reasons to use something else

- Add -march=native if you control the deployment environment

- Enable LTO (-flto) for final production builds

- Try PGO for performance-critical applications with representative workloads

- Benchmark everything - optimization flags interact in unexpected ways

The most important lesson: measure, don't guess. What works for one codebase may hurt another. The compiler has hundreds of optimization flags, but only a handful matter for any specific program. Find the combination that works for your workload and stick with it.

OK, compiler flags covered. Now for the fun part - what actually makes things faster.