Why Confluence Cloud Backup Is Broken by Design

Atlassian's Enterprise backup features are only available if you're paying Enterprise prices, and even then they're garbage. Found this out the hard way when a client paying $800/user annually discovered they still couldn't restore individual pages.

The fundamental problems with native Confluence Cloud backup:

- Enterprise-only access: Standard and Premium customers get exactly nothing for automated backup

- 14-day expiration: Backups expire after 14 days in Atlassian storage - good luck with that monthly compliance audit

- 48-hour manual backup limits: You can only create manual backups every 48 hours, because apparently data disasters follow Atlassian's schedule

- No application-level restore: You get XML dumps, not the ability to restore specific pages or spaces

- Attachment limitations: File attachments aren't included in regular backups unless you specifically request them
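To make those limits concrete, here's a rough Python sketch of what the 14-day expiration and the 48-hour manual limit mean in practice. The two constants come from the list above; the function names are made up for illustration and nothing here talks to an Atlassian API.

```python
from datetime import datetime, timedelta

# Limits taken from the list above.
RETENTION = timedelta(days=14)
MIN_MANUAL_INTERVAL = timedelta(hours=48)


def backup_still_available(created: datetime, now: datetime) -> bool:
    """True if a backup created at `created` has not yet expired."""
    return now - created < RETENTION


def earliest_next_manual_backup(last_manual: datetime) -> datetime:
    """Earliest moment another manual backup can be started."""
    return last_manual + MIN_MANUAL_INTERVAL


# A backup from the start of the month is gone by the end-of-month audit.
audit_day = datetime(2024, 3, 31, 9, 0)
print(backup_still_available(datetime(2024, 3, 1, 9, 0), audit_day))  # False
print(earliest_next_manual_backup(datetime(2024, 3, 30, 9, 0)))       # 2024-04-01 09:00:00
```

If the monthly audit asks for the state of a space on the 1st, the answer under these limits is that the backup no longer exists.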

Watched this disaster happen in real time. Contractor was supposed to clean up old test spaces, deleted production engineering docs instead. Backup was 5 days old because manual backups on weekends? Yeah, right. Spent 3 days rebuilding deployment procedures from whatever screenshots people had in Slack.

Shared Responsibility Model: Where It All Goes Wrong

Here's Atlassian's responsibility model in plain English: they keep the servers running, everything else is your fucking problem. IT teams think "Cloud" means they can forget about backups. Spoiler alert: they can't.

What Atlassian protects:

- Hardware failures and infrastructure outages

- Database corruption and system-level backups

- Platform availability and disaster recovery

- Basic platform uptime (the 99.9% SLA is an availability promise, not a promise to recover your content)

What you're responsible for:

- User data loss from accidental deletion or misconfiguration

- Marketplace app failures that corrupt content

- Ransomware or security breaches affecting content

- Compliance retention requirements beyond 14 days

- Application-level restore and business continuity

Atlassian's backup is garbage for real enterprise needs. The gap between what Atlassian protects and what you're responsible for gets filled with expensive consulting hours and user frustration when things break.

Data Center Backup: More Control, More Problems

Confluence Data Center disaster recovery gives you complete control over backup strategies, but that control comes with operational complexity most teams underestimate.

Data Center backup advantages:

- Full system backups: Database, file system, configurations, and marketplace apps

- Flexible scheduling: Run backups as frequently as needed

- Retention control: Keep backups for years if compliance requires it

- Granular restore: Restore individual spaces, pages, or system configurations

- Integration options: Connect with enterprise backup solutions like Veeam or Commvault

Data Center backup reality check:

- Database consistency: Backups taken while Confluence is running can capture a half-written state and produce corrupt restores

- File system coordination: Database and attachment storage must be backed up simultaneously

- Storage requirements: Full backups of large Confluence instances consume massive storage

- Restore testing: Regular restore testing is essential but rarely done properly

- Clustering complexity: Multi-node setups require sophisticated backup orchestration

Had this nightmare at a healthcare client running Confluence 7.18.1 on Data Center. Main DB server died at 2:15am during the backup window. Backup had been timing out for 6 days because some idiot uploaded 8GB of MRI scans to a procedure page. PostgreSQL logs showed ERROR: canceling statement due to lock timeout but the backup script still returned exit code 0.

Lost 4 days of critical OR procedure updates. IT team worked straight through the weekend trying to reconstruct shit from email attachments and whatever doctors had saved locally on their phones.
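The exit-code-0 problem is avoidable. Here's a minimal Python sketch of a step runner that refuses to call a backup successful if a command exits non-zero or writes an ERROR line to stderr. `run_step` and `BackupStepFailed` are names invented for this example, and the "dump" is a stand-in command that just imitates the log line above, not a real pg_dump call.

```python
import subprocess
import sys


class BackupStepFailed(Exception):
    """Raised when a backup step fails or logs an error."""


def run_step(name: str, cmd: list[str]) -> str:
    """Run one backup step; fail on a non-zero exit code OR an ERROR line in stderr."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        raise BackupStepFailed(f"{name}: exit code {result.returncode}")
    if "ERROR:" in result.stderr:
        raise BackupStepFailed(f"{name}: error logged despite exit code 0")
    return result.stdout


# Stand-in for a dump command that logs an error but still exits 0.
fake_dump = [sys.executable, "-c",
             "import sys; sys.stderr.write('ERROR: canceling statement due to lock timeout\\n')"]
try:
    run_step("database dump", fake_dump)
except BackupStepFailed as exc:
    print(f"backup failed: {exc}")
```

In a real script the `except` branch should exit non-zero and page someone, so a silent six-day failure streak can't happen.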

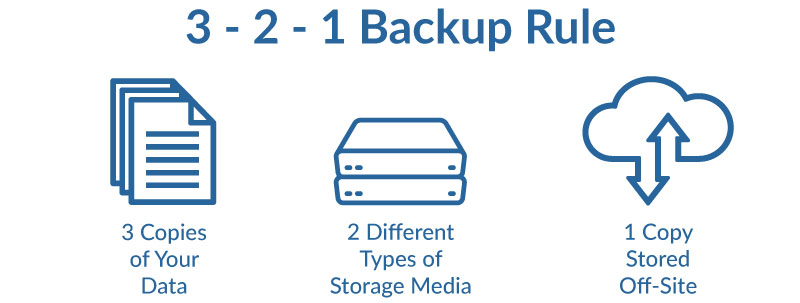

Database vs. Application-Level Backups

Most teams back up the database and call it done. But a Confluence instance consists of database content, file attachments, search indexes, configurations, and marketplace app data. Missing any component creates incomplete restores.

Database-only backup approach:

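# MySQL backup example (shell command, not SQL)

# --single-transaction only gives a consistent snapshot for transactional (InnoDB) tables
mysqldump --single-transaction --routines --triggers confluence > confluence_backup.sql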

Problems with database-only backups:

- Attachments stored separately aren't included

- Search indexes must be rebuilt (hours for large instances)

- Marketplace app configurations and data missing

- System settings and customizations not captured

Application-level backup approach:

- Use Confluence's built-in XML backup for complete content export

- Back up attachment storage directories separately

- Export configuration settings and marketplace app data

- Document custom integrations and system modifications

Atlassian's backup guide says use application-level backups but conveniently skips the part where you need to coordinate database snapshots, file system backups, and search index exports. Because that would be helpful.
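One cheap way to keep the pieces coordinated is to treat them as a single backup set and write a manifest only when every piece is present. The sketch below is an assumption about how you might do that in Python, not anything Atlassian ships. The file names `confluence_backup.sql` and `attachments.tar.gz` are placeholders for whatever your own jobs produce.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Checksum a file in chunks so large dumps don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(backup_dir: Path, required: list[str]) -> dict:
    """Record every required component; refuse to write a manifest if one is missing."""
    missing = [name for name in required if not (backup_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"incomplete backup set, missing: {missing}")
    manifest = {
        name: {"bytes": (backup_dir / name).stat().st_size,
               "sha256": sha256_of(backup_dir / name)}
        for name in required
    }
    (backup_dir / "manifest.json").write_text(json.dumps(manifest, indent=2))
    return manifest


# Usage with placeholder files standing in for the two halves of a backup set.
with tempfile.TemporaryDirectory() as tmp:
    backup_dir = Path(tmp)
    (backup_dir / "confluence_backup.sql").write_text("-- dump")
    (backup_dir / "attachments.tar.gz").write_bytes(b"archive")
    manifest = write_manifest(backup_dir, ["confluence_backup.sql", "attachments.tar.gz"])
    print(sorted(manifest))
```

A restore job can then treat any backup directory without a `manifest.json` as incomplete and skip it, instead of finding out halfway through a restore.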

The Third-Party Backup Ecosystem

Enterprise-grade Confluence backup requires third-party solutions that understand the application architecture and provide features Atlassian doesn't.

Marketplace Backup Solutions

Revyz Data Manager is the leading Confluence Cloud backup solution with enterprise features:

- Automated daily backups with customizable retention policies

- Granular restore capabilities for spaces, pages, and individual content

- Cross-instance migration for moving content between Confluence instances

- Compliance reporting with audit trails for backup and restore operations

- External storage integration with AWS S3, Azure Blob, and Google Cloud

Revyz costs around $3-8 per user monthly. For 500 users, you're looking at something like $18k-48k annually just for backup capability that should be included with Confluence.
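The arithmetic behind that range, as a quick Python check. The per-user prices are the rough figures quoted above, not a vendor price list, and `annual_license_cost` is just a helper for this example.

```python
def annual_license_cost(users: int, per_user_monthly: float) -> float:
    """Annual licensing cost for a per-user, per-month price."""
    return users * per_user_monthly * 12


low = annual_license_cost(500, 3)
high = annual_license_cost(500, 8)
print(f"${low:,.0f} - ${high:,.0f} per year")  # $18,000 - $48,000 per year
```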

Alternative marketplace solutions:

- GitProtect - Multi-platform backup with version control integration

- HYCU - Enterprise backup with automated restore testing

- Keepit - SaaS data protection with unlimited retention

- Acronis Cyber Backup - Comprehensive backup with anti-ransomware protection

- Carbonite Safe - Cloud backup for business applications

- Druva inSync - Enterprise endpoint and cloud application backup

Enterprise Backup Integration

Large organizations integrate Confluence backup with existing enterprise solutions:

Veeam Backup & Replication for Data Center:

- Application-aware backups that understand Confluence dependencies

- Automated restore testing to verify backup integrity

- Integration with existing backup infrastructure and monitoring

- Disaster recovery orchestration with failover automation

Commvault Complete Backup & Recovery:

- Enterprise-grade retention and compliance features

- Cross-platform backup with cloud and on-premises support

- Advanced deduplication and compression for storage efficiency

- Legal hold capabilities for regulatory requirements

The integration complexity means most enterprises either overpay for backup features they don't need or under-invest and discover the gaps during a disaster.

Additional enterprise backup resources:

- IBM Spectrum Protect - Enterprise data protection with advanced deduplication

- Dell PowerProtect - Data protection software for diverse workloads

- Rubrik backup solutions - Modern backup and recovery for hybrid cloud environments

- Cohesity DataPlatform - Next-generation backup and data management

Disaster Recovery Planning That Actually Works

Backup technology is only useful if you can restore data when needed. Most organizations focus on backup frequency and retention but ignore restore procedures and business continuity planning.

Recovery Time and Point Objectives

Recovery Time Objective (RTO): How long can you be down before business impact becomes unacceptable?

Recovery Point Objective (RPO): How much data loss can you tolerate?

Atlassian's published RTO/RPO targets vary by backup size:

- Small instances (under 30GB): 12-hour RTO, 24-hour RPO

- Large instances (over 120GB): More than 24 hours, contact support for assistance

These numbers assume everything works perfectly. Real disasters are complete chaos.

Enterprise RTO/RPO planning:

- Critical documentation (runbooks, procedures): Around 1-hour RTO, 4-hour RPO if you're lucky

- General collaboration content: 24-hour RTO, 24-hour RPO or whatever

- Archive/historical content: 1-week RTO, 1-week RPO (nobody cares)

- Development documentation: 4-hour RTO, 8-hour RPO (devs will complain if longer)
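Targets like these are only useful if something checks them. Here's a small Python sketch that flags any content tier whose newest backup is older than its RPO. The tier names and the `TARGETS` table are my own encoding of the list above, and the timestamps are invented.

```python
from datetime import datetime, timedelta

# RTO/RPO targets per content tier, in hours, taken from the list above.
TARGETS = {
    "critical": {"rto": 1, "rpo": 4},
    "development": {"rto": 4, "rpo": 8},
    "general": {"rto": 24, "rpo": 24},
    "archive": {"rto": 168, "rpo": 168},
}


def rpo_violations(last_backup: dict[str, datetime], now: datetime) -> list[str]:
    """Return the tiers whose newest backup is older than the tier's RPO."""
    late = []
    for tier, targets in TARGETS.items():
        age = now - last_backup[tier]
        if age > timedelta(hours=targets["rpo"]):
            late.append(tier)
    return late


now = datetime(2024, 5, 6, 12, 0)
last_backup = {
    "critical": now - timedelta(hours=6),     # older than the 4-hour RPO
    "development": now - timedelta(hours=6),
    "general": now - timedelta(hours=6),
    "archive": now - timedelta(days=3),
}
print(rpo_violations(last_backup, now))  # ['critical']
```

A six-hour-old backup is fine for general content and a miss for runbooks, which is exactly why one backup schedule for everything rarely works.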

Disaster Scenarios and Response Plans

Scenario 1: User Error Data Loss

- Trigger: User accidentally deletes critical space or pages

- Detection: User reports missing content, space administrators notice

- Response: Restore specific content from most recent backup

- Timeline: 1-4 hours depending on backup solution capabilities

Scenario 2: Marketplace App Data Corruption

- Trigger: Marketplace app update corrupts page content or metadata

- Detection: Users report formatting issues, broken macros, or missing functionality

- Response: Identify affected content, restore from pre-update backup

- Timeline: 4-12 hours including impact assessment and selective restore

Scenario 3: Security Breach Content Compromise

- Trigger: Unauthorized access leads to data manipulation or deletion

- Detection: Security monitoring alerts, user reports of unauthorized changes

- Response: Isolate affected instances, forensic analysis, restore clean data

- Timeline: 24-72 hours including security investigation and full restore

Scenario 4: Infrastructure Outage (Data Center)

- Trigger: Hardware failure, natural disaster, or facility outage

- Detection: Monitoring systems, user access failures

- Response: Activate disaster recovery site, restore from offsite backups

- Timeline: 4-24 hours depending on DR site readiness and data volume

Restore Testing and Validation

Most backup solutions aren't tested until disasters strike. Regular restore testing identifies problems before they become business-critical.

Monthly restore testing process:

- Select representative content: Different content types, spaces, and time periods

- Perform restore to isolated environment: Separate instance or staging environment

- Validate content integrity: Check attachments, macros, links, and formatting

- Document issues and gaps: Failed restores, missing content, performance problems

- Update procedures: Incorporate lessons learned into disaster response plans

Quarterly disaster recovery drills:

- Full system restore simulation: Complete instance rebuild from backup

- Cross-team coordination: IT, business users, and management involvement

- Business continuity validation: Verify critical processes can continue during outage

- Communication plan testing: Internal and external stakeholder notification

Found out the hard way why testing matters. Client running Confluence 8.1.2 thought their backups were solid. Test restore revealed they'd been missing 40% of attachments for 3 months. Backup window was 2 hours, but 300GB of PowerPoints and CAD files took 6+ hours to transfer to S3. Job timed out every night with ERROR: Connection reset by peer (104) but the script only checked if the DB dump worked.

Nobody noticed until I restored to a test instance and half the engineering diagrams were broken links.
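The check that would have caught this is boring: compare an inventory of attachments on the source with what actually landed in the restore. A minimal Python sketch, assuming you can produce a path-to-size mapping for both sides (how you collect it depends on your storage; the inventories below are made up):

```python
def missing_attachments(source: dict[str, int], restored: dict[str, int]) -> list[str]:
    """Paths that are absent from the restore or whose size differs from the source."""
    return sorted(
        path for path, size in source.items()
        if restored.get(path) != size
    )


def missing_ratio(source: dict[str, int], restored: dict[str, int]) -> float:
    """Fraction of source attachments that did not survive the restore."""
    if not source:
        return 0.0
    return len(missing_attachments(source, restored)) / len(source)


# Hypothetical inventories: relative path -> size in bytes.
source = {"eng/diagram.vsdx": 4096, "eng/pump.dwg": 8192, "ops/runbook.pdf": 1024,
          "ops/deck.pptx": 2048, "hr/policy.pdf": 512}
restored = {"eng/diagram.vsdx": 4096, "ops/runbook.pdf": 1024, "hr/policy.pdf": 512}

print(missing_attachments(source, restored))             # ['eng/pump.dwg', 'ops/deck.pptx']
print(f"{missing_ratio(source, restored):.0%} missing")  # 40% missing
```

Size comparison is a cheap first pass. Checksums are stricter if you can afford to compute them.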

Compliance and Legal Requirements

Enterprise Confluence deployments must meet regulatory requirements that native backup solutions don't address.

Data Retention and Legal Hold

GDPR Article 17 Right to Erasure:

- Users can request deletion of personal data from Confluence

- Backup systems must support selective deletion without corrupting other data

- Retention policies must respect regional data protection requirements

SOX Section 404 Internal Controls:

- Publicly traded companies must maintain audit trails for changes to documentation that supports financial reporting

- Backup and restore activities require logging and approval workflows

- Retention periods often exceed Atlassian's 14-day backup expiration

HIPAA Data Protection:

- Healthcare organizations must encrypt backups and control access to PHI

- Business Associate Agreements (BAAs) with backup vendors required

- Breach notification requirements include backup data compromise

Compliance reality check: Most Confluence deployments are fucked from a compliance perspective because nobody thought about regulatory requirements when setting up backups. Retrofitting compliance costs 10x more than doing it right the first time.
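Retention and legal hold are easy to get wrong in a cleanup script, so here's a small Python sketch of the rule "delete only what's past retention and not on hold". The `Backup` class and the seven-year figure are illustrative assumptions, not a statement of what any regulation requires.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Backup:
    name: str
    taken_on: date
    legal_hold: bool = False


def deletable(backups: list[Backup], today: date, retention_days: int) -> list[str]:
    """Names of backups past retention that are NOT under legal hold."""
    cutoff = today - timedelta(days=retention_days)
    return [b.name for b in backups if b.taken_on < cutoff and not b.legal_hold]


backups = [
    Backup("2017-q1", date(2017, 3, 31)),
    Backup("2017-q2", date(2017, 6, 30), legal_hold=True),
    Backup("2024-q1", date(2024, 3, 31)),
]
# Seven years is an example retention period only.
print(deletable(backups, today=date(2025, 1, 1), retention_days=7 * 365))  # ['2017-q1']
```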

International Data Residency

Confluence Cloud operates in multiple AWS regions, but backup data location isn't always obvious:

Atlassian has data centers in:

- US, EU, Australia - those I'm sure about

- Germany, Singapore, maybe Canada? You'd have to check

- Other regions might exist but I don't know for sure

Third-party backup data residency:

- Many marketplace backup solutions store data in US-based cloud storage

- Cross-border data transfer may violate local data protection laws

- Some solutions offer region-specific storage but at premium pricing

Organizations discover data residency violations during compliance audits when backup data crosses borders they didn't expect.
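You can catch this before the auditors do by writing the policy down and checking it. A minimal Python sketch follows. The business units, the allowed-region sets, and the "actual" locations are all hypothetical, and only the region identifiers follow AWS naming.

```python
# Hypothetical policy: where backup data for each business unit is allowed to live.
ALLOWED_REGIONS = {
    "eu-subsidiary": {"eu-central-1", "eu-west-1"},
    "us-hq": {"us-east-1", "us-west-2", "eu-west-1"},
}


def residency_violations(backup_locations: dict[str, str]) -> dict[str, str]:
    """Map each business unit to its backup region when that region is not allowed."""
    return {
        unit: region
        for unit, region in backup_locations.items()
        if region not in ALLOWED_REGIONS.get(unit, set())
    }


# Where the (hypothetical) backup vendor actually stores each unit's data.
actual = {"eu-subsidiary": "us-east-1", "us-hq": "us-east-1"}
print(residency_violations(actual))  # {'eu-subsidiary': 'us-east-1'}
```

Units with no policy entry are reported as violations on purpose, since "nobody decided" is not the same as "allowed".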

Data residency and privacy resources:

- Microsoft Azure Data Residency - Global cloud data location guidance

- AWS Data Protection and Privacy - Cloud data protection compliance framework

- Google Cloud Security - Enterprise data governance and compliance tools

- Privacy by Design Framework - Privacy engineering principles for data systems

Cost Analysis: What Confluence Backup Actually Costs

Backup costs go far beyond software licensing. Real enterprise backup strategies require infrastructure, staff time, storage, and operational overhead.

Cloud Backup Cost Breakdown (500 users)

Atlassian native backup (Enterprise only):

- Included with Enterprise licensing ($800-1200/user annually)

- 14-day retention, 48-hour manual backup limits

- Amazon S3 storage costs ($50-200 monthly for backup storage)

- Total: $400k-600k annually (but you're paying for Enterprise features, not just backup)

Marketplace backup solution (Revyz example):

- Software licensing: $3-8/user monthly ($18k-48k annually)

- External storage costs: $100-500 monthly

- Implementation and training: $10k-25k one-time

- Total: roughly $19k-54k annually, plus $10k-25k one-time implementation

DIY scripted backup approach:

- Developer time: 2-4 weeks initial development ($20k-40k)

- Infrastructure costs: $200-800 monthly

- Ongoing maintenance: 10-20 hours monthly ($15k-30k annually)

- Total: roughly $17k-40k annually, plus $20k-40k of initial development

Data Center Backup Cost Breakdown (500 users)

Enterprise backup software integration:

- Veeam/Commvault licensing: $15k-50k annually

- Storage infrastructure: $30k-100k hardware plus ongoing storage costs

- Implementation services: $25k-75k professional services

- Total: $70k-225k in the first year with hardware and implementation included; after that, the $15k-50k licensing plus ongoing storage costs

Open source backup solutions:

- Software costs: $0 (but support contracts recommended)

- Infrastructure and storage: $40k-120k annually

- Staff time: 0.5-1.0 FTE dedicated backup administrator ($75k-150k annually)

- Total: $115k-270k annually including staff costs

The "free" solutions aren't free when you factor in operational overhead and business risk.

Hidden Costs Nobody Budgets For

Restore testing and validation:

- Monthly testing procedures: 8-16 hours ($2k-4k annually)

- Quarterly disaster recovery drills: 40-80 hours ($8k-16k annually)

- Annual testing overhead: $10k-20k

Compliance and audit preparation:

- Legal review of backup policies and procedures: $5k-15k

- Compliance reporting and documentation: $10k-25k annually

- External audit support and remediation: $15k-40k annually

- Annual compliance overhead: $30k-80k

Disaster recovery execution costs:

- Emergency support and consulting: $5k-25k per incident

- Business disruption and productivity loss: $50k-500k depending on outage duration

- Communication and customer impact management: $10k-50k per incident

- Potential disaster costs: $65k-575k per incident

The goal of backup investment is to avoid these disaster costs, but most organizations under-invest until they experience real data loss.
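If you want to sanity-check those subtotals or plug in your own numbers, the sums are easy to script. The figures below are copied from the lists above; the dictionary labels and the `total` helper are just names for this Python sketch.

```python
# (low, high) figures in USD, copied from the lists above.
TESTING = {"monthly restore tests": (2_000, 4_000), "quarterly DR drills": (8_000, 16_000)}
COMPLIANCE = {"legal review": (5_000, 15_000), "reporting": (10_000, 25_000),
              "audit support": (15_000, 40_000)}
INCIDENT = {"emergency consulting": (5_000, 25_000), "business disruption": (50_000, 500_000),
            "communication": (10_000, 50_000)}


def total(items: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the low and high ends of every line item."""
    return (sum(low for low, _ in items.values()),
            sum(high for _, high in items.values()))


for label, items in [("testing", TESTING), ("compliance", COMPLIANCE), ("one incident", INCIDENT)]:
    low, high = total(items)
    print(f"{label}: ${low:,} - ${high:,}")
# testing: $10,000 - $20,000
# compliance: $30,000 - $80,000
# one incident: $65,000 - $575,000
```

Even at the low end, a single incident costs more than a year of testing and compliance overhead combined.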

Cloud vs. Data Center: Strategic Backup Considerations

The fundamental architecture differences between Cloud and Data Center create different backup strategies and trade-offs.

Cloud Backup Strategic Advantages

Simplified infrastructure management:

- No database administration or storage management

- Automatic scaling during backup operations

- Reduced technical complexity for small IT teams

Integrated disaster recovery:

- Atlassian handles infrastructure failover and recovery

- Multi-region deployment options for geographic disaster recovery

- Automatic software updates and security patches

Cloud Backup Strategic Disadvantages

Limited control and customization:

- Backup scheduling and retention controlled by Atlassian or third-party solutions

- No access to underlying database or file system for custom backup strategies

- Marketplace app dependency for advanced backup features

Vendor lock-in and compliance risk:

- Backup data format may not be portable to other platforms

- Compliance and regulatory requirements may not be fully addressed

- Third-party backup vendors create additional vendor relationships

Data Center Backup Strategic Advantages

Complete control and customization:

- Custom backup schedules, retention policies, and storage locations

- Integration with existing enterprise backup and disaster recovery infrastructure

- Granular control over compliance and regulatory requirements

Platform independence:

- Backup data format supports migration to other platforms or vendors

- No dependency on marketplace vendors or third-party backup solutions

- Custom disaster recovery procedures and business continuity planning

Data Center Backup Strategic Disadvantages

Operational complexity and overhead:

- Database administration, storage management, and backup infrastructure

- Staff expertise requirements for backup software and disaster recovery procedures

- Hardware and software maintenance responsibilities

Higher total cost of ownership:

- Infrastructure, software licensing, and staff costs often exceed Cloud alternatives

- Disaster recovery site setup and maintenance costs

- Ongoing software updates and security management

The strategic choice depends on organizational risk tolerance, compliance requirements, and IT operations capabilities. Most organizations underestimate the operational overhead of Data Center backup or the limitations of Cloud backup options.

Advanced Backup Strategies for Enterprise Scale

Large-scale Confluence deployments require sophisticated backup approaches that go beyond basic daily backups and retention policies.

Cross-Instance Backup and Migration

Multi-instance backup coordination:

- Synchronized backups across development, staging, and production instances

- Content migration between instances for testing and disaster recovery

- Configuration and user data synchronization across environments

Content archival strategies:

- Automated identification and archival of unused spaces and content

- Long-term storage of archived content with retrieval capabilities

- Compliance-driven retention and disposal of archived data

Integration with Enterprise Backup Ecosystems

Centralized backup monitoring and reporting:

- Integration with enterprise backup dashboards and alerting systems

- Unified reporting across all enterprise applications and data sources

- Automated compliance reporting and audit trail generation

Policy-based backup management:

- Different backup frequencies and retention periods based on content classification

- Automatic backup scheduling based on content change frequency and business impact

- Storage tier management with automatic migration to lower-cost storage
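Storage tiering in particular boils down to a lookup on backup age. A minimal Python sketch, where the tier names and the 30-day and 365-day thresholds are assumptions to replace with your own policy:

```python
# Hypothetical tiers: (maximum backup age in days, tier name), checked in order.
TIERS = [(30, "hot"), (365, "cool"), (None, "archive")]


def storage_tier(age_days: int) -> str:
    """Pick the first tier whose age limit covers the backup; None means no limit."""
    for max_age, tier in TIERS:
        if max_age is None or age_days <= max_age:
            return tier
    raise ValueError("TIERS must end with an unlimited tier")


for age in (7, 90, 800):
    print(age, storage_tier(age))
# 7 hot
# 90 cool
# 800 archive
```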

Global Deployment Backup Strategies

Multi-region backup replication:

- Cross-region backup replication for geographic disaster recovery

- Data residency compliance with region-specific backup storage

- Network optimization for efficient backup data transfer

Follow-the-sun backup operations:

- Backup scheduling optimized for global user activity patterns

- Regional backup validation and restore testing procedures

- Time zone coordination for disaster recovery and business continuity
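Picking a backup window for a global user base is basically "find the quietest stretch of the day". Here's a Python sketch that does that over 24 hourly activity counts. The counts are invented, and in practice you'd pull them from your own access logs or analytics.

```python
def quietest_window(hourly_activity: list[int], window_hours: int) -> int:
    """Return the UTC start hour of the contiguous window with the least activity.

    `hourly_activity` holds 24 counts (index = UTC hour); the window may wrap past midnight.
    """
    if len(hourly_activity) != 24 or not 1 <= window_hours <= 24:
        raise ValueError("need 24 hourly counts and a window of 1-24 hours")

    def load(start: int) -> int:
        return sum(hourly_activity[(start + i) % 24] for i in range(window_hours))

    return min(range(24), key=load)


# Hypothetical page-edit counts per UTC hour for a team spread across three regions.
activity = [40, 35, 30, 50, 80, 90, 95, 90, 85, 80, 70, 60,
            55, 60, 75, 85, 90, 80, 60, 30, 10, 5, 8, 20]
print(quietest_window(activity, window_hours=3))  # 20
```

If the quietest window is still shorter than your backup takes to run, that's a sizing problem no schedule will fix.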

The complexity of enterprise backup strategies requires dedicated expertise and significant investment in automation and monitoring tools.

Bottom line: Confluence backup is way more complicated than Atlassian wants you to think. Plan accordingly or plan to be fucked when shit hits the fan.