Every data center engineer's nightmare came true in Daejeon, South Korea, yesterday. What started as a routine Friday evening turned into an all-night lithium battery disaster that took hundreds of government online services down with it.

The National Information Resources Service facility caught fire around 8:15 PM on September 26th. Not a little server rack fire that you can handle with halon - we're talking about hundreds of lithium-ion battery packs turning into an inferno that overwhelmed the building's fire suppression systems like they were made of paper.

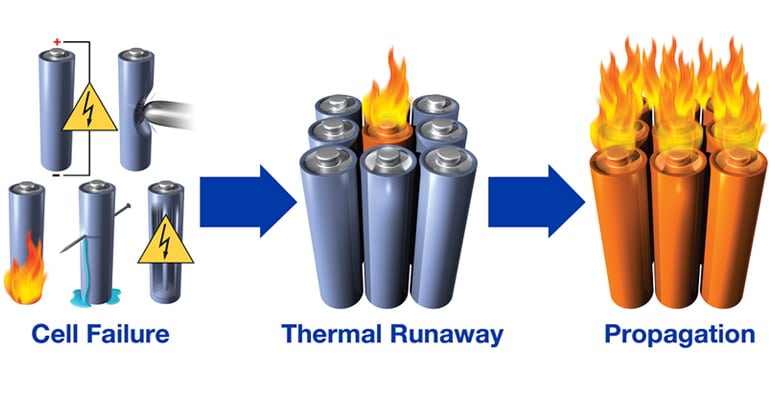

Here's what actually happened: Those fancy UPS batteries that are supposed to keep your systems running during power outages? They became the biggest single point of failure imaginable. When lithium batteries catch fire, you can't just spray water on them and call it done - cells in thermal runaway can burn at around 2000°F, can release oxygen as the cathode breaks down, and can reignite hours after you think you've put them out.

The Technical Clusterfuck

Hundreds of government IT systems went offline instantly. Not "degraded performance" or "some services temporarily unavailable" - completely dead. We're talking about:

- All postal services (good luck getting mail)

- Tax systems during end-of-quarter filing season

- Immigration services (border control went manual)

- Social security payments (people couldn't access their benefits)

- Government employee portals (nobody could log into work systems)

The fire department needed nearly a full day to put it out completely. If you've ever had to explain to management why the email server has been down for two hours, imagine explaining why government services have been dark for almost 24 hours.

What Went Wrong (Besides Everything)

This wasn't some freak accident - it's the kind of disaster you get when you centralize everything in one location without proper redundancy planning. The NIRS facility in Daejeon hosts critical systems for a country of about 52 million people, and packing that many of them into one building breaks the first rule of disaster recovery: never let a single site take everything down with it.
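Checking whether your own shop has the same problem is boring and short. Here's a minimal sketch, assuming you keep a service inventory that maps each service to the sites it runs in. The service names and site labels are made up for illustration; they are not the real NIRS inventory.

```python
from typing import Dict, List, Set


def single_site_services(inventory: Dict[str, Set[str]], min_sites: int = 2) -> List[str]:
    """Return the services deployed in fewer than `min_sites` distinct sites."""
    return sorted(name for name, sites in inventory.items() if len(sites) < min_sites)


# Hypothetical inventory: service name -> set of sites where it runs.
inventory = {
    "postal-tracking": {"site-a"},
    "tax-filing": {"site-a"},
    "public-website": {"site-a", "site-b"},
}

at_risk = single_site_services(inventory)
print(at_risk)  # ['postal-tracking', 'tax-filing']
```

Anything that shows up in that list dies with its building. A second site only counts if it can actually take the load, so treat this as a first-pass audit, not proof of redundancy.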

The battery fire started in the air conditioning system area on the 5th floor. Once it spread to the UPS room, game over. Those hundreds of battery packs weren't just providing backup power - they were sitting next to all the primary cooling infrastructure. When the cooling died, the servers started overheating even faster than the fire could reach them.

Here's the real kicker: The fire suppression system was designed for electrical fires, not lithium battery thermal runaway. Halon-style gas systems work great when a server catches fire, because they knock down the flame, but a cell in thermal runaway keeps generating its own heat internally, so it can keep going after the room has been flooded with agent. The packs also release hydrogen fluoride gas (which is toxic as hell) and burn hot enough to weaken structural steel.

Recovery Reality Check

As of this morning, services are "gradually resuming" - which in government speak means "we're frantically trying to bring systems online one by one and hoping nothing else breaks."

The real recovery time for something like this? Plan on weeks, not days. Even if the hardware survived (spoiler: most of it didn't), you're looking at:

- Hardware replacement and configuration

- Data restoration from backups (assuming they work)

- Network reconfiguration and testing

- Security audits for every system

- Staff working 16-hour days trying to fix everything at once
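That "assuming they work" on the backup line is doing a lot of lifting. A minimal sketch of the cheapest possible check, assuming you recorded a SHA-256 hash for each backup file when it was written. The manifest format and the `failed_backups` helper are hypothetical, not anything NIRS is known to use.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def failed_backups(manifest: Dict[str, str], backup_dir: Path) -> List[str]:
    """Return names of backups that are missing or whose hash does not match.

    `manifest` maps a file name to the SHA-256 hex digest recorded at backup time.
    """
    bad = []
    for name, expected in manifest.items():
        path = backup_dir / name
        if not path.is_file() or sha256_of(path) != expected:
            bad.append(name)
    return sorted(bad)
```

A matching hash only tells you the file is intact, not that it restores into a working system. The only real test is an actual restore drill, run before the building is on fire.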

This is what happens when you treat infrastructure like an afterthought until it's on fire.