Apple's iOS 26 update finally adds Apple Intelligence features that aren't complete dogshit. After two years of Siri pretending to be smart and text suggestions that actively made my writing worse, these features might actually be worth the hype.

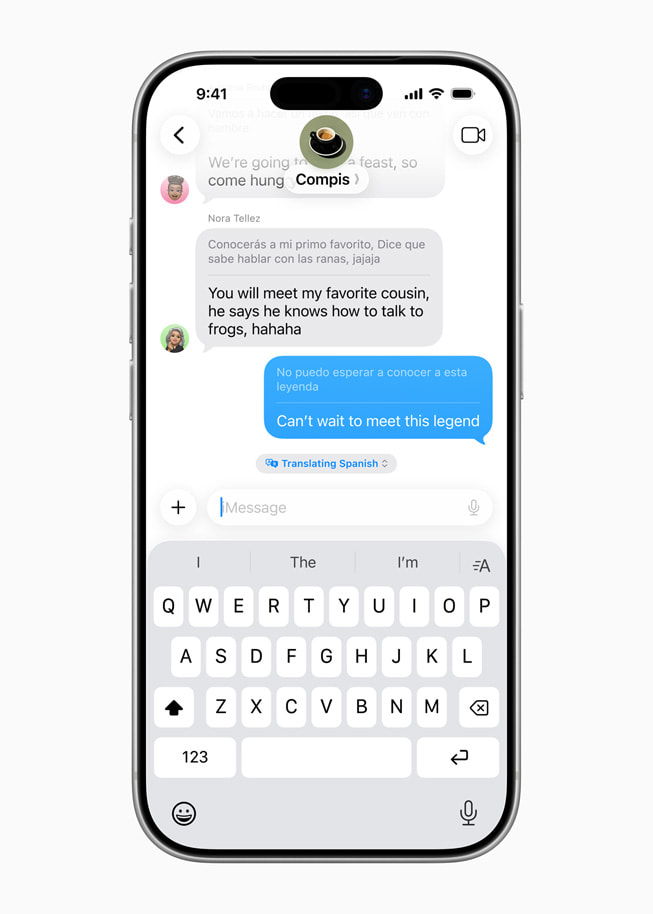

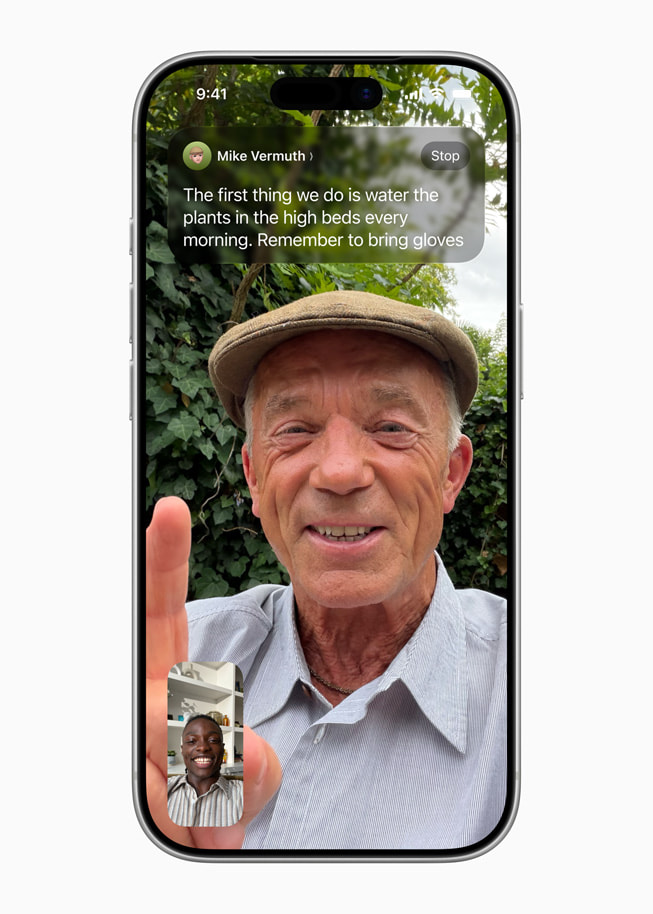

The big one is Live Translation in Messages, FaceTime, and phone calls. Apple claims it works "seamlessly" - we'll see. Google Translate still mangles half my attempts at Spanish, so Apple's version had better be a lot smarter than "real-time" translation usually is. The FaceTime captions sound useful if they actually work reliably, which is a big if.

The AirPods Pro 3 real-time translation trick sounds cool until you try using it in a noisy restaurant or with someone who talks fast. I've been burned by too many "revolutionary" voice features that work perfectly in Apple's demo videos but shit the bed in real situations.

Visual Intelligence Gets Practical (Maybe)

Visual Intelligence is basically reverse image search with Apple polish. Screenshot something, highlight an object, and it searches Google, eBay, Poshmark, and Etsy for similar items. The "see a jacket in an Instagram story and find where to buy it" example sounds great until you realize most influencers are wearing custom shit that costs more than your rent.

The event extraction from flyers could be useful if it actually works. OCR has been hit-or-miss for years, so we'll see if Apple's version can handle shitty concert posters and handwritten food truck schedules. The ChatGPT integration is just another way to avoid opening the ChatGPT app, which is fine, I guess.

Workout Buddy: Because We Need AI to Tell Us We're Out of Shape

Workout Buddy is Apple's AI personal trainer that knows all your fitness data. It analyzes your workout history, heart rate, and pace to give you "personalized motivation" during exercise. The AI supposedly matches the energy of Fitness+ trainers, which sounds like it'll be either motivating or incredibly annoying depending on your tolerance for enthusiastic AI cheerleading.

The idea is that it knows when you're having a shit run compared to your usual pace and adjusts its encouragement accordingly. Could be useful, could be another feature that works great for the first week before you get tired of your watch telling you to push harder when you're already dying.

Third-Party Apps Get AI Powers (Finally)

Apple opened up their on-device LLM to third-party developers through the Foundation Models framework, so they can build AI features without sending your data to the cloud or paying OpenAI API fees. The early apps are exactly what you'd expect:

- Streaks suggests to-do tasks (probably better than Siri's suggestions, but that's a low bar)

- CARROT Weather added conversational features in its usual sarcastic style

- Detail: AI Video Editor generates teleprompter scripts from outlines

The offline processing is genuinely useful - no internet required, no data leaving your device, no usage limits. If more developers actually use this instead of defaulting to ChatGPT APIs, it could be a real advantage for Apple's ecosystem.

Shortcuts Gets Smarter (For People Who Actually Use Shortcuts)

AI-powered Shortcuts let you build automations with Apple Intelligence: compare meeting transcriptions to your notes, summarize documents, or extract data from PDFs and dump it into spreadsheets. The usual workflow automation stuff, but with AI doing the heavy lifting.

This is genuinely useful if you're already deep into Shortcuts. You could automate pulling action items out of meeting recordings or sorting documents by content. But let's be real - most people still don't use Shortcuts beyond basic stuff like turning on Do Not Disturb. This is power user territory.

Privacy Claims Put to the Test

Apple keeps pushing their privacy angle - most stuff runs on your device, complex requests go to Private Cloud Compute, they pinky swear not to store your data. They even let security researchers inspect their server code, which is more transparent than most AI companies.

Whether you trust Apple's privacy claims is up to you, but at least they're making the effort instead of just saying "trust us" like everyone else.

Language Support: Good Luck If You Speak Anything Else

Apple Intelligence in iOS 26 supports nine languages at launch: English, French, German, Italian, Portuguese (Brazil), Spanish, Chinese (Simplified), Japanese, and Korean. More are coming "soon," including Danish, Dutch, Norwegian, and others.

If you speak anything outside the big languages, you're still waiting. Apple's definition of "global" AI is pretty narrow.

The Little Stuff That Might Actually Matter

Some smaller improvements that could be useful:

- Reminders pulls tasks from emails and notes automatically

- Wallet summarizes all your order tracking in one place

- Messages suggests polls and generates chat backgrounds

Not sexy features, but the kind of thing that saves you 30 seconds here and there.

The Reality Check

iOS 26 is the first Apple Intelligence release that doesn't feel like a tech demo. Live Translation could be genuinely useful if it works as advertised. Visual Intelligence is reverse image search with better UX. Shortcuts automation is powerful if you're into that.

But Apple's been promising "revolutionary" AI features for two years. These are solid improvements, not world-changing breakthroughs. Whether that's enough to justify upgrading depends on how much you trust Apple's promises versus their actual track record with AI features.