Million qubits by 2030. IBM and Google are back with more quantum promises. I remember when practical quantum computing was coming in 2020. Then 2025. Now 2030.

Working with quantum systems for the past eight years taught me this: every roadmap assumes the hard problems will magically solve themselves. Error rates still suck. Coherence times are shit. These machines need temperatures colder than deep space and die if you look at them wrong.

But hey, maybe this time is different.

IBM's Quantum Loon: New Name, Same Problems

IBM's betting everything on their Quantum Loon processor. The marketing calls it "enhanced connectivity architecture designed for high-rate quantum Low-Density Parity-Check codes." That's enterprise speak for "we're still trying to fix the error rate problem."

I've debugged quantum circuits on IBM's hardware. Their Heron processors are decent - when they work. Error rates hover around 0.1% for single-qubit gates, which sounds good until you realize a useful quantum algorithm needs thousands of operations. Do the math: at 0.1% error per gate, 10,000 operations means about ten expected errors, and the odds of a clean run are 0.999^10,000, roughly 1 in 22,000. Your computation is garbage.
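To put numbers on that, here's a minimal sketch of how per-gate errors compound. It assumes every gate fails independently with the same probability, which is a simplification (real hardware has correlated errors, readout errors, and crosstalk), and the function names are mine, not anything from IBM's tooling.

```python
def expected_errors(gate_error: float, num_gates: int) -> float:
    """Average number of faulty gates in a circuit of num_gates operations."""
    return gate_error * num_gates


def clean_run_probability(gate_error: float, num_gates: int) -> float:
    """Chance that no gate fails, assuming independent, identical errors."""
    return (1.0 - gate_error) ** num_gates


# Assumed figure from the paragraph above: 0.1% error per gate.
GATE_ERROR = 0.001

for num_gates in (100, 1_000, 10_000):
    errors = expected_errors(GATE_ERROR, num_gates)
    p_clean = clean_run_probability(GATE_ERROR, num_gates)
    print(f"{num_gates:>6} gates: ~{errors:.1f} expected errors, "
          f"P(no error) = {p_clean:.2e}")
```

At 100 gates you mostly get away with it. At 10,000 you almost never do, which is the whole argument for error correction.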

The 16,632-qubit system they're building? Those aren't logical qubits that do useful work. They're physical qubits, and you need hundreds of physical qubits to make one error-corrected logical qubit that doesn't immediately shit the bed.

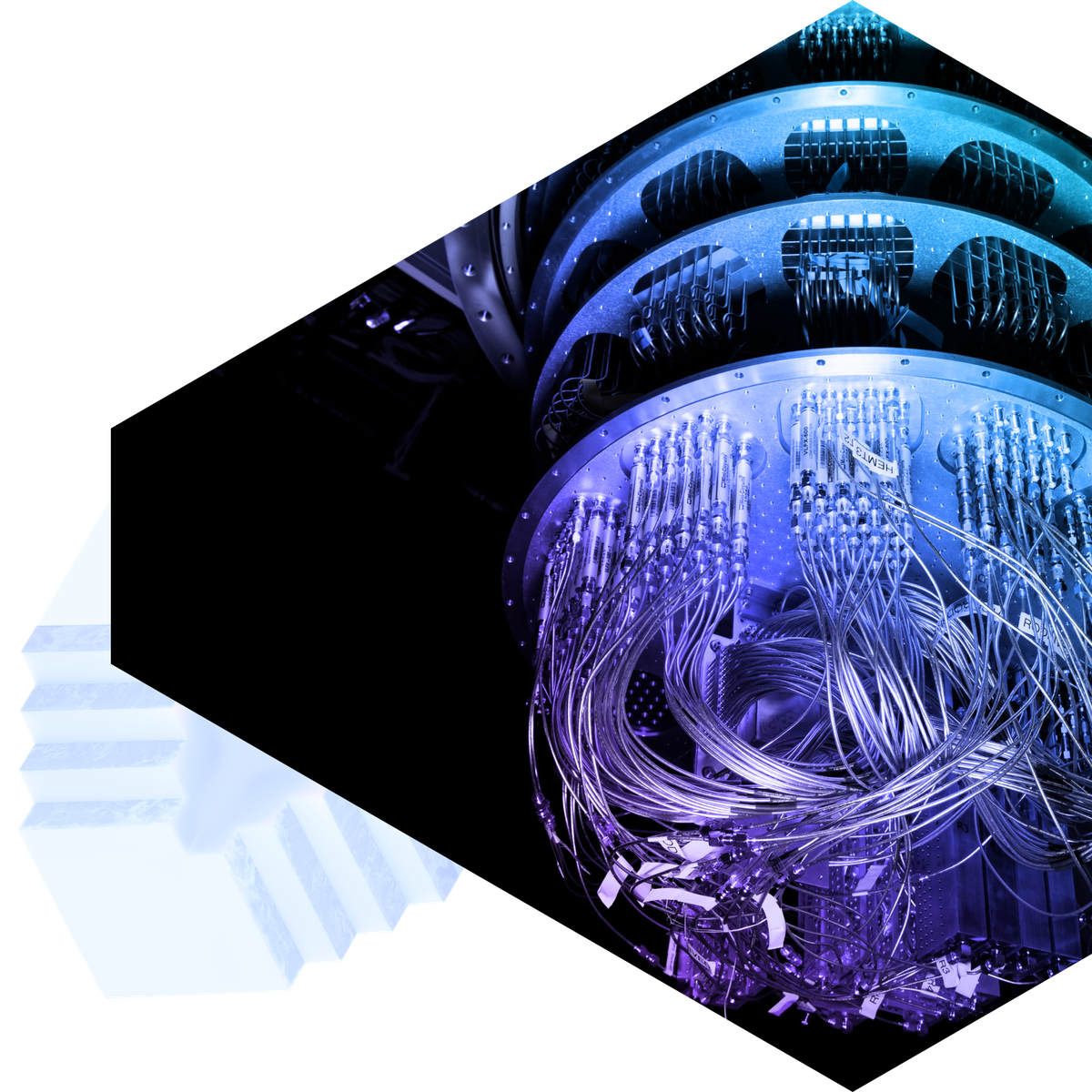

IBM's quantum data center sounds impressive. I toured their Poughkeepsie facility last year. Dilution refrigerators everywhere, each one costing more than my house. The engineers are brilliant, but they're fighting physics with bigger refrigerators.

Google's Surface Code Gambit

Google's taking a different approach with surface codes. Their Sycamore processor hit 70 qubits and demonstrated quantum supremacy on a problem nobody cares about. Useful quantum advantage? Still waiting.

Surface codes need thousands of physical qubits to make one good logical qubit. Google's roadmap shows them scaling to millions of qubits by 2030, but they're still stuck at dozens. That's not an engineering gap - that's a physics canyon.
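Where does "thousands" come from? Here's a rough sketch using the textbook rule of thumb for surface codes: the logical error rate falls off as roughly `prefactor * (p / p_th) ** ((d + 1) / 2)` for code distance `d`, and a rotated surface code patch uses `2 * d**2 - 1` physical qubits. The threshold of 1% and prefactor of 0.1 are commonly quoted ballpark values that I'm assuming here. They are not Google's measured numbers, and the target logical error rate is an illustrative choice.

```python
def logical_error_rate(p: float, d: int, p_th: float = 1e-2,
                       prefactor: float = 0.1) -> float:
    """Heuristic logical error rate per round for a distance-d surface code."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def physical_qubits_per_logical(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def min_distance(p: float, target: float, p_th: float = 1e-2,
                 prefactor: float = 0.1) -> int:
    """Smallest odd distance whose heuristic logical error rate meets target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    d = 3
    while logical_error_rate(p, d, p_th, prefactor) > target:
        d += 2  # surface code distances are conventionally odd
    return d


# Assumed target: one logical error per 10^12 operations.
TARGET = 1e-12

for p in (5e-3, 2e-3, 5e-4):
    d = min_distance(p, TARGET)
    print(f"physical error {p:.0e}: distance {d}, "
          f"{physical_qubits_per_logical(d)} physical qubits per logical qubit")
```

The takeaway is how sensitive the overhead is to the physical error rate: sit close to threshold and one logical qubit costs thousands of physical ones, get well below it and the bill drops to hundreds.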

I've talked to Google quantum engineers. They're optimistic because they have to be. But privately? They know the error correction overhead is brutal. Every breakthrough paper shows beautiful theoretical results that fall apart when implemented on real hardware. Quantinuum's recent claims about crossing error correction thresholds sound great until you see they tested it on toy problems.

The Money Keeps Flowing

McKinsey counts 191 quantum patents from IBM and 168 from Google in 2024. Patents don't solve decoherence, but they look good to investors.

Government funding hit $1.2 billion for quantum computing in the infrastructure bill. That money mostly goes to the same universities publishing papers about how quantum computing will revolutionize everything, someday, maybe.

I've watched startups burn through venture funding building quantum software for computers that don't exist yet. The whole ecosystem runs on hope and DARPA grants. Current quantum computing trends show more investment chasing the same unsolved problems.

What Actually Works Today

Here's what quantum computers can do right now: solve optimization problems that you could solve faster on a GPU cluster. They're good at quantum simulations of small molecules, assuming you don't need precise answers.

Financial firms keep funding quantum research for portfolio optimization. I've seen these prototypes. Classical algorithms still win on real trading problems.

Drug discovery companies love quantum hype. Molecular simulation sounds perfect for quantum computers until you realize most interesting molecules have too many atoms for current systems to handle. Current quantum drug discovery applications are limited to proof-of-concept studies that don't capture real-world drug development complexity.

The useful quantum algorithms need fault-tolerant quantum computers. We don't have those. We might not have them in 2030.

China Changes Everything

China's pouring $15 billion into quantum research. That's not venture capital - that's strategic competition. When the Pentagon starts talking about "quantum security gaps," politicians write bigger checks.

The cryptography angle is real. Shor's algorithm would break RSA encryption if you could run it on a fault-tolerant quantum computer. The NSA already mandates post-quantum cryptography for classified systems.

The 2030 Reality Check

Million-qubit quantum computers by 2030? Maybe. Useful million-qubit quantum computers? Different question entirely.

The hard part isn't building more qubits. It's making them stay quantum long enough to finish a calculation. Current coherence times measure in milliseconds. Complex algorithms need minutes or hours.
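A back-of-the-envelope sketch of that gap, using numbers I'm assuming for illustration: a 1 ms coherence time, a 50 ns gate, and an algorithm that needs ten billion sequential gates. None of these are measurements from a specific machine. Times are kept as integer nanoseconds to avoid floating-point rounding.

```python
def gates_within_coherence(coherence_ns: int, gate_ns: int) -> int:
    """How many sequential gates fit before the qubit decoheres."""
    return coherence_ns // gate_ns


def runtime_seconds(num_gates: int, gate_ns: int) -> float:
    """Wall-clock time to run num_gates sequential gates."""
    return num_gates * gate_ns / 1e9


# Illustrative assumptions, not hardware specs.
COHERENCE_NS = 1_000_000      # 1 millisecond
GATE_NS = 50                  # 50 nanoseconds per gate
ALGORITHM_GATES = 10**10      # sequential gate count for a "useful" algorithm

budget = gates_within_coherence(COHERENCE_NS, GATE_NS)
runtime = runtime_seconds(ALGORITHM_GATES, GATE_NS)
shortfall = ALGORITHM_GATES // budget

print(f"gate budget per coherence window: {budget}")
print(f"algorithm runtime: {runtime:.0f} s")
print(f"algorithm needs {shortfall}x more gates than the window allows")
```

Under these assumptions the qubit has to outlive its natural coherence time by a factor of hundreds of thousands. That gap is what error correction is supposed to cover.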

Every quantum roadmap assumes exponential improvement in error rates, coherence times, and connectivity. That's not how physics works. Error correction scales horribly - you need more error correction qubits to fix the errors in your error correction qubits. Even Oxford's record-breaking gate error rate of around 10⁻⁷ doesn't solve the scaling problem.

I want quantum computing to work. I've spent my career on this stuff. But 2030 is five years away, and we're still fighting the same fundamental problems we had in 2020. Around 100-200 quantum computers exist worldwide, but none solve useful problems better than classical computers. Million qubits won't help if they're all broken.