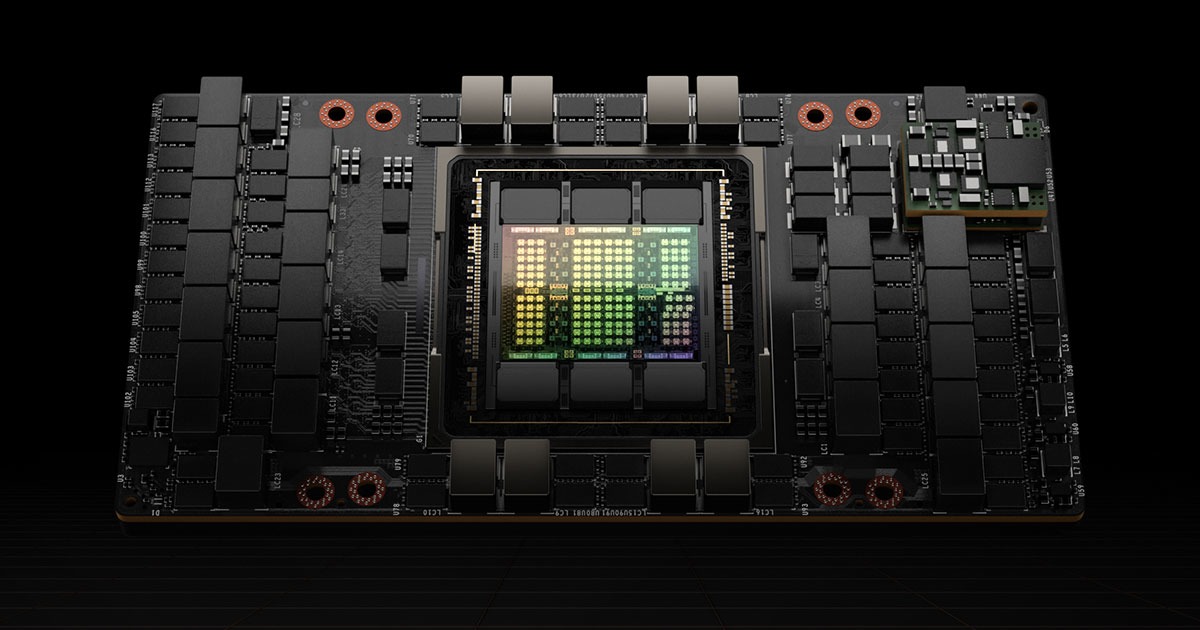

So xAI built a huge data center in Memphis. According to the announcement, they've got hundreds of thousands of NVIDIA H100 GPUs networked together. NVIDIA confirmed this is their largest Ethernet-based supercomputer deployment to date. Sounds impressive until you realize that's basically what every major AI company is doing: throwing money at NVIDIA and hoping scale solves their problems.

The Reality of Hundreds of Thousands of GPUs

Look, putting hundreds of thousands of H100s in one location is genuinely nuts from an infrastructure perspective. Each H100 draws about 700 watts under load, so 200,000 to 400,000 of them works out to roughly 140-280 megawatts for the GPUs alone, before you add CPUs, networking gear, and cooling. That's enough power for a small city, and Memphis isn't exactly known for having unlimited electricity. The Tennessee Valley Authority is probably scrambling to upgrade its grid infrastructure.
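The power math is simple enough to sketch. This is a back-of-envelope calculation, not a facility model: it uses the ~700 W per-GPU figure from the text, and the PUE (power usage effectiveness) multiplier of 1.3, which folds in cooling and power-conversion overhead, is a hypothetical example value rather than anything xAI has published.

```python
# Back-of-envelope power budget for a large H100 cluster.
# Assumptions: ~700 W per GPU under load (from the text); PUE of 1.3 is hypothetical.

H100_WATTS = 700.0  # approximate per-GPU draw under load


def gpu_power_mw(num_gpus: int, watts_per_gpu: float = H100_WATTS) -> float:
    """GPU-only power draw in megawatts (ignores CPUs, NICs, switches)."""
    return num_gpus * watts_per_gpu / 1_000_000


def facility_power_mw(it_load_mw: float, pue: float = 1.3) -> float:
    """Total facility draw: IT load scaled by power usage effectiveness."""
    return it_load_mw * pue


if __name__ == "__main__":
    for n in (100_000, 200_000, 400_000):
        gpu_mw = gpu_power_mw(n)
        total = facility_power_mw(gpu_mw)
        print(f"{n:>7,} GPUs: {gpu_mw:6.1f} MW GPU-only, ~{total:6.1f} MW at PUE 1.3")
```

Treating GPU draw as the whole IT load undercounts, since host CPUs, memory, and network switches draw power too, so the real facility figure would land higher still.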

The networking alone is a nightmare. You need crazy fast interconnects to keep all these GPUs talking to each other without bottlenecking. Since NVIDIA bills this as an Ethernet deployment, the fabric between servers is its Spectrum-X Ethernet platform rather than InfiniBand, with NVLink handling GPU-to-GPU traffic inside each server, and switches at that tier cost more than most people's houses. Supermicro built the rack infrastructure with liquid cooling systems that pump thousands of gallons per minute. One bad cable and you've got a $50 million paperweight.
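To see why interconnect bandwidth matters so much, here is the textbook alpha-beta cost estimate for a ring all-reduce, one of the collective patterns used to sum gradients across GPUs. It's a simplified sketch: the link speed, per-step latency, and gradient size in the example are hypothetical, it assumes plain data parallelism, and real collective libraries also use tree and hierarchical algorithms that this model ignores.

```python
# Alpha-beta cost model for a ring all-reduce (a sketch, not a benchmark).
# Hypothetical inputs: link bandwidth, per-step latency, gradient buffer size.


def ring_allreduce_seconds(
    num_ranks: int,
    buffer_bytes: float,
    link_bytes_per_s: float,
    per_step_latency_s: float = 0.0,
) -> float:
    """Estimate wall-clock time for one ring all-reduce.

    A ring all-reduce takes 2*(N-1) steps (reduce-scatter, then all-gather),
    and each rank sends 2*(N-1)/N of the buffer in total.
    """
    if num_ranks < 2:
        return 0.0  # nothing to exchange with a single rank
    steps = 2 * (num_ranks - 1)
    bytes_sent_per_rank = 2 * (num_ranks - 1) / num_ranks * buffer_bytes
    return steps * per_step_latency_s + bytes_sent_per_rank / link_bytes_per_s


if __name__ == "__main__":
    grad_bytes = 140e9  # hypothetical: 70B parameters at 2 bytes each (bf16)
    link = 50e9         # hypothetical: a 400 Gb/s link, i.e. 50 GB/s
    latency = 5e-6      # hypothetical: 5 microseconds per ring step
    for n in (8, 1_024, 100_000):
        t = ring_allreduce_seconds(n, grad_bytes, link, latency)
        print(f"{n:>7,} ranks: ~{t:.2f} s per all-reduce")
```

The bandwidth term levels off near 2S/B as N grows, so adding GPUs never makes each all-reduce cheaper, while the latency term grows linearly with N. That's why a flat ring alone doesn't scale to a cluster this size.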

From Grok to... What Exactly?

xAI's Grok chatbot is fine, I guess. It's basically ChatGPT with fewer guardrails and access to Twitter data. But Musk keeps talking about "understanding the universe" and "revealing deepest secrets" like this GPU cluster is going to solve physics.

Here's the thing: throwing more compute at transformer models doesn't magically make them understand quantum mechanics or discover new laws of physics. You need actual breakthroughs in model architecture, training methods, and data quality. More GPUs just means you can train bigger models faster; it doesn't mean they'll be smarter.
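The "bigger models faster" point shows up directly in the usual back-of-envelope estimate for training cost. In this sketch, the ~6 FLOPs per parameter per token rule is a widely used approximation for dense transformers, ~989 TFLOPS is roughly NVIDIA's dense BF16 spec-sheet peak for the H100 SXM, and the 40% utilization, model size, and token count are hypothetical.

```python
# Rough training-time estimate using the common ~6 * params * tokens FLOP rule.
# Hypothetical: model size, token count, and 40% model FLOPs utilization (MFU).

SECONDS_PER_DAY = 86_400
H100_BF16_DENSE_FLOPS = 989e12  # approx. spec-sheet peak, H100 SXM, no sparsity


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute for a dense transformer."""
    return 6.0 * params * tokens


def training_days(
    params: float,
    tokens: float,
    num_gpus: int,
    peak_flops_per_gpu: float = H100_BF16_DENSE_FLOPS,
    mfu: float = 0.4,
) -> float:
    """Wall-clock days if the cluster sustains `mfu` of its peak throughput."""
    sustained = num_gpus * peak_flops_per_gpu * mfu
    return training_flops(params, tokens) / sustained / SECONDS_PER_DAY


if __name__ == "__main__":
    params, tokens = 400e9, 15e12  # hypothetical model and dataset size
    for gpus in (10_000, 100_000):
        print(f"{gpus:>7,} GPUs: ~{training_days(params, tokens, gpus):.1f} days")
```

Ten times the GPUs cuts wall-clock time tenfold for the same model and data (and holding utilization constant at that scale is itself optimistic). Nothing in the formula says anything about whether the resulting model is any better at physics.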

Infrastructure Challenges Nobody Talks About

The Memphis facility is going to have some serious operational challenges:

Power: The Tennessee Valley Authority is probably freaking out about the grid impact. Data centers this size need dedicated substations and backup generators that cost millions.

Cooling: Memphis summers are brutal. You're looking at massive HVAC systems and probably chilled water loops, and the cooling plant is a major capital expense in its own right.
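How much water do chilled water loops imply? Essentially all the electricity the IT gear draws ends up as heat, and the flow needed to carry it away follows from Q = m_dot * c_p * delta_T. This sketch assumes a single water loop, a hypothetical 10 °C temperature rise across the load, and water at about 1 kg per liter; real plants use multiple loops, chillers, and cooling towers, so treat the result as order-of-magnitude only.

```python
# Order-of-magnitude chilled-water flow for a given heat load.
# Assumptions: all IT power becomes heat, one water loop, ~1 kg of water per liter.

WATER_CP_J_PER_KG_K = 4186.0        # specific heat of liquid water
LITERS_PER_US_GALLON = 3.785411784


def chilled_water_gpm(heat_load_mw: float, delta_t_c: float = 10.0) -> float:
    """US gallons per minute needed to absorb `heat_load_mw` with a `delta_t_c` rise.

    From Q = m_dot * c_p * delta_T, solved for the mass flow m_dot.
    """
    heat_w = heat_load_mw * 1e6
    kg_per_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_c)
    liters_per_min = kg_per_s * 60.0  # ~1 kg per liter of water
    return liters_per_min / LITERS_PER_US_GALLON


if __name__ == "__main__":
    for mw in (140, 280):  # the GPU-only range quoted earlier in the post
        print(f"{mw} MW -> ~{chilled_water_gpm(mw):,.0f} gal/min at a 10 C rise")
```

At 140 MW that comes to tens of thousands of gallons per minute, and a smaller temperature rise means proportionally more flow.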

Maintenance: When you have hundreds of thousands of GPUs, even very reliable hardware adds up to something breaking every few minutes. You need a small army of technicians and a massive spare parts inventory.
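The constant-breakage problem is simple arithmetic: with independent failures at a constant rate, the cluster's mean time between failures is the per-component MTBF divided by the number of components. The 50,000-hour per-GPU MTBF below is a hypothetical round number, not a measured H100 figure, and a real cluster also has NICs, cables, power supplies, and hosts failing on top of the GPUs.

```python
import math

# Cluster-level failure arithmetic under an exponential (constant-rate) model.
# Hypothetical: the per-GPU MTBF; real failure rates vary by component and age.


def cluster_mtbf_hours(num_components: int, component_mtbf_hours: float) -> float:
    """Mean time between failures anywhere in the cluster."""
    return component_mtbf_hours / num_components


def prob_any_failure(num_components: int, component_mtbf_hours: float, window_hours: float) -> float:
    """Probability that at least one component fails within `window_hours`."""
    rate = num_components / component_mtbf_hours  # cluster-wide failures per hour
    return 1.0 - math.exp(-rate * window_hours)


if __name__ == "__main__":
    mtbf = 50_000.0  # hypothetical per-GPU MTBF in hours
    for n in (1_000, 200_000):
        minutes = cluster_mtbf_hours(n, mtbf) * 60
        p = prob_any_failure(n, mtbf, window_hours=1.0)
        print(f"{n:>7,} GPUs: a failure every ~{minutes:,.0f} min, P(>=1 in an hour) = {p:.2f}")
```

With these assumed numbers, a 200,000-GPU cluster sees a failure roughly every 15 minutes, while a 1,000-GPU cluster goes about two days between failures.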

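Networking: The moment one switch fails, you can have thousands of GPUs sitting idle, because a synchronous training step can't finish until every participating GPU checks in. The redundancy requirements are insane, and NCCL (the NVIDIA Collective Communications Library) has edge cases that only show up at massive scale.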
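For a feel of why one missing participant stalls everything, here's a pure-Python simulation of ring all-reduce, one of the algorithms NCCL implements for summing gradients. It's a teaching sketch, not NCCL's code: ranks are plain Python lists, "sending" is copying a slice, and it assumes the buffer splits evenly into one chunk per rank. Every one of the 2(N-1) steps needs every rank to both send and receive, so a single dead GPU or link leaves the collective unable to finish.

```python
from typing import List, Tuple


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce over len(buffers) ranks arranged in a ring."""
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("every rank must hold a buffer of the same length")
    if length % n != 0:
        raise ValueError("sketch assumes the buffer splits evenly into n chunks")
    size = length // n
    data = [list(b) for b in buffers]  # copy so the caller's lists are untouched

    def chunk(i: int) -> Tuple[int, int]:
        """Slice bounds of chunk i (taken modulo n)."""
        c = i % n
        return c * size, (c + 1) * size

    # Reduce-scatter: at step s, rank r sends chunk (r - s) to rank r + 1,
    # which adds it in. After n - 1 steps, rank r owns the full sum of chunk r + 1.
    for step in range(n - 1):
        # Snapshot all outgoing slices first, since every rank sends at once.
        sends = [(r, *chunk(r - step)) for r in range(n)]
        payloads = [data[r][lo:hi] for r, lo, hi in sends]
        for (r, lo, hi), payload in zip(sends, payloads):
            dst = data[(r + 1) % n]
            for k, v in enumerate(payload):
                dst[lo + k] += v

    # All-gather: at step s, rank r forwards the finished chunk (r + 1 - s)
    # to rank r + 1, which overwrites its partial copy.
    for step in range(n - 1):
        sends = [(r, *chunk(r + 1 - step)) for r in range(n)]
        payloads = [data[r][lo:hi] for r, lo, hi in sends]
        for (r, lo, hi), payload in zip(sends, payloads):
            data[(r + 1) % n][lo:hi] = payload

    return data


if __name__ == "__main__":
    ranks = [[float(10 * r + i) for i in range(6)] for r in range(3)]
    print(ring_allreduce(ranks)[0])  # [30.0, 33.0, 36.0, 39.0, 42.0, 45.0]
```

Splitting the buffer into per-rank chunks is what keeps every link busy at once; the flip side is that the ring has no slack, so a failed participant blocks the whole exchange rather than just its own share.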

Competition or Just Deep Pockets?

Musk positions this as competing with OpenAI and Google, but honestly it's just catching up. OpenAI has been training on massive clusters for years, and Google's TPU farms are purpose-built for this stuff.

The only real advantage xAI has is money and a willingness to burn through it. Tesla stock pays for a lot of H100s, and Musk doesn't have to answer to quarterly earnings the way a public company does. But that doesn't make xAI technically superior, just better funded.

The Real Test: What Comes Next

Building the data center is the easy part. The hard part is training models that actually justify this massive infrastructure investment. So far, we've got Grok, which is decent but not revolutionary.

If xAI can actually produce models that outperform GPT-4 or Claude, then maybe this Memphis facility makes sense. But if they're just building a bigger version of existing models, it's an expensive way to play catch-up in the AI arms race.