Supabase Realtime is an Elixir cluster that syncs data over WebSockets. Built on the Phoenix Framework, it can supposedly handle millions of concurrent connections across regions. It works great in demos and is flaky as hell in production.

Phoenix Channels handle pub/sub using Elixir processes. Messages supposedly take the shortest path between regions, at least when the routing actually behaves.

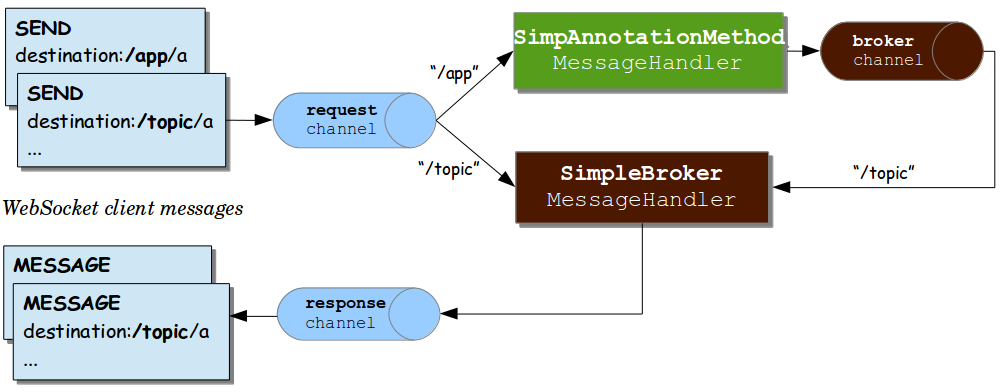

Core Components

Phoenix Channels run the messaging on top of Phoenix.PubSub, which fans messages out across the cluster's nodes. It works fine until it doesn't.

Presence relies on global state sync that supposedly keeps presence data consistent across regions. In reality, users who disconnect uncleanly linger, and your "online users" count becomes meaningless.

The database integration (Postgres Changes) streams changes from PostgreSQL's write-ahead log (WAL) through logical replication slots. When your database gets hammered, replication falls behind and your "real-time" updates turn into "whenever-the-fuck-they-feel-like-it" updates.

Broadcast from Database (2025 Update)

The Broadcast from Database feature sends messages when database changes happen. It creates a partitioned realtime.messages table, and rows written to it are published to subscribers over WebSockets. Built-in authorization through RLS policies on that table actually works.

Messages get purged after 3 days by dropping old partitions, so at least that part doesn't require manual cleanup.
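Dropping a whole partition is far cheaper than deleting rows one by one. One side effect is worth knowing: retention is enforced per partition, not per message. Here is a minimal sketch of that arithmetic. It assumes one partition per UTC day and a 3-day window. The partition layout and `partitionsToDrop` are hypothetical illustrations, not Supabase's actual cleanup job.

```typescript
const DAY_MS = 24 * 60 * 60 * 1000;

/**
 * Given the start times (ms since epoch, UTC midnight) of hypothetical daily
 * partitions, returns those whose entire day has aged past the retention window.
 */
function partitionsToDrop(
  partitionStartsMs: number[],
  nowMs: number,
  retentionDays = 3,
): number[] {
  const cutoffMs = nowMs - retentionDays * DAY_MS;
  // A partition is droppable only when its newest possible row (just before
  // start + 1 day) is older than the cutoff; otherwise it still holds
  // messages that are inside the window.
  return partitionStartsMs.filter((startMs) => startMs + DAY_MS <= cutoffMs);
}
```

Take partitions for January 1 to 5 with the clock at noon on January 5. Only the January 1 partition qualifies. Under this model, a message can outlive the 3-day window by up to a day, plus whatever gap remains until the next cleanup run.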

Fault-tolerant my ass. Here's what actually breaks in production:

When Realtime Breaks (And It Will)

The "fault-tolerant" system still fails in predictable ways. I've debugged all of these at 3am:

Connection Pool Death Spiral: During traffic spikes, connection pools get exhausted and new WebSocket connections start timing out. Your users get the dreaded "connection failed" error with zero useful context. The solution? Restart everything and pray.
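Clients that retry immediately pile straight back onto the exhausted pool and keep the spiral going. The standard client-side mitigation is exponential backoff with jitter. The sketch below is generic TypeScript, not part of supabase-js. `connect` is a placeholder for whatever opens your channel, and the base and cap values are arbitrary assumptions. Check whether your client library already exposes a reconnect-delay hook before rolling your own.

```typescript
/**
 * Full-jitter exponential backoff: the delay is drawn uniformly from
 * [0, min(capMs, baseMs * 2^attempt)), so clients that failed together
 * don't all retry at the same instant.
 */
function backoffDelayMs(
  attempt: number,
  baseMs = 500,
  capMs = 30_000,
  random: () => number = Math.random,
): number {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

/**
 * Retries a connection attempt with jittered backoff.
 * `connect` is a hypothetical stand-in for your channel-opening call.
 */
async function connectWithBackoff(
  connect: () => Promise<void>,
  maxAttempts = 8,
): Promise<void> {
  let lastError: unknown;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      await connect();
      return;
    } catch (err) {
      lastError = err;
      // Don't sleep after the final failure; just give up.
      if (attempt < maxAttempts - 1) await sleep(backoffDelayMs(attempt));
    }
  }
  throw new Error(`connect failed after ${maxAttempts} attempts: ${String(lastError)}`);
}
```

"Full" jitter (a random delay between zero and the ceiling) spreads a burst of failed clients across the whole window. A fixed exponential delay would just move the stampede a few seconds later.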

WAL Replication Lag Hell: Under heavy write load, the replication slot falls further and further behind the WAL. Your "real-time" changes turn into "eventual-time" changes, and users see stale data while thinking everything is live.
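You can't fix replication lag from the client, but you can stop pretending stale data is live. This sketch assumes each change payload carries a commit timestamp. The supabase-js types include a `commit_timestamp` field, but check your client version. The sketch flags updates that arrive late. The thresholds and `classifyLag` are hypothetical, and client clock skew distorts the numbers, so treat the result as a hint rather than a measurement.

```typescript
type Freshness = "live" | "lagging" | "stale";

// Only the field this sketch needs; real change payloads carry more.
interface ChangeLike {
  commit_timestamp: string; // ISO-8601 commit time of the source transaction (assumed format)
}

/** Estimates how late a change arrived and buckets it for the UI. */
function classifyLag(
  change: ChangeLike,
  nowMs: number = Date.now(),
  laggingMs = 2_000,
  staleMs = 10_000,
): { lagMs: number; freshness: Freshness } {
  const committedAt = Date.parse(change.commit_timestamp);
  if (Number.isNaN(committedAt)) {
    throw new Error(`unparseable commit_timestamp: ${change.commit_timestamp}`);
  }
  // Clamped at 0 because a client clock running behind the server can make the difference negative.
  const lagMs = Math.max(0, nowMs - committedAt);
  const freshness: Freshness =
    lagMs >= staleMs ? "stale" : lagMs >= laggingMs ? "lagging" : "live";
  return { lagMs, freshness };
}
```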

Message Ordering Chaos: Broadcast messages don't arrive in order during network congestion. Your collaborative cursor app turns into a seizure-inducing mess as cursors jump randomly around the screen. There's no built-in message sequencing, so you'll need to add timestamps and handle out-of-order delivery yourself.
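Handling out-of-order delivery yourself can be as small as the sketch below. The sender stamps every update, and the receiver discards anything older than what it has already applied for that sender. The payload shape (`senderId`, `seq`, `x`, `y`) and both helpers are hypothetical; nothing here is a Supabase API.

```typescript
interface CursorUpdate {
  senderId: string;
  seq: number; // per-sender counter, incremented on every send (hypothetical field)
  x: number;
  y: number;
}

/** Sender side: stamps each outgoing update with the next sequence number. */
function makeSequencer(senderId: string) {
  let seq = 0;
  return (x: number, y: number): CursorUpdate => ({ senderId, seq: ++seq, x, y });
}

/** Receiver side: latest-wins state that ignores stale or duplicate updates. */
class CursorState {
  private lastSeq = new Map<string, number>();
  readonly positions = new Map<string, { x: number; y: number }>();

  /** Returns true if the update was applied, false if it was out of date. */
  apply(update: CursorUpdate): boolean {
    const prev = this.lastSeq.get(update.senderId);
    if (prev !== undefined && update.seq <= prev) return false;
    this.lastSeq.set(update.senderId, update.seq);
    this.positions.set(update.senderId, { x: update.x, y: update.y });
    return true;
  }
}
```

A per-sender counter is used instead of wall-clock timestamps because it doesn't depend on every client's clock agreeing. Latest-wins suits cursors, where only the current position matters. For chat or document edits, you'd buffer out-of-order messages and release them in sequence instead of dropping them.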

The Phantom Presence Problem: Users who force-quit their browser or lose WiFi stay "online" forever. Ghost users pile up like digital zombies until your user list is meaningless. The CRDT "eventual consistency" sometimes means "never consistent."
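One workaround assumes you control the presence payload. Have each client re-publish a `lastSeen` timestamp on a timer, and treat any entry whose heartbeat is older than a TTL as offline, whatever the server still reports. The field name, TTL, and `liveUsers` helper are hypothetical. The TTL should comfortably exceed the heartbeat interval plus any clock skew between clients.

```typescript
interface PresenceEntry {
  userId: string;
  lastSeen: number; // ms since epoch, refreshed by the client on a timer (hypothetical field)
}

/** Returns the IDs of users whose freshest heartbeat is within ttlMs, sorted. */
function liveUsers(entries: PresenceEntry[], nowMs: number, ttlMs = 30_000): string[] {
  const freshest = new Map<string, number>();
  for (const e of entries) {
    // A user can appear once per open tab or connection; keep their newest heartbeat.
    freshest.set(e.userId, Math.max(freshest.get(e.userId) ?? 0, e.lastSeen));
  }
  return Array.from(freshest)
    .filter(([, last]) => nowMs - last <= ttlMs)
    .map(([id]) => id)
    .sort();
}
```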

Regional Routing Madness: Messages "usually" take the shortest path between regions, except when AWS has connectivity issues and your Singapore users get routed through Virginia for no fucking reason. Latency spikes from 50ms to 500ms and there's nothing you can debug.

The Database Connection Black Hole: When your database goes down, Realtime tries to reconnect from the "nearest available region." In practice, this means 30-60 seconds of complete silence while your users wonder if their internet broke.
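The client can't make that reconnect faster, but it can stop the silence from looking like a dead internet connection. Below is a minimal watchdog sketch. It assumes you call `touch` on every inbound event (message, heartbeat reply, presence sync) and poll `status` from a UI timer. The class, thresholds, and state names are hypothetical.

```typescript
type LinkStatus = "live" | "quiet" | "presumed-down";

/** Tracks the last inbound activity and reports how long the channel has been silent. */
class SilenceWatchdog {
  private lastActivityMs: number;
  private readonly quietMs: number;
  private readonly downMs: number;

  constructor(nowMs: number, quietMs = 5_000, downMs = 15_000) {
    this.lastActivityMs = nowMs;
    this.quietMs = quietMs;
    this.downMs = downMs;
  }

  /** Call on every inbound event; ignores out-of-order (older) times. */
  touch(nowMs: number): void {
    this.lastActivityMs = Math.max(this.lastActivityMs, nowMs);
  }

  /** "quiet" can drive a subtle hint; "presumed-down" a visible reconnecting banner. */
  status(nowMs: number): LinkStatus {
    const silentFor = nowMs - this.lastActivityMs;
    if (silentFor >= this.downMs) return "presumed-down";
    if (silentFor >= this.quietMs) return "quiet";
    return "live";
  }
}
```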
